When testing RKE2 templates, I ran into an issue with member assignments. When a cluster is created, Rancher generates a management cluster name in the format c-m-xxxxxxxx, but the ClusterRoleTemplateBinding needs to know that name in advance to work. After digging into the Rancher source code, I found out how to set the management cluster name myself. So let’s start!

Force the cluster name with RKE2 templates

Investigation

When searching how the cluster name is generated when provisioning, I found the following code in the Rancher GitHub repository.

func mgmtClusterName() (string, error) {
    rand, err := randomtoken.Generate()
    if err != nil {
        return "", err
    }
    return name.SafeConcatName("c", "m", rand[:8]), nil
}

From the function mgmtClusterName I was able to find the following code.

if mgmtClusterNameAnnVal, ok := cluster.Annotations[mgmtClusterNameAnn]; ok && mgmtClusterNameAnnVal != "" && newCluster.Name == "" {
    // If the management cluster name annotation is set to a non-empty value, and the mgmt cluster name has not been set yet, set the cluster name to the mgmt cluster name.
    newCluster.Name = mgmtClusterNameAnnVal
} else if newCluster.Name == "" {
    // If the management cluster name annotation is not set and the cluster name has not yet been generated, generate and set a new mgmt cluster name.
    mgmtName, err := mgmtClusterName()
    if err != nil {
        return nil, status, err
    }
    newCluster.Name = mgmtName
}

Which finally leads to the following annotation.

mgmtClusterNameAnn = "provisioning.cattle.io/management-cluster-name"
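The branch order in the Go snippet above can be restated as a small sketch. This is a Python paraphrase for illustration only, not Rancher's code: the function names are hypothetical, and `secrets.token_hex` merely stands in for Rancher's `randomtoken.Generate()`, whose character set may differ.

```python
import secrets

MGMT_CLUSTER_NAME_ANN = "provisioning.cattle.io/management-cluster-name"


def generate_mgmt_cluster_name() -> str:
    """Return a random name of the form c-m-xxxxxxxx (stand-in generator)."""
    return "c-m-" + secrets.token_hex(4)  # 4 random bytes -> 8 hex characters


def resolve_mgmt_cluster_name(annotations: dict, current_name: str = "") -> str:
    """Keep an existing name, else use the annotation, else generate one."""
    if current_name:
        # A name that is already set is never overwritten.
        return current_name
    forced = annotations.get(MGMT_CLUSTER_NAME_ANN, "")
    if forced:
        # Non-empty annotation: this is the hook used to force the name.
        return forced
    return generate_mgmt_cluster_name()
```

The point of interest is the middle branch: a non-empty annotation wins as long as no name has been assigned yet.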

Forcing the management cluster name

To avoid the cluster name generated by mgmtClusterName(), we can add the annotation “provisioning.cattle.io/management-cluster-name” to the cluster.provisioning.cattle.io resource.

We can reuse the name expression that the Rancher template example uses in its ClusterRoleTemplateBinding and do the following.

apiVersion: provisioning.cattle.io/v1
kind: Cluster
metadata:
  annotations:
    provisioning.cattle.io/management-cluster-name: c-m-{{ trunc 8 (sha256sum (printf "%s/%s" $.Release.Namespace $.Values.cluster.name)) }}
    {{- if .Values.cluster.annotations }}
{{ toYaml .Values.cluster.annotations | indent 4 }}
    {{- end }}

The template code above will ensure that the management cluster name will always be the one we generated ourselves.
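Because the forced name is a pure function of the release namespace and the cluster name, it can also be computed outside Helm, for example to predict which namespace Rancher will create. The sketch below reproduces the template expression in Python, assuming that Sprig's `sha256sum` returns the lowercase hex digest of the string and that `trunc 8` keeps its first eight characters. The function name and the sample values are hypothetical.

```python
import hashlib


def forced_mgmt_cluster_name(release_namespace: str, cluster_name: str) -> str:
    """Compute c-m-<first 8 hex chars of sha256("<namespace>/<name>")>."""
    key = f"{release_namespace}/{cluster_name}"
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return "c-m-" + digest[:8]


if __name__ == "__main__":
    # Sample values only; use your own release namespace and cluster name.
    print(forced_mgmt_cluster_name("fleet-default", "my-cluster"))
```

The same inputs always give the same name, which is what lets other templates in the chart reference the cluster before Rancher has created it.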

Now let’s check the ClusterRoleTemplateBinding resource for automatically assigning users and groups.

RKE2 ClusterRoleTemplateBinding

To predefine users and groups in the cluster, we can use the template clusterroletemplatebinding.yaml.

{{ $root := . }}
{{- range $index, $member := .Values.clusterMembers }}
---
apiVersion: management.cattle.io/v3
clusterName: c-m-{{ trunc 8 (sha256sum (printf "%s/%s" $root.Release.Namespace $root.Values.cluster.name)) }}
kind: ClusterRoleTemplateBinding
metadata:
  name: ctrb-{{ trunc 8 (sha256sum (printf "%s/%s/%s" $root.Release.Namespace $member.principalName $member.roleTemplateName )) }}
  namespace: c-m-{{ trunc 8 (sha256sum (printf "%s/%s" $root.Release.Namespace $root.Values.cluster.name)) }}
roleTemplateName: {{ $member.roleTemplateName }}
userPrincipalName: {{ $member.principalName }}
{{- end }}

For the metadata.name, I added the RoleTemplateName to avoid identical names if you add the same user with different roles.
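To see why the role has to be part of the hash input, here is a minimal Python sketch of both naming variants. It assumes the same `sha256sum`/`trunc 8` semantics as the template (lowercase hex digest, first eight characters); the function names are hypothetical and the principal is the placeholder from the values example.

```python
import hashlib


def _short_hash(key: str) -> str:
    """First 8 hex characters of the SHA-256 digest of key."""
    return hashlib.sha256(key.encode("utf-8")).hexdigest()[:8]


def binding_name(release_namespace: str, principal_name: str, role_template_name: str) -> str:
    """Name used in the template above: namespace, principal and role are hashed."""
    return "ctrb-" + _short_hash(f"{release_namespace}/{principal_name}/{role_template_name}")


def binding_name_without_role(release_namespace: str, principal_name: str) -> str:
    """Comparison variant that hashes only namespace and principal."""
    return "ctrb-" + _short_hash(f"{release_namespace}/{principal_name}")


if __name__ == "__main__":
    user = "local://u-xxxxx"
    roles = ["cluster-member", "cluster-owner"]
    # With the role in the hash input, each (user, role) pair gets its own name.
    print([binding_name("fleet-default", user, role) for role in roles])
    # Without it, both bindings would render with the same metadata.name.
    print([binding_name_without_role("fleet-default", user) for _ in roles])
```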

In the values.yaml you will need to provide the following information:

clusterMembers:
  - principalName: "local://u-xxxxx"
    roleTemplateName: "cluster-member"
  - principalName: "local://u-xxxxx"
    roleTemplateName: "cluster-owner"

With local users only, this is easy: you specify local:// followed by the ID of the user. But if you use groups, you may not know what value to provide. The same applies to your custom roles. The easiest way is to assign your members manually once and read the YAML of the resources that Rancher creates.

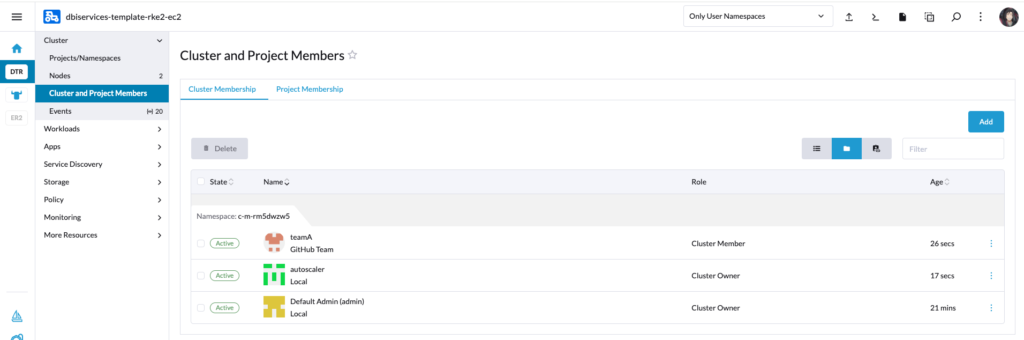

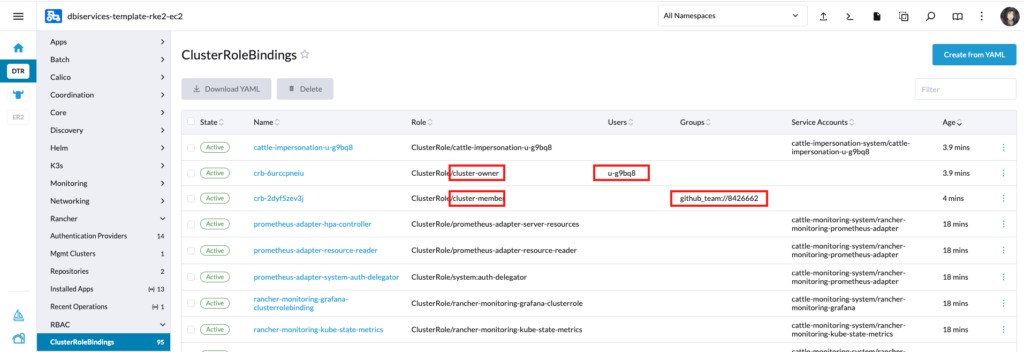

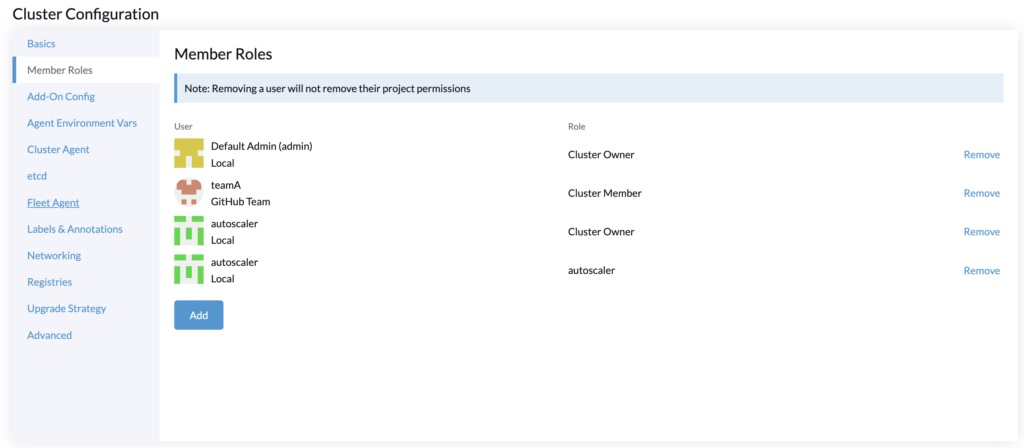

For this example, I am adding my GitHub group “teamA” as a cluster member, and a local user “autoscaler” as a cluster owner.

Now go to More Resources > RBAC > ClusterRoleBindings and sort by age.

You should find the values to specify for principalName and roleTemplateName.
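If there are many members, copying the values by hand gets tedious. The following Python sketch turns a JSON export of the bindings into `clusterMembers` entries. It assumes the export is a List object whose items carry `userPrincipalName` or `groupPrincipalName` plus `roleTemplateName` at the top level, as ClusterRoleTemplateBinding objects do; the function name and the sample data are hypothetical.

```python
import json


def members_from_bindings(bindings_json: str) -> list:
    """Extract clusterMembers entries from a JSON list of bindings."""
    members = []
    for item in json.loads(bindings_json).get("items", []):
        # A binding targets either a user principal or a group principal.
        principal = item.get("userPrincipalName") or item.get("groupPrincipalName")
        role = item.get("roleTemplateName")
        if principal and role:
            members.append({"principalName": principal, "roleTemplateName": role})
    return members


if __name__ == "__main__":
    # Hand-written sample shaped like a List object; real exports have more fields.
    sample = json.dumps({"items": [
        {"userPrincipalName": "local://u-xxxxx", "roleTemplateName": "cluster-owner"},
        {"groupPrincipalName": "github_team://0000000", "roleTemplateName": "cluster-member"},
    ]})
    for m in members_from_bindings(sample):
        print(f'- principalName: "{m["principalName"]}"')
        print(f'  roleTemplateName: "{m["roleTemplateName"]}"')
```

Bindings without a principal or role, such as incomplete or unrelated objects, are skipped rather than emitted with empty values.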

New issues

When the cluster is created for the first time, Rancher automatically creates a namespace named after the management cluster. The ClusterRoleTemplateBinding must be deployed into this namespace, so it cannot be created by the same Helm install that creates the cluster. In addition, it has to wait until Rancher has provisioned the Cluster resources.

helm install --generate-name=true --namespace=fleet-default --timeout=10m0s --values=/home/shell/helm/values-dbiservices-template-ec2-0.0.1.yaml --version=0.0.1 --wait=true /home/shell/helm/dbiservices-template-ec2-0.0.1.tgz
2024-02-28T12:57:21.998195671Z Error: INSTALLATION FAILED: 1 error occurred:
2024-02-28T12:57:21.998226718Z * namespaces "c-m-dc91e1f4" not found

or

2024-03-04T11:48:15.121066673Z * admission webhook "rancher.cattle.io.clusterroletemplatebindings.management.cattle.io" denied the request: clusterroletemplatebinding.clusterName: Invalid value: "c-m-dc91e1f4": specified cluster c-m-dc91e1f4 not found

Therefore, to get the users assigned, the Helm release must be upgraded once after the first deployment.

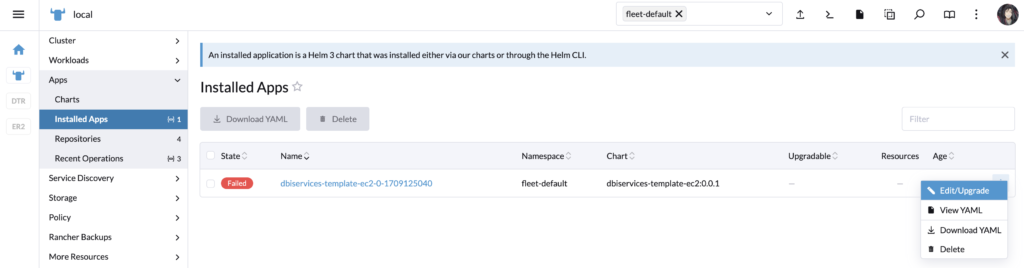

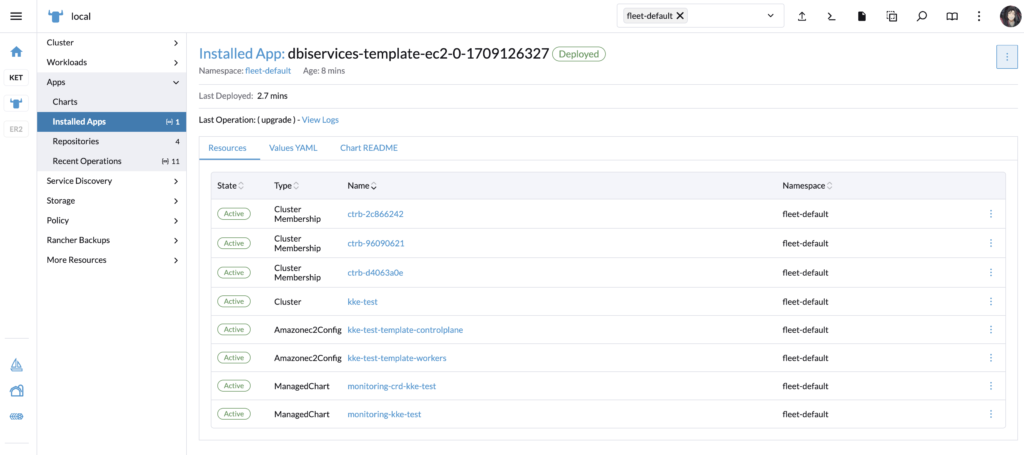

In the local cluster, under Apps > Installed Apps, in the fleet-default namespace, edit/update the App and redeploy it.

You don’t need to change any values. The redeployment will correctly create the ClusterRoleTemplateBinding and assign the users/groups.

helm upgrade --history-max=5 --install=true --namespace=fleet-default --timeout=10m0s --values=/home/shell/helm/values-dbiservices-template-ec2-0.0.1.yaml --version=0.0.1 --wait=true dbiservices-template-ec2-0-1709125040 /home/shell/helm/dbiservices-template-ec2-0.0.1.tgz
2024-02-28T13:02:07.755854608Z checking 6 resources for changes
2024-02-28T13:02:07.770167607Z Patch Amazonec2Config "dbiservices-template-rke2-ec2-template-controlplane" in namespace fleet-default
2024-02-28T13:02:07.795076109Z Patch Amazonec2Config "dbiservices-template-rke2-ec2-template-workers" in namespace fleet-default
2024-02-28T13:02:07.831247897Z Patch Cluster "dbiservices-template-rke2-ec2" in namespace fleet-default
2024-02-28T13:02:07.931325393Z Created a new ClusterRoleTemplateBinding called "ctrb-d4063a0e" in c-m-dc91e1f4
2024-02-28T13:02:07.931347721Z
2024-02-28T13:02:07.961962070Z Patch ManagedChart "monitoring-crd-dbiservices-template-rke2-ec2" in namespace fleet-default
2024-02-28T13:02:08.027434827Z Patch ManagedChart "monitoring-dbiservices-template-rke2-ec2" in namespace fleet-default
2024-02-28T13:02:08.065622593Z beginning wait for 6 resources with timeout of 10m0s
2024-02-28T13:02:08.126940145Z Release "dbiservices-template-ec2-0-1709125040" has been upgraded. Happy Helming!
2024-02-28T13:02:08.126959636Z NAME: dbiservices-template-ec2-0-1709125040
2024-02-28T13:02:08.126964700Z LAST DEPLOYED: Wed Feb 28 13:02:06 2024
2024-02-28T13:02:08.126969197Z NAMESPACE: fleet-default
2024-02-28T13:02:08.126973071Z STATUS: deployed
2024-02-28T13:02:08.126977312Z REVISION: 2
2024-02-28T13:02:08.126981410Z TEST SUITE: None
2024-02-28T13:02:08.150360499Z
2024-02-28T13:02:08.150390675Z ---------------------------------------------------------------------
2024-02-28T13:02:08.156224976Z SUCCESS: helm upgrade --history-max=5 --install=true --namespace=fleet-default --timeout=10m0s --values=/home/shell/helm/values-dbiservices-template-ec2-0.0.1.yaml --version=0.0.1 --wait=true dbiservices-template-ec2-0-17091
/home/shell/helm/dbiservices-template-ec2-0.0.1.tgz
2024-02-28T13:02:08.157013523Z ---------------------------------------------------------------------

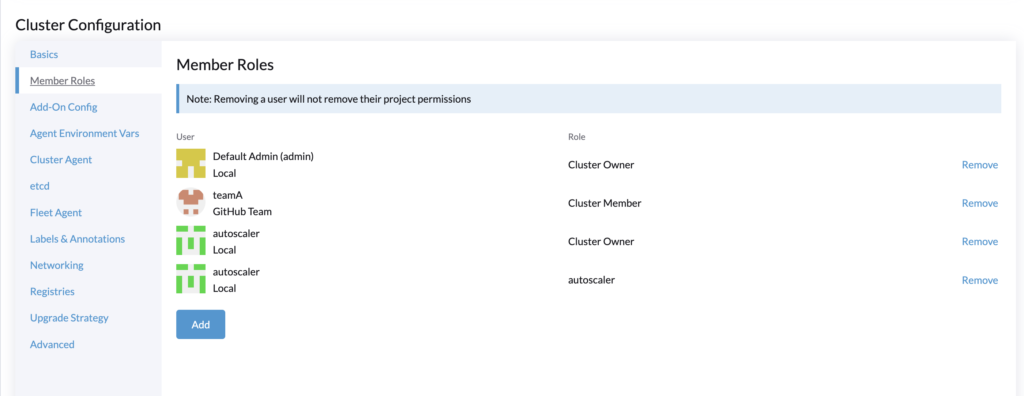

The following member roles have been created for the cluster and are shown in the cluster configuration.

- principalName: "local://u-g9bq8"
  roleTemplateName: "cluster-owner"
- principalName: "github_team://8426662"
  roleTemplateName: "cluster-member"
- principalName: "local://u-g9bq8"
  roleTemplateName: "rt-tz9xs"

Helm lookup

Because of this namespace issue, forcing the management cluster name brings little advantage: we still need to manually redeploy the App (cluster template) to assign the users/groups.

Therefore we can use the Helm lookup function, which reads the cluster name directly from the local Kubernetes cluster. As before, the App (cluster template) has to be redeployed after the first deployment, because several resources must be provisioned by Rancher first.

Here is the code for clusterroletemplatebinding.yaml:

{{- $root := . }}
{{- $fetchedcluster := (lookup "provisioning.cattle.io/v1" "Cluster" "fleet-default" .Values.cluster.name) }}
{{- if ($fetchedcluster.status | default nil).clusterName | default nil }}
{{- range $index, $member := .Values.clusterMembers }}
---
apiVersion: management.cattle.io/v3
clusterName: {{ $fetchedcluster.status.clusterName }}
kind: ClusterRoleTemplateBinding
metadata:
  name: ctrb-{{ trunc 8 (sha256sum (printf "%s/%s/%s" $root.Release.Namespace $member.principalName $member.roleTemplateName )) }}
  namespace: {{ $fetchedcluster.status.clusterName }}
roleTemplateName: {{ $member.roleTemplateName }}
userPrincipalName: {{ $member.principalName }}
{{- end }}
{{- end }}

It looks in fleet-default for the cluster.provisioning.cattle.io/v1 resource that is created by the RKE2 template itself. On the first deployment of the RKE2 template, that resource doesn’t exist yet, which is why the “if” condition is there.
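The guard can be read as: render bindings only when the looked-up Cluster already reports `status.clusterName`. The sketch below restates that check in Python on plain dictionaries. It relies on Helm's `lookup` returning an empty map when the object is not found (which is also what it returns during `helm template` or a dry run); the function names and the dictionary shapes are hypothetical.

```python
from typing import Optional


def fetched_cluster_name(cluster: Optional[dict]) -> Optional[str]:
    """Return status.clusterName, or None if any level is missing or empty."""
    status = (cluster or {}).get("status") or {}
    return status.get("clusterName") or None


def render_bindings(cluster: Optional[dict], members: list) -> list:
    """Build one binding per member, or nothing while the name is unknown."""
    name = fetched_cluster_name(cluster)
    if not name:
        # First install: the Cluster does not exist yet, so skip every binding.
        return []
    return [
        {
            "clusterName": name,
            "namespace": name,
            "roleTemplateName": member["roleTemplateName"],
            "userPrincipalName": member["principalName"],
        }
        for member in members
    ]
```

An empty lookup result and a Cluster whose status is not yet populated both end up in the same branch, which is why the first install renders no bindings at all instead of failing.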

Once the RKE2 template is deployed, you can, as before, edit the App to redeploy it, and it will then create the ClusterRoleTemplateBinding.

The helm install will show no errors as the ClusterRoleTemplateBinding resources are skipped.

helm install --generate-name=true --namespace=fleet-default --timeout=10m0s --values=/home/shell/helm/values-dbiservices-template-ec2-0.0.1.yaml --version=0.0.1 --wait=true /home/shell/helm/dbiservices-template-ec2-0.0.1.tgz
2024-02-28T13:18:48.886232477Z creating 5 resource(s)
beginning wait for 5 resources with timeout of 10m0s
2024-02-28T13:18:49.098629894Z NAME: dbiservices-template-ec2-0-1709126327
2024-02-28T13:18:49.098705783Z LAST DEPLOYED: Wed Feb 28 13:18:47 2024
NAMESPACE: fleet-default
STATUS: deployed
2024-02-28T13:18:49.098717154Z REVISION: 1
2024-02-28T13:18:49.098720727Z TEST SUITE: None
2024-02-28T13:18:49.126871035Z
2024-02-28T13:18:49.126936065Z ---------------------------------------------------------------------
2024-02-28T13:18:49.135118662Z SUCCESS: helm install --generate-name=true --namespace=fleet-default --timeout=10m0s --values=/home/shell/helm/values-dbiservices-template-ec2-0.0.1.yaml --version=0.0.1 --wait=true /home/shell/helm/dbiservices-template-ec2-0.0.1.tgz
---------------------------------------------------------------------

Then, when the App is redeployed, the output shows the bindings being created, because the cluster name now exists.

helm upgrade --history-max=5 --install=true --namespace=fleet-default --timeout=10m0s --values=/home/shell/helm/values-dbiservices-template-ec2-0.0.1.yaml --version=0.0.1 --wait=true dbiservices-template-ec2-0-1709126327 /home/shell/helm/dbiservices-template-ec2-0.0.1.tgz
checking 8 resources for changes
Patch Amazonec2Config "kke-test-template-controlplane" in namespace fleet-default
Patch Amazonec2Config "kke-test-template-workers" in namespace fleet-default
Patch Cluster "kke-test" in namespace fleet-default
Created a new ClusterRoleTemplateBinding called "ctrb-96090621" in c-m-58wcfhnl
2024-02-28T13:24:13.652797054Z
Created a new ClusterRoleTemplateBinding called "ctrb-2c866242" in c-m-58wcfhnl
Created a new ClusterRoleTemplateBinding called "ctrb-d4063a0e" in c-m-58wcfhnl
2024-02-28T13:24:13.689031588Z
Patch ManagedChart "monitoring-crd-kke-test" in namespace fleet-default
Patch ManagedChart "monitoring-kke-test" in namespace fleet-default
beginning wait for 8 resources with timeout of 10m0s
Release "dbiservices-template-ec2-0-1709126327" has been upgraded. Happy Helming!
2024-02-28T13:24:13.872941208Z NAME: dbiservices-template-ec2-0-1709126327
2024-02-28T13:24:13.872946743Z LAST DEPLOYED: Wed Feb 28 13:24:11 2024
2024-02-28T13:24:13.872951269Z NAMESPACE: fleet-default
2024-02-28T13:24:13.872955002Z STATUS: deployed
2024-02-28T13:24:13.872958850Z REVISION: 4
2024-02-28T13:24:13.872962507Z TEST SUITE: None
---------------------------------------------------------------------
SUCCESS: helm upgrade --history-max=5 --install=true --namespace=fleet-default --timeout=10m0s --values=/home/shell/helm/values-dbiservices-template-ec2-0.0.1.yaml --version=0.0.1 --wait=true dbiservices-template-ec2-0-1709126327 /home/shell/helm/dbiservices-template-ec2-0.0.1.tgz
---------------------------------------------------------------------

Conclusion

The assignment of users/groups to a cluster through a template is not simple. My approach might be wrong, but it was the first solution I thought of when I encountered the problem.

Having to wait for the creation (first deployment with Helm) is a little annoying, but it is not much of an issue once you know that the RKE2 template has to be redeployed. Also, if you use Fleet to continuously deploy/update managed clusters, you could add the values for member configuration after the first deployment to avoid managing this through the UI as shown above.

Below is the link to the GitHub Repository branch for the RKE2 templates using a fixed management cluster name and Helm function lookup.

Sources

- Official documentation for RKE2 templates
  https://ranchermanager.docs.rancher.com/how-to-guides/new-user-guides/manage-clusters/manage-cluster-templates#rke2-cluster-template
- GitHub Branch for clusterroles
  https://github.com/kkedbi/cluster-template-examples/tree/clusterroles

Blog

- RKE2 Autoscaling
  https://www.dbi-services.com/blog/rancher-autoscaler-enable-rke2-node-autoscaling/
- Reestablish administrator role access to rancher users
  https://www.dbi-services.com/blog/reestablish-administrator-role-access-to-rancher-users/
- Introduction and RKE2 cluster template for AWS EC2
  https://www.dbi-services.com/blog/rancher-rke2-cluster-templates-for-aws-ec2
