So far, in my Zabbix monitoring posts, I have only covered Linux-based servers. MS-SQL is an exception, but it was monitored via ODBC, thus without any Zabbix agent involved.

In this blog post, I will explain how I monitored a Document Management System (DMS) like M-Files, which runs on Windows.

By the way, Vincent wrote a great blog post giving an overview of M-Files.

Zabbix Agent Installation

Installation is straightforward, as it consists of a simple MSI file, so there is no need to spend much time on it if there are no specific needs besides setting up the agent in active mode (the Zabbix agent pushes data to the Zabbix server).

Why did I choose active mode? Because I have something in mind, which we will see later in this blog post.

Components Monitoring

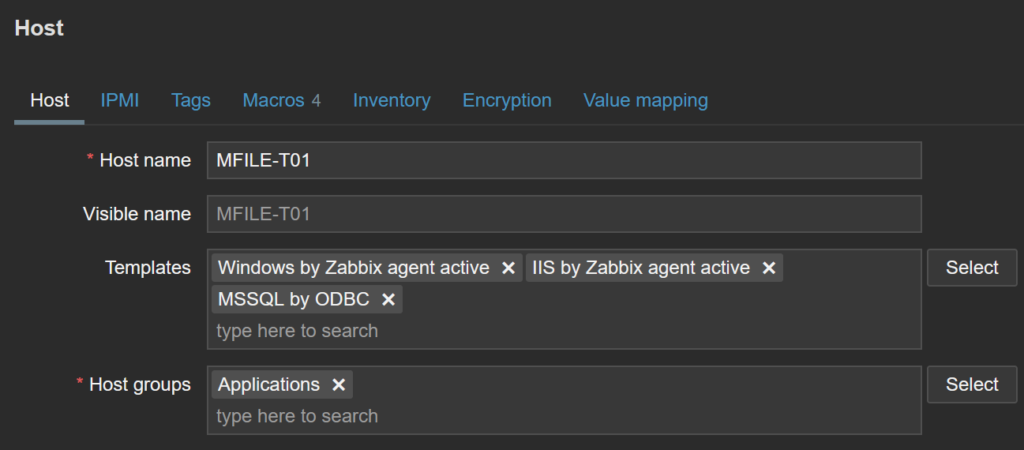

How can we monitor a whole application that uses multiple components? As a first step, by using out-of-the-box templates. As far as known components are concerned, M-Files runs on a Windows server and uses an MS SQL database and an IIS web server. So, I will go for the following templates:

To use these templates, we simply need to link them to the M-Files host:

Then one click on the Update/Add button and we are done.

Macros

For the Windows template, no extra settings are required unless you need to customize thresholds or choose which filesystems or services to monitor.

Regarding the IIS template, as M-Files was set up on HTTPS, we must set two macros:

- {$IIS.PORT} to indicate the port (i.e. 443)

- {$IIS.SERVICE} to https
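To see why these two macros matter, here is a minimal Python sketch of user macro resolution: a value defined on the host overrides the template default. The item key net.tcp.service[{$IIS.SERVICE},,{$IIS.PORT}] and the defaults 80/http are assumptions about the IIS template, not copied from it, so check the template shipped with your Zabbix version. Global and context macros are left out of this sketch.

```python
import re

# Assumed template defaults (hypothetical, verify in the IIS template itself).
TEMPLATE_MACROS = {"{$IIS.PORT}": "80", "{$IIS.SERVICE}": "http"}

# Host-level values set on the M-Files host.
HOST_MACROS = {"{$IIS.PORT}": "443", "{$IIS.SERVICE}": "https"}

# User macro names use uppercase letters, digits, underscores and dots.
USER_MACRO = re.compile(r"\{\$[A-Z0-9_.]+\}")


def resolve(key: str, host: dict, template: dict) -> str:
    """Replace each user macro, preferring the host value over the template default."""
    def lookup(m: re.Match) -> str:
        name = m.group(0)
        if name in host:
            return host[name]
        if name in template:
            return template[name]
        return name  # unknown macros are left untouched in this sketch
    return USER_MACRO.sub(lookup, key)


key = "net.tcp.service[{$IIS.SERVICE},,{$IIS.PORT}]"  # assumed template item key
print(resolve(key, HOST_MACROS, TEMPLATE_MACROS))  # net.tcp.service[https,,443]
print(resolve(key, {}, TEMPLATE_MACROS))           # net.tcp.service[http,,80]
```

Without the host-level overrides, the check would keep probing plain HTTP on port 80, which is exactly what an HTTPS-only M-Files setup does not answer.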

For the IIS application pools, there is nothing else to define, as they will be discovered by the Application pools discovery LLD rule.

As for MS SQL, you can refer to my previous blog post for more details.

And? That’s all? No. As you probably know by now, I am curious to see how much further we can go with Zabbix.

Extend Monitoring

The M-Files server integrates closely with Windows; for instance, it sends all M-Files logs to the Windows Event Log. Thankfully, Zabbix can read it.

When we want to monitor logs or events, we must use the Zabbix agent in active mode. Why? Because we want the patterns detected in logs (for example, ERROR, WARN, …) to be pushed to the Zabbix server as soon as they occur. We don’t want to wait for the Zabbix server polling interval.

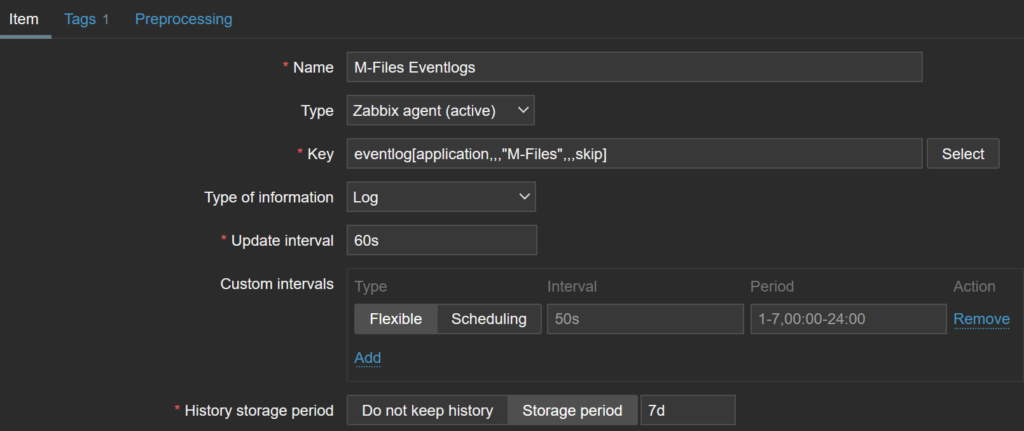

Let’s create a new template named M-Files by Zabbix agent active and create our first item in it:

Application is the name of the event log, M-Files is the source, and skip indicates that only newly created entries will be processed. I set a 7-day history storage period for debugging purposes, but under normal conditions it should be shorter, or even disabled, as we will create dependent items that pick out what we are interested in.
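The exact key is only visible in the screenshot. Based on the documented signature eventlog[name,<regexp>,<severity>,<source>,<eventid>,<maxlines>,<mode>], the parameters described above presumably translate into eventlog[Application,,,M-Files,,,skip]. The small helper below is hypothetical (it is not part of Zabbix) and simply assembles such a key, quoting a parameter when it could not safely be written unquoted.

```python
def quote_param(value: str) -> str:
    """Quote a key parameter when writing it unquoted could be ambiguous (conservative check)."""
    if any(c in value for c in ',]"') or value.startswith((" ", "[")):
        # Inside a quoted parameter, double quotes are backslash-escaped.
        return '"' + value.replace('"', '\\"') + '"'
    return value


def eventlog_key(name: str, regexp: str = "", severity: str = "", source: str = "",
                 eventid: str = "", maxlines: str = "", mode: str = "") -> str:
    """Build an eventlog[...] item key from its positional parameters."""
    params = [name, regexp, severity, source, eventid, maxlines, mode]
    # Trailing empty parameters can be omitted from an item key.
    while params and params[-1] == "":
        params.pop()
    return "eventlog[" + ",".join(quote_param(p) for p in params) + "]"


print(eventlog_key("Application", source="M-Files", mode="skip"))
# eventlog[Application,,,M-Files,,,skip]
```

Positional parameters are easy to shift by one comma; building the key from named arguments makes it obvious that M-Files lands in the source slot and skip in the mode slot.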

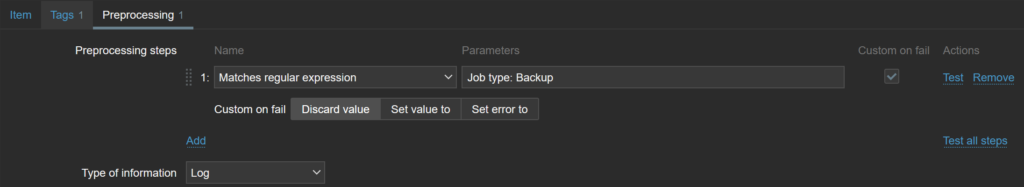

The first level of dependent item will be about backups, with a preprocessing step that keeps only what concerns backups:

This item looks for the string Job type: Backup and discards the value if it is not found. Without the Custom on fail option, it would raise an error during evaluation whenever the string is not found (not all events concern backups).
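The behaviour of this preprocessing step can be modelled in a few lines of Python. This is only an illustration, assuming a regular expression match on the literal string Job type: Backup (which step type was used is only visible in the screenshot); returning None stands in for "discard value", and the sample event texts are made up.

```python
import re
from typing import Optional

# Pattern taken from the post; everything else below is illustrative.
BACKUP_PATTERN = re.compile(r"Job type: Backup")


def filter_backup(event: str) -> Optional[str]:
    """Keep the value if it concerns a backup, otherwise discard it.

    Returning None mimics "Custom on fail: Discard value": a non-matching
    event is silently dropped instead of raising a preprocessing error.
    """
    if BACKUP_PATTERN.search(event):
        return event
    return None


samples = [
    "Job type: Backup ... Status: Succeeded.",  # hypothetical backup event
    "Some unrelated M-Files event",             # hypothetical other event
]
kept = [e for e in samples if filter_backup(e) is not None]
print(kept)  # ['Job type: Backup ... Status: Succeeded.']
```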

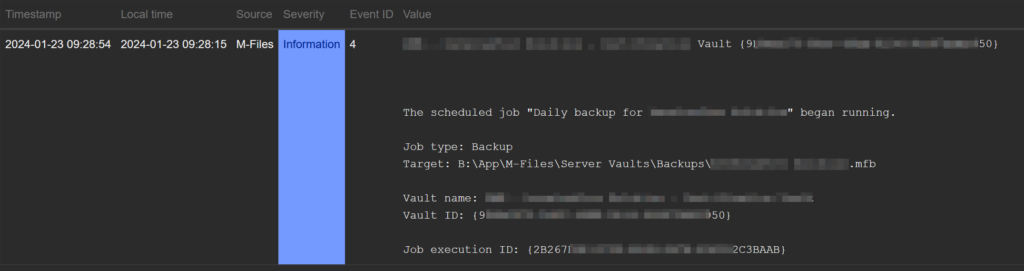

Now, as soon as a new event is logged, we will find it in the item history (below is the event of a backup job starting):

Are we up to date on Backups?

One M-Files server can host multiple vaults, and each vault should have a backup job running at least daily. I don’t want to define an item for each vault manually, so we must find a way to discover them dynamically.

I found that the list of vaults can be extracted from the Windows registry, which Zabbix can read. The discovery key is the following:

registry.get[HKLM\SOFTWARE\Motive\M-Files\Version\Server\MFServer\Vaults,values,"^DisplayName$"]

This returns an array of matching registry entries, each of them looking like this:

{
  "DisplayName": "M-Files Vault Name",
  "DataFolder": "B:\\App\\M-Files\\Server Vaults\\VaultName",
  "VaultUID": "{1BFAAAAA-D7A2-AAAA-8F17-DEAAAA26F1C0}"
}

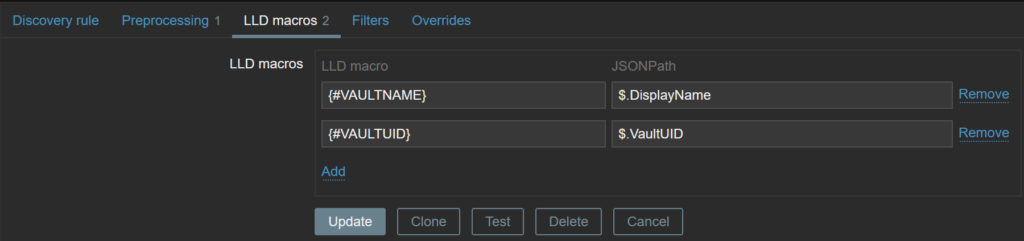

In the LLD rule, we must map the macro to a JSONPath, which we will later use in the prototypes:

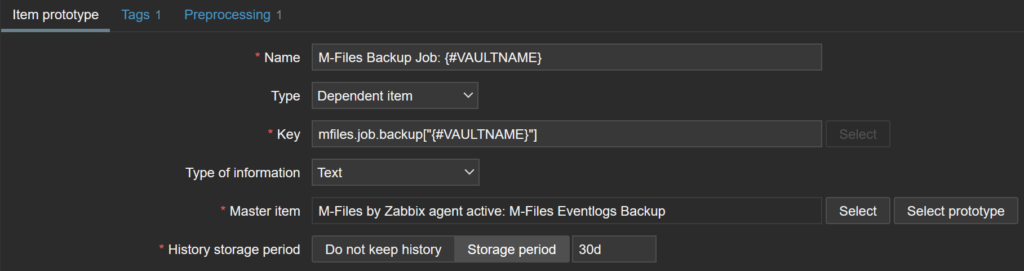
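Conceptually, each object of the discovery array is one LLD row, and the JSONPath is evaluated against every row to fill the macro. The Python sketch below emulates a mapping of {#VAULTNAME} to $.DisplayName; that path is an assumption (the actual one is only in the screenshot), the payload reuses the example above, and the second vault is made up.

```python
import json

# Hypothetical discovery payload: one object per vault, shaped like the
# example above. The second vault is invented for illustration.
PAYLOAD = json.dumps([
    {
        "DisplayName": "M-Files Vault Name",
        "DataFolder": "B:\\App\\M-Files\\Server Vaults\\VaultName",
        "VaultUID": "{1BFAAAAA-D7A2-AAAA-8F17-DEAAAA26F1C0}",
    },
    {
        "DisplayName": "Another Vault",
        "DataFolder": "B:\\App\\M-Files\\Server Vaults\\AnotherVault",
        "VaultUID": "{00000000-0000-0000-0000-000000000000}",
    },
])


def to_lld_rows(payload: str, macro: str, field: str) -> list:
    """Emulate an LLD macro mapped to the JSONPath $.<field> on each row."""
    rows = json.loads(payload)
    # In this sketch, rows lacking the field simply produce no macro value.
    return [{macro: row[field]} for row in rows if field in row]


for row in to_lld_rows(PAYLOAD, "{#VAULTNAME}", "DisplayName"):
    print(row)
# {'{#VAULTNAME}': 'M-Files Vault Name'}
# {'{#VAULTNAME}': 'Another Vault'}
```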

For each vault, we want to create a dedicated item that filters on the vault name (the event log entries contain the vault name):

- Item prototype definition:

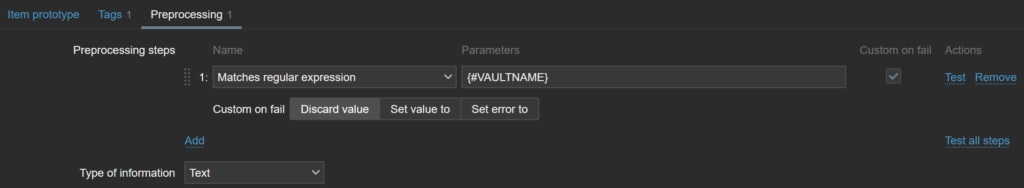

- Item prototype preprocessing, which filters on the vault name:

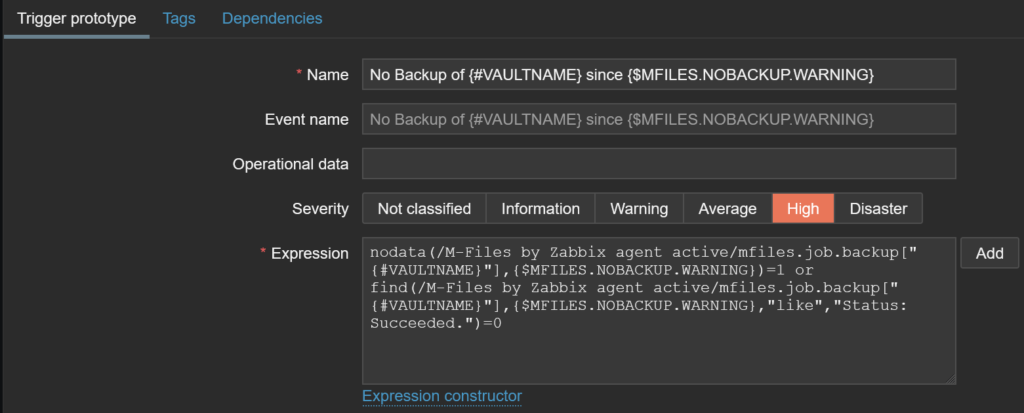
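Once the macro is known, each discovered vault gets its own key such as mfiles.job.backup["M-Files Vault Name"], and each of these items keeps only the events mentioning its vault. The Python sketch below illustrates both steps under the assumption of a plain "contains the vault name" filter; the vault names and event text are hypothetical.

```python
from typing import Dict, List


def item_key(vault_name: str) -> str:
    """Expand the item prototype key for one discovered vault.

    Double quotes inside a quoted key parameter must be backslash-escaped.
    """
    escaped = vault_name.replace('"', '\\"')
    return f'mfiles.job.backup["{escaped}"]'


def expand_prototypes(vault_names: List[str]) -> Dict[str, str]:
    """Map each vault name to the item key created from the prototype."""
    return {name: item_key(name) for name in vault_names}


def route_event(event: str, vault_names: List[str]) -> List[str]:
    """Return the vaults whose item would keep this event (substring filter)."""
    return [name for name in vault_names if name in event]


vaults = ["Sales", "Sales Archive", "HR"]  # hypothetical vault names
print(expand_prototypes(vaults))
print(route_event("Job type: Backup, Vault: Sales Archive, Status: Succeeded.", vaults))
# ['Sales', 'Sales Archive']
```

The second print shows a pitfall of a bare substring filter: a vault whose name is contained in another vault's name also receives that vault's events. If the event text has a recognizable delimiter around the vault name, including it in the preprocessing pattern should avoid this.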

Now that we have an item that stores events for each vault, we can create trigger prototypes. I want the following trigger conditions:

- There is no event related to the vault for a given period, for example, 25 hours

- There is no successful backup of the vault for a given period

The first part of the expression looks like this:

nodata(/M-Files by Zabbix agent active/mfiles.job.backup["{#VAULTNAME}"],{$MFILES.NOBACKUP.WARNING})=1

And the second part:

find(/M-Files by Zabbix agent active/mfiles.job.backup["{#VAULTNAME}"],{$MFILES.NOBACKUP.WARNING},"like","Status: Succeeded.")=0

I defined a user macro named {$MFILES.NOBACKUP.WARNING} in the template, which contains the number of seconds to wait before raising the trigger. I set its default value to 25 hours (90000 seconds).
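To reason about when the trigger fires, here is a simplified Python model of the two functions over a history of (timestamp, value) pairs: nodata() returns 1 when nothing arrived in the window, and find() with the "like" operator returns 1 when a value in the window contains the pattern. Joining both parts with "or" in a single expression is an assumption, the window boundaries are simplified, and the event texts are made up.

```python
from typing import List, Tuple

# {$MFILES.NOBACKUP.WARNING}: 25 hours expressed in seconds.
NOBACKUP_WARNING = 25 * 3600  # 90000

History = List[Tuple[int, str]]  # (unix timestamp, event text)


def in_window(ts: int, now: int, period: int) -> bool:
    """True if the timestamp falls within the last `period` seconds."""
    return now - period < ts <= now


def nodata(history: History, period: int, now: int) -> int:
    """Simplified nodata(): 1 if no value arrived in the window, else 0."""
    return 0 if any(in_window(ts, now, period) for ts, _ in history) else 1


def find_like(history: History, period: int, pattern: str, now: int) -> int:
    """Simplified find(..., "like", pattern): 1 if a value in the window contains pattern."""
    return 1 if any(in_window(ts, now, period) and pattern in v for ts, v in history) else 0


def backup_problem(history: History, now: int, period: int = NOBACKUP_WARNING) -> bool:
    # Assumption: both parts are combined with "or" in one trigger expression.
    return (nodata(history, period, now) == 1
            or find_like(history, period, "Status: Succeeded.", now) == 0)


now = 1_000_000
print(backup_problem([(now - 7200, "Job type: Backup ... Status: Succeeded.")], now))  # False
print(backup_problem([(now - 3600, "Job type: Backup ... Status: Started.")], now))    # True
print(backup_problem([], now))                                                          # True
```

A job that started but never logged a success within 25 hours is flagged, just like a vault that produced no backup event at all.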

Finally, we are ready to link the M-Files template to the host to see how it behaves.

What Next?

An MS-SQL database is not mandatory: M-Files also supports a Firebird database for small setups. We could imagine monitoring the database size to ensure we stay within the supported limits. As we did with the LLD definition, we could also create these items and triggers automatically for each vault hosted by the M-Files server.

Our Offer

As a Certified M-Files Delivery Partner (https://catalog.m-files.com/certified-delivery-partners), we offer:

- Enhanced expertise: With a deep understanding of M-Files solutions, our team ensures superior quality ECM implementations.

- Increased security and compliance: As a certified partner, we adhere to the highest standards to protect your data.

- Tailored and effective solution: This certification enables us to finely tune M-Files solutions to your specific needs, boosting the efficiency of your organization.

For more information: https://www.dbi-services.com


Pranas

06.01.2025

Hi, I am trying to monitor our M-Files app server with Zabbix, and there is no IIS on our M-Files app server.

Morgan Patou

13.01.2025

Hello,

Well, that depends on whether it has been installed/configured or not, which in turn depends on what you are using and how exactly you are using M-Files.

Regards,

Morgan Patou