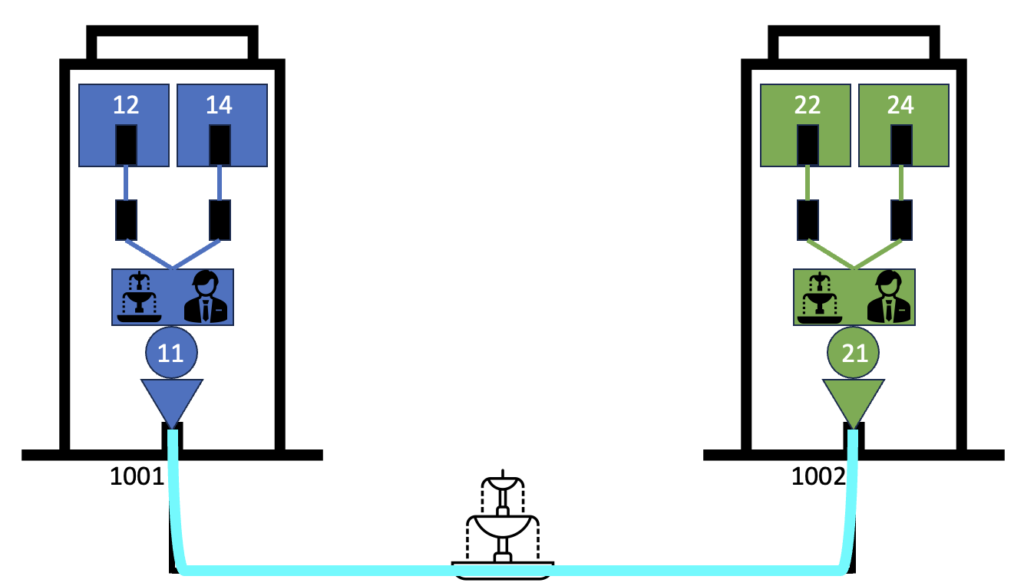

If you are new to networking in Kubernetes or uneasy with it, you may benefit from my previous blog post, which is aimed at beginners. In this blog post I will show you what a building and its networking components look like in a real Kubernetes cluster. As a reminder, below is the picture I drew in my previous blog post to illustrate the networking in a Kubernetes cluster with Cilium:

If you want to understand networking in Kubernetes in more detail, read on: this blog post is for you! I’ll assume you know the basics of Kubernetes and how to interact with it; otherwise you may find our training course on it very interesting (in English or in French)!

Diving into the IP Addresses configuration

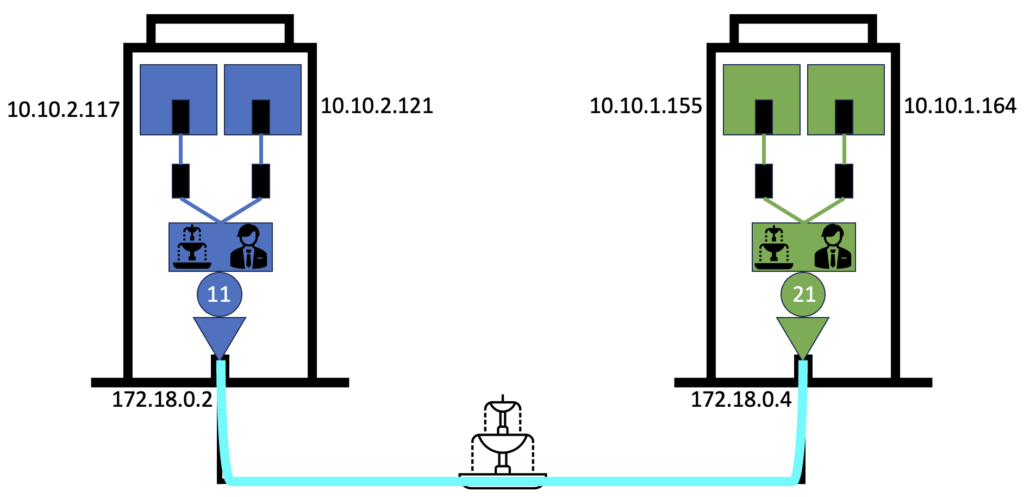

Let’s start by checking our environment and updating our picture with real information from our Kubernetes cluster:

$ kubectl get no -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

mycluster-control-plane Ready control-plane 113d v1.27.3 172.18.0.3 <none> Debian GNU/Linux 11 (bullseye) 5.15.0-94-generic containerd://1.7.1

mycluster-worker Ready <none> 113d v1.27.3 172.18.0.2 <none> Debian GNU/Linux 11 (bullseye) 5.15.0-94-generic containerd://1.7.1

mycluster-worker2 Ready <none> 113d v1.27.3 172.18.0.4 <none> Debian GNU/Linux 11 (bullseye) 5.15.0-94-generic containerd://1.7.1

$ kubectl get po -n networking101 -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

busybox-c8bbbbb84-fmhwc 1/1 Running 1 (24m ago) 3d23h 10.10.1.164 mycluster-worker2 <none> <none>

busybox-c8bbbbb84-t6ggh 1/1 Running 1 (24m ago) 3d23h 10.10.2.117 mycluster-worker <none> <none>

netshoot-7d996d7884-fwt8z 1/1 Running 0 79s 10.10.2.121 mycluster-worker <none> <none>

netshoot-7d996d7884-gcxrm 1/1 Running 0 80s 10.10.1.155 mycluster-worker2 <none> <none>

You can now see for real that the IP subnets of the pods are different from the subnet of the nodes. The pod subnet also differs from one node to the other. If you are not sure why, that is perfectly normal because it is not so clear at this stage. So let’s clarify it by checking our Cilium configuration.

I told you in my previous blog post that there is one Cilium Agent per building. This Agent is itself a pod and it takes care of networking on the node. This is what the Agents look like in our cluster:

$ kubectl get po -n kube-system -owide|grep cilium

cilium-9zh9s 1/1 Running 5 (65m ago) 113d 172.18.0.3 mycluster-control-plane <none> <none>

cilium-czffc 1/1 Running 5 (65m ago) 113d 172.18.0.4 mycluster-worker2 <none> <none>

cilium-dprvh 1/1 Running 5 (65m ago) 113d 172.18.0.2 mycluster-worker <none> <none>

cilium-operator-6b865946df-24ljf 1/1 Running 5 (65m ago) 113d 172.18.0.2 mycluster-worker <none> <none>

There are two things to notice here:

- The Cilium Agent is deployed as a DaemonSet, which is how you make sure there is always one on each node of our cluster. As it is a pod, it also gets an IP address… but wait a minute… this is the same IP address as the node! Exactly! This is a special case of pod IP address assignment, typically used for system pods that need direct access to the node (host) network; such pods set hostNetwork: true in their spec. If you look at the pods in the kube-system namespace, you’ll see that most of them use the node IP address.

- The Cilium Operator pod is responsible for IP address management in the cluster, so it gives each Cilium Agent the range it can use.
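One way to check the host-network point yourself is to ask the API server for the hostNetwork field of each pod. The sketch below wraps a standard kubectl custom-columns query in a small helper. The function name host_network_pods is my own invention, and I am assuming the namespace is passed as the first argument.

```shell
# host_network_pods NAMESPACE
# Print each pod's name, its hostNetwork flag and its IP address.
# Pods that share the node's network namespace show "true";
# regular pods show "<none>" because the field is unset.
host_network_pods() {
  kubectl get pods -n "$1" \
    -o custom-columns='NAME:.metadata.name,HOSTNETWORK:.spec.hostNetwork,IP:.status.podIP'
}
```

Calling host_network_pods kube-system should list the Cilium Agents with true and an IP address in the nodes' 172.18.0.x range, while host_network_pods networking101 should show <none> for the four test pods.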

Now you want to see which IP range is used by each node, right? Let’s check the Cilium Agent on each node, as we found their names above:

$ kubectl exec -it -n kube-system cilium-dprvh -- cilium debuginfo | grep IPAM

Defaulted container "cilium-agent" out of: cilium-agent, config (init), mount-cgroup (init), apply-sysctl-overwrites (init), mount-bpf-fs (init), clean-cilium-state (init), install-cni-binaries (init)

IPAM: IPv4: 5/254 allocated from 10.10.2.0/24,

$ kubectl exec -it -n kube-system cilium-czffc -- cilium debuginfo | grep IPAM

Defaulted container "cilium-agent" out of: cilium-agent, config (init), mount-cgroup (init), apply-sysctl-overwrites (init), mount-bpf-fs (init), clean-cilium-state (init), install-cni-binaries (init)

IPAM: IPv4: 5/254 allocated from 10.10.1.0/24,
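If you have more than a couple of nodes, reading these lines by eye gets tedious. Here is a minimal sketch that extracts the pod CIDR and the allocation counters from the IPAM line. The helper name parse_ipam is hypothetical, and it assumes the line keeps the exact layout shown above.

```shell
# parse_ipam: read `cilium debuginfo` output on stdin and print
# "<pod CIDR> <allocated> <capacity>" for the IPv4 IPAM line.
parse_ipam() {
  awk '/IPAM:/ && /IPv4:/ {
    split($3, used, "/")            # "5/254" -> used[1]=5, used[2]=254
    cidr = $NF
    gsub(/[,\r]+$/, "", cidr)       # drop the trailing comma (and any CR from a TTY)
    print cidr, used[1], used[2]
  }'
}

# Sample lines copied from the output above.
printf '%s\n' 'IPAM: IPv4: 5/254 allocated from 10.10.2.0/24,' | parse_ipam
printf '%s\n' 'IPAM: IPv4: 5/254 allocated from 10.10.1.0/24,' | parse_ipam
```

Against a live cluster you would pipe the real command into it instead, for example kubectl exec -n kube-system cilium-dprvh -- cilium debuginfo | parse_ipam.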

You can now see the different IP subnet on each node. In my previous blog post I told you that an IP address belongs to a group, and that the group is defined by the subnet mask. Here the subnet mask is /24, which means that on the first node any address starting with 10.10.2 belongs to the same group. For the second node it is 10.10.1, so the two nodes are in separate groups, or IP subnets.

What about checking the interfaces now, which are the doors in our drawing?
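To make the "same group" idea concrete, here is a small sketch that tests whether an IPv4 address falls inside a subnet. The function in_subnet is a name I made up for illustration. It converts both addresses to integers and compares them after dropping the host part of the address.

```shell
# in_subnet IP CIDR: exit 0 if IP falls inside CIDR (IPv4 only).
in_subnet() {
  awk -v ip="$1" -v cidr="$2" '
    function to_int(addr,   o) {
      split(addr, o, ".")
      return ((o[1] * 256 + o[2]) * 256 + o[3]) * 256 + o[4]
    }
    BEGIN {
      split(cidr, parts, "/")
      block = 2 ^ (32 - parts[2])     # number of addresses in the subnet
      same = (int(to_int(ip) / block) == int(to_int(parts[1]) / block))
      if (same) exit 0
      exit 1
    }'
}

# The four pod IPs from our cluster, tested against the range of mycluster-worker.
for pod_ip in 10.10.2.117 10.10.2.121 10.10.1.164 10.10.1.155; do
  if in_subnet "$pod_ip" 10.10.2.0/24; then
    echo "$pod_ip is in 10.10.2.0/24"
  else
    echo "$pod_ip is not in 10.10.2.0/24"
  fi
done
```

With a /24 mask the last 8 bits are the host part, so only the two addresses starting with 10.10.2 are reported as members.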

Diving into the interfaces configuration

Let’s explore our buildings and see what we could find out! We are going to start with our four pods:

$ kubectl get po -n networking101 -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

busybox-c8bbbbb84-fmhwc 1/1 Running 1 (125m ago) 4d1h 10.10.1.164 mycluster-worker2 <none> <none>

busybox-c8bbbbb84-t6ggh 1/1 Running 1 (125m ago) 4d1h 10.10.2.117 mycluster-worker <none> <none>

netshoot-7d996d7884-fwt8z 1/1 Running 0 103m 10.10.2.121 mycluster-worker <none> <none>

netshoot-7d996d7884-gcxrm 1/1 Running 0 103m 10.10.1.155 mycluster-worker2 <none> <none>

$ kubectl exec -it -n networking101 busybox-c8bbbbb84-t6ggh -- ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

8: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue qlen 1000

link/ether 9e:80:70:0d:d9:37 brd ff:ff:ff:ff:ff:ff

inet 10.10.2.117/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::9c80:70ff:fe0d:d937/64 scope link

valid_lft forever preferred_lft forever

$ kubectl exec -it -n networking101 netshoot-7d996d7884-fwt8z -- ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

12: eth0@if13: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ea:c4:71:d6:4f:a0 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.10.2.121/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::e8c4:71ff:fed6:4fa0/64 scope link

valid_lft forever preferred_lft forever

$ kubectl exec -it -n networking101 netshoot-7d996d7884-gcxrm -- ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

12: eth0@if13: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether be:57:3d:54:40:f1 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.10.1.155/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::bc57:3dff:fe54:40f1/64 scope link

valid_lft forever preferred_lft forever

$ kubectl exec -it -n networking101 busybox-c8bbbbb84-fmhwc -- ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

10: eth0@if11: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue qlen 1000

link/ether 2a:7f:05:a0:69:db brd ff:ff:ff:ff:ff:ff

inet 10.10.1.164/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::287f:5ff:fea0:69db/64 scope link

valid_lft forever preferred_lft forever

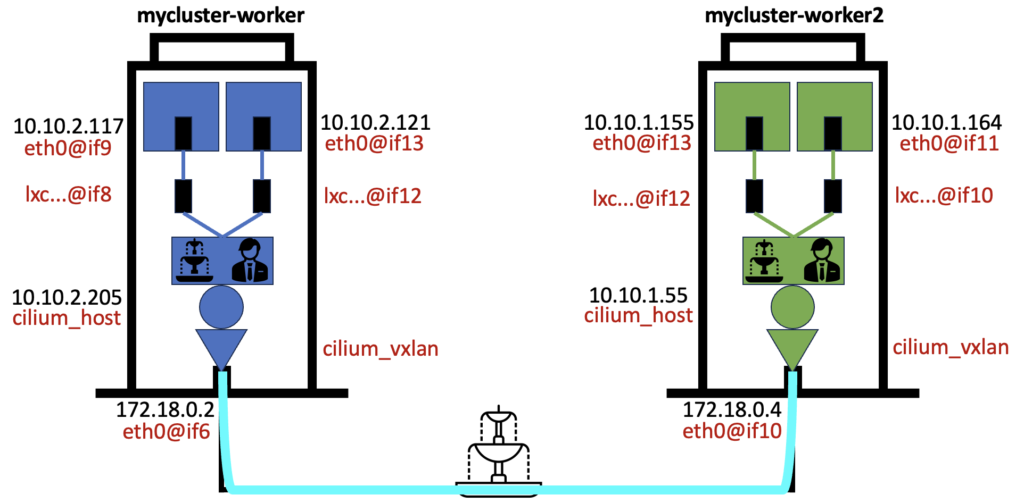

You can see that each container has only one network interface in addition to its local loopback. The format is, for example, 8: eth0@if9, which means the interface in the container has the number 8 and is linked to its pair interface number 9 on the node that hosts it. These are the 2 doors connected by a corridor in my drawing.

Then check the network interfaces of the nodes:
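In the notation N: name@ifM, N is the interface's own index in the namespace where you run ip a, and M is the index of its peer on the other side. The sketch below pulls both numbers out of ip a output. The helper name veth_indexes is an assumption of mine, not part of any tool.

```shell
# veth_indexes: read `ip a` output on stdin and print
# "<name> <own index> <peer index>" for every interface that has an @ifN peer.
veth_indexes() {
  awk '/^[0-9]+: [^ ]+@if[0-9]+:/ {
    idx = $1;  sub(/:$/, "", idx)     # "8:"         -> "8"
    name = $2; sub(/:$/, "", name)    # "eth0@if9:"  -> "eth0@if9"
    split(name, p, "@if")             # p[1]="eth0", p[2]="9"
    print p[1], idx, p[2]
  }'
}

# Interface lines copied from the busybox and netshoot pods on mycluster-worker.
printf '%s\n' \
  '8: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue qlen 1000' \
  '12: eth0@if13: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000' \
  | veth_indexes
```

Interfaces such as lo or cilium_net@cilium_host are skipped on purpose, because they do not carry an @ifN peer index.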

$ sudo docker exec -it mycluster-worker ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: cilium_net@cilium_host: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 5e:84:64:22:90:7f brd ff:ff:ff:ff:ff:ff

3: cilium_host@cilium_net: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ca:7e:e1:cc:4e:74 brd ff:ff:ff:ff:ff:ff

inet 10.10.2.205/32 scope global cilium_host

valid_lft forever preferred_lft forever

4: cilium_vxlan: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether f6:bf:81:9b:e2:c5 brd ff:ff:ff:ff:ff:ff

5: eth0@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 02:42:ac:12:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.18.0.2/16 brd 172.18.255.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fc00:f853:ccd:e793::2/64 scope global nodad

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe12:2/64 scope link

valid_lft forever preferred_lft forever

7: lxc_health@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 8a:de:c1:2c:f5:83 brd ff:ff:ff:ff:ff:ff link-netnsid 1

9: lxc4a891387ff1a@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether d6:21:74:eb:67:6b brd ff:ff:ff:ff:ff:ff link-netns cni-67a5da05-a221-ade5-08dc-64808339ad05

11: lxc5b7b34955e61@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether f2:80:da:a5:17:74 brd ff:ff:ff:ff:ff:ff link-netns cni-0b438679-e5d3-d429-85c0-b6e3c8914250

13: lxc73d2e1d7cf4f@if12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether f6:87:b6:c3:a6:45 brd ff:ff:ff:ff:ff:ff link-netns cni-f608f13c-1869-6134-3d6b-a0f76fd6d483

$ sudo docker exec -it mycluster-worker2 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: cilium_net@cilium_host: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether f2:91:2b:31:1f:47 brd ff:ff:ff:ff:ff:ff

3: cilium_host@cilium_net: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether be:7f:e0:2b:d6:b1 brd ff:ff:ff:ff:ff:ff

inet 10.10.1.55/32 scope global cilium_host

valid_lft forever preferred_lft forever

4: cilium_vxlan: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether e6:c8:8d:5d:1e:2d brd ff:ff:ff:ff:ff:ff

6: lxc_health@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether d2:cf:ec:4c:51:b6 brd ff:ff:ff:ff:ff:ff link-netnsid 1

8: lxcdc5fb9751595@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fe:b4:3a:e0:67:a3 brd ff:ff:ff:ff:ff:ff link-netns cni-c0d4bea2-92fd-03fb-ba61-3656864d8bd7

9: eth0@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 02:42:ac:12:00:04 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.18.0.4/16 brd 172.18.255.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fc00:f853:ccd:e793::4/64 scope global nodad

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe12:4/64 scope link

valid_lft forever preferred_lft forever

11: lxc174c023046ff@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ae:7a:b9:6b:b3:c1 brd ff:ff:ff:ff:ff:ff link-netns cni-4172177b-df75-61a8-884c-f9d556165df2

13: lxce84a702bb02c@if12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 92:65:df:09:dd:28 brd ff:ff:ff:ff:ff:ff link-netns cni-d259ef79-a81c-eba6-1255-6e46b8d1c779

On each node there are several interfaces to notice. I’ll take the first node as an example:

- eth0@if6: As our Kubernetes cluster is created with Kind, a node is actually a container (and this interface opens a corridor to its pair interface on my laptop). If it feels like the movie Inception, well, it is a perfectly correct comparison! This interface is the main door of the building.

- lxc4a891387ff1a@if8: This is interface number 9 on the node. It is paired with interface number 8 (eth0) of the left container above.

- lxc73d2e1d7cf4f@if12: This is interface number 13 on the node. It is paired with interface number 12 (eth0) of the right container above.

- cilium_host@cilium_net: This is the circle interface in my drawing that allows the routing to/from other nodes in our cluster.

- cilium_vxlan: This is the rectangle in my drawing and is the tunnel interface that will transport you to/from the other nodes in our cluster.
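Matching a pod's door to the node's door by hand works for two pods, but it can be automated. Here is a rough sketch, assuming you already know the pod interface's own index and peer index from its ip a output. The helper node_side_of is hypothetical and only looks at index numbers.

```shell
# node_side_of IDX PEER: read a node's `ip a` output on stdin and print the
# name of the interface whose index is PEER and whose own peer index is IDX.
node_side_of() {
  awk -v idx="$1" -v peer="$2" '
    /^[0-9]+: [^ ]+@if[0-9]+:/ {
      n = $1;    sub(/:$/, "", n)
      name = $2; sub(/:$/, "", name)
      split(name, p, "@if")
      if (n + 0 == peer + 0 && p[2] + 0 == idx + 0) print p[1]
    }'
}

# Interface lines from `docker exec mycluster-worker ip a` above (shortened).
worker_links='9: lxc4a891387ff1a@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
11: lxc5b7b34955e61@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
13: lxc73d2e1d7cf4f@if12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500'

# busybox-c8bbbbb84-t6ggh reported "8: eth0@if9"
printf '%s\n' "$worker_links" | node_side_of 8 9
# netshoot-7d996d7884-fwt8z reported "12: eth0@if13"
printf '%s\n' "$worker_links" | node_side_of 12 13
```

Checking both directions (the node index equals the pod's peer index, and the node's peer index equals the pod's own index) avoids a false match when two namespaces happen to reuse the same number.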

Let’s now get the complete picture by updating our drawing with this information:

Wrap up

With this foundational knowledge, you now have all the key elements to understand the communication between pods on the same node or on different nodes. This is what we will look at in my next blog post. Stay tuned!
