The next version of Windows Server will bring some interesting features for WSFC architectures. One of them is a new quorum type, “Node majority and cloud witness”, which addresses the many cases where a third datacenter is required to achieve a truly resilient quorum but is not available.

Let’s imagine the following scenario, which may apply to either a SQL Server availability group or a SQL Server FCI. Say you have to implement a geo-cluster of 4 nodes spread across two datacenters, with 2 nodes in each. To keep quorum if the network link between the two datacenters breaks, adding a witness is mandatory, even if you rely on the dynamic node weight feature. But where should you put it? Hosting the witness in a third datacenter seems to be the best solution but, as you may imagine, it is costly and not affordable for many customers.
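To make the vote arithmetic behind this scenario concrete, here is a minimal sketch. It assumes plain static votes and a strict-majority rule, and it deliberately ignores dynamic weight adjustment. The function name `has_quorum` and the site labels are illustrative only, not part of any cluster API.

```python
def has_quorum(votes_reachable: int, total_votes: int) -> bool:
    """A partition keeps quorum only with a strict majority of all votes."""
    return votes_reachable > total_votes // 2


# Four nodes, two per datacenter, no witness: 4 votes in total.
# If the inter-datacenter link breaks, each side sees only 2 of 4 votes.
no_witness = {site: has_quorum(2, 4) for site in ("DC1", "DC2")}

# Same split with a witness hosted outside both datacenters: 5 votes.
# Only the side that can still reach the witness holds 3 of 5 votes.
with_witness = {
    "DC1 (reaches witness)": has_quorum(2 + 1, 5),
    "DC2": has_quorum(2, 5),
}

print(no_witness)
print(with_witness)
```

In this simplified model, neither side has a majority without the witness, while the witness vote breaks the tie for whichever side can still reach it.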

Using a cloud witness in this case might be a very interesting workaround. Indeed, a cloud witness consists of a blob storage inside a storage account’s container. From cost perspective, it is a very cheap solution because you have to pay only for the storage space you will use (first 1TB/month – CHF 0.0217 / GB). Let’s take a look at the storage space consumed by my cloud witness from my storage account:

Interesting, isn’t it? To implement a cloud witness, you have to meet the following requirements:

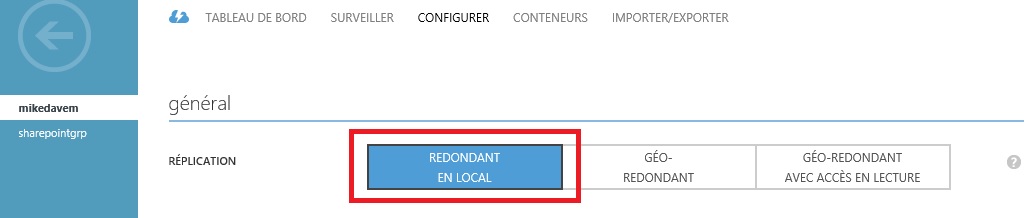

- Your storage account must be configured as locally redundant storage (LRS) because the created blob file is used as the arbitration point, which requires some consistency guarantees when reading the data. With LRS, all data in the storage account is made durable by replicating transactions synchronously. LRS doesn’t protect against a complete regional disaster, but that may be acceptable in our case because the cloud witness is also a dynamic weight-based feature.

- A special container, called msft-cloud-witness, is created for this purpose and contains the blob file linked to the cloud witness.

How to configure my cloud witness?

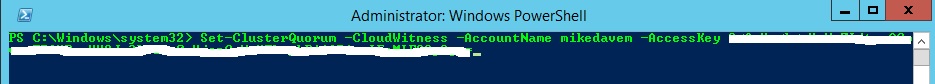

In the same way as before. In the GUI, select the quorum type you want to use and then provide the storage account information (storage account name and access key). You may also prefer to configure your cloud witness with the PowerShell cmdlet Set-ClusterQuorum, as follows:

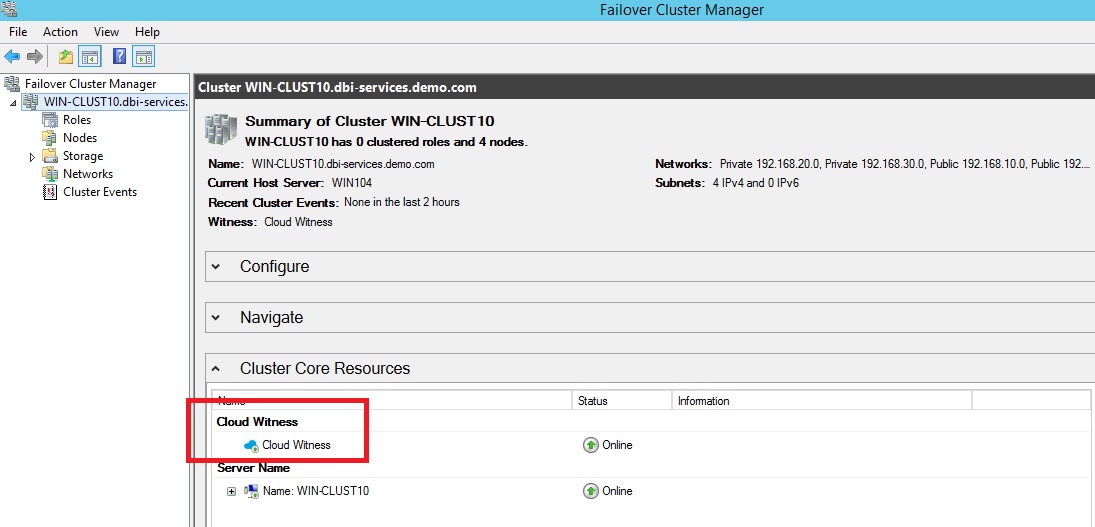

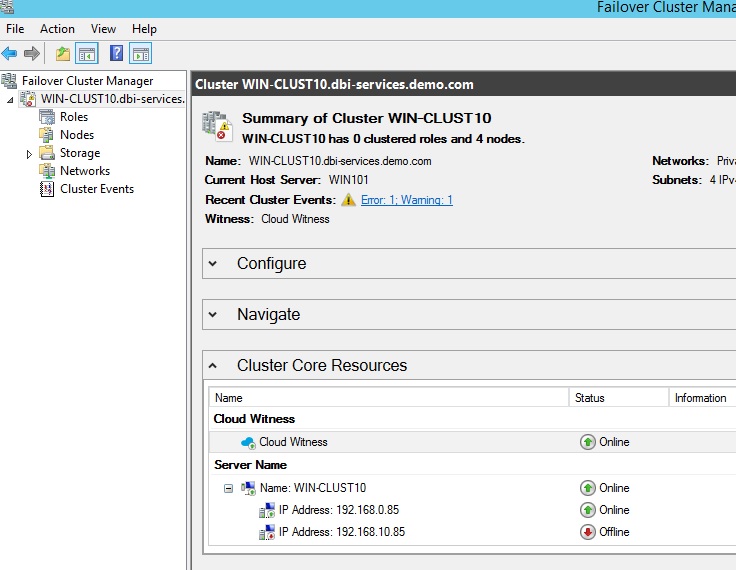

After the cloud witness is configured, a corresponding core resource is created in an online state, as follows:

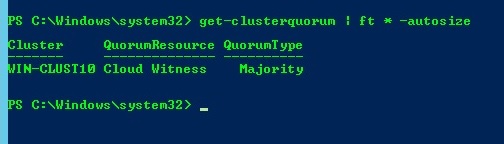

By using PowerShell:

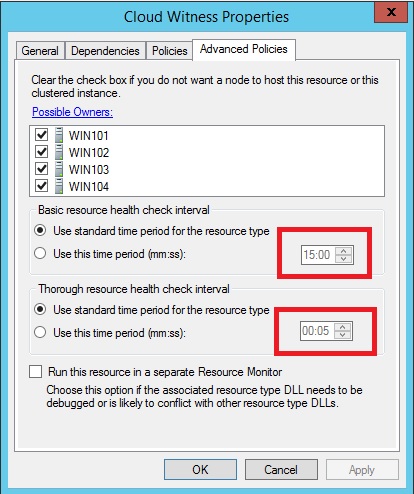

Let’s have a deeper look at this core resource, especially the following advanced policies parameters isAlive() and looksAlive() configuration:

We may notice that the basic resource health check interval default value is configured to 15 min. Hmm, I guess that this value will probably be customized according to the customer architecture configuration.

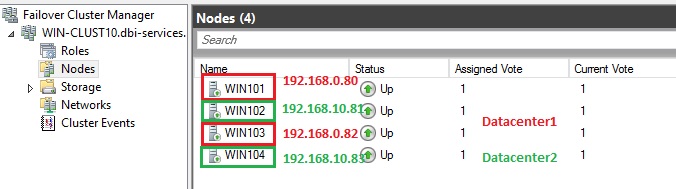

Let’s go ahead and perform some basic tests on my lab architecture. I have configured a multi-subnet failover cluster that includes four nodes across two (simulated) datacenters. Then I implemented a cloud witness hosted in my storage account “mikedavem”. You may find a simplified picture of my environment below:

…

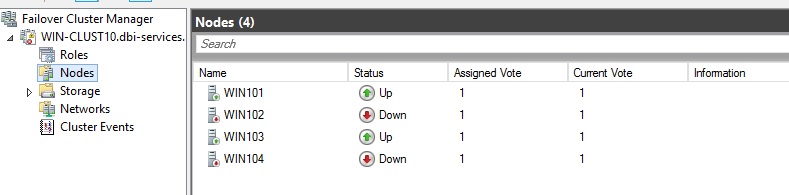

You may notice that, because I implemented a cloud witness, the system changes the overall node weight configuration (4 nodes + 1 witness = 5 votes). In addition, if the network between my 2 datacenters fails, I want to prioritize the first datacenter in terms of availability. To meet this requirement, I used the new cluster property LowerQuorumPriorityNodeID to lower the priority of the WIN104 cluster node.

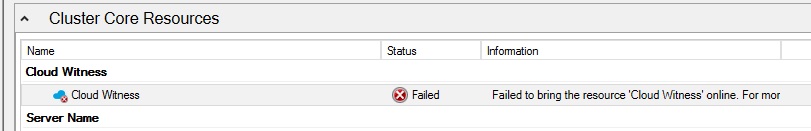

At this point, we are ready to perform our first test: simulating a failure of the cloud witness.

The system then recalculates the overall node weight configuration to achieve maximum quorum resiliency. As expected, the node weight of the WIN104 cluster node changes from 1 to 0 because it has the lower priority.
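The recalculation observed in this first test can be modeled roughly as follows. This is a simplified sketch of the idea (keep the total number of votes odd by removing the vote of the low-priority node), not the actual cluster algorithm. The function `rebalance` is hypothetical, and so are the node names WIN101 to WIN103; only WIN104 comes from my lab.

```python
from typing import Dict, List


def rebalance(nodes: List[str], witness_online: bool, low_priority: str) -> Dict[str, int]:
    """Return a node-weight map whose total vote count is odd.

    Simplified model: every node starts with one vote. If the nodes plus
    the witness (when online) add up to an even total, the vote of the
    low-priority node is removed.
    """
    weights = {name: 1 for name in nodes}
    total_votes = len(nodes) + (1 if witness_online else 0)
    if total_votes % 2 == 0:
        weights[low_priority] = 0
    return weights


# Hypothetical node names, except WIN104.
nodes = ["WIN101", "WIN102", "WIN103", "WIN104"]

# Witness online: 4 node votes + 1 witness vote = 5, nothing to adjust.
healthy = rebalance(nodes, witness_online=True, low_priority="WIN104")

# Witness failed: 4 votes would be even, so WIN104 loses its vote.
degraded = rebalance(nodes, witness_online=False, low_priority="WIN104")

print(healthy)
print(degraded)
```

Under these assumptions, the degraded configuration leaves 3 node votes, which matches the change from 1 to 0 seen for WIN104.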

The second test consists of simulating a network failure between the two datacenters. Once again, as expected, the first partition of the WSFC in datacenter1 stays online whereas the second partition goes offline, in accordance with the node weight priority configuration.

Is the cloud witness dynamic behavior suitable for minimal configurations?

I wrote a blog post in the past about issues (maybe “behavior” is the more suitable word here) with the dynamic witness and minimal configurations of only 2 cluster nodes. I hoped to see an improvement on that side, but unfortunately there is none. Perhaps with the RTM release … wait and see.

Update 09.05.2017

No changes will be applied, because of the cluster philosophy described by Microsoft here.

Happy clustering!

By David Barbarin
