In the last post we looked at how you can use AWS Storage Gateway as a File gateway to store your PostgreSQL backups safely offsite on AWS S3. Another method would be to use a “Cached Volume gateway” instead of the File gateway we used in the last post. A volume gateway does not provide access via NFS or SMB; it provides a volume over iSCSI, and the on-prem machines work directly against that volume. Let’s see how that works.

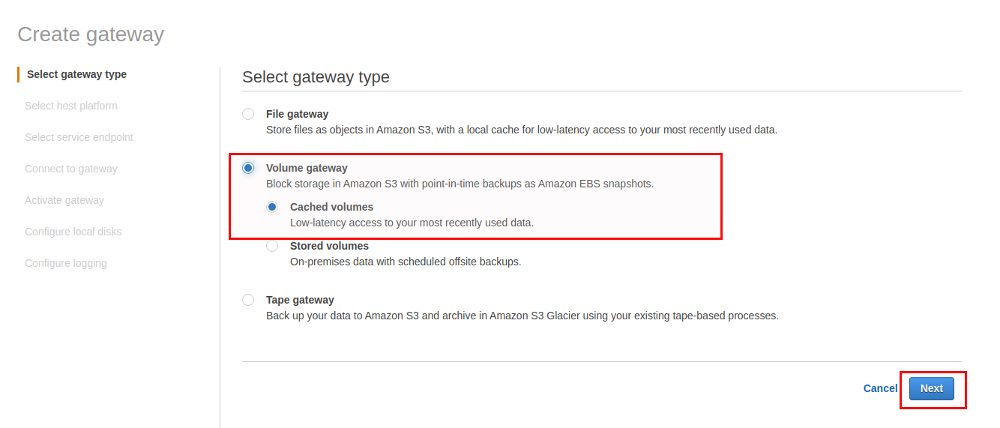

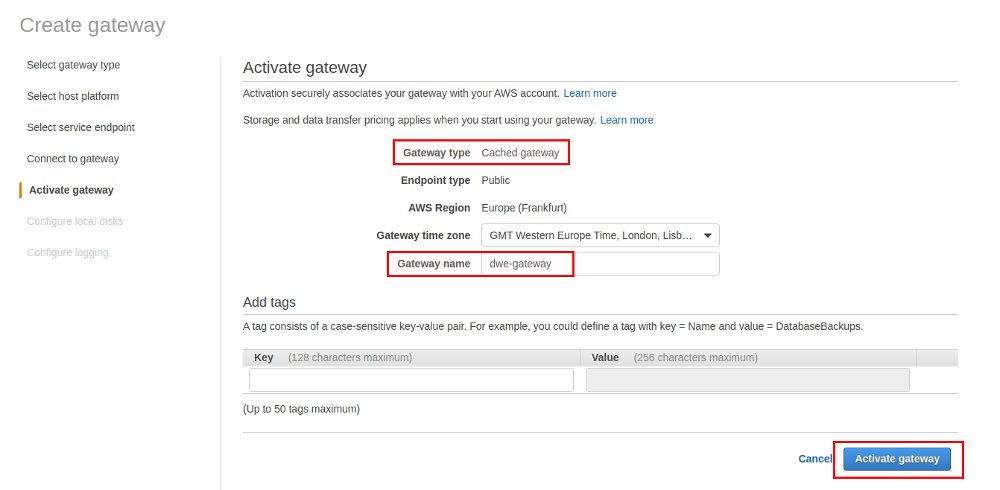

The procedure for creating a Volume gateway is more or less the same as the procedure we used in the last post, except that we are now going for the “Volume gateway” instead of the “File gateway”:

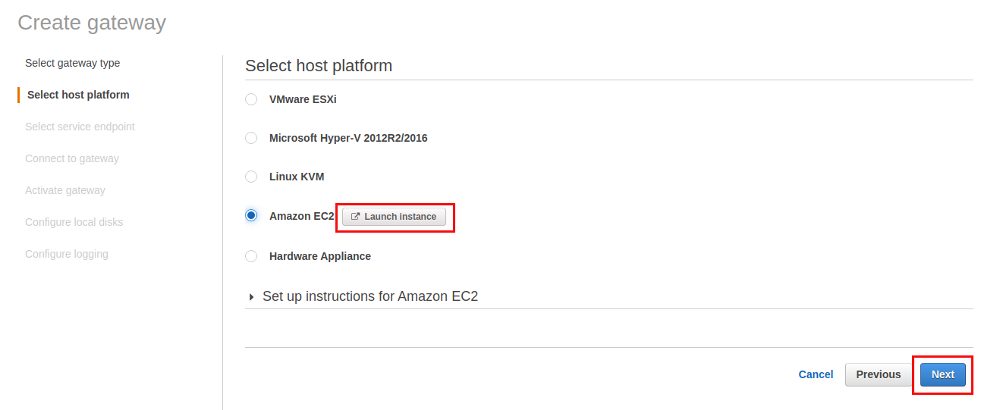

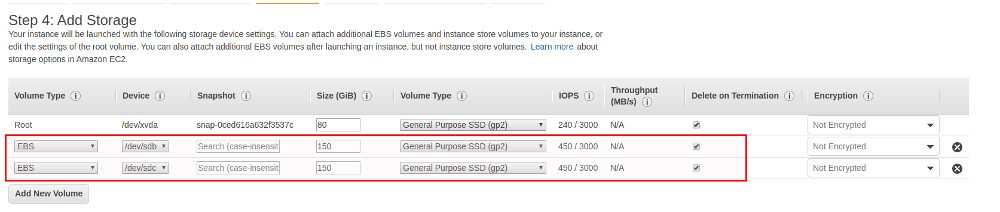

For setting up the EC2 instance you can refer to the last post, except that you’ll need two additional disks and not only one:

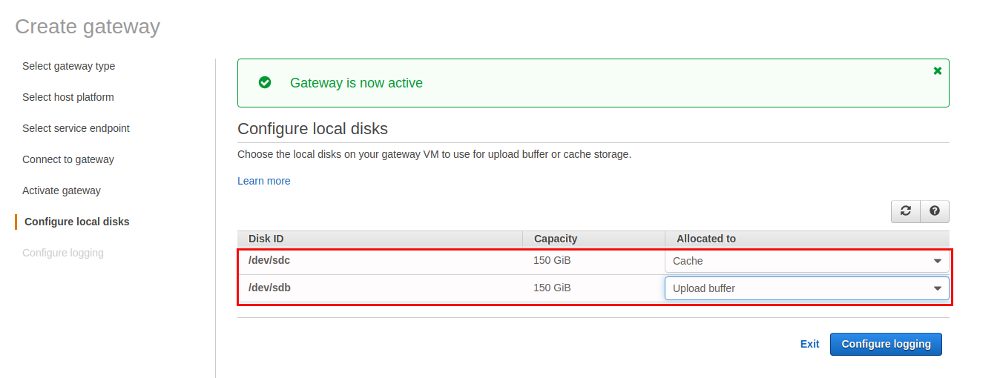

The first additional disk will be used as the cache and the second one as the upload buffer. The upload buffer stages the data before it is uploaded, encrypted, to S3; see the AWS Storage Gateway documentation for the concepts.

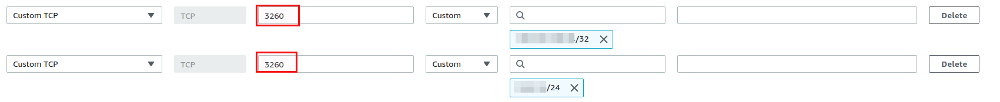

Make sure that you add the iSCSI port (3260) to the inbound rules of the security group which is attached to the EC2 instance:

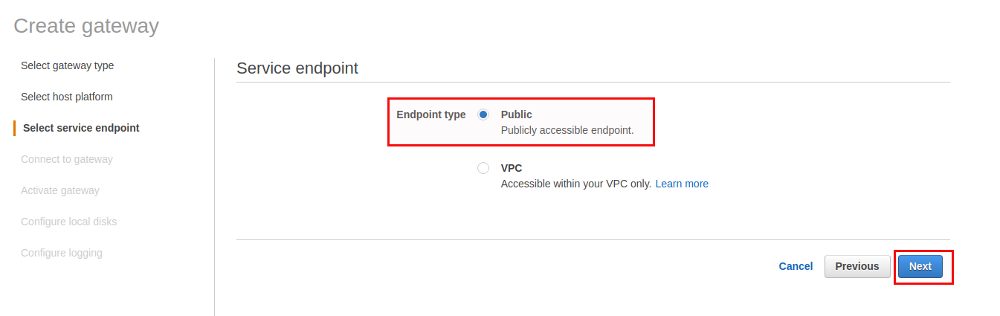
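Once the rule is in place, a quick reachability check from the client can save some debugging later. The following is only a minimal sketch: it assumes bash and the coreutils `timeout` command are available on the client, and `iscsi_port_open` is a hypothetical helper name, not something that ships with the iSCSI tools.

```shell
# Hypothetical helper: returns 0 if a TCP connection to HOST:PORT can be opened.
# Assumes bash (for its /dev/tcp redirection) and coreutils timeout are installed.
iscsi_port_open() {
  if [ "$#" -ne 2 ]; then
    echo "usage: iscsi_port_open HOST PORT" >&2
    return 2
  fi
  # bash opens a TCP socket when a redirection targets /dev/tcp/HOST/PORT;
  # timeout gives up after 5 seconds if the packets are silently dropped.
  timeout 5 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}
```

For example, `iscsi_port_open xxx.xxx.xxx.xxx 3260 && echo "port 3260 reachable"`, with the address of the gateway in place of the placeholder. A non-zero return only says that the TCP connection could not be opened; it does not say why.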

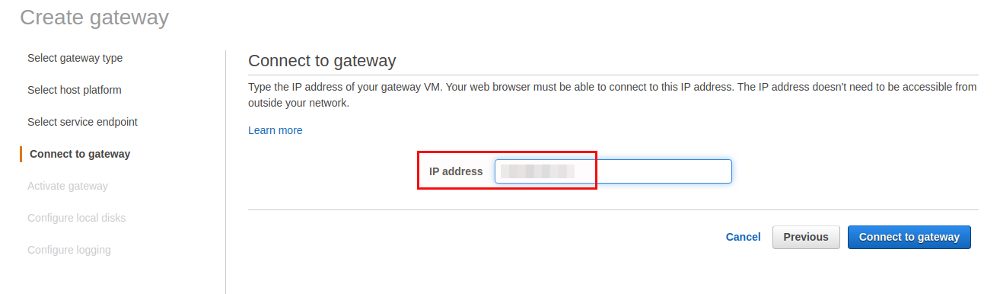

Again we’ll go for public access as we want to use the volumes on a local VM.

Assign the disks for the cache and the upload buffer and go with the default for the next screens:

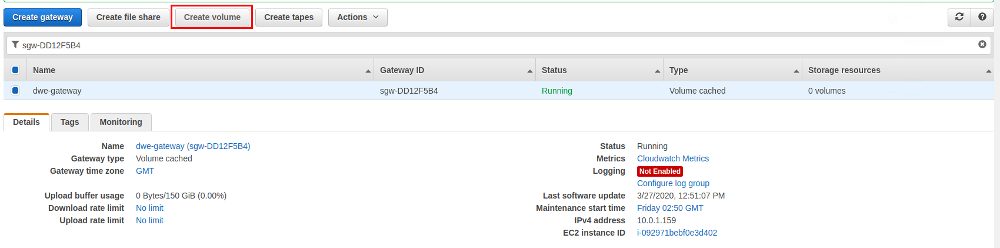

Last time we created a file share and now we are going to create a volume:

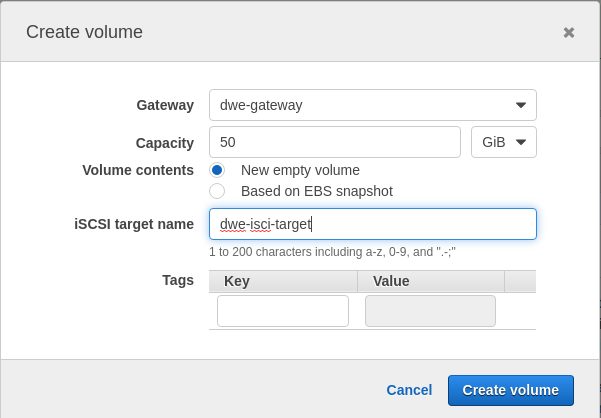

We are going for a brand new volume but we could have also used a snapshot as a base:

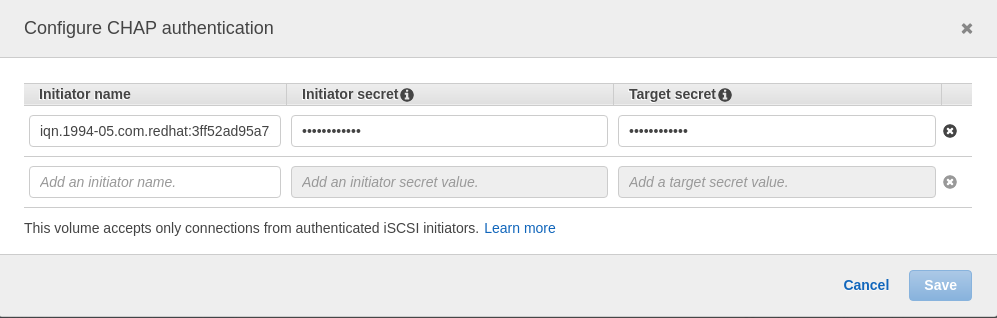

You can find the initiator name on the local machine:

postgres@centos8pg:/home/postgres/ [pg13] cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.1994-05.com.redhat:3ff52ad95a75
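If you want only the IQN, for example to paste it into the console, the `InitiatorName=` prefix can be stripped with `sed`. This is a small sketch; `initiator_name` is a hypothetical helper, and the default path is the one shown above.

```shell
# Hypothetical helper: print the IQN from an initiatorname.iscsi-style file.
# Defaults to the path shown above; pass another file as the first argument.
initiator_name() {
  sed -n 's/^InitiatorName=//p' "${1:-/etc/iscsi/initiatorname.iscsi}"
}
```

Called without arguments on the machine above, it should print just the `iqn.…` value.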

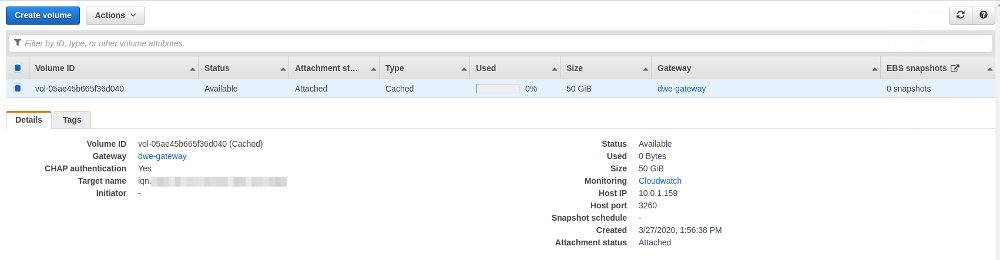

… and the new volume is ready:

Now we need to attach the volume to our local machine. First of all we need to install the required packages and then enable and start the service:

postgres@centos8pg:/home/postgres/ [pgdev] sudo dnf install -y iscsi-initiator-utils

postgres@centos8pg:/home/postgres/ [pgdev] sudo systemctl enable iscsid.service

Created symlink /etc/systemd/system/multi-user.target.wants/iscsid.service → /usr/lib/systemd/system/iscsid.service.

postgres@centos8pg:/home/postgres/ [pgdev] sudo systemctl start iscsid.service

postgres@centos8pg:/home/postgres/ [pgdev] sudo systemctl status iscsid.service

● iscsid.service - Open-iSCSI

Loaded: loaded (/usr/lib/systemd/system/iscsid.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2020-03-27 14:18:04 CET; 3s ago

Docs: man:iscsid(8)

man:iscsiadm(8)

Main PID: 5289 (iscsid)

Status: "Ready to process requests"

Tasks: 1

Memory: 3.6M

CGroup: /system.slice/iscsid.service

└─5289 /usr/sbin/iscsid -f

Mar 27 14:18:04 centos8pg systemd[1]: Starting Open-iSCSI...

Mar 27 14:18:04 centos8pg systemd[1]: Started Open-iSCSI.

Check that you are able to discover the target using the public IP address of the Storage Gateway EC2 instance:

postgres@centos8pg:/home/postgres/ [pgdev] sudo /sbin/iscsiadm --mode discovery --type sendtargets --portal xxx.xxx.xxx.xxx:3260

10.0.1.159:3260,1 iqn.xxxx-xx.com.amazon:dwe-isci-target
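Each discovery record has the form `<ip>:<port>,<portal group tag> <target name>`. The first field is the portal the target advertises, and it is worth a look because it is what the login further down is pointed at. A minimal sketch for splitting the record, assuming one record per line as in the output above (`parse_discovery` is a hypothetical helper):

```shell
# Hypothetical helper: turn sendtargets records of the form
#   <ip>:<port>,<portal group tag> <target IQN>
# into "portal target" pairs, one per line. Lines with another shape are skipped.
parse_discovery() {
  awk 'NF == 2 { split($1, p, ","); print p[1], $2 }'
}
```

It reads from standard input, so the discovery command above can simply be piped into it.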

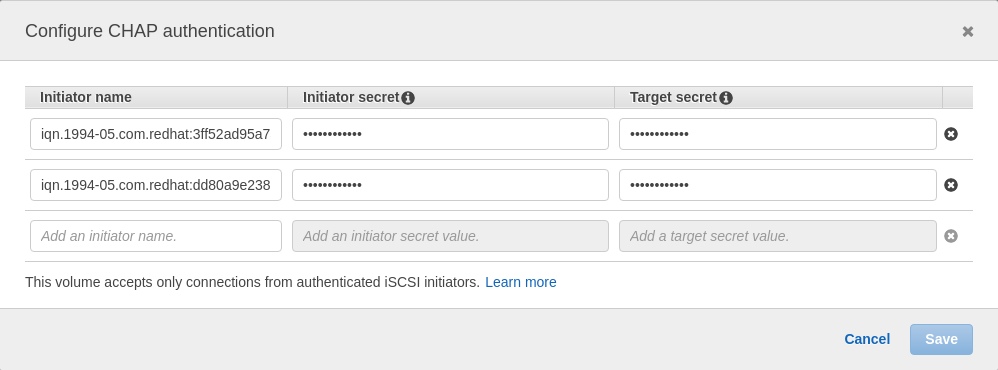

When that is working fine you should configure CHAP authentication (using the secrets we defined above):

postgres@centos8pg:/home/postgres/ [pg13] sudo vi /etc/iscsi/iscsid.conf

    node.session.auth.authmethod = CHAP

    # To set a CHAP username and password for initiator
    # authentication by the target(s), uncomment the following lines:

    node.session.auth.username = iqn.1994-05.com.redhat:3ff52ad95a75

    node.session.auth.password = 123456789012

    # To set a CHAP username and password for target(s)
    # authentication by the initiator, uncomment the following lines:

    node.session.auth.username_in = iqn.xxx-xx.com.amazon:dwe-isci-target

    node.session.auth.password_in = 098765432109

And then the big surprise when you try to log in to the target:

postgres@centos8pg:/home/postgres/ [pg13] sudo /sbin/iscsiadm --mode node --targetname iqn.1997-05.com.amazon:dwe-isci-target --portal 10.0.1.159:3260,1 --login

Logging in to [iface: default, target: iqn.1997-05.com.amazon:dwe-isci-target, portal: 10.0.1.159,3260]

iscsiadm: Could not login to [iface: default, target: iqn.1997-05.com.amazon:dwe-isci-target, portal: 10.0.1.159,3260].

iscsiadm: initiator reported error (8 - connection timed out)

iscsiadm: Could not log into all portals

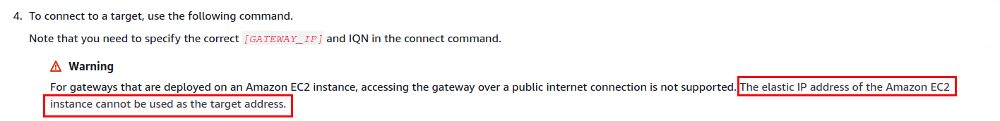

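That of course cannot work from a local machine: the discovery returned the gateway’s private address (10.0.1.159), and the login is attempted against that address. The explanation is in the documentation.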
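The portal in the failed login, 10.0.1.159, lies in the 10.0.0.0/8 private range, so a machine outside the VPC has no route to it unless there is a VPN or a similar link, which would be consistent with the timeout. A small sketch to flag such addresses before trying to log in; `is_private_ipv4` is a hypothetical helper and only covers the RFC 1918 ranges:

```shell
# Hypothetical helper: returns 0 if the IPv4 address is in an RFC 1918 range
# (10.0.0.0/8, 172.16.0.0/12 or 192.168.0.0/16), 1 otherwise.
is_private_ipv4() {
  case "$1" in
    10.*|192.168.*) return 0 ;;
    172.1[6-9].*|172.2[0-9].*|172.3[01].*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `is_private_ipv4 10.0.1.159 && echo "private address, only reachable from inside the network"`.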

I’ve quickly started another EC2 instance, installed the same packages, enabled the iSCSI service and added the new initiator name to the CHAP configuration of the volume:

Trying to discover and to log in again from the new EC2 host:

[root@ip-10-0-1-252 ec2-user]# sudo /sbin/iscsiadm --mode discovery --type sendtargets --portal 10.0.1.159:3260

10.0.1.159:3260,1 iqn.1997-05.com.amazon:dwe-isci-target

[root@ip-10-0-1-252 ec2-user]# /sbin/iscsiadm --mode node --targetname iqn.1997-05.com.amazon:dwe-isci-target --portal 10.0.1.159:3260,1 --login

Logging in to [iface: default, target: iqn.1997-05.com.amazon:dwe-isci-target, portal: 10.0.1.159,3260] (multiple)

Login to [iface: default, target: iqn.1997-05.com.amazon:dwe-isci-target, portal: 10.0.1.159,3260] successful.

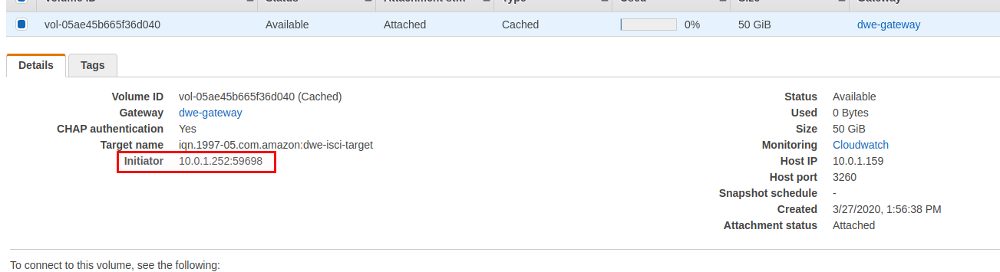

… and now it succeeds. You will see a successful connection also in the volume overview:

You can also confirm that on the client:

[root@ip-10-0-1-75 ec2-user]$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 50G 0 disk

xvda 202:0 0 10G 0 disk

|-xvda1 202:1 0 1M 0 part

`-xvda2 202:2 0 10G 0 part /

[root@ip-10-0-1-75 ec2-user]$ ls -la /dev/sda*

brw-rw----. 1 root disk 8, 0 Mar 27 15:04 /dev/sda

[root@ip-10-0-1-75 ec2-user]$ fdisk -l /dev/sda

Disk /dev/sda: 50 GiB, 53687091200 bytes, 104857600 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Before you can put data on that block device there needs to be a file system on it and it needs to be mounted:

[root@ip-10-0-1-75 ec2-user]$ parted /dev/sda mklabel msdos

Information: You may need to update /etc/fstab.

[root@ip-10-0-1-75 ec2-user]$ parted -a opt /dev/sda mkpart primary xfs 0% 100%

Information: You may need to update /etc/fstab.

[root@ip-10-0-1-75 ec2-user]$ ls -la /dev/sda*

brw-rw----. 1 root disk 8, 0 Mar 27 15:07 /dev/sda

brw-rw----. 1 root disk 8, 1 Mar 27 15:07 /dev/sda1

[root@ip-10-0-1-75 ec2-user]$ mkfs.xfs /dev/sda1

meta-data=/dev/sda1 isize=512 agcount=4, agsize=3276736 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=1, rmapbt=0

= reflink=1

data = bsize=4096 blocks=13106944, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=6399, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@ip-10-0-1-75 ec2-user]$ mount -t xfs /dev/sda1 /var/tmp/sgw/

[root@ip-10-0-1-75 ec2-user]$ df -h | grep sgw

/dev/sda1 50G 390M 50G 1% /var/tmp/sgw
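The mount above does not survive a reboot, and parted already hinted at /etc/fstab. A minimal sketch for generating a matching entry follows. It assumes you want to mount by UUID (device names such as /dev/sda are not guaranteed to stay the same) and to use the `_netdev` mount option so that the mount waits for the network; `fstab_line` is a hypothetical helper, and the mount options are a suggestion, not something taken from the setup above.

```shell
# Hypothetical helper: build an /etc/fstab line for an XFS file system
# that lives on an iSCSI volume.
# $1 = file system UUID, $2 = mount point
fstab_line() {
  printf 'UUID=%s %s xfs defaults,_netdev 0 0\n' "$1" "$2"
}
```

As root, something like `fstab_line "$(blkid -s UUID -o value /dev/sda1)" /var/tmp/sgw` prints the line; review it before appending it to /etc/fstab.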

So now we have a block device that is coming from the storage gateway. Let’s put some data on it and check the statistics in the AWS console:

[root@ip-10-0-1-75 ec2-user]$ dd if=/dev/zero of=/var/tmp/sgw/ff1 bs=1M count=1000

1000+0 records in

1000+0 records out

1048576000 bytes (1.0 GB, 1000 MiB) copied, 27.5495 s, 38.1 MB/s

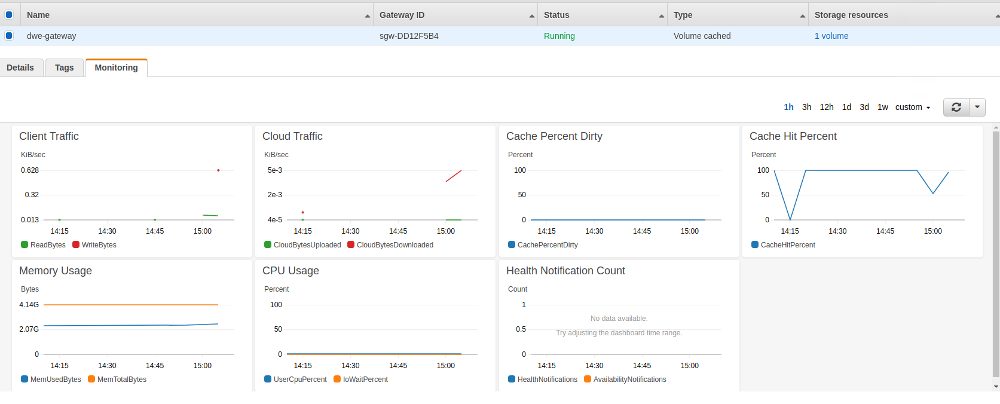

The statistics confirm that data is coming in:

One downside of the volume gateway is that you won’t see anything in the S3 overview. The benefit is that you can take snapshots of the volumes and also clone them. From a pure PostgreSQL backup perspective I would probably use the file gateway, but for other use cases like application servers a cached volume gateway might be the right thing to use if you want to extend your local storage to AWS.
