In the last post we had a quick look at AWS Kinesis data streams and how you can push data to AWS S3 using AWS Firehose. Another great AWS service is AWS Storage Gateway and in this post we’ll use that to push our PostgreSQL backups to AWS. The primary usage of AWS Storage Gateway is to extend you local storage to AWS so that you do not have to extend your local storage on one side and on the other side safely can store data offsite for backup purposes. We are already using that at some of our customers and experiences with that are quite well. One important point to keep in mind when you are using AWS Storage Gateway is: In case you need to restore the data to your on-prem location you will be charged for the outgoing traffic, so it might be a good idea to calculate the costs before you start using it.

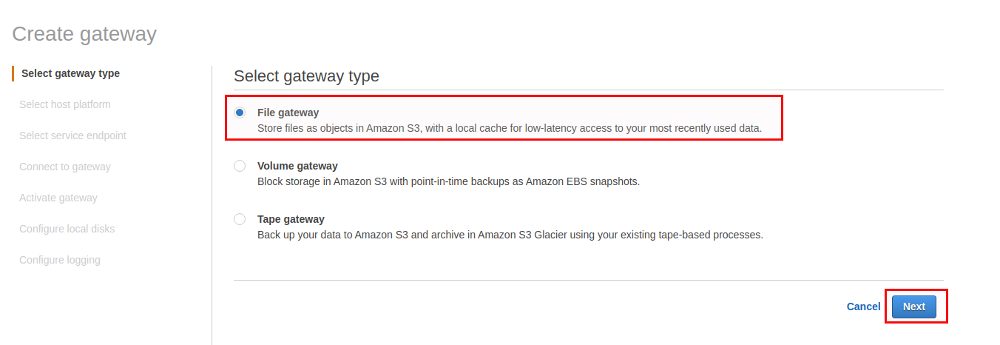

The initial deployment of an AWS Storage Gateway, as usual, is quite simple. Using the AWS console this is matter of a few clicks:

AWS Storage gateway comes with three types you can chose from:

- File gateway: This is what we’ll be using and it provides a file interface to AWS S3 using standard file protocols like NFS and SMB

- Volume gateway: This provides AWS backed cloud volumes that can by mounted over iSCSI, data goes to AWS S3

- Tage gateway: This provides virtual tape storage you can use with your on-prem backup solutions and data goes to AWS Glacier

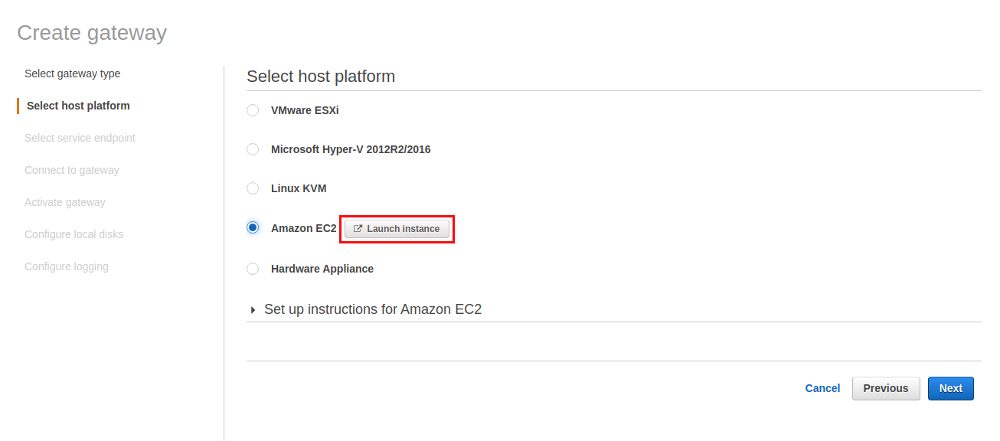

The AWS Storage Gateway comes as an appliance and depending on what you have available on-prem you import that either into your VMware ESXi, Microsoft Hyper-V 2012R2/2016 or KVM based virtual infrastructure. You can also use an EC2 instance and this is what we’ll be using here because it is the easiest way to deploy that for testing:

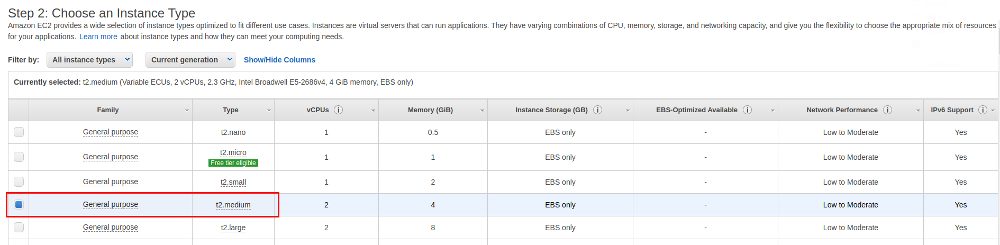

I am not going to provide all the screenshots for setting up the EC2 instance as that is straight forward, just the most important ones. For production usage AWS recommends several instance types. We’ll be using a small one here as it is only a test:

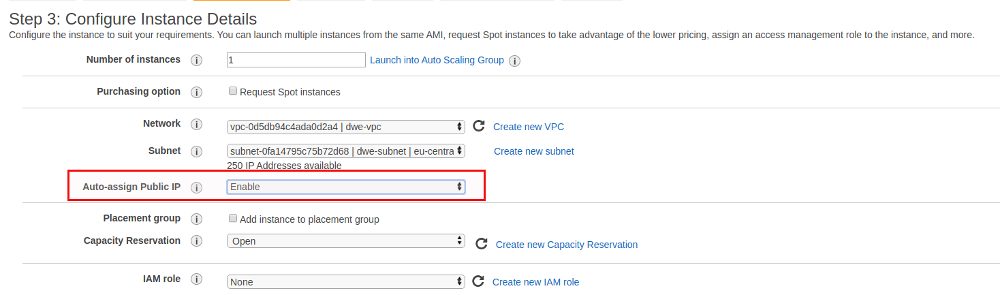

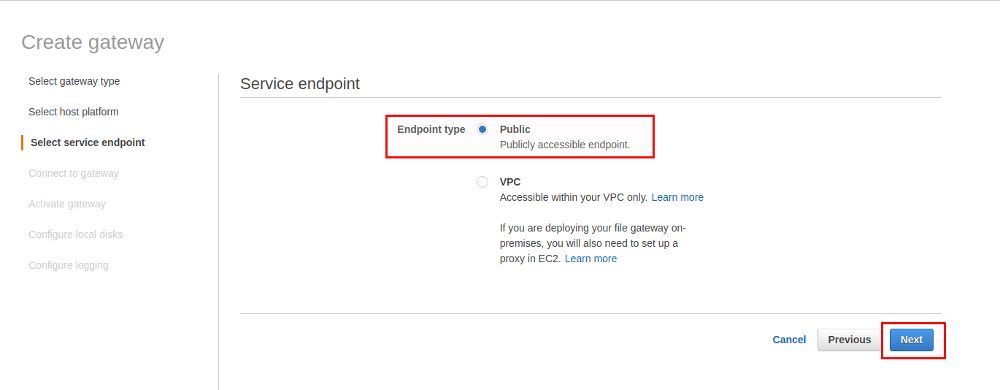

As we’ll be accessing the Storage Gateway from a location outside AWS we need a pubic IP address for the EC2 instance:

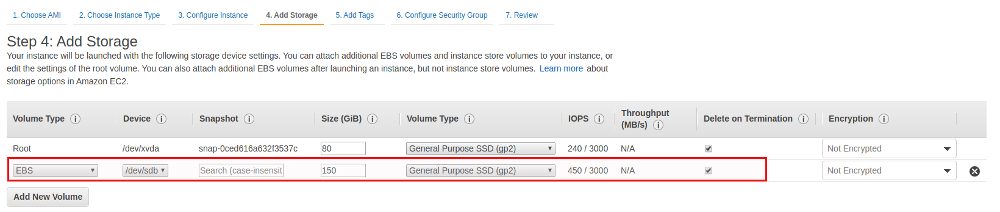

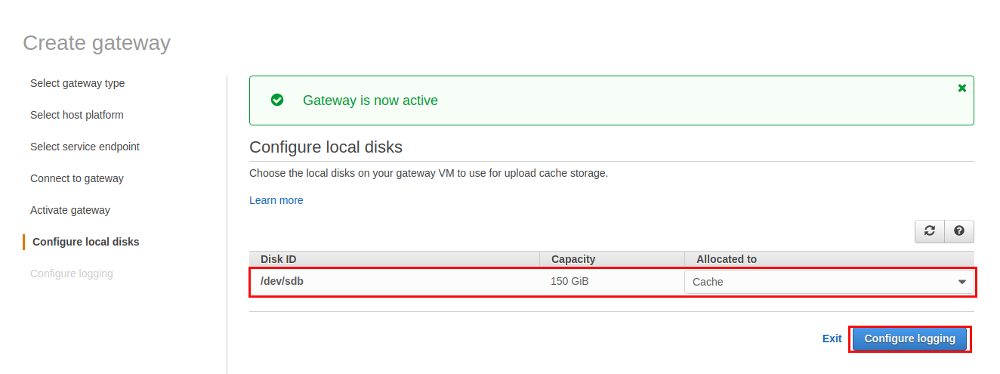

For the File Gateway we need one additional disk with a minimum recommended size of 150GB:

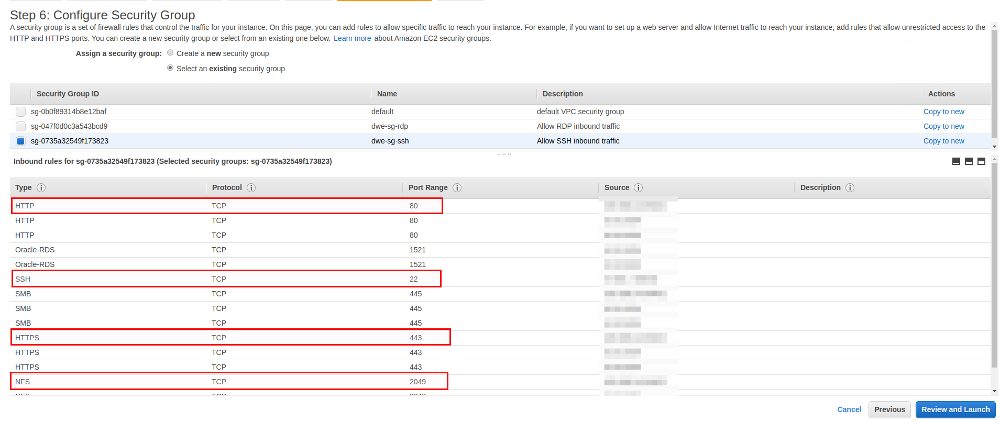

You need to make sure that the attached security group allows access from your local machines, at least for HTTP, HTTPS, NFS and SMB. An overview of the port requirements is here:

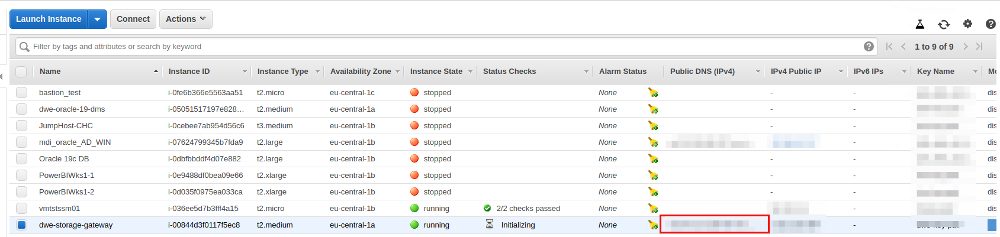

For the remaining EC2 settings this is standard stuff so no screenshots for that. Wait until the EC2 instance is running and make a note of the public IP address (you will need it in the next step of the Storage Gateway configuration):

Once the EC2 instance is ready we can go back to the Storage Gateway configuration and provide the public IP address of the EC2 instance we just created:

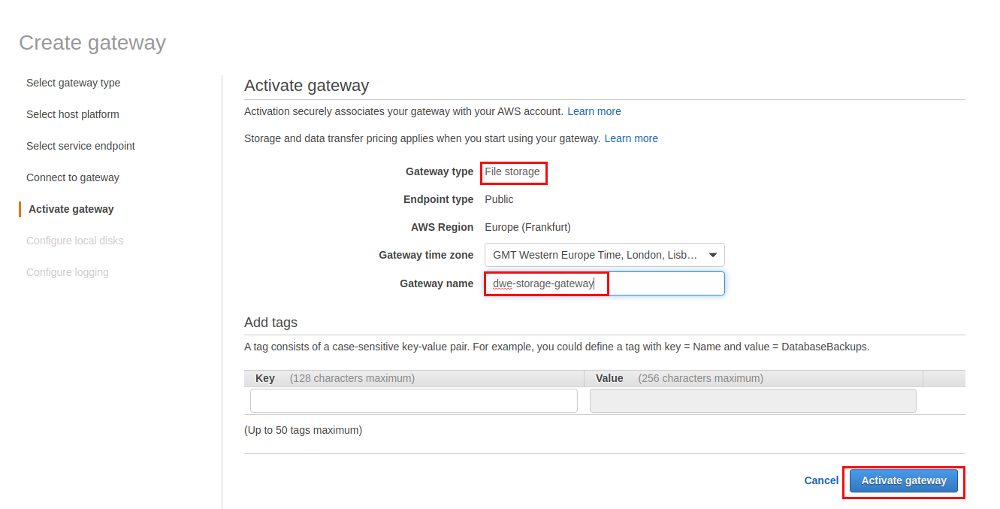

If the connection fails in the next step go back to your security group configuration because then required ports are probably missing. If all is fine the Storage Gateway can be activated in the next screen:

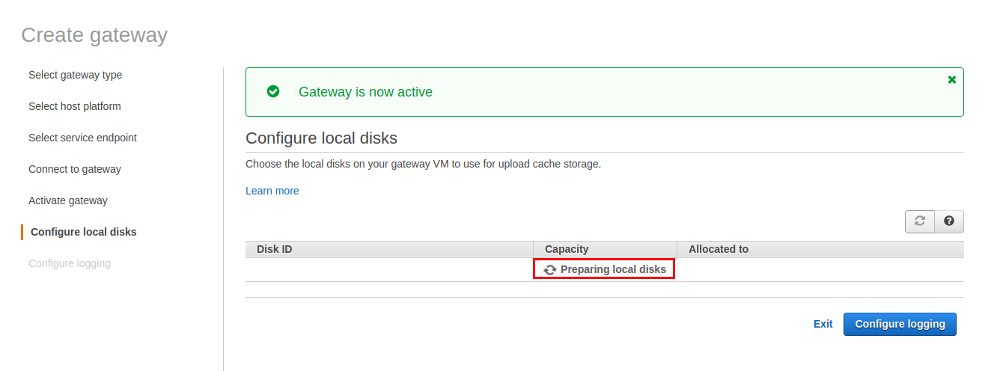

If we had skipped the additional disk setup when we created the EC2 instance we would be stuck in the next step as now we need to tell which disk we will use as the cache:

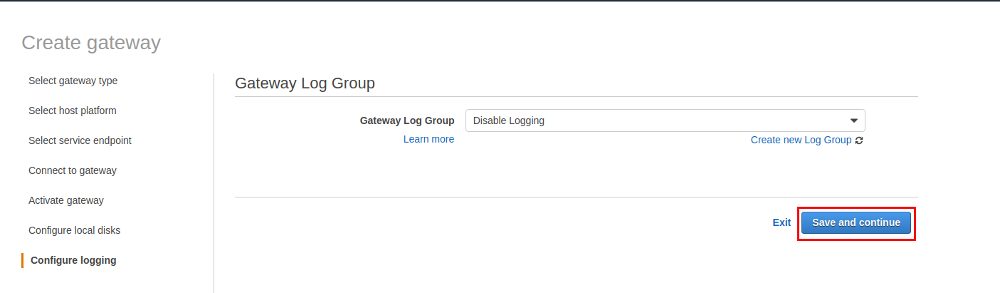

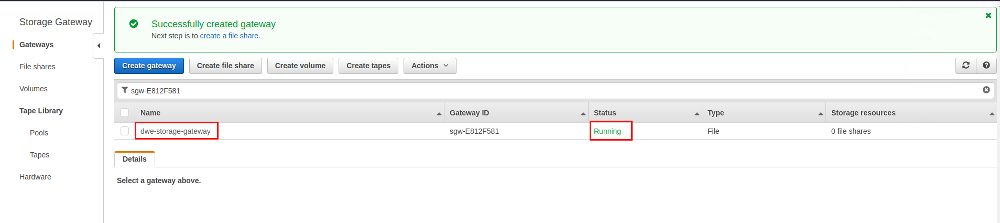

Logging should be enabled for production setups, we’ll skip it for this test and will go ahead and finally create the Storage Gateway:

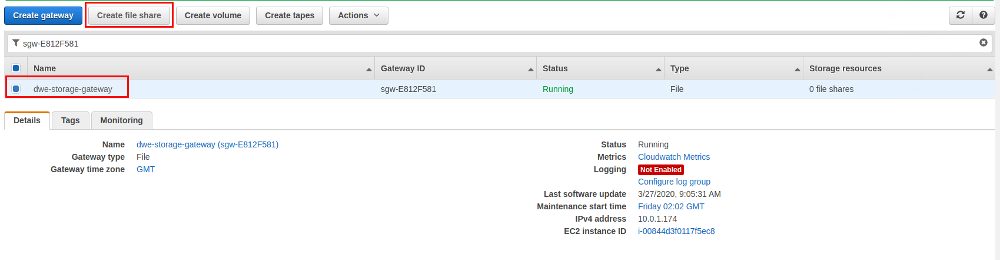

The Storage Gateway is now ready and we can go ahead and create a file share we can later mount on the PostgreSQL machine:

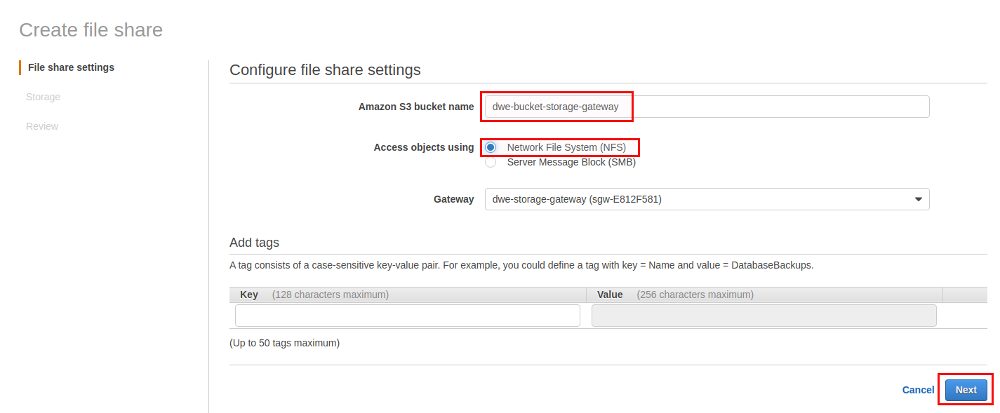

As objects will go to S3 in the background you should have created a S3 bucket you can specify now and as PostgreSQL will be running on a Linux host we’ll go for NFS:

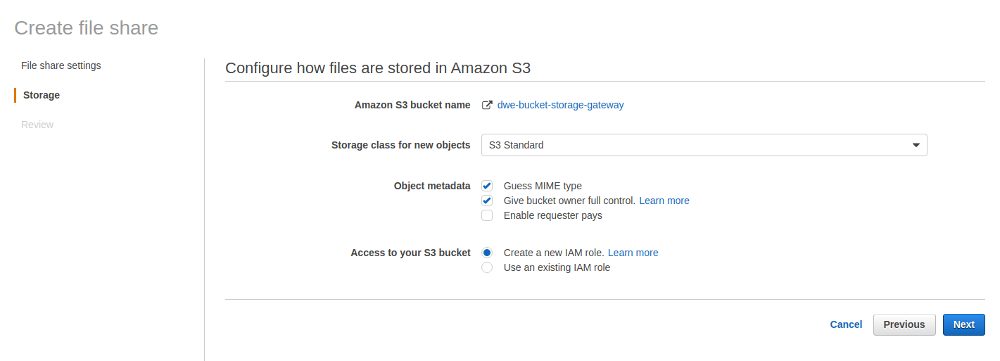

Go with the defaults on the next screen:

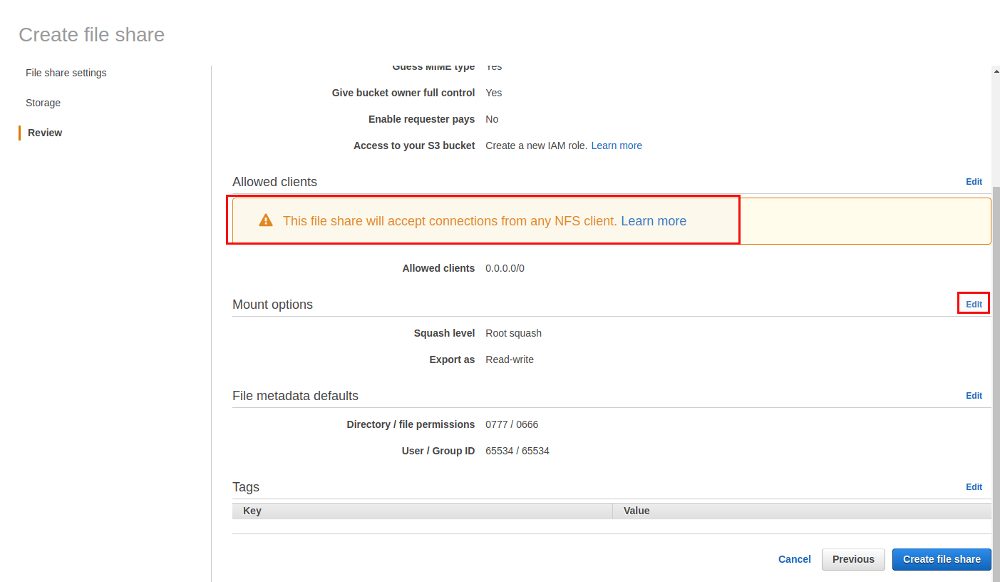

On the summary screen you should adjust the “Allowed clients” so only clients that you really know are safe to connect will be able to use the file share:

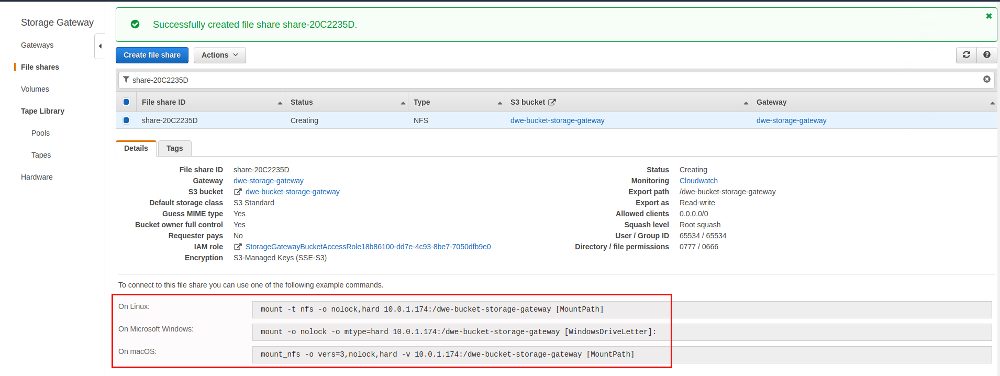

Once the share is ready there will be examples on how you can mount the file share for various operating systems (these examples will show the private IP addresses):

Time to mount the file share on our local PostgreSQL machine:

postgres@centos8pg:/home/postgres/ [pgdev] sudo mkdir /var/tmp/storageGateway postgres@centos8pg:/home/postgres/ [pgdev] sudo mount -t nfs -o nolock,hard xxx.xxx.xxx.xxx:/dwe-bucket-storage-gateway /var/tmp/storageGateway ostgres@centos8pg:/home/postgres/ [pgdev] df -h | grep storageGateway xxx.xxx.xxx.xxx:/dwe-bucket-storage-gateway 8.0E 0 8.0E 0% /var/tmp/storageGateway

This can now be used to write your PostgreSQL backups to and all the files will go to S3 in the background:

postgres@centos8pg:/home/postgres/ [pgdev] mkdir /var/tmp/storageGateway/basebackup_20200327 postgres@centos8pg:/home/postgres/ [pgdev] pg_basebackup -D /var/tmp/storageGateway/basebackup_20200327/ postgres@centos8pg:/home/postgres/ [pg13] ls -la /var/tmp/storageGateway/basebackup_20200327/ total 36 drwxr-xr-x. 1 postgres postgres 0 Mar 27 10:43 . drwxrwxrwx. 1 nobody nobody 0 Mar 27 10:41 .. -rw-------. 1 postgres postgres 224 Mar 27 10:41 backup_label drwx------. 1 postgres postgres 0 Mar 27 10:42 base -rw-------. 1 postgres postgres 33 Mar 27 10:43 current_logfiles drwx------. 1 postgres postgres 0 Mar 27 10:43 global drwx------. 1 postgres postgres 0 Mar 27 10:41 pg_commit_ts drwx------. 1 postgres postgres 0 Mar 27 10:41 pg_dynshmem -rw-------. 1 postgres postgres 4513 Mar 27 10:43 pg_hba.conf -rw-------. 1 postgres postgres 1636 Mar 27 10:43 pg_ident.conf drwxr-xr-x. 1 postgres postgres 0 Mar 27 10:43 pg_log drwx------. 1 postgres postgres 0 Mar 27 10:43 pg_logical drwx------. 1 postgres postgres 0 Mar 27 10:41 pg_multixact drwx------. 1 postgres postgres 0 Mar 27 10:41 pg_notify drwx------. 1 postgres postgres 0 Mar 27 10:43 pg_replslot drwx------. 1 postgres postgres 0 Mar 27 10:41 pg_serial drwx------. 1 postgres postgres 0 Mar 27 10:41 pg_snapshots drwx------. 1 postgres postgres 0 Mar 27 10:43 pg_stat drwx------. 1 postgres postgres 0 Mar 27 10:43 pg_stat_tmp drwx------. 1 postgres postgres 0 Mar 27 10:41 pg_subtrans drwx------. 1 postgres postgres 0 Mar 27 10:43 pg_tblspc drwx------. 1 postgres postgres 0 Mar 27 10:41 pg_twophase -rw-------. 1 postgres postgres 3 Mar 27 10:43 PG_VERSION drwx------. 1 postgres postgres 0 Mar 27 10:43 pg_wal drwx------. 1 postgres postgres 0 Mar 27 10:43 pg_xact -rw-------. 1 postgres postgres 252 Mar 27 10:43 postgresql.auto.conf -rw-------. 1 postgres postgres 27343 Mar 27 10:43 postgresql.conf

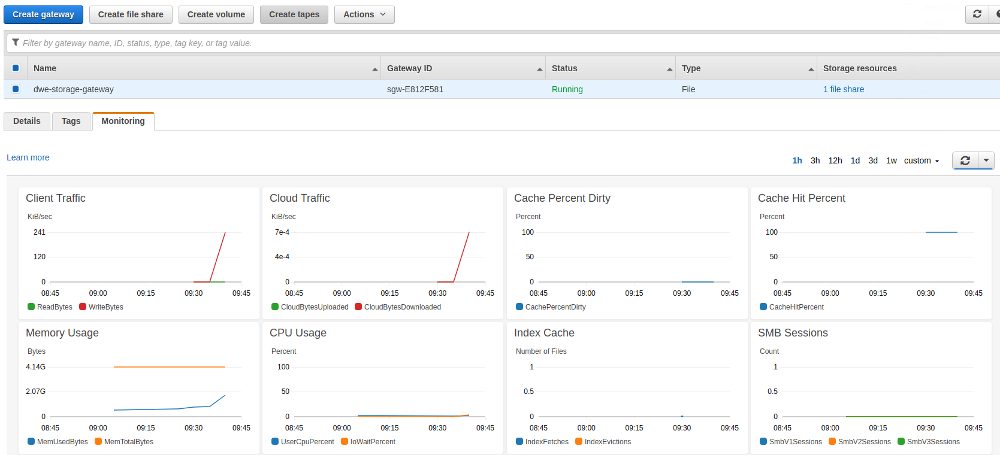

If you wait some time and then check the monitoring section of the Storage Gateway the various graphs should give you an idea what is happening:

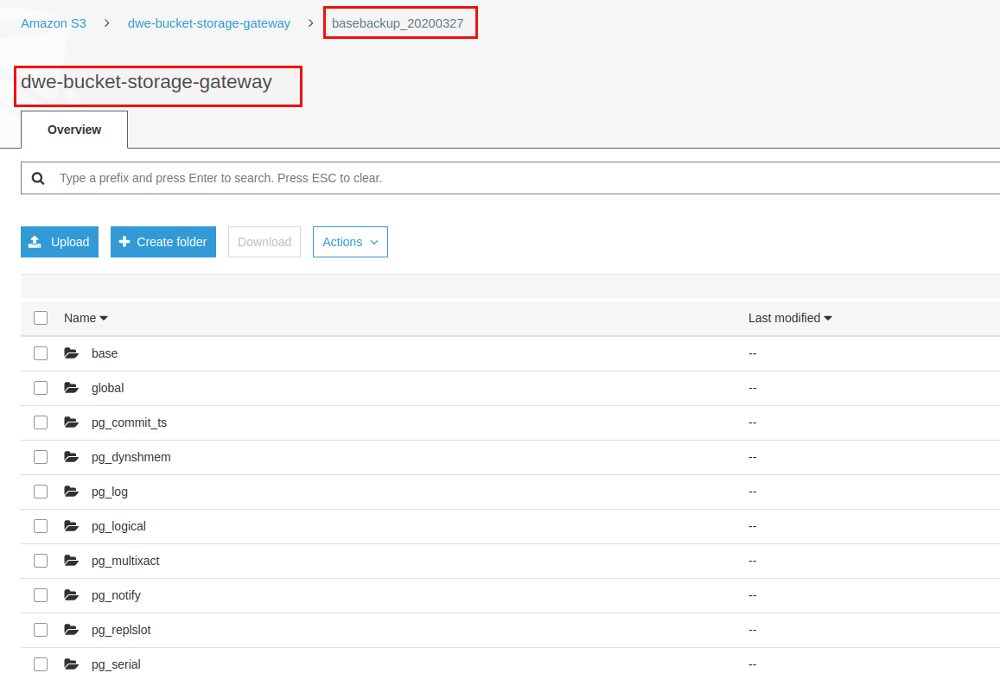

Checking the S3 bucket will also show you the files we have written to the Storage Gateway:

Combine that with a backup solution like pgBackRest, barman, EDB BART or whatever you use and you have your PostgreSQL backups safely written offsite to S3.

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/DWE_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/STH_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/09/SNA_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2024/01/HME_web.jpg)