This post is about something you may not have heard much about yet: the openSUSE project and SUSE are working on a new enterprise operating system called ALP, the Adaptable Linux Platform. As of today, the second prototype is available, called Punta Baretti. The idea is to provide a minimal, immutable operating system which runs containers or virtual machines and does _not_ update any packages related to applications. This also means that the base operating system is not updated by the traditional package manager (zypper), but by a command line utility called transactional-update. This is very much the same idea as the “A” (atomicity) in “ACID” in the relational database world: either everything is done successfully or, if something fails, the system is in exactly the same state as before the update.
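
To make the all-or-nothing idea more concrete, here is a toy model in plain shell. It is only a sketch: directories stand in for btrfs snapshots, a `current` symlink stands in for the active snapshot, and the function name `atomic_update` is made up for illustration. It is not how transactional-update is implemented.

```shell
#!/bin/sh
# Toy model of an atomic update. Directories play the role of snapshots and
# the "current" symlink points to the active one. All names are illustrative.

# atomic_update ROOT COMMAND...
# Copies the active snapshot, runs COMMAND inside the copy and only switches
# "current" to the copy if COMMAND succeeded. Otherwise the copy is discarded.
atomic_update() {
    root="$1"; shift
    old="$(readlink "$root/current")"
    new="$((old + 1))"
    cp -R "$root/$old" "$root/$new"
    if ( cd "$root/$new" && "$@" ); then
        ln -sfn "$new" "$root/current"   # commit: the new snapshot becomes active
    else
        rm -rf "${root:?}/$new"          # abort: throw the new snapshot away
        return 1
    fi
}

# Set up snapshot 1 as the active snapshot in a scratch directory.
demo="$(mktemp -d)"
mkdir "$demo/1"
echo "version 1" > "$demo/1/release"
ln -s 1 "$demo/current"

# A successful change ends up in snapshot 2 ...
atomic_update "$demo" sh -c 'echo "version 2" > release'

# ... while a failing change leaves the active snapshot untouched.
atomic_update "$demo" sh -c 'echo "broken" > release; exit 1' \
    || echo "update failed, nothing changed"

cat "$demo/current/release"
```

The half-applied change from the second call never becomes visible, because it only ever existed in a copy that was discarded.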

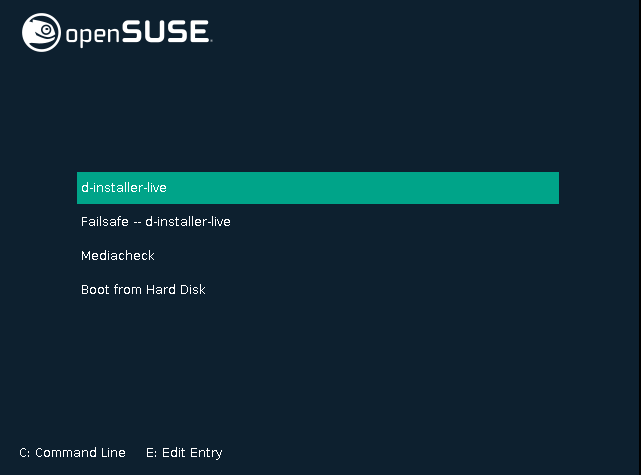

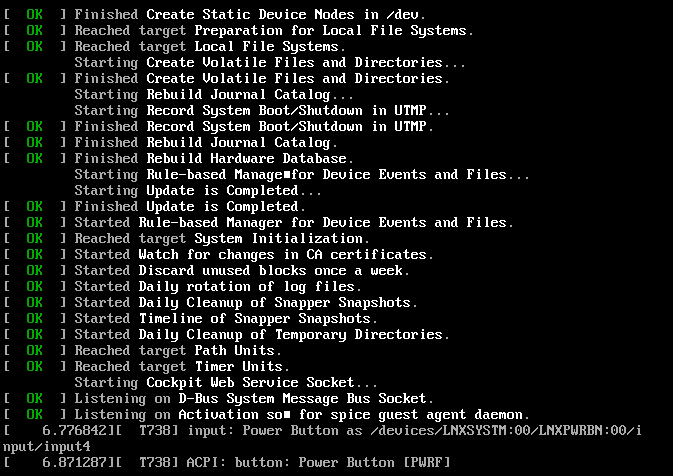

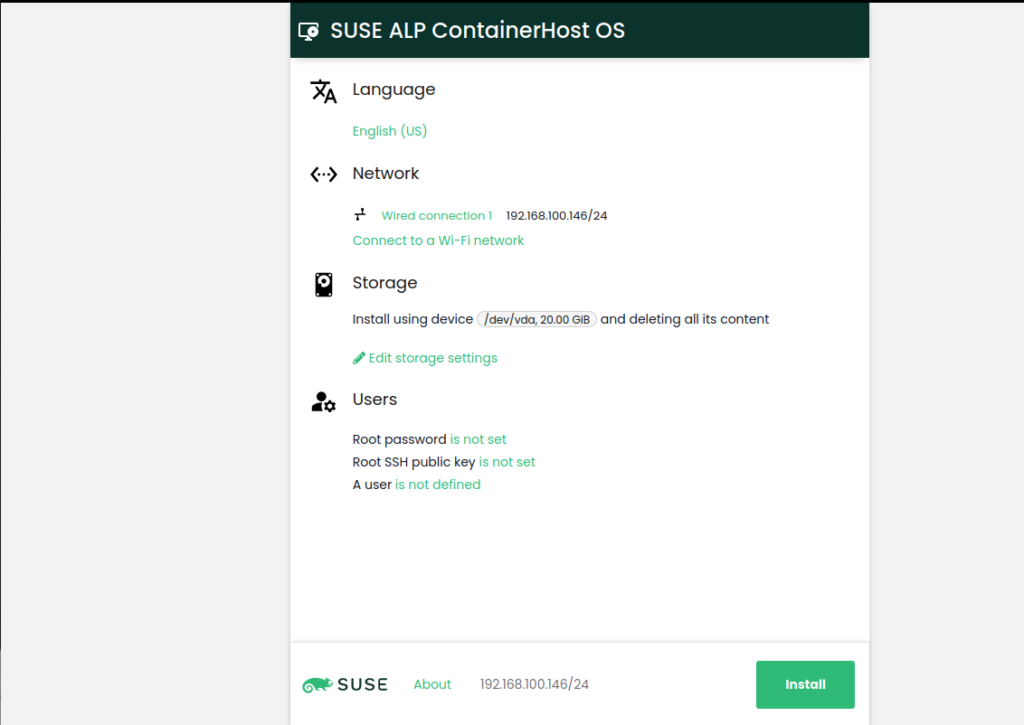

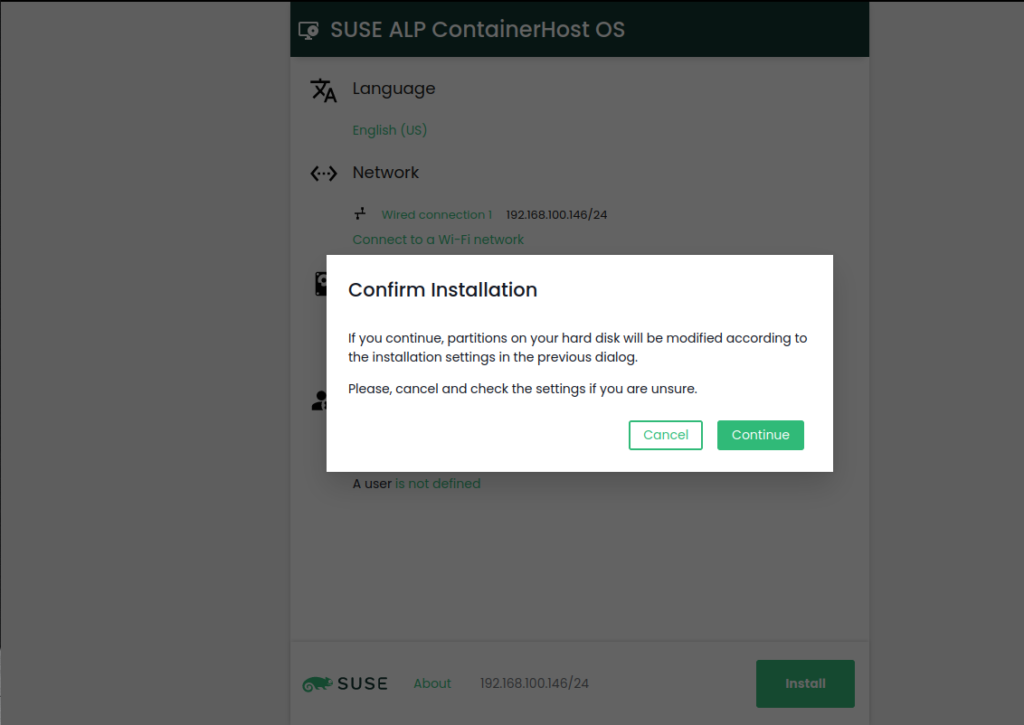

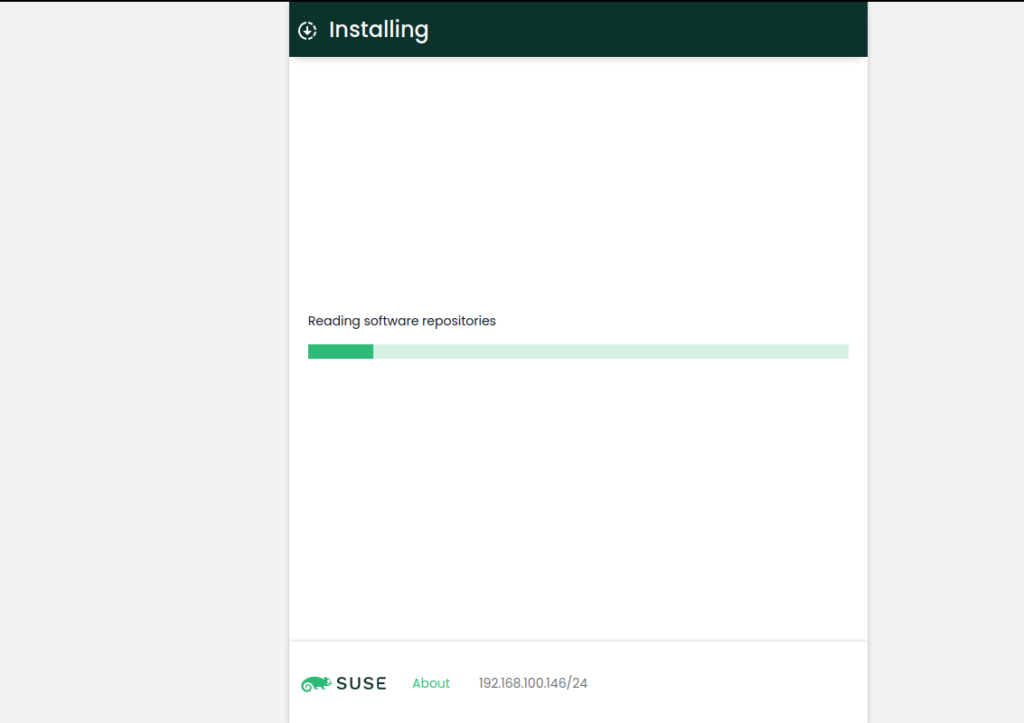

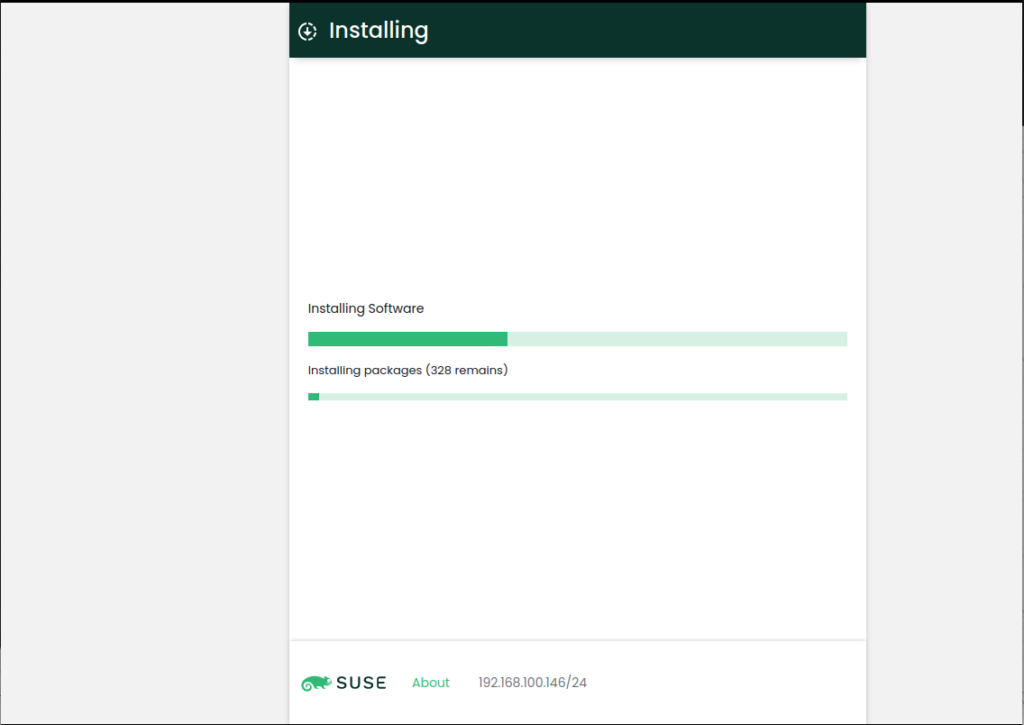

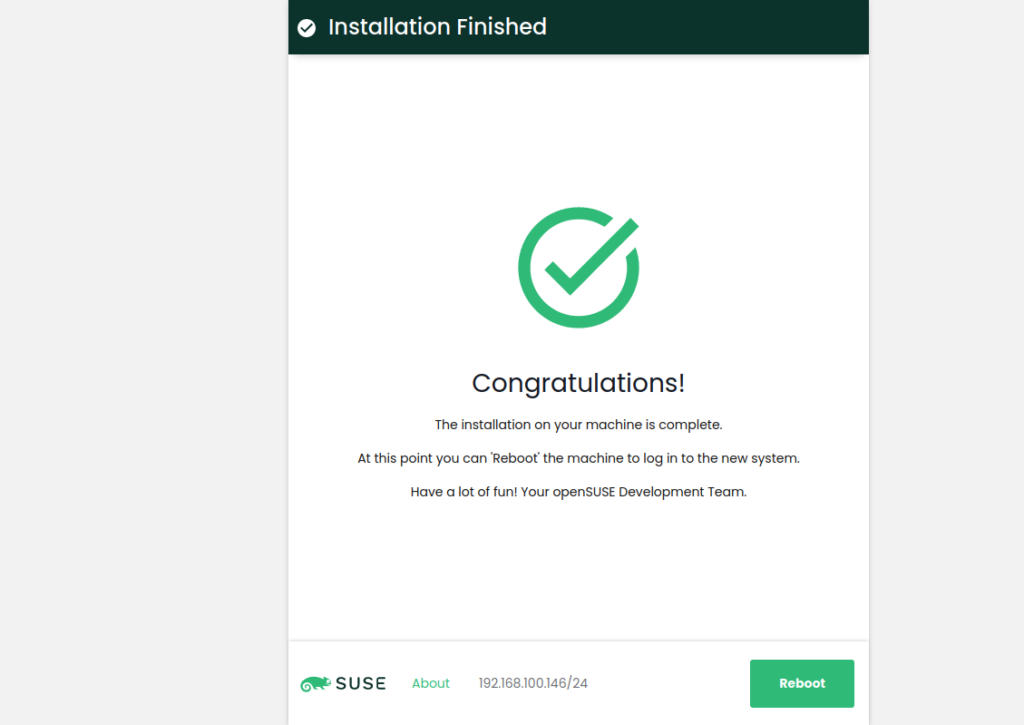

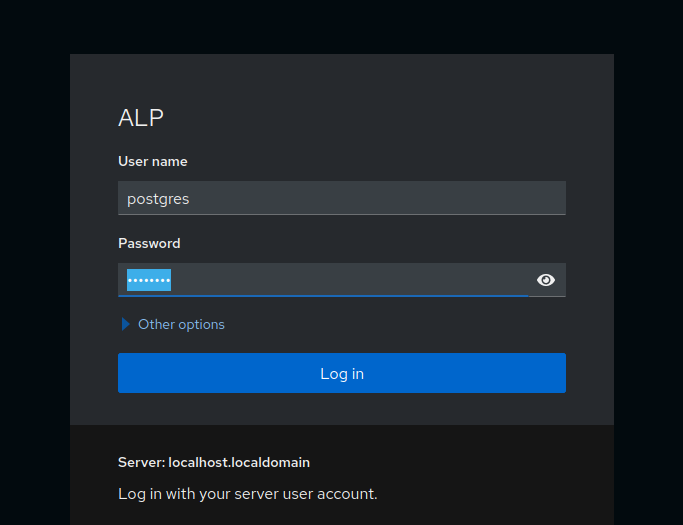

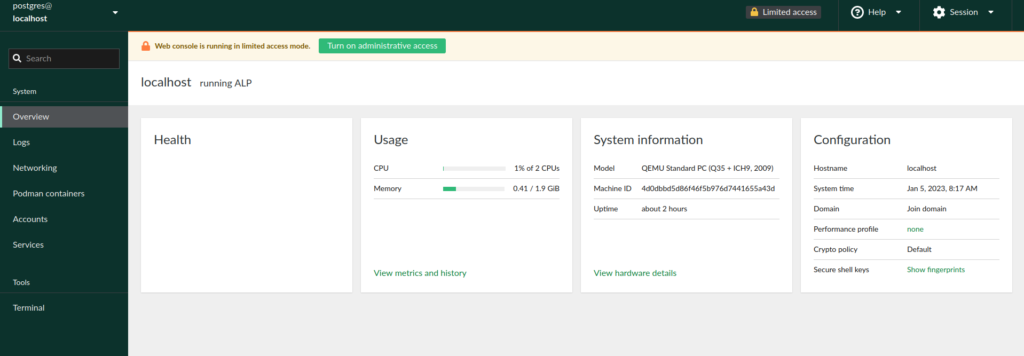

Let’s dive directly into the installation. If you want to try it using a virtual machine, head over here. If you want to try it using an installer based on an ISO, have a look here. Finally, you can use a raw disk image; check here. For the scope of this post, we’ll use the ISO-based “d-installer” approach, which is an installer driven through a web browser. The installation process is pretty much self-explanatory, so here is just a series of screenshots:

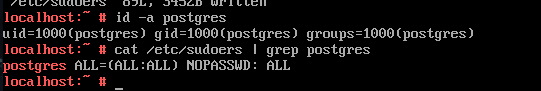

Please note that root access via ssh is disabled by default. Instead of enabling it, it is better to create a new user and grant it sudo access.
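
A minimal sketch of how this could look, assuming the user name `postgres` (the one that shows up in the prompts throughout this post) and a sudoers drop-in file under `/etc/sudoers.d`. Both the file name and the helper `sudoers_rule` are assumptions for illustration, not the exact commands used here. The script only prints the commands, so they can be reviewed before running them as root:

```shell
#!/bin/sh
# Sketch only: user name and sudoers drop-in path are assumptions.
NEW_USER="postgres"

# Build a sudoers rule that allows the given user to run any command via sudo.
sudoers_rule() {
    printf '%s ALL=(ALL) ALL\n' "$1"
}

# Print the commands instead of executing them.
cat <<EOF
useradd -m ${NEW_USER}
passwd ${NEW_USER}
echo '$(sudoers_rule "${NEW_USER}")' > /etc/sudoers.d/${NEW_USER}
chmod 0440 /etc/sudoers.d/${NEW_USER}
visudo -c
EOF
```

The final `visudo -c` checks the sudoers configuration for syntax errors, which is worth doing before logging out of the root session.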

Having that in place, we can log in remotely and check the system. Indeed, the root file system is read-only:

    postgres@localhost:~> cat /etc/fstab
    UUID=e59bfc99-d700-45f1-8b0f-4b250062ed7c / btrfs ro 0 0
    UUID=e59bfc99-d700-45f1-8b0f-4b250062ed7c /var btrfs subvol=/@/var,x-initrd.mount 0 0
    UUID=e59bfc99-d700-45f1-8b0f-4b250062ed7c /usr/local btrfs subvol=/@/usr/local 0 0
    UUID=e59bfc99-d700-45f1-8b0f-4b250062ed7c /srv btrfs subvol=/@/srv 0 0
    UUID=e59bfc99-d700-45f1-8b0f-4b250062ed7c /root btrfs subvol=/@/root,x-initrd.mount 0 0
    UUID=e59bfc99-d700-45f1-8b0f-4b250062ed7c /opt btrfs subvol=/@/opt 0 0
    UUID=e59bfc99-d700-45f1-8b0f-4b250062ed7c /home btrfs subvol=/@/home 0 0
    UUID=e59bfc99-d700-45f1-8b0f-4b250062ed7c /boot/writable btrfs subvol=/@/boot/writable 0 0
    UUID=e59bfc99-d700-45f1-8b0f-4b250062ed7c /boot/grub2/x86_64-efi btrfs subvol=/@/boot/grub2/x86_64-efi 0 0
    UUID=e59bfc99-d700-45f1-8b0f-4b250062ed7c /boot/grub2/i386-pc btrfs subvol=/@/boot/grub2/i386-pc 0 0
    UUID=e59bfc99-d700-45f1-8b0f-4b250062ed7c /.snapshots btrfs subvol=/@/.snapshots 0 0
    overlay /etc overlay defaults,lowerdir=/sysroot/etc,upperdir=/sysroot/var/lib/overlay/1/etc,workdir=/sysroot/var/lib/overlay/work-etc,x-systemd.requires-mounts-for=/var,x-systemd.requires-mounts-for=/sysroot/var,x-initrd.mount 0 0
    postgres@localhost:~> sudo touch /aa
    touch: cannot touch '/aa': Read-only file system
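
The same check can be scripted, for example in a provisioning job that should behave differently on an immutable host. This is a small sketch, assuming `findmnt` from util-linux is available; the helper name `has_ro_option` is made up for illustration:

```shell
#!/bin/sh
# has_ro_option OPTIONS
# Succeeds if the comma-separated mount option list contains the "ro" flag.
# Wrapping the list in commas avoids false matches such as "errors=remount-ro".
has_ro_option() {
    case ",$1," in
        *,ro,*) return 0 ;;
        *)      return 1 ;;
    esac
}

# Ask the kernel for the mount options of the root file system.
# If findmnt is missing, root_opts stays empty and we fall through to "else".
root_opts="$(findmnt -n -o OPTIONS / 2>/dev/null || true)"

if has_ro_option "$root_opts"; then
    echo "/ is mounted read-only"
else
    echo "/ is writable (or its mount options could not be determined)"
fi
```

Checking the live mount options rather than /etc/fstab has the advantage that it reflects what is actually mounted right now.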

Even though zypper is there, it cannot be used to install any packages:

    /usr/bin/zypper
    postgres@localhost:~> sudo zypper in nano
    This is a transactional-server, please use transactional-update to update or modify the system.
    postgres@localhost:~>

The error message is clear: if we want to update the system, we need to use “transactional-update”:

    postgres@localhost:~> sudo transactional-update
    Checking for newer version.
    transactional-update 4.1.0 started
    Options:
    Separate /var detected.
    2023-01-05 06:32:27 tukit 4.1.0 started
    2023-01-05 06:32:27 Options: -c1 open
    2023-01-05 06:32:27 Using snapshot 1 as base for new snapshot 2.
    2023-01-05 06:32:27 No previous snapshot to sync with - skipping
    ID: 2
    2023-01-05 06:32:27 Transaction completed.
    Calling zypper up
    zypper: nothing to update
    Removing snapshot #2...
    2023-01-05 06:32:27 tukit 4.1.0 started
    2023-01-05 06:32:27 Options: abort 2
    2023-01-05 06:32:27 Discarding snapshot 2.
    2023-01-05 06:32:27 Transaction completed.
    transactional-update finished
    postgres@localhost:~>
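
Installing a package, such as the nano editor that zypper refused above, goes through the same tool. The following is a hedged sketch rather than output from this system: it uses the `pkg install` subcommand of transactional-update, and the helper `tu_available` is a made-up name that only guards against running the script on a host without the tool. The package lands in a new snapshot, so it only becomes visible after a reboot:

```shell
#!/bin/sh
# Package to install; "nano" is just the example from the failed zypper call.
PKG="nano"

# tu_available: succeeds if transactional-update is found on PATH.
tu_available() {
    command -v transactional-update >/dev/null 2>&1
}

if tu_available; then
    # Installs the package into a new snapshot; the running system is untouched.
    sudo transactional-update pkg install "$PKG"
    echo "Reboot to activate the snapshot that contains $PKG."
else
    echo "transactional-update not found, this is not a transactional system."
fi
```

If the new snapshot turns out to be broken, `sudo transactional-update rollback` makes the currently running snapshot the default again.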

As ALP is designed to run containers, podman should be enabled. It is installed by default:

    postgres@localhost:~> sudo zypper se -i podman
    Loading repository data...
    Reading installed packages...
    S | Name              | Summary                                                               | Type
    --+-------------------+-----------------------------------------------------------------------+--------
    i | cockpit-podman    | Cockpit component for Podman containers                               | package
    i | podman            | Daemon-less container engine for managing containers, pods and images | package
    i | podman-cni-config | Basic CNI configuration for podman                                    | package

Enabling podman to run by default is just a matter of enabling the systemd service:

    postgres@localhost:~> sudo systemctl enable --now podman.service
    Created symlink /etc/systemd/system/default.target.wants/podman.service → /usr/lib/systemd/system/podman.service.
    postgres@localhost:~> sudo systemctl status podman.service
    ○ podman.service - Podman API Service
    Loaded: loaded (/usr/lib/systemd/system/podman.service; enabled; preset: disabled)
    Active: inactive (dead) since Thu 2023-01-05 06:37:24 UTC; 1s ago
    Duration: 5.200s
    TriggeredBy: ● podman.socket
    Docs: man:podman-system-service(1)
    Process: 1655 ExecStart=/usr/bin/podman $LOGGING system service (code=exited, status=0/SUCCESS)
    Main PID: 1655 (code=exited, status=0/SUCCESS)
    CPU: 130ms
    Jan 05 06:37:19 localhost.localdomain systemd[1]: Starting Podman API Service...
    Jan 05 06:37:19 localhost.localdomain systemd[1]: Started Podman API Service.
    Jan 05 06:37:19 localhost.localdomain podman[1655]: time="2023-01-05T06:37:19Z" level=info msg="/usr/bin/podman filtering at log level info"
    Jan 05 06:37:19 localhost.localdomain podman[1655]: 2023-01-05 06:37:19.839690815 +0000 UTC m=+0.147024259 system refresh
    Jan 05 06:37:19 localhost.localdomain podman[1655]: time="2023-01-05T06:37:19Z" level=info msg="Setting parallel job count to 7"
    Jan 05 06:37:19 localhost.localdomain podman[1655]: time="2023-01-05T06:37:19Z" level=info msg="Using systemd socket activation to determine API endpoint"
    Jan 05 06:37:19 localhost.localdomain podman[1655]: time="2023-01-05T06:37:19Z" level=info msg="API service listening on \"/run/podman/podman.sock\". URI: \"/run/podman/podman.sock\""
    Jan 05 06:37:19 localhost.localdomain podman[1655]: time="2023-01-05T06:37:19Z" level=info msg="API service listening on \"/run/podman/podman.sock\""
    Jan 05 06:37:24 localhost.localdomain systemd[1]: podman.service: Deactivated successfully.

One of the containers that come by default is the Cockpit container. To bring it up:

    postgres@localhost:~> sudo podman search cockpit-ws
    ERRO[0000] error getting search results from v2 endpoint "registry.suse.com": unable to retrieve auth token: invalid username/password: errors:
    denied: requested access to the resource is denied
    unauthorized: authentication required
    NAME DESCRIPTION
    registry.opensuse.org/home/eisest/container/container/opensuse/cockpit-ws
    registry.opensuse.org/home/jreidinger/suse_alp_workloads_sle_15sp4_containerfiles/suse/alp/workloads/cockpit-ws
    registry.opensuse.org/home/luc14n0/containers/container/opensuse/cockpit-ws
    registry.opensuse.org/opensuse/cockpit-ws
    registry.opensuse.org/opensuse/factory/arm/totest/containers/opensuse/cockpit-ws
    registry.opensuse.org/opensuse/factory/powerpc/totest/containers/opensuse/cockpit-ws
    registry.opensuse.org/opensuse/factory/zsystems/totest/containers/opensuse/cockpit-ws
    registry.opensuse.org/suse/alp/workloads/publish/tumbleweed_containerfiles/suse/alp/workloads/cockpit-ws
    registry.opensuse.org/suse/alp/workloads/tumbleweed_containerfiles/suse/alp/workloads/cockpit-ws
    docker.io/stefwalter/cockpit-ws Cockpit Webservice in a Privileged Container
    docker.io/port/cockpit-ws
    docker.io/sujitfulse/cockpit-ws-ppc64le
    docker.io/alastair87/cockpit-ws
    docker.io/dvdbrander/cockpit-ws
    postgres@localhost:~> sudo podman container runlabel install registry.opensuse.org/suse/alp/workloads/tumbleweed_containerfiles/suse/alp/workloads/cockpit-ws:latest
    Trying to pull registry.opensuse.org/suse/alp/workloads/tumbleweed_containerfiles/suse/alp/workloads/cockpit-ws:latest...
    Getting image source signatures
    Copying blob 0dad567d9346 done
    Copying blob c7faa4ba95bf done
    Copying config 9f0fe3fccd done
    Writing manifest to image destination
    Storing signatures
    + sed -e /pam_selinux/d -e /pam_sepermit/d /etc/pam.d/cockpit
    + mkdir -p /host/etc/cockpit/ws-certs.d /host/etc/cockpit/machines.d
    + chmod 755 /host/etc/cockpit/ws-certs.d /host/etc/cockpit/machines.d
    + chown root:root /host/etc/cockpit/ws-certs.d /host/etc/cockpit/machines.d
    + mkdir -p /etc/ssh
    + '[' podman = oci -o podman = podman ']'
    + '[' -n registry.opensuse.org/suse/alp/workloads/tumbleweed_containerfiles/suse/alp/workloads/cockpit-ws:latest ']'
    + '[' '!' -e /host/etc/systemd/system/cockpit.service ']'
    + mkdir -p /host/etc/systemd/system/
    + cat
    + /bin/mount --bind /host/etc/cockpit /etc/cockpit
    + /usr/libexec/cockpit-certificate-ensure
    /usr/libexec/cockpit-certificate-helper: line 25: sscg: command not found
    Generating a RSA private key
    ..............................+++++
    ..............................................................................+++++
    writing new private key to '0-self-signed.key'
    -----
    postgres@localhost:~> sudo podman container runlabel --name cockpit-ws run registry.opensuse.org/suse/alp/workloads/tumbleweed_containerfiles/suse/alp/workloads/cockpit-ws:latest
    fb0ddfd99c7a7afac462e4f74bb11762036d67699fc3fa108d7978ed24a84245

Once it is running, the Cockpit web interface can be accessed at https://[hostname]:9090.

Several other containers / workloads are available here. Have fun exploring.
