I have recently written a blog introducing the Oracle ZDM (Zero Downtime Migration) tool : https://www.dbi-services.com/blog/zero-downtime-migration-zdm-to-oracle-cloud-infrastructure-oci/

I have also done some tests performing an offline logical database migration from a database running on an OCI VM (this would simulate an on-premises database) to an ADB (Autonomous DataBase) using a backup location in OCI. I would like through this blog to share my tests and demo. This demo will show all implementation details.

Create Source Database on an OCI VM

First of all I have created a database on an OCI VM, that would be my source database to migrate. This would simulate an on-premises database. I have deployed very easily the whole VM with a 19c multi-tenant database, named CDB01, using dbi services YAK software. YAK is a software that helps to easily deploy database instances and servers whatever the platform used is. YAK can be downloaded, as well as further explanation, on following web link : https://www.dbi-services.com/products/yak/

What is further important to know is the exact name of my OCI AD. I can get this with the next command:

mawcloud@cloudshell:~ (eu-zurich-1)$ oci iam availability-domain list

{

"data": [

{

"compartment-id": "ocid1.tenancy.oc1..aaaaaaaayt******************************************62na",

"id": "ocid1.availabilitydomain.oc1..aaaaaaaahcy******************************************a2ta",

"name": "NYRE:EU-ZURICH-1-AD-1"

}

]

}

YAK is executed within a container that I have started from my laptop using rancher.

I have prepared my YAML variables file for my infrastructure after creating my environment root directory. This file contains informations about the cloud like the cloud provider, the compartment id where the VM needs to be created, the cloud user, the regions id, the AD name (we have just gathered in the previous command), the security list and the subnet id.

yak@75b76d2b532a:~/yak$ mkdir ./configuration/infrastructure/oci_ace yak@75b76d2b532a:~/yak$ cp ./configuration/infrastructure_sample/oci/variables.yml ./configuration/infrastructure/oci_ace yak@75b76d2b532a:~/yak$ vi ./configuration/infrastructure/oci_ace/variables.yml yak@1f88f85e427c:~/yak$ cat ./configuration/infrastructure/oci_ace/variables.yml # Copyright: (c) 2022, dbi services, distributed without any warranty under the terms of the GNU General Public License v3 is_cloud_environment: yes environment: oci_ace ansible_user: opc provider: oci region_id: eu-zurich-1 compartment_id: ocid1.compartment.oc1..aaaaaaaa6c**********************************************74ktq availability_domain: NYRE:EU-ZURICH-1-AD-1 security_list: ocid1.securitylist.oc1.eu-zurich-1.aaaaaa**********************************************vtxq subnet_id: ocid1.subnet.oc1.eu-zurich-1.aaaaaaaao**********************************************ccqa

I can use following ansible command to check all my variables:

yak@75b76d2b532a:~/yak$ ansible-inventory --graph --varsI will declare the server YAML variables to define my server information as OS image, shape, ip and hostname.

yak@75b76d2b532a:~/yak$ mkdir ./configuration/infrastructure/oci_ace/sourcedb1

yak@75b76d2b532a:~/yak$ cp ./configuration/infrastructure_sample/oci/srv-linux-test-01/variables.yml ./configuration/infrastructure/oci_ace/sourcedb1

yak@75b76d2b532a:~/yak$ vi ./configuration/infrastructure/oci_ace/sourcedb1/variables.yml

yak@44371bd79b97:~/yak$ cat ./configuration/infrastructure/oci_ace/sourcedb1/variables.yml

# Copyright: (c) 2022, dbi services, distributed without any warranty under the terms of the GNU General Public License v3

hostname: srv-source-zdm-01

is_physical_server: no

ansible_user: opc

host_ip_access: public_ip

private_ip:

mode: auto

public_ip:

mode: auto

operating_system: Oracle-Linux-8.5-2022.01.24-0

image_id: ocid1.image.oc1.eu-zurich-1.aaaaaaaamtulj4fmm6cx6xq6delggc5jhfoy652lbxxj2xbnzzxik7sgsnva

shape:

name: VM.Standard.E3.Flex

memory_in_gbs: 32

ocpus: 6

I created some RSA key that I will be using to access the created VM. I will of course record them securely in my environment.

yak@75b76d2b532a:~/yak$ mkdir ./configuration/infrastructure/oci_ace/secrets yak@75b76d2b532a:~/yak$ cd ./configuration/infrastructure/oci_ace/secrets/ yak@75b76d2b532a:~/yak/configuration/infrastructure/oci_ace/secrets$ gen_secret yak@75b76d2b532a:~/yak/configuration/infrastructure/oci_ace/secrets$ ls sshkey sshkey.pub

I can check my inventory with following command:

yak@44371bd79b97:~/yak$ ansible-inventory --host oci_ace/sourcedb1I need to setup my OCI cloud authentication variable with following export commands:

export OCI_USER_ID=ocid1.user.oc1..aaaaaaaadl***********************************************sjiq export OCI_USER_FINGERPRINT=2f:**:**:**:**:**:**:**:**:**:**:**:**:**:**:da export OCI_TENANCY=ocid1.tenancy.oc1..aaaaaaaay***********************************************62na export OCI_REGION=eu-zurich-1 export OCI_USER_KEY_FILE=$HOME/.ssh/oci_api_key.pem export OCI_USE_NAME_AS_IDENTIFIER=true

I have then defined and prepared my database YAML variables:

yak@44371bd79b97:~/yak/components/oracle_instance/component_sample$ cp -R CDB01 /workspace/yak/configuration/infrastructure/oci_ace/sourcedb1/

yak@44371bd79b97:~/yak/configuration/infrastructure/oci_ace/sourcedb1$ cd CDB01

yak@44371bd79b97:~/yak/configuration/infrastructure/oci_ace/sourcedb1/CDB01$ ls

variables.yml

yak@44371bd79b97:~/yak/configuration/infrastructure/oci_ace/sourcedb1/CDB01$ vi variables.yml

yak@44371bd79b97:~/yak/configuration/infrastructure/oci_ace/sourcedb1/CDB01$ cat variables.yml

component_type: oracle_instance

storage: linux/storage/oracle_instance

templates:

- { name: ofa, path: oracle_flexible_architecture }

- { name: rdbms_base_release_19c, path: software/rdbms_base_release_19c }

- { name: rdbms_patch_campaigns_19c, path: software/rdbms_patch_campaigns_19c }

- { name: rdbms_compile_options_19c, path: software/rdbms_compile_options_19c }

- { name: dmk_packages, path: software/dbi-services_dmk_packages }

- { name: oracle_database, path: oracle_database }

edition: EE

#version: 19_12_210720_JVM_v0

#version: 19_14_220118_JVM_v0

version: 19_17_221018_JVM_v0

instance_details:

sid: "CDB01"

db_unique_name: "CDB01_SITE1"

domain_name: ""

character_set: "AL32UTF8"

national_character_set: "AL16UTF16"

rman_connect_string: "/" #connect / AS SYSDBA

tns_details:

default_domain: ""

hostname: "{{ ansible_hostname }}"

protocol: "TCP"

port: 1521

sqlnet_details:

default_domain: ""

encryption_server: true

encryption_client: true

crypto_checksum_server: true

crypto_checksum_client: true

listener_details:

name: "LISTENER"

hostname: "{{ ansible_hostname }}"

protocol: "TCP"

port: 1521

dmk_dbbackup_settings:

mail_title_customer_name: "xChange"

nosuccessmail: "1"

smtp_server: ""

mail_to: "[email protected]"

mail_from: ""

dmk_rman:

catalog: "nocatalog"

channel_numbers: 4

recovery_window: 4

bck_path: "" #Default: $cda/backup

schedules:

inc0: { job_desc: "Weekly inc0 backup", rcv: "bck_inc0_fra.rcv", minute: 00, hour: 22 , mday: "*", month: "*", weekday: 0 }

inc1: { job_desc: "Daily inc1 backup", rcv: "bck_inc1_fra.rcv", minute: 00, hour: 22 , mday: "*", month: "*", weekday: "1-6" }

arch: { job_desc: "Archive log backup", rcv: "bck_arc_fra.rcv" , minute: 00, hour: "*/2", mday: "*", month: "*", weekday: "*" }

mnt_obs: { job_desc: "Manage obsoletes", rcv: "mnt_obs.rcv" , minute: 00, hour: "01" , mday: "*", month: "*", weekday: "*" }

And added the bucket name for the artifacts:

yak@44371bd79b97:~/yak/configuration/infrastructure/oci_ace$ vi variables.yml

yak@44371bd79b97:~/yak/configuration/infrastructure/oci_ace$ cat variables.yml

# Copyright: (c) 2022, dbi services, distributed without any warranty under the terms of the GNU General Public License v3

is_cloud_environment: yes

environment: oci_ace

ansible_user: opc

provider: oci

region_id: eu-zurich-1

compartment_id: ocid1.compartment.oc1..aaaaaaaa6c**********************************************74ktq

availability_domain: NYRE:EU-ZURICH-1-AD-1

security_list: ocid1.securitylist.oc1.eu-zurich-1.aaaaaa**********************************************vtxq

subnet_id: ocid1.subnet.oc1.eu-zurich-1.aaaaaaaao**********************************************ccqa

artifacts:

provider: aws_s3

variables:

bucket_name: "dbi-services-yak-artifacts"

To access the artifacts stored in our dbi services AWS bucket, I had to set my AWS credentials:

export AWS_ACCESS_KEY_ID="ASI*************BE" export AWS_SECRET_ACCESS_KEY="8gR*******************************q7" export AWS_SESSION_TOKEN="IQoJ********************************************4RI/K/6"

And now I’m able to deploy my server (OCI VM):

yak@44371bd79b97:~/yak$ apdp oci_ace/sourcedb1

PLAY [Check target type] *****************************************************************************************************************************************************************************************************

Tuesday 14 March 2023 18:09:51 +0000 (0:00:00.017) 0:00:00.017 *********

[started TASK: ansible.builtin.assert on oci_ace/sourcedb1]

TASK [ansible.builtin.assert] ************************************************************************************************************************************************************************************************

ok: [oci_ace/sourcedb1 -> localhost] => {

"changed": false,

"msg": "All assertions passed"

}

...

...

...

PLAY RECAP *******************************************************************************************************************************************************************************************************************

oci_ace/sourcedb1 : ok=45 changed=14 unreachable=0 failed=0 skipped=17 rescued=0 ignored=0

Tuesday 14 March 2023 18:15:07 +0000 (0:00:06.018) 0:05:16.861 *********

===============================================================================

yak.core.oci_compute : Create instance ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 69.23s

yak.core.os_prerequisites : Install LVM ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ 69.12s

yak.core.os_storage : Create volume attachment ----------------------------------------------------------------------------------------------------------------------------------------------------------------------- 37.16s

yak.core.os_storage : Create volume ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 34.87s

yak.core.os_storage : Perform a ISCSI discovery ---------------------------------------------------------------------------------------------------------------------------------------------------------------------- 20.11s

Gathering Facts ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ 19.17s

yak.core.oci_compute : Wait for SSH to come up ----------------------------------------------------------------------------------------------------------------------------------------------------------------------- 18.60s

yak.core.os_storage : Create a filesystem on logical volume ----------------------------------------------------------------------------------------------------------------------------------------------------------- 7.36s

yak.core.os_storage : Create a logical volume ------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 6.84s

yak.core.os_storage : Mount logical volume ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 6.02s

yak.core.os_storage : Get UUID for each FS ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 5.40s

yak.core.os_storage : Login to ISCSI target --------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 5.16s

yak.core.os_prerequisites : Install XFS ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 4.40s

yak.core.oci_compute : Get OCI instances facts ------------------------------------------------------------------------------------------------------------------------------------------------------------------------ 1.98s

yak.core.os_storage : Get instances ID -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 1.97s

yak.core.oci_compute : Get OCI instances facts ------------------------------------------------------------------------------------------------------------------------------------------------------------------------ 1.79s

yak.core.os_storage : Create a volume group --------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 1.74s

yak.core.os_prerequisites : Define hostname to srv-source-zdm-01 ------------------------------------------------------------------------------------------------------------------------------------------------------ 1.60s

yak.core.os_prerequisites : Set timezone to Europe/Zurich ------------------------------------------------------------------------------------------------------------------------------------------------------------- 1.27s

Update yak inventory -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 0.39s

yak@44371bd79b97:~/yak$

And my database, CDB01 on my servers:

yak@44371bd79b97:~/yak$ ansible-playbook components/oracle_instance/playbooks/linux/deploy.yml -e target=oci_ace/sourcedb1/CDB01 PLAY [Deploy Oracle database instance oci_ace/sourcedb1/CDB01] *************************************************************************************************************************************************************** Tuesday 14 March 2023 19:46:41 +0000 (0:00:00.048) 0:00:00.049 ********* [started TASK: Gathering Facts on oci_ace/sourcedb1/CDB01] TASK [Gathering Facts] ******************************************************************************************************************************************************************************************************* [WARNING]: Platform linux on host oci_ace/sourcedb1/CDB01 is using the discovered Python interpreter at /usr/bin/python3.6, but future installation of another Python interpreter could change the meaning of that path. See https://docs.ansible.com/ansible-core/2.13/reference_appendices/interpreter_discovery.html for more information. ok: [oci_ace/sourcedb1/CDB01] Tuesday 14 March 2023 19:47:00 +0000 (0:00:19.064) 0:00:19.113 ********* [started TASK: assert on oci_ace/sourcedb1/CDB01] ... ... ... PLAY RECAP ******************************************************************************************************************************************************************************************************************* oci_ace/sourcedb1/CDB01 : ok=138 changed=52 unreachable=0 failed=0 skipped=26 rescued=0 ignored=0 Tuesday 14 March 2023 20:56:17 +0000 (0:00:01.468) 1:09:35.402 ********* =============================================================================== linux_ora_dmk_dbcreate : Create database using DBCA template (~50 minutes) ----------------------------------------------------------------------------------------------------------------------------------------- 3370.53s linux_ora_rdbms_base : Copy/Unzip Oracle Oracle RDBMS binaries ------------------------------------------------------------------------------------------------------------------------------------------------------ 266.80s linux_ora_rdbms_base : Start runInstaller (Oracle RDBMS Edition EE) -------------------------------------------------------------------------------------------------------------------------------------------------- 99.69s yak.core.artifacts : Download artifact ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 58.88s linux_ora_rdbms_combo_db_ovm_ru : Unzip Oracle Combo patch(es) ------------------------------------------------------------------------------------------------------------------------------------------------------- 47.89s linux_ora_rdbms_os_tools : Install troubleshooting tools ------------------------------------------------------------------------------------------------------------------------------------------------------------- 22.23s yak.core.artifacts : Change artifacts ownership and group ------------------------------------------------------------------------------------------------------------------------------------------------------------ 19.63s Gathering Facts ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ 19.06s yak.core.artifacts : Creates directory layout ------------------------------------------------------------------------------------------------------------------------------------------------------------------------ 17.05s linux_ora_rdbms_chopt : Disable - Oracle OLAP ------------------------------------------------------------------------------------------------------------------------------------------------------------------------ 13.49s linux_ora_rdbms_chopt : Disable - Oracle Advanced Analytics --------------------------------------------------------------------------------------------------------------------------------------------------------- 13.20s linux_ora_rdbms_os_tools : Install htop ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ 12.74s linux_ora_rdbms_chopt : Disable - Oracle Partitioning ---------------------------------------------------------------------------------------------------------------------------------------------------------------- 12.62s linux_ora_rdbms_dmk_packages : Unzip DMK archives -------------------------------------------------------------------------------------------------------------------------------------------------------------------- 12.58s linux_ora_rdbms_chopt : Disable - Oracle Real Application Testing ---------------------------------------------------------------------------------------------------------------------------------------------------- 12.12s linux_ora_rdbms_os_tools : Install required libraries for htop installation ------------------------------------------------------------------------------------------------------------------------------------------- 8.95s linux_ora_rdbms_chopt : Check Oracle RDBMS options status ------------------------------------------------------------------------------------------------------------------------------------------------------------- 5.33s linux_ora_dmk_dbbackup : Add cronjobs for DMK_DBBACKUP dmk_rman.ksh --------------------------------------------------------------------------------------------------------------------------------------------------- 5.11s linux_ora_rdbms_opatch : Unzip Opatch utility for version compare (indempodence - version context unknown) ------------------------------------------------------------------------------------------------------------ 4.95s linux_ora_rdbms_interim_patch : List of patches installed on top of Oracle Home --------------------------------------------------------------------------------------------------------------------------------------- 4.67s yak@44371bd79b97:~/yak$

Once my OCI VM and CDB01 database have been both deployed I can access the servers. The public IP has been inserted in the YAML variables file and I can otherwise get it directly from OCI GUI too. I will access to it using my private key generated previously:

ssh -i /Users/maw/Documents/Dokument/pem_ssh_key/Oracle_cloud_ace/yak_source_vm/ssh-key-VM-YAK-ace.key [email protected]After having connected to the OCI VM and CDB01 I have created a pluggable database, named pdb1.

oracle@srv-source-zdm-01:/home/oracle/ [rdbms19_17_221018_JVM_v0] CDB01

********* dbi services Ltd. *********

STATUS : OPEN

DB_UNIQUE_NAME : CDB01_SITE1

OPEN_MODE : READ WRITE

LOG_MODE : ARCHIVELOG

DATABASE_ROLE : PRIMARY

FLASHBACK_ON : NO

FORCE_LOGGING : NO

VERSION : 19.17.0.0.0

CDB Enabled : YES

List PDB(s) READ ONLY : PDB$SEED

*************************************

oracle@srv-source-zdm-01:/home/oracle/ [CDB01] sqh

SQL*Plus: Release 19.0.0.0.0 - Production on Wed Mar 15 07:02:08 2023

Version 19.17.0.0.0

Copyright (c) 1982, 2022, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.17.0.0.0

SQL> create pluggable database pdb1 admin user admin identified by manager file_name_convert=('pdbseed','pdb1');

Pluggable database created.

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 PDB1 MOUNTED

SQL> alter pluggable database pdb1 open;

Pluggable database altered.

SQL> select a.name,b.state from v$pdbs a , dba_pdb_saved_states b where a.con_id = b.con_id;

no rows selected

SQL> SELECT con_name, instance_name, state FROM cdb_pdb_saved_states;

no rows selected

SQL> alter pluggable database pdb1 save state;

Pluggable database altered.

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 PDB1 READ WRITE NO

SQL> select a.name,b.state from v$pdbs a , dba_pdb_saved_states b where a.con_id = b.con_id;

NAME STATE

---------- --------------

PDB1 OPEN

SQL> SELECT con_name, instance_name, state FROM cdb_pdb_saved_states;

CON_NAME INSTANCE_N STATE

---------- ---------- --------------

PDB1 CDB01 OPEN

Create ZDM host

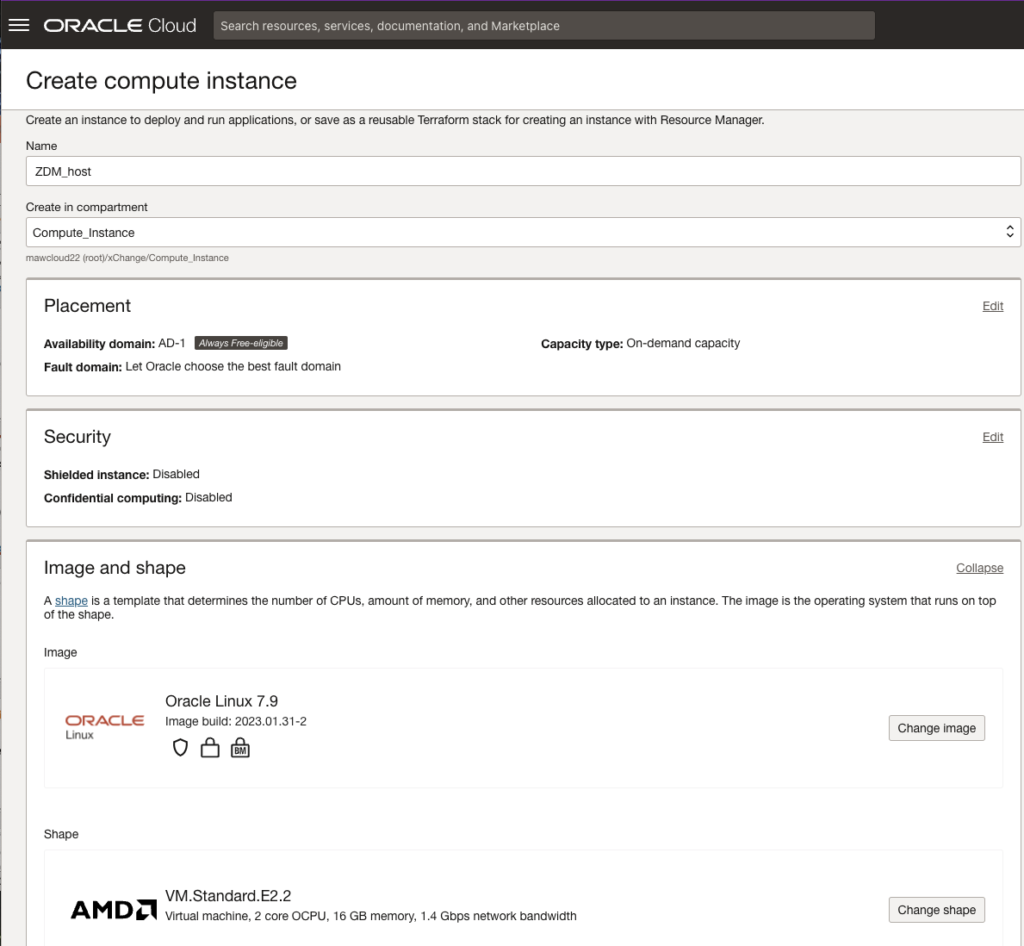

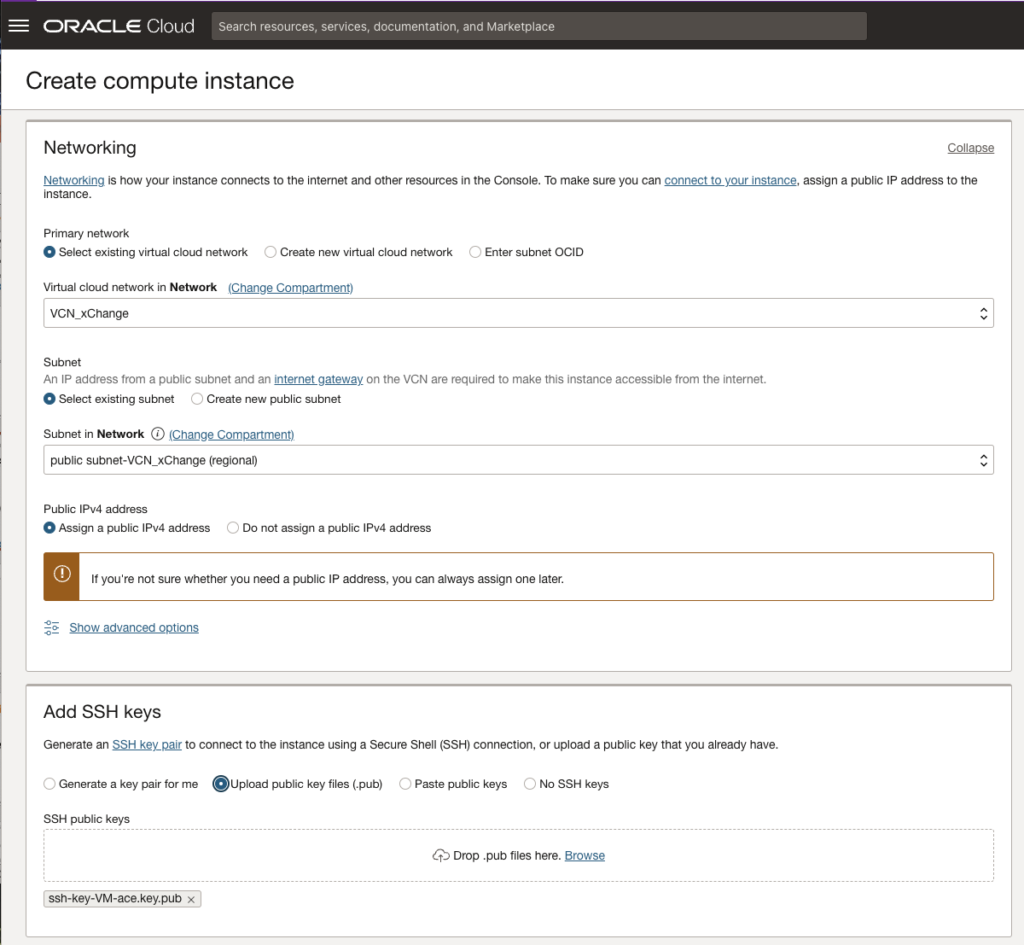

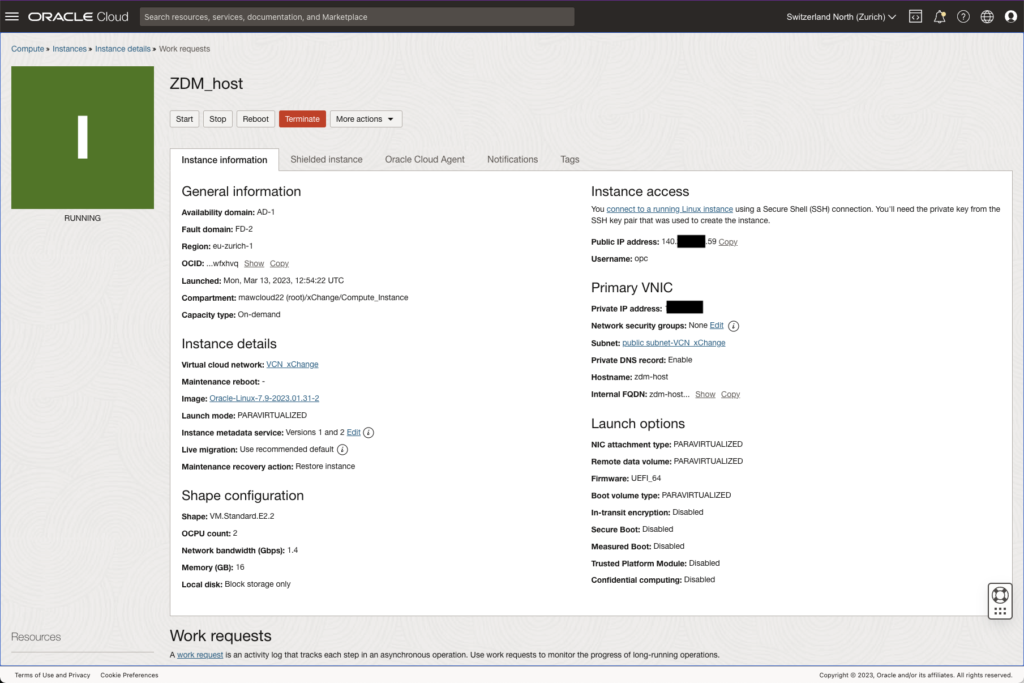

ZDM should be installed on a dedicated host. I have created a VM with oracle linux 7.9 Operating System as described in the next pictures.

To connect to the ZDM host I will use my private key:

ssh -i /Users/maw/Documents/Dokument/pem_ssh_key/Oracle_cloud_ace/VM/ssh-key-VM-ace.key [email protected]Install ZDM software on the ZDM host

The installation manual of ZDM can be found of the Oracle Web Site: https://docs.oracle.com/en/database/oracle/zero-downtime-migration/21.4/index.html

We first need to check if following packages are installed and otherwise we will need to install them:

- perl

- python36-oci-cli

- expect

- oraclelinux-developer-release-el7

- libaio

- glibc-devel

This has been done now:

[opc@zdm-host ~]$ which perl

/usr/bin/perl

[opc@zdm-host ~]$ sudo rpm -qa | grep python36-oci-cli

[opc@zdm-host ~]$ sudo yum install python36-oci-cli

Loaded plugins: langpacks, ulninfo

ol7_MySQL80

| 3.0 kB 00:00:00

...

...

... 11/11

Installed:

python36-oci-cli.noarch 0:3.23.2-1.el7

Dependency Installed:

python3-jmespath.noarch 0:0.10.0-1.el7 python3-prompt-toolkit.noarch 0:3.0.29-1.0.2.el7 python36-arrow.noarch 0:1.1.1-1.el7 python36-dateutil.noarch 1:2.7.5-1.0.1.el7 python36-pyyaml.x86_64 0:5.4.1-1.0.1.el7

python36-terminaltables.noarch 0:3.1.0-1.0.1.el7 python36-typing-extensions.noarch 0:3.7.4.2-1.el7 python36-wcwidth.noarch 0:0.2.5-1.el7

Dependency Updated:

python36-oci-sdk.x86_64 0:2.93.1-1.el7

Complete!

[opc@zdm-host ~]$ sudo rpm -qa | grep expect

[opc@zdm-host ~]$ sudo yum install expect

Loaded plugins: langpacks, ulninfo

Resolving Dependencies

...

...

... 2/2

Installed:

expect.x86_64 0:5.45-14.el7_1

Dependency Installed:

tcl.x86_64 1:8.5.13-8.el7

Complete!

[opc@zdm-host ~]$ sudo rpm -qa | grep expect

expect-5.45-14.el7_1.x86_64

[opc@zdm-host ~]$ sudo rpm -qa | grep oraclelinux-developer-release-el7

oraclelinux-developer-release-el7-1.0-6.el7.x86_64

[opc@zdm-host ~]$ sudo rpm -qa | grep libaio

libaio-0.3.109-13.el7.x86_64

[opc@zdm-host ~]$ sudo rpm -qa | grep glibc-devel

glibc-devel-2.17-326.0.3.el7_9.x86_64

We will now create ZDM group, user and folders:

[opc@zdm-host ~]$ sudo groupadd zdm [opc@zdm-host ~]$ sudo useradd -g zdm zdmuser [opc@zdm-host ~]$ sudo mkdir -p /u01/app/zdmhome [opc@zdm-host ~]$ sudo mkdir /u01/app/zdmbase [opc@zdm-host ~]$ sudo mkdir /u01/app/zdmdownload [opc@zdm-host ~]$ sudo chown zdmuser:zdm /u01/app/zdmhome [opc@zdm-host ~]$ sudo chown zdmuser:zdm /u01/app/zdmbase [opc@zdm-host ~]$ sudo chown zdmuser:zdm /u01/app/zdmdownload

We can now download ZDM software from the following Web Site: https://www.oracle.com/database/technologies/rac/zdm-downloads.html

We will now upload it to the ZDM host with opc user:

maw@DBI-LT-MAW2 Downloads % scp -i /Users/maw/Documents/Dokument/pem_ssh_key/Oracle_cloud_ace/VM/ssh-key-VM-ace.key V1034023-01.zip [email protected]:/home/opc V1034023-01.zip

We will unzip the software with ZDM user:

[opc@zdm-host ~]$ sudo cp -p /home/opc/V1034023-01.zip /u01/app/zdmdownload/ [opc@zdm-host ~]$ sudo chown zdmuser:zdm /u01/app/zdmdownload/V1034023-01.zip [opc@zdm-host ~]$ sudo su - zdmuser [zdmuser@zdm-host ~]$ cd /u01/app/zdmdownload [zdmuser@zdm-host zdmdownload]$ unzip -q V1034023-01.zip [zdmuser@zdm-host zdmdownload]$ ls -ltrh total 790M drwxr-xr-x. 3 zdmuser zdm 4.0K Feb 7 18:13 zdm21.4 -rw-r--r--. 1 zdmuser zdm 790M Mar 13 13:40 V1034023-01.zip

And we can now install ZDM software:

[zdmuser@zdm-host zdmdownload]$ cd zdm21.4

[zdmuser@zdm-host zdm21.4]$ ./zdminstall.sh setup oraclehome=/u01/app/zdmhome oraclebase=/u01/app/zdmbase ziploc=/u01/app/zdmdownload/zdm21.4/zdm_home.zip -zdm

ZDM kit home: /u01/app/zdmdownload/zdm21.4

/u01/app/zdmdownload/zdm21.4

---------------------------------------

Validating zip file...

---------------------------------------

25 02-07-2023 18:11 rhp/zdm.build

---------------------------------------

Unzipping shiphome to home...

---------------------------------------

Unzipping shiphome...

Shiphome unzipped successfully..

---------------------------------------

##### Performing GridHome Software Only Installation #####

---------------------------------------

Installation log location: /u01/app/zdmbase/crsdata/zdm-host/rhp/logs/runInstaller_1678715692.out

making dir /u01/app/zdmbase/crsdata/zdm-host/rhp/conf

---------------------------------------

Generating Preference file

---------------------------------------

/u01/app/zdmbase/crsdata/zdm-host/rhp/conf/rhp.pref

Using port 8899 for MySQL

---------------------------------------

Generating Root Certificate

---------------------------------------

Cluster root certificate generated successfully.

---------------------------------------

Generating CA CERTS file

---------------------------------------

spawn /u01/app/zdmhome/bin/crskeytoolctl -copycacerts -filestore /u01/app/zdmbase/crsdata/zdm-host/security

Enter JRE cacerts truststore password:

JRE cacerts copied to file [/u01/app/zdmbase/crsdata/zdm-host/security/cacerts].

---------------------------------------

Generating nogi.enabled file

---------------------------------------

nogi.enabled file generated sucessfully

---------------------------------------

Generating standalone_config.properties file

---------------------------------------

Setting base folder permissions

---------------------------------------

Copying service script to bin folder in Oracle Home

label_date is: 221207.9

---------------------------------------

Storing to wallet

---------------------------------------

cacerts crskeytoolctl.log cwallet.sso cwallet.sso.lck

---------------------------------------

Generating random password

---------------------------------------

-rw-------. 1 zdmuser zdm 4333 Mar 13 13:55 /u01/app/zdmbase/crsdata/zdm-host/security/cwallet.sso

-rw-------. 1 zdmuser zdm 4333 Mar 13 13:55 /u01/app/zdmbase/crsdata/zdm-host/security/cwallet.sso

---------------------------------------

Setting up MySQL...

---------------------------------------

spawn /u01/app/zdmhome/mysql/server/bin/mysqladmin --defaults-file=/u01/app/zdmbase/crsdata/zdm-host/rhp/conf/my.cnf -u root -p ping

---------------------------------------

Storing to wallet

---------------------------------------

cacerts crskeytoolctl.log cwallet.sso cwallet.sso.lck

---------------------------------------

Generating random password

---------------------------------------

-rw-------. 1 zdmuser zdm 4445 Mar 13 13:55 /u01/app/zdmbase/crsdata/zdm-host/security/cwallet.sso

-rw-------. 1 zdmuser zdm 4445 Mar 13 13:55 /u01/app/zdmbase/crsdata/zdm-host/security/cwallet.sso

spawn /u01/app/zdmhome/mysql/server/bin/mysql --socket=/u01/app/zdmbase/crsdata/zdm-host/rhp/mysql/metadata/mysql.sock -u root

---------------------------------------

Creating MySQL DB and user...

---------------------------------------

spawn /u01/app/zdmhome/mysql/server/bin/mysql --socket=/u01/app/zdmbase/crsdata/zdm-host/rhp/mysql/metadata/mysql.sock -u root -p -e CREATE DATABASE IF NOT EXISTS GHSUSER21;

spawn /u01/app/zdmhome/mysql/server/bin/mysql --socket=/u01/app/zdmbase/crsdata/zdm-host/rhp/mysql/metadata/mysql.sock -u root -p

spawn /u01/app/zdmhome/mysql/server/bin/mysql --socket=/u01/app/zdmbase/crsdata/zdm-host/rhp/mysql/metadata/mysql.sock -u root -p -e GRANT ALTER, CREATE, CREATE TEMPORARY TABLES, CREATE VIEW, DELETE, DROP, INDEX, INSERT, LOCK TABLES, REFERENCES, SELECT, SHOW VIEW, UPDATE ON GHSUSER21.* TO 'GHSUSER21'@'localhost';

current node is active node

spawn /u01/app/zdmhome/mysql/server/bin/mysqladmin --defaults-file=/u01/app/zdmbase/crsdata/zdm-host/rhp/conf/my.cnf -u root -p shutdown

---------------------------------------

Adding Certs to ZDM

---------------------------------------

ZDM service setup finished successfully...

We can start ZDM service:

[zdmuser@zdm-host ~]$ /u01/app/zdmhome/bin/zdmservice start

No instance detected, starting zdmservice

spawn /u01/app/zdmhome/mysql/server/bin/mysqladmin --defaults-file=/u01/app/zdmbase/crsdata/zdm-host/rhp/conf/my.cnf -u root -p ping

WARNING: oracle.jwc.jmx does not exist in the configuration file. It will be TRUE by default.

[jwcctl debug] Environment ready to start JWC

[jwcctl debug] Return code of initialization: [0]

[jwcctl debug] ... BEGIN_DEBUG [Action= start] ...

Start JWC

[jwcctl debug] Loading configuration file: /u01/app/zdmbase/crsdata/zdm-host/rhp/conf/jwc.properties

[jwcctl debug] oracle.jmx.login.credstore = CRSCRED

[jwcctl debug] oracle.jmx.login.args = DOMAIN=rhp CACHE_ENABLED=true CACHE_EXPIRATION=180

[jwcctl debug] oracle.rmi.url = service:jmx:rmi://{0}:{1,number,#}/jndi/rmi://{0}:{1,number,#}/jmxrmi

[jwcctl debug] oracle.http.url = http://{0}:{1,number,#}/rhp/gridhome

[jwcctl debug] oracle.jwc.tls.clientauth = false

[jwcctl debug] oracle.jwc.tls.rmi.clientfactory = RELOADABLE

[jwcctl debug] oracle.jwc.lifecycle.start.log.fileName = JWCStartEvent.log

[jwcctl debug] oracle.jwc.http.connector.ssl.protocols = TLSv1.2,TLSv1.3

[jwcctl debug] Get JWC PIDs

[jwcctl debug] Done Getting JWC PIDs

[jwcctl debug] ... JWC containers not found ...

[jwcctl debug] Start command:-server -Xms2048M -Xmx4096M -Djava.awt.headless=true -Ddisable.checkForUpdate=true -Djava.util.logging.config.file=/u01/app/zdmbase/crsdata/zdm-host/rhp/conf/logging.properties -Djava.util.logging.manager=org.apache.juli.ClassLoaderLogManager -DTRACING.ENABLED=true -DTRACING.LEVEL=2 -Doracle.wlm.dbwlmlogger.logging.level=FINEST -Duse_scan_IP=true -Djava.rmi.server.hostname=localhost -Doracle.http.port=8898 -Doracle.jmx.port=8897 -Doracle.tls.enabled=false -Doracle.jwc.tls.http.enabled=false -Doracle.rhp.storagebase=/u01/app/zdmbase -Djava.security.manager -Djava.security.policy=/u01/app/zdmbase/crsdata/zdm-host/rhp/conf/catalina.policy -Djava.security.egd=file:/dev/urandom -Doracle.jwc.wallet.path=/u01/app/zdmbase/crsdata/zdm-host/security -Doracle.jmx.login.credstore=WALLET -Doracle.rest.enabled=false -Doracle.jmx.enabled=true -Dcatalina.home=/u01/app/zdmhome/tomcat -Dcatalina.base=/u01/app/zdmbase/crsdata/zdm-host/rhp -Djava.io.tmpdir=/u01/app/zdmbase/crsdata/zdm-host/rhp/temp -Doracle.home=/u01/app/zdmhome -Doracle.jwc.mode=STANDALONE -classpath /u01/app/zdmhome/jlib/cryptoj.jar:/u01/app/zdmhome/jlib/oraclepki.jar:/u01/app/zdmhome/jlib/osdt_core.jar:/u01/app/zdmhome/jlib/osdt_cert.jar:/u01/app/zdmhome/tomcat/lib/tomcat-juli.jar:/u01/app/zdmhome/tomcat/lib/bootstrap.jar:/u01/app/zdmhome/jlib/jwc-logging.jar org.apache.catalina.startup.Bootstrap start

[jwcctl debug] Get JWC PIDs

[jwcctl debug] Done Getting JWC PIDs

[jwcctl debug] ... JWC Container (pid=29786) ...

[jwcctl debug] ... JWC Container running (pid=29786) ...

[jwcctl debug] Check command:-Djava.net.preferIPv6Addresses=true -Dcatalina.base=/u01/app/zdmbase/crsdata/zdm-host/rhp -Doracle.wlm.dbwlmlogger.logging.level=FINEST -Doracle.jwc.client.logger.file.name=/u01/app/zdmbase/crsdata/zdm-host/rhp/logs/jwc_checker_stdout_err_%g.log -Doracle.jwc.client.logger.file.number=10 -Doracle.jwc.client.logger.file.size=1048576 -Doracle.jwc.wallet.path=/u01/app/zdmbase/crsdata/zdm-host/security -Doracle.jmx.login.credstore=WALLET -Doracle.tls.enabled=false -Doracle.jwc.tls.http.enabled=false -classpath /u01/app/zdmhome/jlib/jwc-logging.jar:/u01/app/zdmhome/jlib/jwc-security.jar:/u01/app/zdmhome/jlib/jwc-client.jar:/u01/app/zdmhome/jlib/jwc-cred.jar:/u01/app/zdmhome/jlib/srvm.jar:/u01/app/zdmhome/jlib/srvmhas.jar:/u01/app/zdmhome/jlib/cryptoj.jar:/u01/app/zdmhome/jlib/oraclepki.jar:/u01/app/zdmhome/jlib/osdt_core.jar:/u01/app/zdmhome/jlib/osdt_cert.jar:/u01/app/zdmhome/tomcat/lib/tomcat-juli.jar oracle.cluster.jwc.tomcat.client.JWCChecker localhost 8898 -1

[jwcctl debug] ... JWC Container is ready ...

[jwcctl debug] ... START - Return code = 0 ...

[jwcctl debug] ... END_DEBUG [Action=start] ...

[jwcctl debug] Return code of AGENT: [0]

Return code is 0

Server started successfully.

And check its status:

[zdmuser@zdm-host ~]$ /u01/app/zdmhome/bin/zdmservice status --------------------------------------- Service Status --------------------------------------- Running: true Tranferport: Conn String: jdbc:mysql://localhost:8899/ RMI port: 8897 HTTP port: 8898 Wallet path: /u01/app/zdmbase/crsdata/zdm-host/security

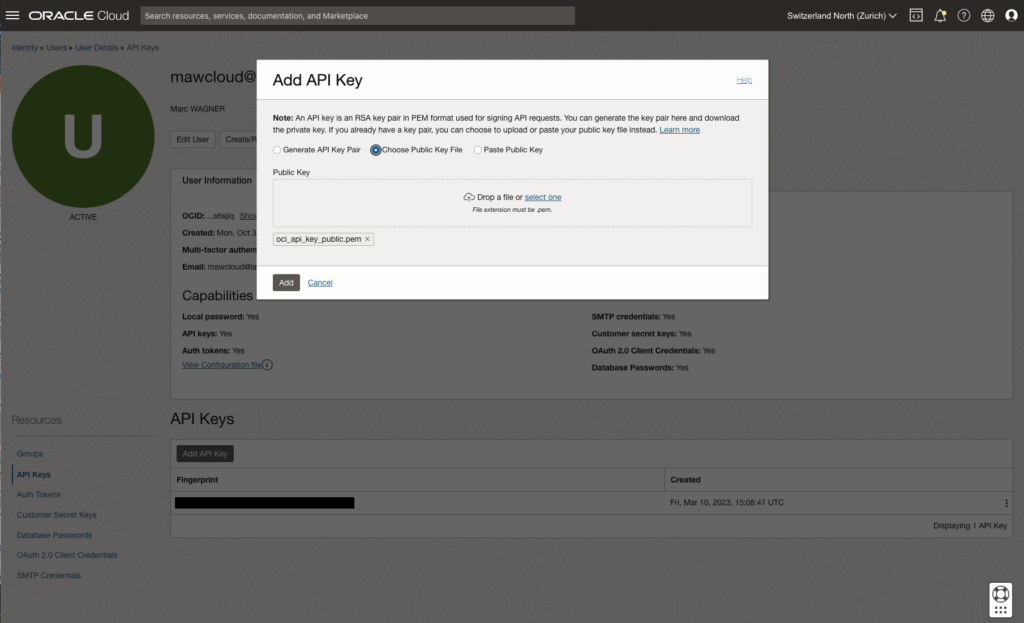

Create API keys

On the ZDM host, we will create API keys that we will upload to our OCI account. These keys will be used for ZDM to be able run OCI commands.

Generate API keys:

[zdmuser@zdm-host ~]$ cd /u01/app/zdmhome [zdmuser@zdm-host zdmhome]$ mkdir .oci [zdmuser@zdm-host zdmhome]$ cd .oci [zdmuser@zdm-host .oci]$ openssl genrsa -out /u01/app/zdmhome/.oci/oci_api_key.pem 2048 Generating RSA private key, 2048 bit long modulus ......................................+++ ..................+++ e is 65537 (0x10001) [zdmuser@zdm-host .oci]$ ls -al total 8 drwxr-xr-x. 2 zdmuser zdm 29 Mar 13 14:13 . drwxr-xr-x. 50 zdmuser zdm 4096 Mar 13 14:12 .. -rw-r--r--. 1 zdmuser zdm 1679 Mar 13 14:13 oci_api_key.pem [zdmuser@zdm-host .oci]$ openssl rsa -pubout -in /u01/app/zdmhome/.oci/oci_api_key.pem -out /u01/app/zdmhome/.oci/oci_api_key_public.pem writing RSA key [zdmuser@zdm-host .oci]$ ls -al total 12 drwxr-xr-x. 2 zdmuser zdm 59 Mar 13 14:13 . drwxr-xr-x. 50 zdmuser zdm 4096 Mar 13 14:12 .. -rw-r--r--. 1 zdmuser zdm 1679 Mar 13 14:13 oci_api_key.pem -rw-r--r--. 1 zdmuser zdm 451 Mar 13 14:13 oci_api_key_public.pem

API keys will be downloaded into the OCI account as described in the next pictures.

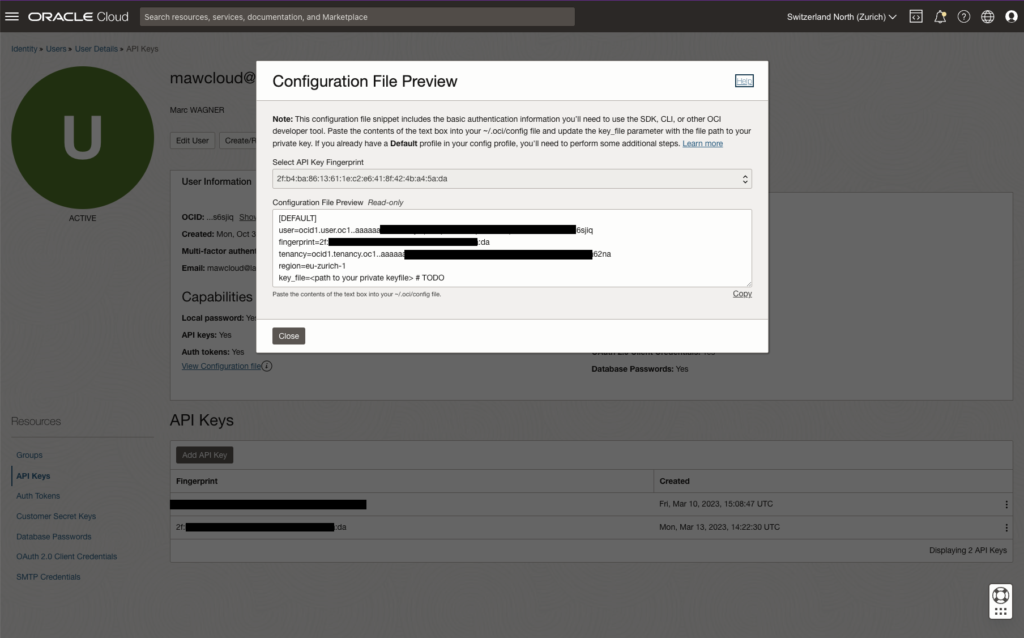

OCI config file on ZDM host

On the ZDM host for the zdmuser and opc user we will create the OCI config file to be able to run OCI commands directly from the ZDM host.

For ZDM user:

[zdmuser@zdm-host ~]$ cd /u01/app/zdmhome/.oci [zdmuser@zdm-host .oci]$ vi config [zdmuser@zdm-host .oci]$ cat config [DEFAULT] user=ocid1.user.oc1..aaaaaaaadl********************************************6sjiq fingerprint=2f:**:**:**:**:**:**:**:**:**:**:**:**:**:**:da tenancy=ocid1.tenancy.oc1..aaaaaaa********************************************62na region=eu-zurich-1 key_file=/u01/app/zdmhome/.oci/oci_api_key.pem

For opc user:

[opc@zdm-host ~]$ cd ~ [opc@zdm-host ~]$ mkdir .oci [opc@zdm-host ~]$ cp /u01/app/zdmhome/.oci/config /home/opc/.oci [opc@zdm-host ~]$ cp /u01/app/zdmhome/.oci/oci_api_key.pem /home/opc/.oci [opc@zdm-host ~]$ cp /u01/app/zdmhome/.oci/oci_api_key_public.pem /home/opc/.oci [opc@zdm-host ~]$ cd .oci [opc@zdm-host .oci]$ vi config [opc@zdm-host .oci]$ cat config [DEFAULT] user=ocid1.user.oc1..aaaaaaaadl********************************************6sjiq fingerprint=2f:**:**:**:**:**:**:**:**:**:**:**:**:**:**:da tenancy=ocid1.tenancy.oc1..aaaaaaa********************************************62na region=eu-zurich-1 key_file=/u01/app/zdmhome/.oci/oci_api_key.pem

Now we can test oci commands:

[opc@zdm-host .oci]$ oci iam region list

WARNING: Permissions on /home/opc/.oci/config are too open.

To fix this please try executing the following command:

oci setup repair-file-permissions --file /home/opc/.oci/config

Alternatively to hide this warning, you may set the environment variable, OCI_CLI_SUPPRESS_FILE_PERMISSIONS_WARNING:

export OCI_CLI_SUPPRESS_FILE_PERMISSIONS_WARNING=True

WARNING: Permissions on /home/opc/.oci/oci_api_key.pem are too open.

To fix this please try executing the following command:

oci setup repair-file-permissions --file /home/opc/.oci/oci_api_key.pem

Alternatively to hide this warning, you may set the environment variable, OCI_CLI_SUPPRESS_FILE_PERMISSIONS_WARNING:

export OCI_CLI_SUPPRESS_FILE_PERMISSIONS_WARNING=True

{

"data": [

{

"key": "AMS",

"name": "eu-amsterdam-1"

},

...

...

...

And we can lock the api private key for zdmuser:

[opc@zdm-host .oci]$ sudo su - zdmuser Last login: Mon Mar 13 14:27:19 GMT 2023 on pts/0 [zdmuser@zdm-host ~]$ cd /u01/app/zdmhome/.oci [zdmuser@zdm-host .oci]$ chmod go-rwx /u01/app/zdmhome/.oci/oci_api_key.pem

Create RSA Keys

We will generate RSA keys from the ZDM user and add the public key into the authorized keys from the opc user.

From ZDM user:

[zdmuser@zdm-host ~]$ ssh-keygen Generating public/private rsa key pair. Enter file in which to save the key (/home/zdmuser/.ssh/id_rsa): Created directory '/home/zdmuser/.ssh'. Enter passphrase (empty for no passphrase): Enter same passphrase again: Your identification has been saved in /home/zdmuser/.ssh/id_rsa. Your public key has been saved in /home/zdmuser/.ssh/id_rsa.pub. The key fingerprint is: SHA256:fGfzD**************************vzQ zdmuser@zdm-host The key's randomart image is: +---[RSA 2048]----+ ... ... ... +----[SHA256]-----+ [zdmuser@zdm-host ~]$ ls -ltrh /home/zdmuser/.ssh total 8.0K -rw-r--r--. 1 zdmuser zdm 398 Mar 13 14:46 id_rsa.pub -rw-------. 1 zdmuser zdm 1.7K Mar 13 14:46 id_rsa

With OPC user:

[opc@zdm-host ~]$ sudo cat /home/zdmuser/.ssh/id_rsa.pub >> /home/opc/.ssh/authorized_keys

Source DB preparation

We need to make some actions on the database we would like to migrate.

streams_pool_size

The first one is to set streams_pool_size parameter. For offline logical migration, as we are doing here, we need to set this parameter to a minimum of 256MB-350MB to have an initial pool allocated for optimal Data Pump performance. For online logical migrations, we need to set it to at least 2 GB. We will set it to 2 GB. Having enough memory we will increase sga size as well.

oracle@srv-source-zdm-01:/home/oracle/ [CDB01] sqh SQL*Plus: Release 19.0.0.0.0 - Production on Wed Mar 15 11:51:43 2023 Version 19.17.0.0.0 Copyright (c) 1982, 2022, Oracle. All rights reserved. Connected to: Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production Version 19.17.0.0.0 SQL> show parameter streams_pool_size NAME TYPE VALUE ------------------------------------ ----------- ------------------------------ streams_pool_size big integer 0 SQL> show parameter sga NAME TYPE VALUE ------------------------------------ ----------- ------------------------------ allow_group_access_to_sga boolean FALSE lock_sga boolean FALSE pre_page_sga boolean TRUE sga_max_size big integer 2G sga_min_size big integer 0 sga_target big integer 2000M unified_audit_sga_queue_size integer 1048576 SQL> alter system set sga_max_size=8G scope=spfile; System altered. SQL> alter system set sga_target=8G scope=spfile; System altered. SQL> shutdown immediate; Database closed. Database dismounted. ORACLE instance shut down. SQL> startup ORACLE instance started. Total System Global Area 8589931688 bytes Fixed Size 13874344 bytes Variable Size 587202560 bytes Database Buffers 7969177600 bytes Redo Buffers 19677184 bytes Database mounted. Database opened. SQL> alter system set streams_pool_size=2g scope=both; System altered. SQL> show parameter streams_pool_size NAME TYPE VALUE ------------------------------------ ----------- ------------------------------ streams_pool_size big integer 2G

Migration orcl_user user

We need to create a migration user, named orcl_user, on the PDB1 we are going to migrate.

oracle@srv-source-zdm-01:~/ [CDB01 (PDB1)] sqh SQL*Plus: Release 19.0.0.0.0 - Production on Wed Mar 15 12:14:43 2023 Version 19.17.0.0.0 Copyright (c) 1982, 2022, Oracle. All rights reserved. Connected to: Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production Version 19.17.0.0.0 SQL> show con_name CON_NAME ------------------------------ PDB1 SQL> create user orcl_user identified by manager; User created. SQL> grant create user to orcl_user; Grant succeeded. SQL> grant create session to orcl_user; Grant succeeded. SQL> grant resource to orcl_user; Grant succeeded. SQL> grant create table to orcl_user; Grant succeeded. SQL> grant unlimited tablespace to orcl_user; Grant succeeded. SQL> grant create any directory to orcl_user; Grant succeeded. SQL> grant dba to orcl_user; Grant succeeded.

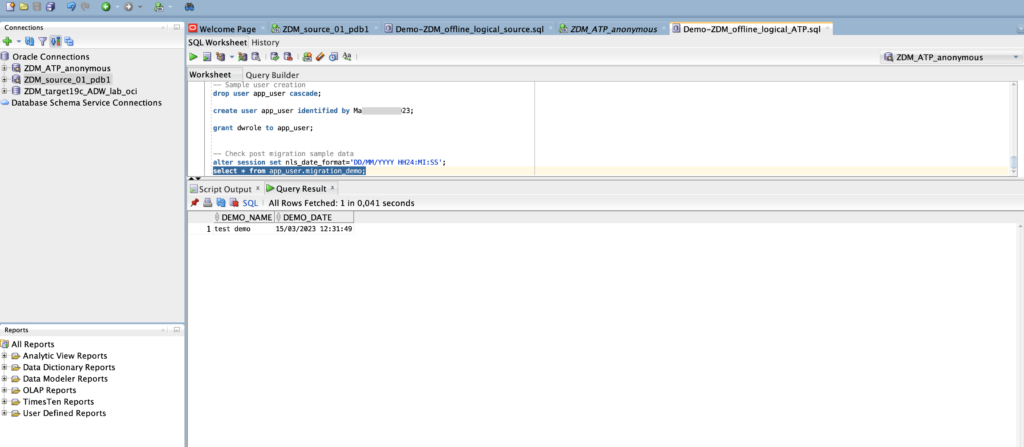

Sample user and data

We will create a sample user, sample table with some data on the PDB that we will check in the ADB after migration. This would show that the migration has been performed.

Create app_user and give appropriate permissions:

SQL> create user app_user identified by manager; User created. SQL> grant create user to app_user; Grant succeeded. SQL> grant create session to app_user; Grant succeeded. SQL> grant resource to app_user; Grant succeeded. SQL> grant create table to app_user; Grant succeeded. SQL> grant unlimited tablespace to app_user; Grant succeeded. SQL> grant create any directory to app_user; Grant succeeded.

Create sample table migration_demo:

oracle@srv-source-zdm-01:~/ [CDB01 (CDB$ROOT)] sqh SQL*Plus: Release 19.0.0.0.0 - Production on Wed Mar 15 12:27:44 2023 Version 19.17.0.0.0 Copyright (c) 1982, 2022, Oracle. All rights reserved. Connected to: Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production Version 19.17.0.0.0 SQL> connect app_user@pdb1 Enter password: Connected. SQL> show con_name CON_NAME ------------------------------ PDB1 SQL> show user USER is "APP_USER" SQL> create table migration_demo (demo_name varchar (60), demo_date date); Table created.

Add sample data in the migration table:

SQL> alter session set nls_date_format='DD/MM/YYYY HH24:MI:SS';

Session altered.

SQL> insert into migration_demo values ('test demo', sysdate);

1 row created.

SQL> select * from migration_demo;

DEMO_NAME DEMO_DATE

------------------------ -------------------

test demo 15/03/2023 12:31:49

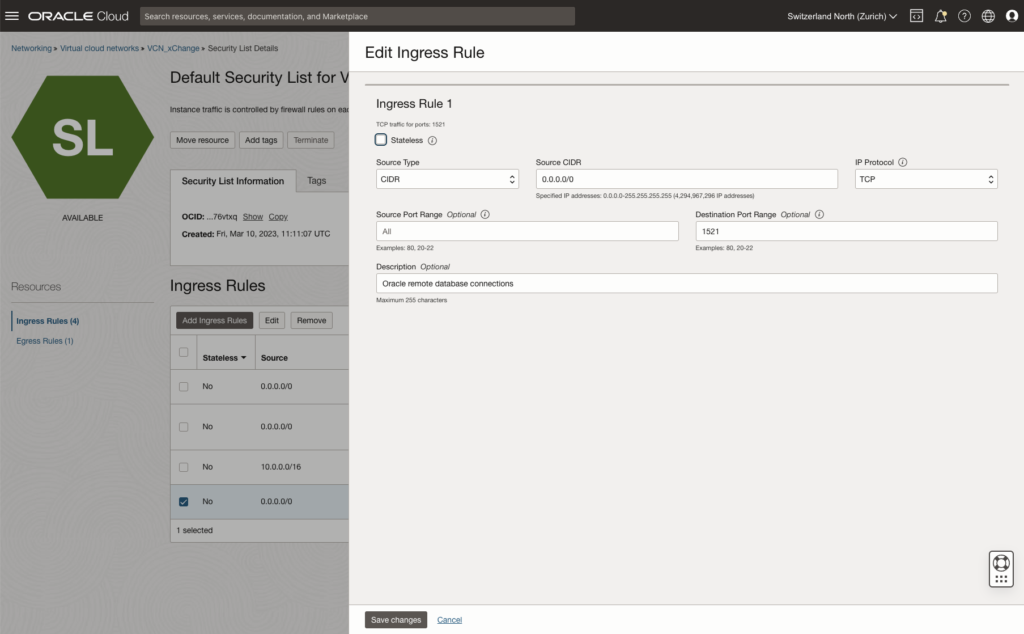

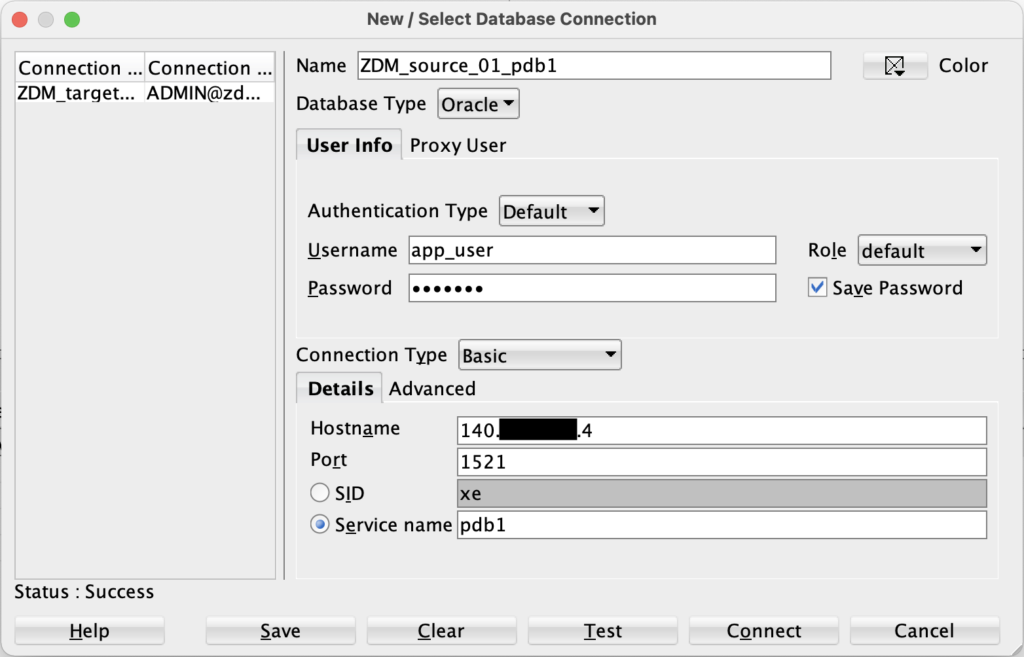

SQL Developer connection

We will configure a connection to the PDB1 on SQL Developer.

But first of all, we need to create appropriate ingress rule for port 1521. See next picture:

And we will configure and test SQL Developer connection. See next picture:

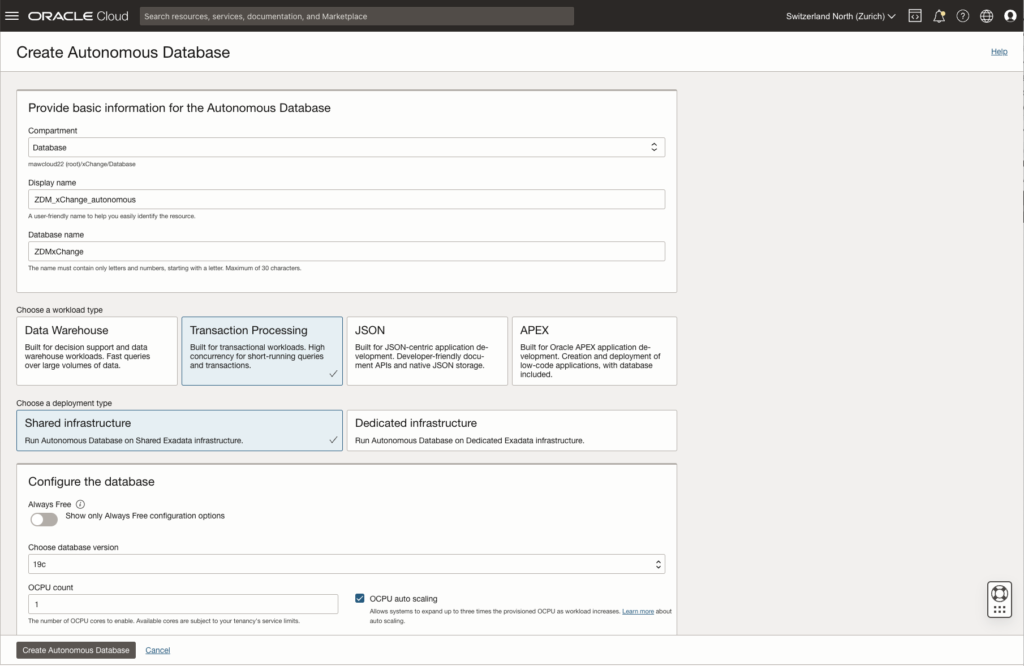

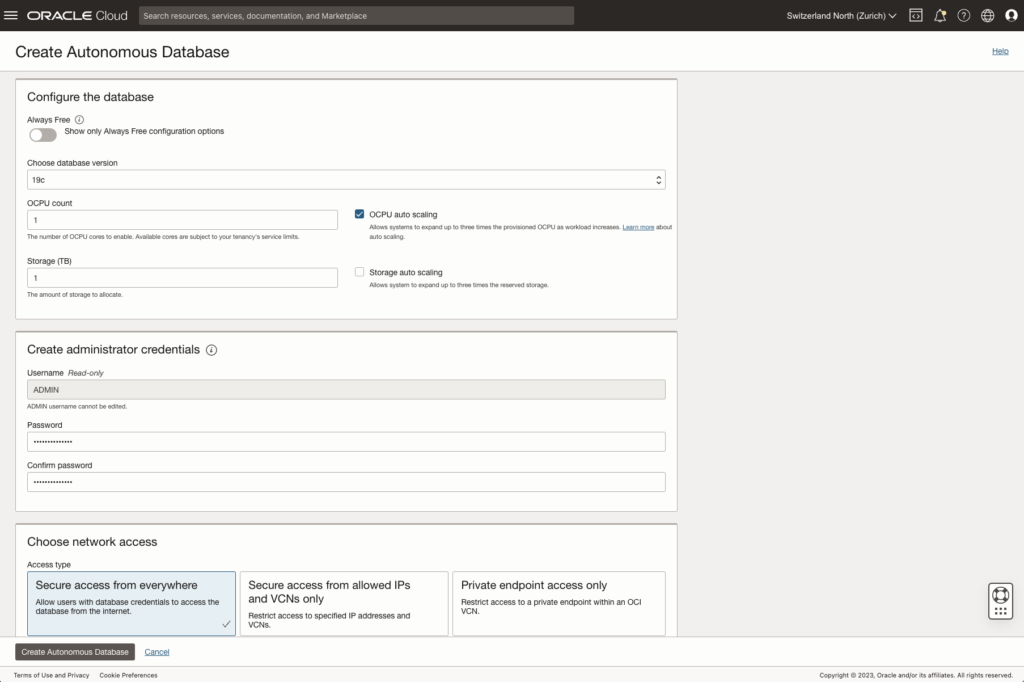

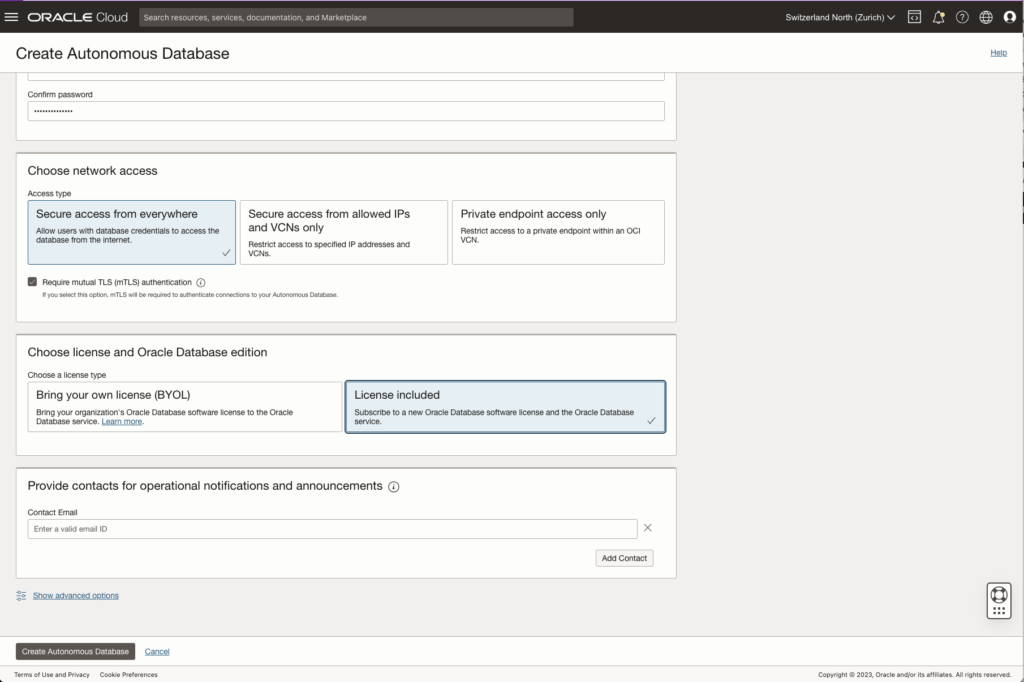

Provision target ATP (Autonomous Transaction Processing) database

We will create the ATP from the OCI GUI.

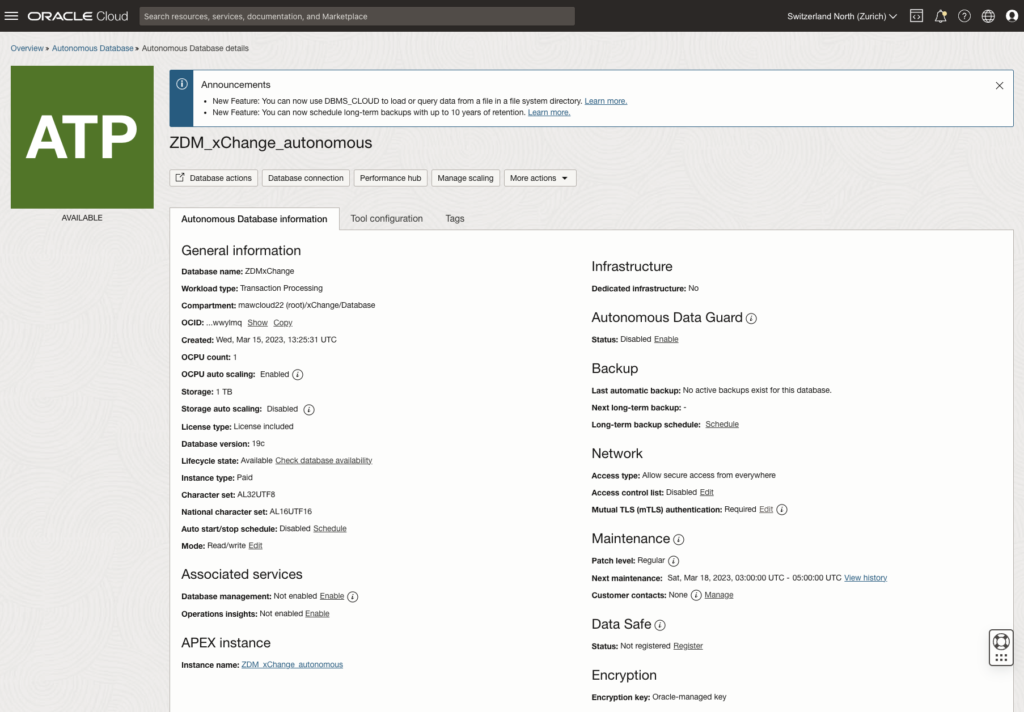

And we have our ATP database created and ready.

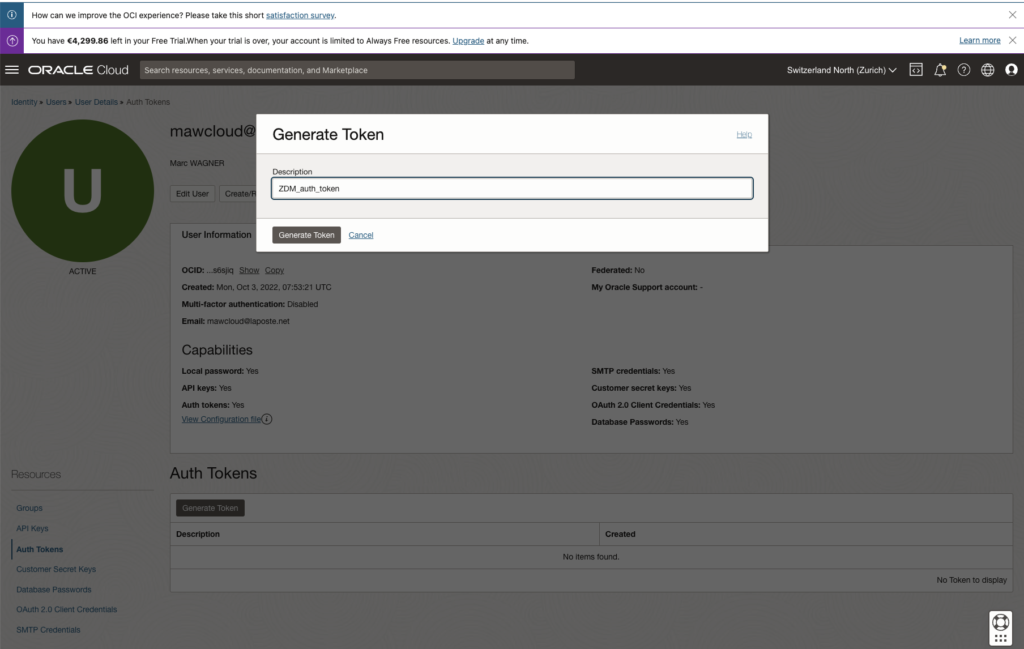

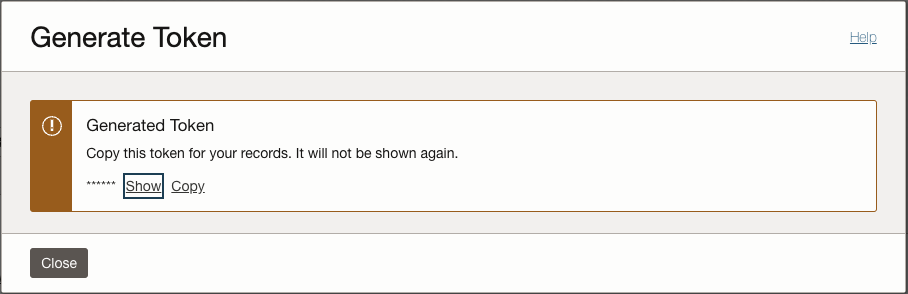

Create an auth token

We will create an auth token and store it in our keepass.

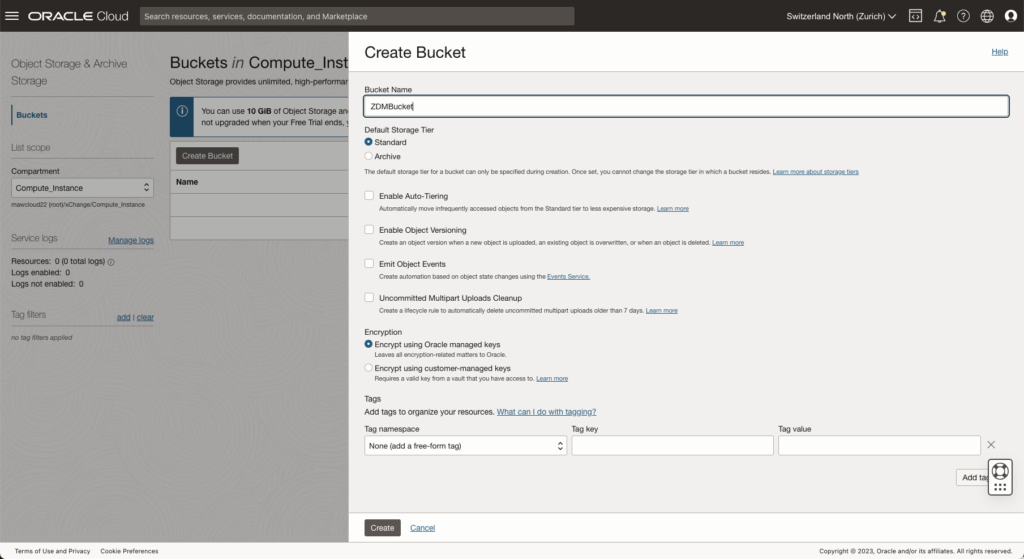

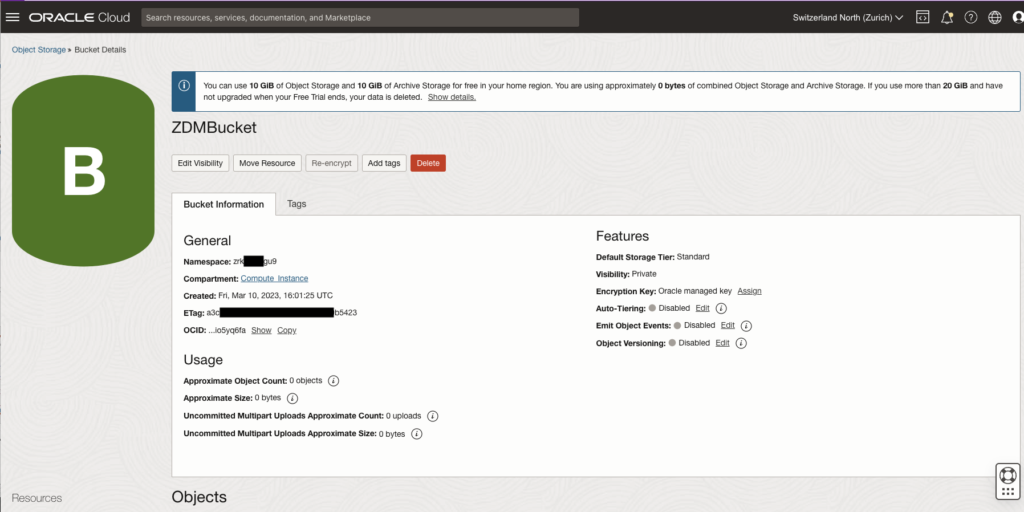

Create a bucket

We will create a bucket which will store the dump files created and used by the ZDM tool for the migration.

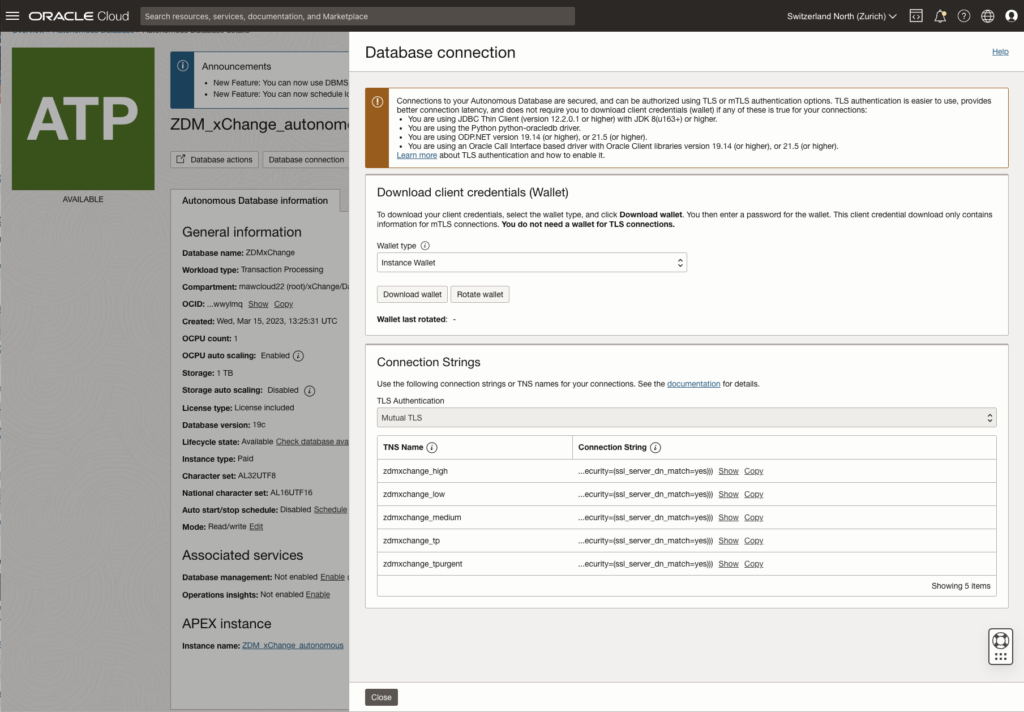

ATP connection with SQL Developer

We will configure a connection from SQL Developer to our target autonomous database.

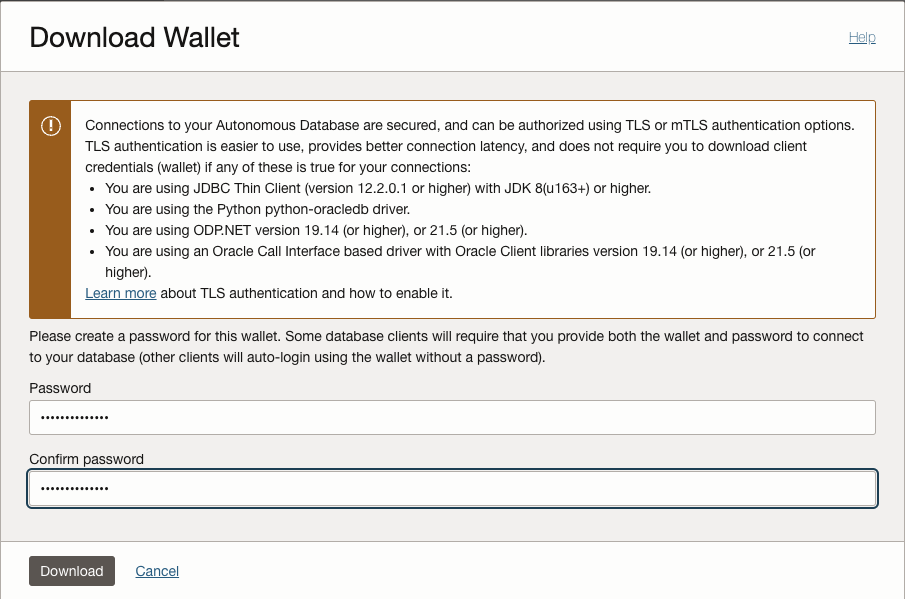

Let’s go in <Database Connection> to download the wallet for client connection.

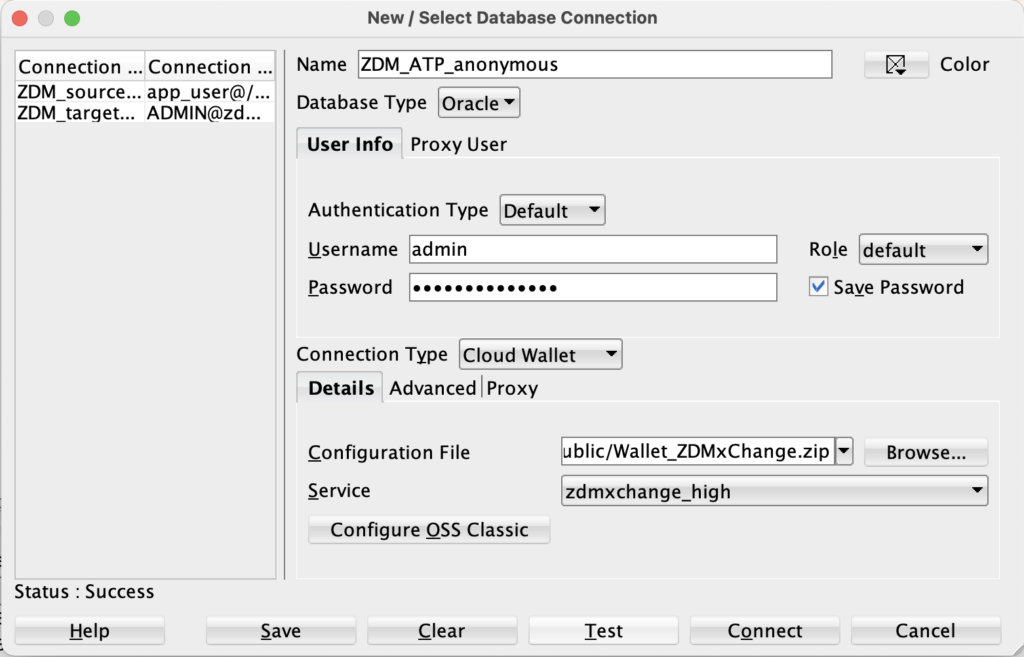

In SQL Developer load the wallet and test the connection. This one needs to be successful.

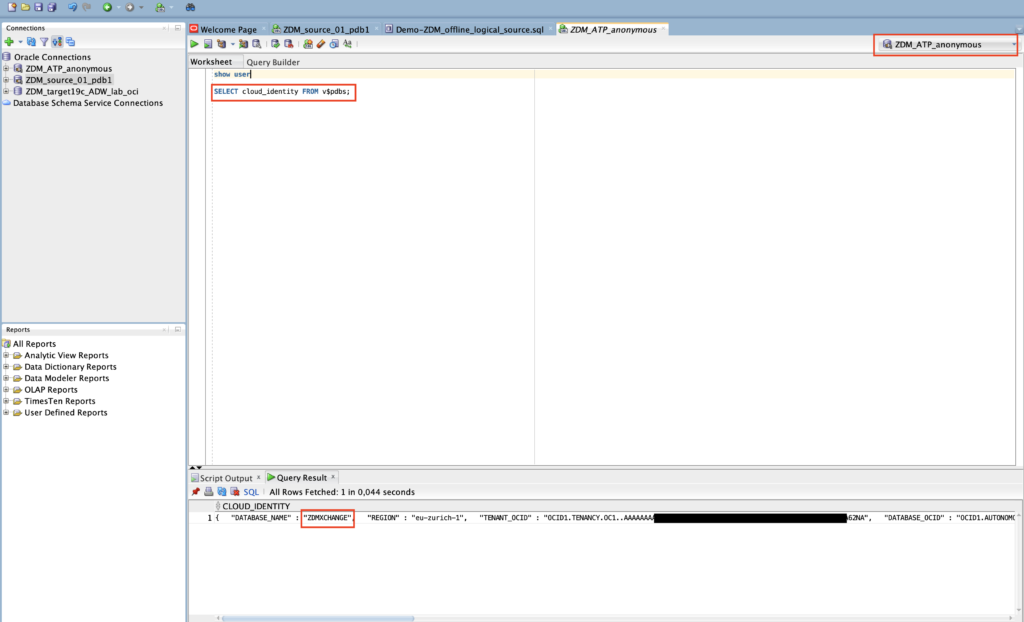

And check the connection.

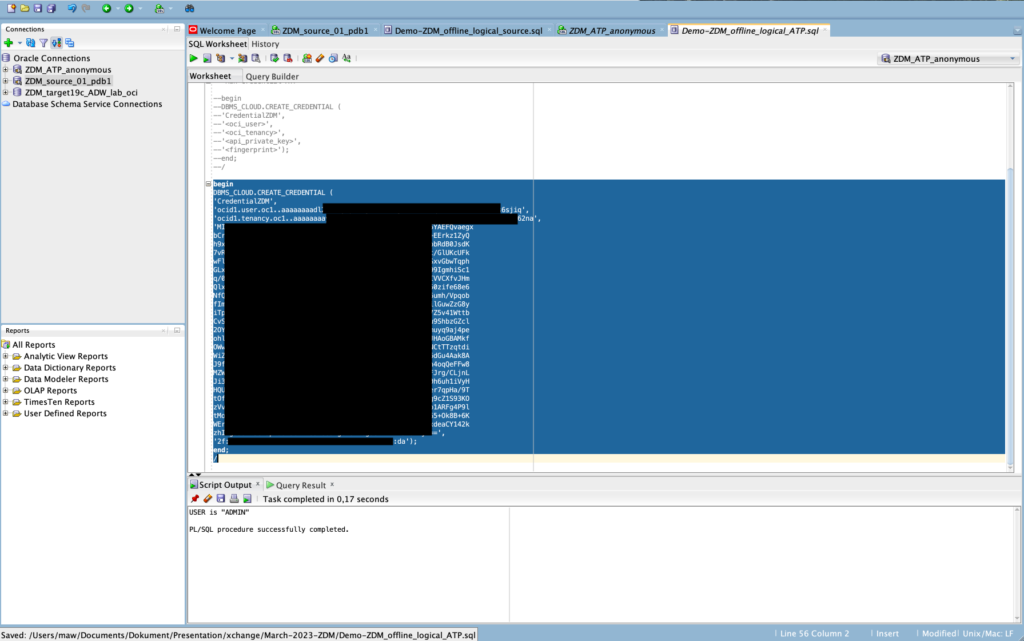

Create the credential in the ATP target database

We need to create the credential using the DBMS_CLOUD package. The command would be the following one:

begin

DBMS_CLOUD.CREATE_CREDENTIAL (

'CredentialZDM',

'<oci_user>',

'<oci_tenancy>',

'<api_private_key>',

'<fingerprint>');

end;

/

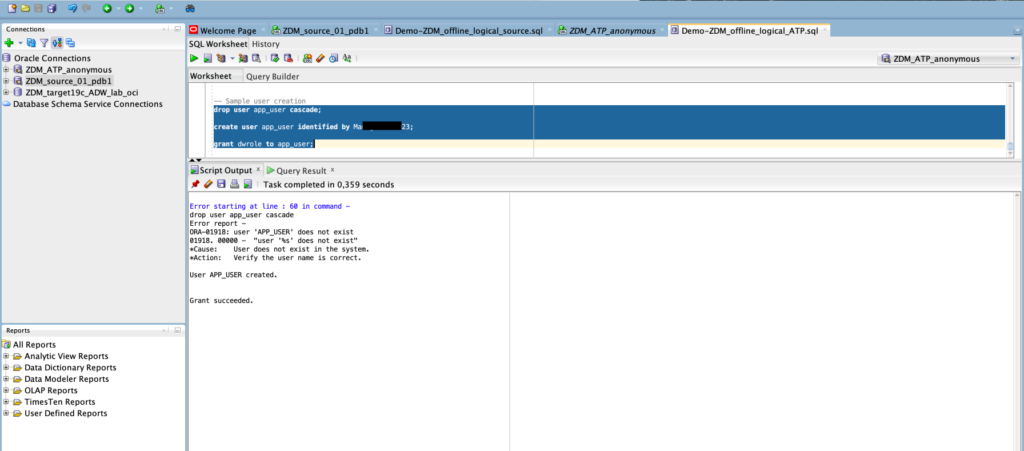

Create sample user in the target ATP database

We will create the sample user in the target database and provide him appropriate permissions.

ZDM template

We will now prepare the ZDM template for the migration. Knowing the both VM are not in the DMS we configured each /etc/hosts file accordingly.

We also need to configure appropriate RSA key to have got connection from ZDM host to the source database:

[opc@zdm-host ~]$ cd .ssh [opc@zdm-host .ssh]$ ls -l total 8 -rw-------. 1 opc opc 798 Mar 13 14:48 authorized_keys -rw-r--r--. 1 opc opc 362 Mar 15 16:05 known_hosts [opc@zdm-host .ssh]$ vi ssh-key-VM-YAK-ace.key [opc@zdm-host .ssh]$ chmod 600 ssh-key-VM-YAK-ace.key

And we test the connection:

[opc@zdm-host .ssh]$ ssh -i ssh-key-VM-YAK-ace.key opc@srv-source-zdm-01 Activate the web console with: systemctl enable --now cockpit.socket Last login: Wed Mar 15 12:11:33 2023 from 91.167.48.160 [opc@srv-source-zdm-01 ~]$

We need to test if the port 1521 on the source DB can be reached from the ZDM host:

[opc@zdm-host ~]$ sudo nc -vz srv-source-zdm-01 1521 Ncat: Version 7.50 ( https://nmap.org/ncat ) Ncat: Connected to 10.0.0.234:1521. Ncat: 0 bytes sent, 0 bytes received in 0.01 seconds.

We can now configure the template:

[zdmuser@zdm-host ~]$ mkdir migration [zdmuser@zdm-host ~]$ cp -p /u01/app/zdmhome/rhp/zdm/template/zdm_logical_template.rsp ~/migration/zdm_logical_off_to_adb.rsp [zdmuser@zdm-host migration]$ vi zdm_logical_off_to_adb.rsp [zdmuser@zdm-host migration]$ cat zdm_logical_off_to_adb.rsp MIGRATION_METHOD=OFFLINE_LOGICAL DATA_TRANSFER_MEDIUM=OSS TARGETDATABASE_OCID=ocid1.autonomousdatabase.oc1.eu-zurich-1.an5heljrv****************************************************lmq TARGETDATABASE_ADMINUSERNAME=ADMIN SOURCEDATABASE_ADMINUSERNAME=orcl_user SOURCEDATABASE_CONNECTIONDETAILS_HOST=srv-source-zdm-01 SOURCEDATABASE_CONNECTIONDETAILS_PORT=1521 SOURCEDATABASE_CONNECTIONDETAILS_SERVICENAME=pdb1 OCIAUTHENTICATIONDETAILS_USERPRINCIPAL_TENANTID=ocid1.tenancy.oc1..aaaaaaaay****************************************************62na OCIAUTHENTICATIONDETAILS_USERPRINCIPAL_USERID=ocid1.user.oc1..aaaaaaaadl****************************************************6sjiq OCIAUTHENTICATIONDETAILS_USERPRINCIPAL_FINGERPRINT=2f:**:**:**:**:**:**:**:**:**:**:**:**:**:**:da OCIAUTHENTICATIONDETAILS_USERPRINCIPAL_PRIVATEKEYFILE=/u01/app/zdmhome/.oci/oci_api_key.pem OCIAUTHENTICATIONDETAILS_REGIONID=eu-zurich-1 #TARGETDATABASE_CONNECTIONDETAILS_BASTIONDETAILS_PORT=22 #SOURCEDATABASE_CONNECTIONDETAILS_BASTIONDETAILS_PORT=22 #SOURCECONTAINERDATABASE_CONNECTIONDETAILS_BASTIONDETAILS_PORT=22 GOLDENGATESETTINGS_REPLICAT_MAPPARALLELISM=4 GOLDENGATESETTINGS_REPLICAT_MINAPPLYPARALLELISM=4 GOLDENGATESETTINGS_REPLICAT_MAXAPPLYPARALLELISM=50 GOLDENGATESETTINGS_ACCEPTABLELAG=30 DATAPUMPSETTINGS_JOBMODE=SCHEMA DATAPUMPSETTINGS_DELETEDUMPSINOSS=TRUE DATAPUMPSETTINGS_FIXINVALIDOBJECTS=TRUE DATAPUMPSETTINGS_DATAPUMPPARAMETERS_ENCRYPTION=ALL DATAPUMPSETTINGS_DATAPUMPPARAMETERS_TABLEEXISTSACTION=TRUNCATE DATAPUMPSETTINGS_DATAPUMPPARAMETERS_IMPORTPARALLELISMDEGREE=1 DATAPUMPSETTINGS_DATAPUMPPARAMETERS_EXPORTPARALLELISMDEGREE=1 DATAPUMPSETTINGS_DATAPUMPPARAMETERS_EXCLUDETYPELIST=user DATAPUMPSETTINGS_DATABUCKET_NAMESPACENAME=zrk0iitcogu9 DATAPUMPSETTINGS_DATABUCKET_BUCKETNAME=ZDMBucket DATAPUMPSETTINGS_EXPORTDIRECTORYOBJECT_NAME=DB_EXP_DR DATAPUMPSETTINGS_EXPORTDIRECTORYOBJECT_PATH=/u01/app/oracle/export DATAPUMPSETTINGS_CREATEAUTHTOKEN=FALSE DATAPUMPSETTINGS_OMITENCRYPTIONCLAUSE=TRUE DATAPUMPSETTINGS_SECUREFILELOB=TRUE DATAPUMPSETTINGS_SKIPDEFAULTTRANSFORM=FALSE EXCLUDEOBJECTS-1=owner:ORCL_USER, objectName:.* EXCLUDEOBJECTS-2=owner:PDBADMIN, objectName:.* DUMPTRANSFERDETAILS_PARALLELCOUNT=3 DUMPTRANSFERDETAILS_RETRYCOUNT=3 DUMPTRANSFERDETAILS_RSYNCAVAILABLE=FALSE DUMPTRANSFERDETAILS_SOURCE_USEOCICLI=FALSE DUMPTRANSFERDETAILS_TARGET_USEOCICLI=FALSE

Run the migration with ZDM

We are now ready to run the migration with ZDM.

We will use the eval option to check the configuration before really running the migration.

Let’s try it!

[zdmuser@zdm-host ~]$ /u01/app/zdmhome/bin/zdmcli migrate database -rsp /home/zdmuser/migration/zdm_logical_off_to_adb.rsp -sourcenode srv-source-zdm-01 -srcauth zdmauth -srcarg1 user:opc -srcarg2 identity_file:/home/zdmuser/.ssh/ssh-key-VM-YAK-ace.key -srcarg3 sudo_location:/bin/sudo -sourcedb CDB01 -eval zdm-host.sub03101110460.vcnxchange.oraclevcn.com: Audit ID: 21 Enter source database administrative user "orcl_user" password: Enter target database administrative user "ADMIN" password: Enter Data Pump encryption password: Operation "zdmcli migrate database" scheduled with the job ID "4".

Let’s query and check the job:

zdmuser@zdm-host ~]$ /u01/app/zdmhome/bin/zdmcli query job -jobid 4 zdm-host.sub03101110460.vcnxchange.oraclevcn.com: Audit ID: 26 Job ID: 4 User: zdmuser Client: zdm-host Job Type: "EVAL" Scheduled job command: "zdmcli migrate database -rsp /home/zdmuser/migration/zdm_logical_off_to_adb.rsp -sourcenode srv-source-zdm-01 -srcauth zdmauth -srcarg1 user:opc -srcarg2 identity_file:/home/zdmuser/.ssh/id_rsa -srcarg3 sudo_location:/bin/sudo -sourcedb CDB01 -eval" Scheduled job execution start time: 2023-03-15T16:59:31Z. Equivalent local time: 2023-03-15 16:59:31 Current status: FAILED Result file path: "/u01/app/zdmbase/chkbase/scheduled/job-4-2023-03-15-16:59:51.log" Metrics file path: "/u01/app/zdmbase/chkbase/scheduled/job-4-2023-03-15-16:59:51.json" Job execution start time: 2023-03-15 16:59:51 Job execution end time: 2023-03-15 17:00:05 Job execution elapsed time: 13 seconds ZDM_VALIDATE_TGT ...................... COMPLETED ZDM_VALIDATE_SRC ...................... FAILED ZDM_SETUP_SRC ......................... PENDING ZDM_PRE_MIGRATION_ADVISOR ............. PENDING ZDM_VALIDATE_DATAPUMP_SETTINGS_SRC .... PENDING ZDM_VALIDATE_DATAPUMP_SETTINGS_TGT .... PENDING ZDM_PREPARE_DATAPUMP_SRC .............. PENDING ZDM_DATAPUMP_ESTIMATE_SRC ............. PENDING ZDM_CLEANUP_SRC ....................... PENDING

The first attempt failed. Let’s check the log file:

[zdmuser@zdm-host scheduled]$ cat job-4-2023-03-15-16:59:51.log zdm-host: 2023-03-15T16:59:51.892Z : Starting zero downtime migrate operation ... zdm-host: 2023-03-15T16:59:51.899Z : Executing phase ZDM_VALIDATE_TGT zdm-host: 2023-03-15T16:59:51.903Z : Fetching details of Autonomous Database "ocid1.autonomousdatabase.oc1.eu-zurich-1.an5h***************************************************ylmq" zdm-host: 2023-03-15T16:59:52.779Z : Lifecycle state of OCI database : "Available" zdm-host: 2023-03-15T16:59:53.613Z : Type of OCI database : "Autonomous Transaction Processing - Serverless" zdm-host: 2023-03-15T16:59:54.449Z : Downloading credentials wallet for Autonomous Database "ocid1.autonomousdatabase.oc1.eu-zurich-1.an5hel***************************************************wwylmq" zdm-host: 2023-03-15T16:59:57.985Z : Connection string of Autonomous Database "ocid1.autonomousdatabase.oc1.eu-zurich-1.an5hel***************************************************lmq": "zdmxchange_high " zdm-host: 2023-03-15T16:59:58.066Z : Verifying configuration and status of target database "g9b10f83f9c09d6_zdmxchange_high.adb.oraclecloud.com" zdm-host: 2023-03-15T17:00:02.161Z : Global database name: G9B10F83F9C09D6_ZDMXCHANGE zdm-host: 2023-03-15T17:00:02.162Z : Target PDB name : G9B10F83F9C09D6_ZDMXCHANGE zdm-host: 2023-03-15T17:00:02.163Z : Database major version : 19 zdm-host: 2023-03-15T17:00:02.165Z : obtaining database G9B10F83F9C09D6_ZDMXCHANGE tablespace configuration details... zdm-host: 2023-03-15T17:00:02.805Z : Execution of phase ZDM_VALIDATE_TGT completed zdm-host: 2023-03-15T17:00:02.838Z : Executing phase ZDM_VALIDATE_SRC zdm-host: 2023-03-15T17:00:02.950Z : Verifying configuration and status of source database "pdb1" zdm-host: 2023-03-15T17:00:02.951Z : source database host srv-source-zdm-01 service pdb1 PRGZ-1141 : failed to verify configuration and status of Oracle database "srv-source-zdm-01:1521/pdb1" PRCZ-4001 : failed to execute command "/bin/sh" using the privileged execution plugin "zdmauth" on nodes "srv-source-zdm-01" within 120 seconds PRCZ-2006 : Unable to establish SSH connection to node "srv-source-zdm-01" to execute command "/bin/sh -c "if [ -f /u01/app/oracle/product/19_17_221018_JVM_v0/bin/oracle ]; then echo exists; fi"" No more authentication methods available

As we can understand, RSA key is missing for ZDM user. Let’s do the needful:

[opc@zdm-host .ssh]$ sudo cp -p ssh-key-VM-YAK-ace.key /home/zdmuser/.ssh [opc@zdm-host .ssh]$ sudo ls -l /home/zdmuser/.ssh total 12 -rw-------. 1 zdmuser zdm 1679 Mar 13 14:46 id_rsa -rw-r--r--. 1 zdmuser zdm 398 Mar 13 14:46 id_rsa.pub -rw-------. 1 opc opc 3247 Mar 15 16:07 ssh-key-VM-YAK-ace.key [opc@zdm-host .ssh]$ sudo chown zdmuser:zdm /home/zdmuser/.ssh/ssh-key-VM-YAK-ace.key [opc@zdm-host .ssh]$ sudo ls -l /home/zdmuser/.ssh total 12 -rw-------. 1 zdmuser zdm 1679 Mar 13 14:46 id_rsa -rw-r--r--. 1 zdmuser zdm 398 Mar 13 14:46 id_rsa.pub -rw-------. 1 zdmuser zdm 3247 Mar 15 16:07 ssh-key-VM-YAK-ace.key

And do a new try:

[zdmuser@zdm-host ~]$ /u01/app/zdmhome/bin/zdmcli migrate database -rsp /home/zdmuser/migration/zdm_logical_off_to_adb.rsp -sourcenode srv-source-zdm-01 -srcauth zdmauth -srcarg1 user:opc -srcarg2 identity_file:/home/zdmuser/.ssh/ssh-key-VM-YAK-ace.key -srcarg3 sudo_location:/bin/sudo -sourcedb CDB01 -eval zdm-host.sub03101110460.vcnxchange.oraclevcn.com: Audit ID: 27 Enter source database administrative user "orcl_user" password: Enter target database administrative user "ADMIN" password: Enter Data Pump encryption password: Operation "zdmcli migrate database" scheduled with the job ID "5".

We will check the new eval job:

[zdmuser@zdm-host ~]$ /u01/app/zdmhome/bin/zdmcli query job -jobid 5 zdm-host.sub03101110460.vcnxchange.oraclevcn.com: Audit ID: 31 Job ID: 5 User: zdmuser Client: zdm-host Job Type: "EVAL" Scheduled job command: "zdmcli migrate database -rsp /home/zdmuser/migration/zdm_logical_off_to_adb.rsp -sourcenode srv-source-zdm-01 -srcauth zdmauth -srcarg1 user:opc -srcarg2 identity_file:/home/zdmuser/.ssh/ssh-key-VM-YAK-ace.key -srcarg3 sudo_location:/bin/sudo -sourcedb CDB01 -eval" Scheduled job execution start time: 2023-03-15T17:24:11Z. Equivalent local time: 2023-03-15 17:24:11 Current status: FAILED Result file path: "/u01/app/zdmbase/chkbase/scheduled/job-5-2023-03-15-17:24:21.log" Metrics file path: "/u01/app/zdmbase/chkbase/scheduled/job-5-2023-03-15-17:24:21.json" Excluded objects file path: "/u01/app/zdmbase/chkbase/scheduled/job-5-filtered-objects-2023-03-15T17:24:39.619.json" Job execution start time: 2023-03-15 17:24:21 Job execution end time: 2023-03-15 17:25:21 Job execution elapsed time: 59 seconds ZDM_VALIDATE_TGT ...................... COMPLETED ZDM_VALIDATE_SRC ...................... COMPLETED ZDM_SETUP_SRC ......................... COMPLETED ZDM_PRE_MIGRATION_ADVISOR ............. COMPLETED ZDM_VALIDATE_DATAPUMP_SETTINGS_SRC .... FAILED ZDM_VALIDATE_DATAPUMP_SETTINGS_TGT .... PENDING ZDM_PREPARE_DATAPUMP_SRC .............. PENDING ZDM_DATAPUMP_ESTIMATE_SRC ............. PENDING ZDM_CLEANUP_SRC ....................... PENDING

Which is now failing on DATAPUMP SETTINGS check.

We will check the log file:

[zdmuser@zdm-host scheduled]$ pwd

/u01/app/zdmbase/chkbase/scheduled

[zdmuser@zdm-host scheduled]$ cat job-5-errormsg.json

[ {

"errorMsg" : "failed to validate specified database directory object path \"/u01/app/oracle/export\"",

"errId" : "PRGZ-1211 ",

"cause" : " An attempt to migrate a database was rejected because the validation of specified directory object to be used for storing Oracle Data Pump dump files failed.",

"action" : " Either specify a valid path required to create a new directory object or specify an existing directory object, and then resubmit the job."

}, {

"errorMsg" : "failed to operate in path \"/u01/app/oracle/export\" on node srv-source-zdm-01",

"errId" : "PRGZ-1212 ",

"cause" : " An attempt to migrate a database failed because the operation on specified directory path failed. The accompanying messages provided detailed failure information.",

"action" : " Review the accompanying messages, resolve the indicated problems, and then retry the operation."

} ]

The new failure is due to missing /u01/app/oracle/export folder. Let’s create it:

oracle@srv-source-zdm-01:~/ [rdbms19_17_221018_JVM_v0] mkdir /u01/app/oracle/export

Let’s try to evaluate the migration job a third time:

[zdmuser@zdm-host ~]$ /u01/app/zdmhome/bin/zdmcli migrate database -rsp /home/zdmuser/migration/zdm_logical_off_to_adb.rsp -sourcenode srv-source-zdm-01 -srcauth zdmauth -srcarg1 user:opc -srcarg2 identity_file:/home/zdmuser/.ssh/ssh-key-VM-YAK-ace.key -srcarg3 sudo_location:/bin/sudo -sourcedb CDB01 -eval zdm-host.sub03101110460.vcnxchange.oraclevcn.com: Audit ID: 32 Enter source database administrative user "orcl_user" password: Enter target database administrative user "ADMIN" password: Enter Data Pump encryption password: Operation "zdmcli migrate database" scheduled with the job ID "6".

We will query the new job:

[zdmuser@zdm-host ~]$ /u01/app/zdmhome/bin/zdmcli query job -jobid 6 zdm-host.sub03101110460.vcnxchange.oraclevcn.com: Audit ID: 35 Job ID: 6 User: zdmuser Client: zdm-host Job Type: "EVAL" Scheduled job command: "zdmcli migrate database -rsp /home/zdmuser/migration/zdm_logical_off_to_adb.rsp -sourcenode srv-source-zdm-01 -srcauth zdmauth -srcarg1 user:opc -srcarg2 identity_file:/home/zdmuser/.ssh/ssh-key-VM-YAK-ace.key -srcarg3 sudo_location:/bin/sudo -sourcedb CDB01 -eval" Scheduled job execution start time: 2023-03-15T17:35:18Z. Equivalent local time: 2023-03-15 17:35:18 Current status: SUCCEEDED Result file path: "/u01/app/zdmbase/chkbase/scheduled/job-6-2023-03-15-17:35:21.log" Metrics file path: "/u01/app/zdmbase/chkbase/scheduled/job-6-2023-03-15-17:35:21.json" Excluded objects file path: "/u01/app/zdmbase/chkbase/scheduled/job-6-filtered-objects-2023-03-15T17:35:40.384.json" Job execution start time: 2023-03-15 17:35:21 Job execution end time: 2023-03-15 17:37:17 Job execution elapsed time: 1 minutes 55 seconds ZDM_VALIDATE_TGT ...................... COMPLETED ZDM_VALIDATE_SRC ...................... COMPLETED ZDM_SETUP_SRC ......................... COMPLETED ZDM_PRE_MIGRATION_ADVISOR ............. COMPLETED ZDM_VALIDATE_DATAPUMP_SETTINGS_SRC .... COMPLETED ZDM_VALIDATE_DATAPUMP_SETTINGS_TGT .... COMPLETED ZDM_PREPARE_DATAPUMP_SRC .............. COMPLETED ZDM_DATAPUMP_ESTIMATE_SRC ............. COMPLETED ZDM_CLEANUP_SRC ....................... COMPLETED

This one is now all good. We are ready to migrate!

[zdmuser@zdm-host ~]$ /u01/app/zdmhome/bin/zdmcli migrate database -rsp /home/zdmuser/migration/zdm_logical_off_to_adb.rsp -sourcenode srv-source-zdm-01 -srcauth zdmauth -srcarg1 user:opc -srcarg2 identity_file:/home/zdmuser/.ssh/ssh-key-VM-YAK-ace.key -srcarg3 sudo_location:/bin/sudo -sourcedb CDB01 zdm-host.sub03101110460.vcnxchange.oraclevcn.com: Audit ID: 36 Enter source database administrative user "orcl_user" password: Enter target database administrative user "ADMIN" password: Enter Data Pump encryption password: Operation "zdmcli migrate database" scheduled with the job ID "7".

Let’s check the migration job output:

[zdmuser@zdm-host ~]$ /u01/app/zdmhome/bin/zdmcli query job -jobid 7 zdm-host.sub03101110460.vcnxchange.oraclevcn.com: Audit ID: 39 Job ID: 7 User: zdmuser Client: zdm-host Job Type: "MIGRATE" Scheduled job command: "zdmcli migrate database -rsp /home/zdmuser/migration/zdm_logical_off_to_adb.rsp -sourcenode srv-source-zdm-01 -srcauth zdmauth -srcarg1 user:opc -srcarg2 identity_file:/home/zdmuser/.ssh/ssh-key-VM-YAK-ace.key -srcarg3 sudo_location:/bin/sudo -sourcedb CDB01" Scheduled job execution start time: 2023-03-15T17:51:43Z. Equivalent local time: 2023-03-15 17:51:43 Current status: SUCCEEDED Result file path: "/u01/app/zdmbase/chkbase/scheduled/job-7-2023-03-15-17:51:51.log" Metrics file path: "/u01/app/zdmbase/chkbase/scheduled/job-7-2023-03-15-17:51:51.json" Excluded objects file path: "/u01/app/zdmbase/chkbase/scheduled/job-7-filtered-objects-2023-03-15T17:52:13.614.json" Job execution start time: 2023-03-15 17:51:51 Job execution end time: 2023-03-15 17:56:08 Job execution elapsed time: 4 minutes 16 seconds ZDM_VALIDATE_TGT ...................... COMPLETED ZDM_VALIDATE_SRC ...................... COMPLETED ZDM_SETUP_SRC ......................... COMPLETED ZDM_PRE_MIGRATION_ADVISOR ............. COMPLETED ZDM_VALIDATE_DATAPUMP_SETTINGS_SRC .... COMPLETED ZDM_VALIDATE_DATAPUMP_SETTINGS_TGT .... COMPLETED ZDM_PREPARE_DATAPUMP_SRC .............. COMPLETED ZDM_DATAPUMP_ESTIMATE_SRC ............. COMPLETED ZDM_PREPARE_DATAPUMP_TGT .............. COMPLETED ZDM_DATAPUMP_EXPORT_SRC ............... COMPLETED ZDM_UPLOAD_DUMPS_SRC .................. COMPLETED ZDM_DATAPUMP_IMPORT_TGT ............... COMPLETED ZDM_POST_DATAPUMP_SRC ................. COMPLETED ZDM_POST_DATAPUMP_TGT ................. COMPLETED ZDM_POST_ACTIONS ...................... COMPLETED ZDM_CLEANUP_SRC ....................... COMPLETED [zdmuser@zdm-host ~]$

The migration has been executed successfully. Let’s check the log file. For a successful migration the jobs should end with:

Execution of phase ZDM_CLEANUP_SRC completedHere is the end of the log file:

[zdmuser@zdm-host scheduled]$ pwd /u01/app/zdmbase/chkbase/scheduled [zdmuser@zdm-host scheduled]$ cat job-7-2023-03-15-17:51:51.log zdm-host: 2023-03-15T17:51:52.056Z : Starting zero downtime migrate operation ... zdm-host: 2023-03-15T17:51:52.063Z : Executing phase ZDM_VALIDATE_TGT ... ... ... zdm-host: 2023-03-15T17:56:06.876Z : Executing phase ZDM_POST_ACTIONS zdm-host: 2023-03-15T17:56:06.887Z : Execution of phase ZDM_POST_ACTIONS completed zdm-host: 2023-03-15T17:56:06.906Z : Executing phase ZDM_CLEANUP_SRC zdm-host: 2023-03-15T17:56:07.137Z : Cleaning up ZDM on the source node srv-source-zdm-01 ... zdm-host: 2023-03-15T17:56:08.093Z : Execution of phase ZDM_CLEANUP_SRC completed

And we can see that we have our data migrated in the migration_demo table in the target ATP.

Summary

As we could see we could easily migrate a database from a VM in OCI to an ATP database using ZDM tool doing an offline logical database migration using a backup destination. I will, in some next blogs, test and demonstrate a physical online migration and a logical online migration with DB links.

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/MAW_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2024/01/HME_web.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/11/NIJ-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2024/04/SIT_web.png)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/JEW_web-min-scaled.jpg)