A couple of weeks ago, Microsoft announced the first public CTP version of next SQL Server version (CTP2). It is not a surprise, the SQL Server vNext becomes SQL Server 2019 and there are a plenty of enhancements as well as new features to discover. But for now, let’s start with likely one of my favorites: availability groups on Kurbernetes (aka K8s). As far I may see from customers and hear from my colleagues as well, we assist to a strong adoption of K8s with OpenShift as a main driver. I would not be surprised to see some SQL Server pods at customer shops in a near future, especially with the support of availability groups on K8s. From my opinion, that is definitely something that was missing in the previous for microservices architectures or not, for either quality or production environments.

Well, I decided to learn more about this new feature but let’s say this write-up concerns the CTP 2.0 version and chances are things will likely change in the future. So, don’t focus strictly on my words or commands I’m using in this blog post.

It is some time since I used the Service Azure Kubernetes (AKS) and I already wrote about it in a previous blog post. I used the same environment to deploy my first availability group on K8s. It was definitely an interesting experience because it involved getting technical skills about K8s infrastructure.

So, let’s set briefly the context with my K8s cluster on Azure that is composed of 3 agent nodes as shown below:

$ kubectl get nodes -o wide NAME STATUS ROLES AGE VERSION EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME aks-nodepool1-78763348-0 Ready agent 126d v1.9.6 <none> Ubuntu 16.04.4 LTS 4.13.0-1016-azure docker://1.13.1 aks-nodepool1-78763348-1 Ready agent 126d v1.9.6 <none> Ubuntu 16.04.4 LTS 4.13.0-1016-azure docker://1.13.1 aks-nodepool1-78763348-2 Ready agent 35d v1.9.6 <none> Ubuntu 16.04.5 LTS 4.15.0-1023-azure docker://1.13.1

I also used a custom namespace – agdev – to scope my availability group resources names.

$ kubectl get ns NAME STATUS AGE ag1 Terminating 23h agdev Active 10h azure-system Active 124d default Active 124d kube-public Active 124d kube-system Active 124d

Referring to the Microsoft documentation, the SQL secrets (including master key and SA password secrets) are ready for use:

$ kubectl get secret sql-secrets -n agdev NAME TYPE DATA AGE sql-secrets Opaque 2 1d $ kubectl describe secret sql-secrets -n agdev Name: sql-secrets Namespace: default Labels: <none> Annotations: <none> Type: Opaque Data ==== masterkeypassword: 14 bytes sapassword: 14 bytes

- The operator

The first component to deploy is the operator which is a very important component in this infrastructure and that builds upon the basic Kubernetes resource and controller concepts. Kubernetes has a very pluggable way to add your own logic in the form of a controller in addition of existing built-in controllers as the old fashion replication controller, the replica sets and deployments. All of them are suitable for stateless applications but the story is not the same when we have to deal with stateful systems like databases because those system require specific application domain knowledge to correctly scale, upgrade and reconfigure while protecting against data loss or unavailability. For example, how to deal correctly with availability groups during a crash of pod? If we think about it, the work doesn’t consist only in restarting the crashing pod but the system will also have to execute custom tasks in a background including electing of a new primary (aka leader election), ensuring a safe transition during the failover period to avoid split brain scenarios etc.

Deploying the mssql-operator includes the creation of a new pod:

$ kubectl get pods -n agdev -l app=mssql-operator NAME READY STATUS RESTARTS AGE mssql-operator-67447c4bd8-s6tbv 1/1 Running 0 11h

Let’s go further by getting more details about this pod:

$ kubectl describe pod -n agdev mssql-operator-67447c4bd8-s6tbv

Name: mssql-operator-67447c4bd8-s6tbv

Namespace: agdev

Node: aks-nodepool1-78763348-0/10.240.0.4

Start Time: Mon, 01 Oct 2018 08:12:47 +0200

Labels: app=mssql-operator

pod-template-hash=2300370684

Annotations: <none>

Status: Running

IP: 10.244.1.56

Controlled By: ReplicaSet/mssql-operator-67447c4bd8

Containers:

mssql-operator:

Container ID: docker://148ba4b8ccd91159fecc3087dd4c0b7eb7feb36be4b3b5124314121531cd3a3c

Image: mcr.microsoft.com/mssql/ha:vNext-CTP2.0-ubuntu

Image ID: docker-pullable://mcr.microsoft.com/mssql/ha@sha256:c5d20c8b34ea096a845de0222441304a14ad31a447d79904bafaf29f898704d0

Port: <none>

Host Port: <none>

Command:

/mssql-server-k8s-operator

State: Running

Started: Mon, 01 Oct 2018 08:13:32 +0200

Ready: True

Restart Count: 0

Environment:

MSSQL_K8S_NAMESPACE: agdev (v1:metadata.namespace)

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from mssql-operator-token-bd5gc (ro)

…

Volumes:

mssql-operator-token-bd5gc:

Type: Secret (a volume populated by a Secret)

SecretName: mssql-operator-token-bd5gc

Optional: false

Some interesting items to note here:

- The SQL Server CTP image – mcr.microsoft.com/mssql/ha – comes from the new Microsoft Container Registry (MCR). The current tag is vNext-CTP2.0-ubuntu at the moment of this write-up

- Volume secret is mounted to pass sensitive data that concerns a K8s service account used by the pod. In fact, the deployment of availability groups implies the creation of multiple service accounts

$ kubectl describe secret -n agdev mssql-operator-token-bd5gc

Name: mssql-operator-token-bd5gc

Namespace: agdev

Labels: <none>

Annotations: kubernetes.io/service-account.name=mssql-operator

kubernetes.io/service-account.uid=03cb111e-c541-11e8-a34a-0a09b8f01b34

Type: kubernetes.io/service-account-token

Data

====

namespace: 5 bytes

token: xxxx

ca.crt: 1720 bytes

The command is /mssql-server-k8s-operator that is a binary file like other mssql-server* files packaged in the new SQL Server image and which are designed to respond to different events by appropriated actions like updating K8s resources:

$ kubectl exec -ti -n agdev mssql-operator-67447c4bd8-s6tbv -- /bin/bash root@mssql-operator-67447c4bd8-s6tbv:/# ll mssql* -rwxrwxr-x 1 root root 32277998 Sep 19 16:00 mssql-server-k8s-ag-agent* -rwxrwxr-x 1 root root 31848041 Sep 19 16:00 mssql-server-k8s-ag-agent-supervisor* -rwxrwxr-x 1 root root 31336739 Sep 19 16:00 mssql-server-k8s-failover* -rwxrwxr-x 1 root root 32203064 Sep 19 16:00 mssql-server-k8s-health-agent* -rwxrwxr-x 1 root root 31683946 Sep 19 16:00 mssql-server-k8s-init-sql* -rwxrwxr-x 1 root root 31422517 Sep 19 16:00 mssql-server-k8s-operator* -rwxrwxr-x 1 root root 31645032 Sep 19 16:00 mssql-server-k8s-rotate-creds* root@mssql-operator-67447c4bd8-s6tbv:/# file mssql-server-k8s-operator mssql-server-k8s-operator: ELF 64-bit LSB executable, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, not stripped

- The SQL Server instances and AGs

The next step consisted in running the SQL Server AG deployment. Looking at the manifest file, we may notice we deploy custom SQL Server objects (kind: SqlServer) from new mssql.microsoft.com API installed previously as well as their corresponding services to expose SQL Server pods to the external traffic.

The deployment includes 3 StatefulSets that manage pods with 2 containers, respectively the SQL Server engine and its agent (HA supervisor). I was surprised to not see a deployment with kind: StatefulSet but I got the confirmation that the “logic” is encapsulated in the SqlServer object definition. Why StatfulSets here? Well, because they are more valuable for applications like databases by providing, inter alia, stable and unique network identifiers as well as stable and persistent storage. Stateless pods do not provide such capabilities. To meet StafulSet prerequisites, we need first to define persistent volumes for each SQL Server pod. Recent version of K8s allows to use dynamic provisioning and this is exactly what is used in the initial Microsoft deployment file with the instanceRootVolumeClaimTemplate:

instanceRootVolumeClaimTemplate:

accessModes: [ReadWriteOnce]

resources:

requests: {storage: 5Gi}

storageClass: default

However, in my context I already created persistent volumes for previous tests as shown below:

$ kubectl get pv -n agdev NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE pvc-cb299d79-c5b4-11e8-a34a-0a09b8f01b34 10Gi RWO Delete Bound agdev/mssql-data-1 azure-disk 9h pvc-cb4915b4-c5b4-11e8-a34a-0a09b8f01b34 10Gi RWO Delete Bound agdev/mssql-data-2 azure-disk 9h pvc-cb67cd06-c5b4-11e8-a34a-0a09b8f01b34 10Gi RWO Delete Bound agdev/mssql-data-3 azure-disk 9h $ kubectl get pvc -n agdev NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE mssql-data-1 Bound pvc-cb299d79-c5b4-11e8-a34a-0a09b8f01b34 10Gi RWO azure-disk 9h mssql-data-2 Bound pvc-cb4915b4-c5b4-11e8-a34a-0a09b8f01b34 10Gi RWO azure-disk 9h mssql-data-3 Bound pvc-cb67cd06-c5b4-11e8-a34a-0a09b8f01b34 10Gi RWO azure-disk 9h

So, I changed a little bit the initial manifest file for each SqlServer object with my existing persistent claims:

instanceRootVolume:

persistentVolumeClaim:

claimName: mssql-data-1

…

instanceRootVolume:

persistentVolumeClaim:

claimName: mssql-data-2

…

instanceRootVolume:

persistentVolumeClaim:

claimName: mssql-data-3

Furthermore, next prerequisite for StatefulSet consists in using a headless service and this exactly we may find with the creation of ag1 service during the deployment:

$ kubectl get svc -n agdev NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE ag1 ClusterIP None <none> 1433/TCP,5022/TCP 1d

I also noticed some other interesting items like extra pods in completed state:

$ kubectl get pods -n agdev -l app!=mssql-operator NAME READY STATUS RESTARTS AGE mssql-initialize-mssql1-plh8l 0/1 Completed 0 9h mssql-initialize-mssql2-l6z8m 0/1 Completed 0 9h mssql-initialize-mssql3-wrbkl 0/1 Completed 0 9h mssql1-0 2/2 Running 0 9h mssql2-0 2/2 Running 0 9h mssql3-0 2/2 Running 0 9h $ kubectl get sts -n agdev NAME DESIRED CURRENT AGE mssql1 1 1 9h mssql2 1 1 9h mssql3 1 1 9h

In fact, those pods are related to jobs created and executed in a background during the deployment of the SQL Server AG:

$ kubectl get jobs -n agdev NAME DESIRED SUCCESSFUL AGE mssql-initialize-mssql1 1 1 22h mssql-initialize-mssql2 1 1 22h mssql-initialize-mssql3 1 1 22h

Let’s take a look at the mssql-initialize-mssql1 job:

$ kubectl describe job -n agdev mssql-initialize-mssql1

Name: mssql-initialize-mssql1

Namespace: agdev

Selector: controller-uid=cd481f3c-c5b5-11e8-a34a-0a09b8f01b34

Labels: controller-uid=cd481f3c-c5b5-11e8-a34a-0a09b8f01b34

job-name=mssql-initialize-mssql1

Annotations: <none>

Parallelism: 1

Completions: 1

Start Time: Mon, 01 Oct 2018 22:08:45 +0200

Pods Statuses: 0 Running / 1 Succeeded / 0 Failed

Pod Template:

Labels: controller-uid=cd481f3c-c5b5-11e8-a34a-0a09b8f01b34

job-name=mssql-initialize-mssql1

Service Account: mssql-initialize-mssql1

Containers:

mssql-initialize:

Image: mcr.microsoft.com/mssql/ha:vNext-CTP2.0-ubuntu

Port: <none>

Host Port: <none>

Command:

/mssql-server-k8s-init-sql

Environment:

MSSQL_K8S_NAMESPACE: (v1:metadata.namespace)

MSSQL_K8S_SA_PASSWORD: <set to the key 'sapassword' in secret 'sql-secrets'> Optional: false

MSSQL_K8S_NUM_SQL_SERVERS: 1

MSSQL_K8S_SQL_POD_OWNER_UID: cd13319a-c5b5-11e8-a34a-0a09b8f01b34

MSSQL_K8S_SQL_SERVER_NAME: mssql1

MSSQL_K8S_SQL_POST_INIT_SCRIPT:

MSSQL_K8S_MASTER_KEY_PASSWORD: <set to the key 'masterkeypassword' in secret 'sql-secrets'> Optional: false

Mounts: <none>

Volumes: <none>

Events: <none>

These jobs are one-time initialization code that is executed when SQL Server and the AG is bootstrapped (thank you to @MihaelaBlendea to give more details on this topic) through the mssql-server-k8s-init-sql command. This is likely something you may remove according to your context (if you daily deal with a lot of K8s jobs for example).

Then, the deployment led to create 3 StatefulSets with their respective pods mssql1-0, mssql2-0 and mssql3-0. Each pod contains 2 containers as shown below for the mssql1-0 pod:

$ kubectl describe pod -n agdev mssql1-0

Name: mssql1-0

Namespace: agdev

Node: aks-nodepool1-78763348-1/10.240.0.5

…

Status: Running

IP: 10.244.0.38

Controlled By: StatefulSet/mssql1

Containers:

mssql-server:

Container ID: docker://8e23cec873ea3d1ebd98f8f4f0ab0b11b840c54c17557d23817b9c21a863bb42

Image: mcr.microsoft.com/mssql/server:vNext-CTP2.0-ubuntu

Image ID: docker-pullable://mcr.microsoft.com/mssql/server@sha256:87e691e2e5f738fd64a427ebe935e4e5ccd631be1b4f66be1953c7450418c8c8

Ports: 1433/TCP, 5022/TCP

Host Ports: 0/TCP, 0/TCP

State: Running

Started: Mon, 01 Oct 2018 22:11:44 +0200

Ready: True

Restart Count: 0

Liveness: http-get http://:8080/healthz delay=60s timeout=1s period=2s #success=1 #failure=3

Environment:

ACCEPT_EULA: y

MSSQL_PID: Developer

MSSQL_SA_PASSWORD: <set to the key 'initsapassword' in secret 'mssql1-statefulset-secret'> Optional: false

MSSQL_ENABLE_HADR: 1

Mounts:

/var/opt/mssql from instance-root (rw)

/var/run/secrets/kubernetes.io/serviceaccount from no-api-access (ro)

mssql-ha-supervisor:

Container ID: docker://f5a0d4d51a459752a2c509eb3ec7874d94586a7499201f559c9ad8281751e514

Image: mcr.microsoft.com/mssql/ha:vNext-CTP2.0-ubuntu

Image ID: docker-pullable://mcr.microsoft.com/mssql/ha@sha256:c5d20c8b34ea096a845de0222441304a14ad31a447d79904bafaf29f898704d0

Port: 8080/TCP

Host Port: 0/TCP

Command:

/mssql-server-k8s-ag-agent-supervisor

State: Running

Started: Mon, 01 Oct 2018 22:11:45 +0200

Ready: True

Restart Count: 0

Environment:

MSSQL_K8S_NAMESPACE: agdev (v1:metadata.namespace)

MSSQL_K8S_POD_NAME: mssql1-0 (v1:metadata.name)

MSSQL_K8S_SQL_SERVER_NAME: mssql1

MSSQL_K8S_POD_IP: (v1:status.podIP)

MSSQL_K8S_NODE_NAME: (v1:spec.nodeName)

MSSQL_K8S_MONITOR_POLICY: 3

MSSQL_K8S_HEALTH_CONNECTION_REBOOT_TIMEOUT:

MSSQL_K8S_SKIP_AG_ANTI_AFFINITY:

MSSQL_K8S_MONITOR_PERIOD_SECONDS:

MSSQL_K8S_LEASE_DURATION_SECONDS:

MSSQL_K8S_RENEW_DEADLINE_SECONDS:

MSSQL_K8S_RETRY_PERIOD_SECONDS:

MSSQL_K8S_ACQUIRE_PERIOD_SECONDS:

MSSQL_K8S_SQL_WRITE_LEASE_PERIOD_SECONDS:

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from mssql1-token-5zlkq (ro)

….

Volumes:

no-api-access:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

instance-root:

Type: PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)

ClaimName: mssql-data-1

ReadOnly: false

mssql1-token-5zlkq:

Type: Secret (a volume populated by a Secret)

SecretName: mssql1-token-5zlkq

Optional: false

…

We recognize the mssql-server and mssql-ha-supervisor container as stated to the Microsoft documentation. The mssql-server container is listening on the port 1433 (SQL engine) and 5022 (hadr point). Note the container includes a HTTP liveness probes (http-get http://:8080/healthz delay=60s timeout=1s period=2s #success=1 #failure=3) to determine its health. Morever, the mssql-ha-supervisor container is self-explaining and aims to monitor the SQL Server instance if we refer to the environment variable names. I believe another blog post will be necessary to talk about it. Each SQL Server pod (meaning a SQL Server instance here that listen on the port 1433) is exposed to the external traffic by a dedicated service as shown below. External IPs are assigned to the K8s cluster load balancer services through the Azure Load Balancer (basic SKU).

$ kubectl get svc -n agdev NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE ag1 ClusterIP None <none> 1433/TCP,5022/TCP 23h mssql1 LoadBalancer 10.0.43.216 xx.xx.xx.xxx 1433:31674/TCP 23h mssql2 LoadBalancer 10.0.28.27 xx.xx.xx.xxx 1433:32681/TCP 23h mssql3 LoadBalancer 10.0.137.244 xx.xx.xxx.xxx 1433:31152/TCP 23h

- The AG Services

Finally, I only deployed the service corresponding to ag1-primary that connects to the primary replica. It is up to you to deploy other ones according to your context. In fact, the ag1-primary service acts as the AG listener in this new infrastructure.

$ kubectl get svc -n agdev NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE ag1 ClusterIP None <none> 1433/TCP,5022/TCP 23h ag1-primary LoadBalancer 10.0.32.104 xxx.xx.xx.xxx 1433:31960/TCP 1m mssql1 LoadBalancer 10.0.43.216 xx.xx.xx.xxx 1433:31674/TCP 23h mssql2 LoadBalancer 10.0.28.27 xx.xx.xx.xxx 1433:32681/TCP 23h mssql3 LoadBalancer 10.0.137.244 xx.xx.xxx.xxx 1433:31152/TCP 23h

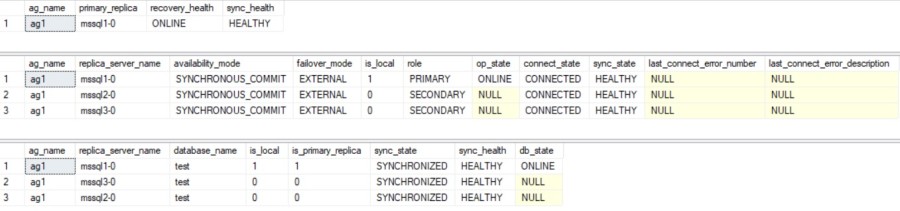

So, it’s time to connect to my availability group from the external IP of the ag1-primary service. I already add a test database to the availability group and here a picture of the situation:

-- groups info SELECT g.name as ag_name, rgs.primary_replica, rgs.primary_recovery_health_desc as recovery_health, rgs.synchronization_health_desc as sync_health FROM sys.dm_hadr_availability_group_states as rgs JOIN sys.availability_groups AS g ON rgs.group_id = g.group_id -- replicas info SELECT g.name as ag_name, r.replica_server_name, r.availability_mode_desc as [availability_mode], r.failover_mode_desc as [failover_mode], rs.is_local, rs.role_desc as role, rs.operational_state_desc as op_state, rs.connected_state_desc as connect_state, rs.synchronization_health_desc as sync_state, rs.last_connect_error_number, rs.last_connect_error_description FROM sys.dm_hadr_availability_replica_states AS rs JOIN sys.availability_replicas AS r ON rs.replica_id = r.replica_id JOIN sys.availability_groups AS g ON g.group_id = r.group_id ORDER BY r.replica_server_name, rs.is_local; -- DB level SELECT g.name as ag_name, r.replica_server_name, DB_NAME(drs.database_id) as [database_name], drs.is_local, drs.is_primary_replica, synchronization_state_desc as sync_state, synchronization_health_desc as sync_health, database_state_desc as db_state FROM sys.dm_hadr_database_replica_states AS drs JOIN sys.availability_replicas AS r ON r.replica_id = drs.replica_id JOIN sys.availability_groups AS g ON g.group_id = drs.group_id ORDER BY g.name, drs.is_primary_replica DESC; GO

This is a common picture we may get with traditional availability group. Another way to identify the primary replica is going through the kubectl command pod and to filter by label as follows:

$ kubectl get pods -n agdev -l="role.ag.mssql.microsoft.com/ag1"="primary" NAME READY STATUS RESTARTS AGE mssql1-0 2/2 Running 0 1d

To finish, let’s simulate the crash of the pod mssql1-0 and let’s see what happens:

$ kubectl delete pod -n agdev mssql1-0 pod "mssql1-0" deleted kubectl get pods -n agdev NAME READY STATUS RESTARTS AGE mssql-initialize-mssql1-plh8l 0/1 Completed 0 1d mssql-initialize-mssql2-l6z8m 0/1 Completed 0 1d mssql-initialize-mssql3-wrbkl 0/1 Completed 0 1d mssql-operator-67447c4bd8-s6tbv 1/1 Running 0 1d mssql1-0 0/2 Terminating 0 1d mssql2-0 2/2 Running 0 1d mssql3-0 2/2 Running 0 1d ... $ kubectl get pods -n agdev NAME READY STATUS RESTARTS AGE mssql-initialize-mssql1-plh8l 0/1 Completed 0 1d mssql-initialize-mssql2-l6z8m 0/1 Completed 0 1d mssql-initialize-mssql3-wrbkl 0/1 Completed 0 1d mssql-operator-67447c4bd8-s6tbv 1/1 Running 0 1d mssql1-0 0/2 ContainerCreating 0 9s mssql2-0 2/2 Running 0 1d mssql3-0 2/2 Running 0 1d ... $ kubectl get pods -n agdev NAME READY STATUS RESTARTS AGE mssql-initialize-mssql1-plh8l 0/1 Completed 0 1d mssql-initialize-mssql2-l6z8m 0/1 Completed 0 1d mssql-initialize-mssql3-wrbkl 0/1 Completed 0 1d mssql-operator-67447c4bd8-s6tbv 1/1 Running 0 1d mssql1-0 2/2 Running 0 2m mssql2-0 2/2 Running 0 1d mssql3-0 2/2 Running 0 1d

As expected, the controller detects the event and recreates accordingly an another mssql1-0 pod but that’s not all. Firstly, let’s say because we are concerned by StatefulSet the pod keeps the same identity. Then the controller performs also other tasks including failover the availability group to another pod and change the primary with the mssql3-0 pod as shown below. The label of this pod is updated to identify the new primary.

$ kubectl get pods -n agdev -l="role.ag.mssql.microsoft.com/ag1"="primary" NAME READY STATUS RESTARTS AGE mssql3-0 2/2 Running 0 1d

This blog post was just an overview of what could be a SQL Server availability group on K8s. Obviously, there are a plenty of other interesting items to cover and to deep dive … probably in a near future. Stay tuned!

By David Barbarin

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/12/microsoft-square.png)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/10/STS_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/STH_web-min-scaled.jpg)