This is the last part of our series on facial recognition (first part here, second part here and third part here). Let’s see whether countermeasures exist and, if so, how to implement them.

Countermeasures and proposals for implementation in a defensive context

The invasive nature of FRTs has motivated a growing number of attempts to counter them, each addressing a different stage of the recognition process.

Preventing the collection of images

Misleading face localization

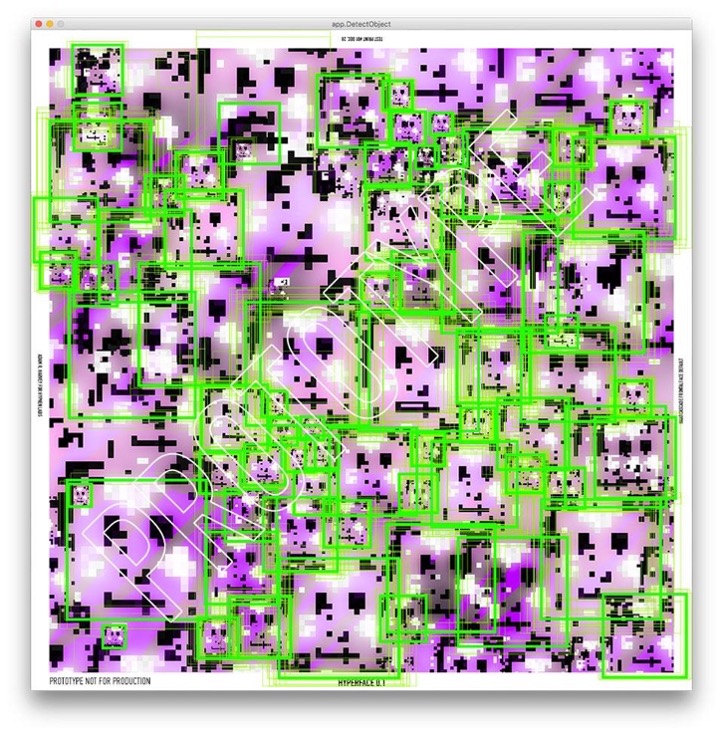

Some, like the Berlin-based artist Adam Harvey, have developed clothing whose patterns mislead detectors about the location of the face.

These designs, however:

- Are still in the research stage more than 6 years after their initial design.

- Are therefore not currently for sale anywhere.

- Are becoming more and more obsolete in their design due to the rapid evolution of facial recognition technologies, and in particular the ever-increasing use of artificial neural networks.

- Generally work against only one detection algorithm at a time (the Viola-Jones Haar cascade in the case of Adam Harvey’s Hyperface example above).

Confusing infrared detection

Silver- or aluminum-plated clothing worn around the face reflects infrared, making it very difficult for an infrared camera to read a face or body. [22]

Some cultures will find it easier to adopt this technique, especially those that already mask their faces or generally wear fabrics that at least partially envelop them. Because infrared is the most widely used method of circumventing face masking, infrared-reflecting fabric complements this type of clothing well. However, the cost remains high, which makes the technique very difficult to generalize.

Making the face unreadable

The use of makeup and other accessories to “decorate” the face and distort the detection of the face by the AI has also been explored. The concept works, but has some very important limitations:

- It only works against a specific detection algorithm. If that algorithm is improved, or a different one is used, the face “camouflage” has to be redone completely.

- The necessary decoration is not something that can easily be worn on the street. Even attempts to make it more “trendy”, such as CVDazzle [23], cannot change the fact that the result makes the wearer very conspicuous to anyone passing by.

The idea received significant public interest when it was created, and many people, ranging from hackers to fashion designers, became interested in it and created their own versions targeting specific algorithms.

In 2021, researchers at Ben-Gurion University of the Negev (Israel) published the results of research using simple makeup applied in AI-generated shapes to fool facial recognition. The results are very promising, and the makeup is much easier to apply than CVDazzle’s, but again it only targets a specific recognition algorithm. [24]

It has become clear, however, that despite the hype, it is highly unlikely that such a solution will ever become viable on a large scale.

Facilitating face masking before posting a photograph online

Software using face detection has been developed to counteract image collection itself: photographs run through the software before being put online come out with every detected face masked. [25] This is very useful, for example, for photographs of demonstrations, where a single photo of the crowd could otherwise allow a large number of people to be identified.
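The masking step itself can be sketched as follows. This is a minimal illustration, not the code of any particular tool: it assumes a face detector has already returned bounding boxes, and the names `mask_faces`, `Image` and `Box` are hypothetical. The image is a plain grid of grayscale values so that the example needs no library.

```python
from typing import List, Tuple

Image = List[List[int]]          # grayscale pixels, image[row][col], values 0-255
Box = Tuple[int, int, int, int]  # (x, y, width, height), assumed detector output


def mask_faces(image: Image, boxes: List[Box], fill: int = 0) -> Image:
    """Return a copy of `image` with every box overwritten by a flat value."""
    height = len(image)
    width = len(image[0]) if height else 0
    masked = [row[:] for row in image]  # never modify the original in place
    for x, y, w, h in boxes:
        # Clamp the box to the image so a face partly out of frame is still masked.
        for row in range(max(0, y), min(height, y + h)):
            for col in range(max(0, x), min(width, x + w)):
                masked[row][col] = fill
    return masked


# Usage: a 4x4 image with one assumed detection covering the central 2x2 area.
photo = [[9] * 4 for _ in range(4)]
safe_to_post = mask_faces(photo, [(1, 1, 2, 2)])
```

A flat fill is used here because overwritten pixels carry no trace of the original face; a real tool may offer blurring or pixelation instead.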

This solution of course requires awareness on the part of the users uploading the photographs.

For photographs where the face cannot easily be hidden, there are also software solutions that alter the image enough to defeat facial recognition without the change being visible to the naked eye.
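To give an idea of what "altering without being visible" can mean, here is a minimal sketch that assumes such tools add a small, bounded change to every pixel. In a real tool the direction of the change would be computed against a recognition model; here it is simply passed in, and the names `perturb`, `largest_change` and `epsilon` are hypothetical.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels, values 0-255


def perturb(image: Image, direction: List[List[float]], epsilon: int = 2) -> Image:
    """Move each pixel by at most `epsilon` levels, following the sign of `direction`."""
    perturbed = []
    for pixel_row, direction_row in zip(image, direction):
        new_row = []
        for pixel, d in zip(pixel_row, direction_row):
            step = epsilon if d > 0 else -epsilon if d < 0 else 0
            # Keep the result inside the valid 0-255 range.
            new_row.append(min(255, max(0, pixel + step)))
        perturbed.append(new_row)
    return perturbed


def largest_change(before: Image, after: Image) -> int:
    """Largest absolute difference between two images of the same size."""
    return max(
        abs(a - b)
        for row_a, row_b in zip(before, after)
        for a, b in zip(row_a, row_b)
    )
```

Keeping `epsilon` at a few gray levels out of 255 is what keeps the change hard to see, while the sign pattern is what is meant to push the recognition model toward a wrong answer.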

Circumventing authentication to get past controls

Face spoofing, also known as facial spoofing, is the most common case of identity theft in facial recognition authentication.

Spoofing attacks are divided into two types, 2D and 3D:

- 2D facial spoofing relies on an image of the person. The technique can be enhanced by using a smartphone or tablet screen to play multiple images in close sequence, simulating movement and fooling the liveness checks meant to detect a “lifeless” face.

- 3D spoofing, which is less frequent but is developing more and more with the rise of 3D printing in particular, is based on the use of a 3D mask to fool the systems. It has the advantage of being able to deceive 3D sensors, unlike 2D facial spoofing.

These methods are countered in particular by:

- Blink detection. A person’s blink has characteristics that are specific to the individual (average time the eye remains closed, speed of the blink, etc.). To date, it is almost impossible to deceive this detection. To use it, however, this information must first have been stored in the database.

- Interactive live face detection, which asks the user to perform certain actions with their face, such as smiling, moving their head, etc. This system can be implemented for systems requiring very high levels of security and is to date the most effective method for countering facial spoofing. [26]

- “Active Flash” technology, which briefly projects light in a flash-like fashion to verify that it is a real face. The effectiveness of the latter is however affected by the ambient light.
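The blink-based check in the first item above can be sketched as follows. This is a simplified illustration under stated assumptions: an upstream component is assumed to provide one "eye openness" value per video frame (1.0 fully open, 0.0 closed), and only the average closed time is compared with the enrolled value. The function names, the `closed_below` threshold and the `tolerance` are hypothetical.

```python
from typing import List


def blink_durations(openness: List[float], fps: float, closed_below: float = 0.2) -> List[float]:
    """Duration in seconds of each run of consecutive 'eye closed' frames."""
    durations = []
    run = 0
    for value in openness:
        if value < closed_below:
            run += 1
        elif run:
            durations.append(run / fps)
            run = 0
    if run:  # the recording ended while the eye was still closed
        durations.append(run / fps)
    return durations


def matches_enrolled_blink(openness: List[float], fps: float,
                           enrolled_mean: float, tolerance: float = 0.05) -> bool:
    """Accept only if blinks occur and their mean duration is close to the enrolled one."""
    durations = blink_durations(openness, fps)
    if not durations:
        return False  # no blink at all, as with a static photograph
    mean = sum(durations) / len(durations)
    return abs(mean - enrolled_mean) <= tolerance
```

The early `return False` is the part that rejects a printed photo; the comparison with `enrolled_mean` is why the blink profile has to be stored at enrollment.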

Case of using an infrared photo

Researchers at the security company SySS demonstrated in 2017 that it was possible to pass the Windows Hello authentication of Windows 10 by using infrared photographs specially prepared for this purpose. Windows Hello uses infrared to detect the use of a photograph instead of a real face. The researchers simply printed a photograph taken in infrared at low resolution and presented it to the computer’s camera, fooling the system. [27]

Windows 10 version 1703 fixed this flaw partially, allowing the authentication settings to be changed to make this technique ineffective. But this setting brings its own set of drawbacks, and so remains disabled by default.

Since this initial discovery, others, including hackers, have used this flaw to bypass Windows Hello authentication using a simple infrared photograph, demonstrating that the flaw is still active and exploitable.

Countering AI decoy solutions

Of course, FRT developers are well aware of the various attempts to counter facial recognition. Technologies are evolving very rapidly to improve the resistance of systems to fraud.

Model hacking, or learning by mistake

Researchers at McAfee have developed an AI training method that involves pitting a facial recognition AI against an AI that constantly tries to fool it by creating fake faces. In this approach, known as a “GAN” (“Generative Adversarial Network”), the two AIs learn from each other and evolve accordingly at a speed far greater than human-made improvements allow. [28]
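For reference, the contest between the two networks is usually written as the standard GAN objective below. This is the generic textbook formulation, given here as an assumption about the general setup rather than the exact objective used in the McAfee research: $G$ is the network that generates fake faces from random noise $z$, and $D$ is the network that tries to tell real images $x$ from generated ones.

```latex
\min_{G} \max_{D} \;
  \mathbb{E}_{x \sim p_{\text{data}}}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_{z}}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
```

$D$ is trained to increase this value by classifying correctly, while $G$ is trained to decrease it by producing images that $D$ accepts as real, which is why each network improves in response to the other.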

Personal reflections on the fight against facial recognition

Facial recognition, apart from the simpler case of mere detection, must meet several criteria in order to be carried out successfully up to the stage of face association:

1. Have a labeled photograph of the same person in its database.

2. Have a camera system that is efficient enough to obtain a usable photograph.

3. Succeed in preparing the image to make it usable by the AI.

4. Detect fake faces.

Attempts to defend against facial recognition attack each of these key points. We will review each criterion to consider how effective the attempts are, whether they can be countered, and whether they are viable or have a future.

For 1., the fight is primarily waged by attempting to alter images before they are posted online. It is impossible to prevent an authoritarian government from ordering its citizens to provide their photographs, so these attempts can only cover photos and videos that citizens put online of their own free will. Unfortunately, as Clearview AI and its 10 billion images collected without permission show, attempts to counter FRTs on this criterion come far too late and are far too partial to be more than a futile attempt to stop a hemorrhage that has already gone on for far too long. While the efforts and research are commendable, it is very likely that Clearview AI is not the only private company (let alone state) that has been scouring the web, sucking up image content and building similar databases.

Efforts to counter point 2. mostly focus on face masking. Masking is obviously no way to deceive facial recognition and fraudulently pass through a secure FRT entrance, for example, but it proves more effective against simple mass surveillance or snapshots. Here again, attempts have their limits. For one thing, nothing prevents a state from forcing you to uncover your face. This has already been reported in non-authoritarian states such as the United Kingdom, where passers-by who reflexively hid their faces while walking past a facial recognition camera were stopped a few meters further on by police officers, asked to uncover their faces and submit to the check, and fined. Other countries, such as France, prohibit being completely masked in public places for anti-terrorism reasons. And SkyNet, the Chinese government’s facial recognition system, has already demonstrated that a partial mask, such as those worn against Covid, is no longer enough to fool the AI. In the end, masking the face remains a solution that technically works in part (techniques using infrared cameras already partially counter it) but is in reality difficult to sustain in everyday life.

Attempting to counter point 3. mainly consists of trying to defeat the algorithms that prepare the images. Unfortunately, as we have seen above, although the idea itself is promising, there are many different algorithms and attempts usually counter only one of them, while nothing prevents more sophisticated systems from using several different algorithms.
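The reason a single-algorithm camouflage loses against a combined system can be shown with a toy sketch. Everything here is hypothetical: the two "detectors" are stand-in functions over a dictionary describing the scene, not real detection algorithms.

```python
from typing import Callable, Dict, Iterable

Scene = Dict[str, bool]             # hypothetical description of what the camera sees
Detector = Callable[[Scene], bool]  # returns True when the detector finds a face


def fooled_by_makeup(scene: Scene) -> bool:
    """Toy detector that a makeup pattern targeted at it defeats."""
    return scene["face_present"] and not scene.get("targeted_makeup", False)


def unaffected_by_makeup(scene: Scene) -> bool:
    """Toy detector built differently, so the same makeup has no effect on it."""
    return scene["face_present"]


def face_found(scene: Scene, detectors: Iterable[Detector]) -> bool:
    """The combined system reports a face as soon as any detector does."""
    return any(detector(scene) for detector in detectors)


# Usage: the makeup defeats the first detector alone, but not the combination.
scene = {"face_present": True, "targeted_makeup": True}
alone = fooled_by_makeup(scene)
combined = face_found(scene, [fooled_by_makeup, unaffected_by_makeup])
```

To stay hidden, a camouflage would have to defeat every detector in the list at once, which is a much harder target than defeating one.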

Point 4. is where most fraud attempts are concentrated: photos, 3D fake faces, the attempts are many. But the technologies that counter them are evolving even faster, leaving only older or less secure systems vulnerable. Liveness detection based on multiple criteria is constantly progressing. While these circumvention techniques will certainly continue to yield some successes for years to come, it is only a matter of time before all systems are capable of telling the difference.

In the end, we can conclude that none of the current attempts to counter FRTs are really promising. While all of them can be effective in specific cases, they are often too expensive, too restrictive, or in the process of being countered by recent FRT developments. While this is good news for FRTs, which should really be able to establish themselves as a reliable and secure technology on the market, it means that citizens and companies now have only one recourse for the respect of their rights: the law.

Indeed, only laws, whether international, European or national, will be able to protect us from the abuses and excesses of these technologies in the long term. It is essential that as many countries as possible agree to apply laws at the international level and prevent companies like Clearview AI from using our photos with impunity and without our consent. To date, Clearview AI has simply chosen to ignore the summonses of the EDPB on the pretext that it does not sell its service in Europe, and therefore that the “GDPR does not apply to them”. Contrary to Clearview AI’s claim, the GDPR does apply and the fines are legitimate, but Clearview AI is not domiciled in Europe, so until the US agrees with Europe on the regulation of private data use, it is very difficult to do anything about it. It is also essential that the European states agree and present a common front on restricting the use of FRTs in public spaces, in order to avoid abuses such as those feared by the EDPB.

In the end, the best way to avoid facial recognition in public places is simply to limit or even prohibit its use in these public places.

Conclusion

We have seen throughout this series how far technological evolution has enabled the development of FRTs, and how efficient and secure the leading technologies in the field now are. The efficiency of algorithms continues to increase by 25% to 50% per year, and the most efficient systems readily combine several of them for better protection and better results.

Some countries, such as India and China, have truly embraced biometrics at the national level.

Ukrainian authorities have just been offered Clearview AI technology to identify dead Russian soldiers, among others, and contact their families to tell them… [29]

On the other hand, in the almost total absence of laws governing the technology, restricting oneself to a so-called “benevolent” use of this technology remains at the discretion of the companies and states that use it.

As with any rapidly evolving technology, the European countries are lagging behind on legislation, and the lack of clarity here puts the rights of their citizens at risk. The laws currently being debated remain too vague, or too permissive in many respects, leaving it up to each member state to decide whether or not to authorize the use of FRT in cases where the technology could be misused. The EDPB is monitoring the issue and has expressed its concern, but its requests remain unanswered for the moment.

The legal decisions taken at the European level as well as worldwide in the coming years will be critical for the future of this technology. Whether the advent of FRT will be remembered fifty years from now as a good thing or as a human rights disaster depends on the legal decisions that are taken. The worst-case scenario, however, is probably no decision at all.

________________________________________________________________________

[22] HARVEY Adam, official website, Hyperface: https://ahprojects.com/hyperface/

[23] HARVEY Adam, official website, CVDazzle: https://ahprojects.com/cvdazzle/

[24] GUETTA Nitzan, SHABTAI Asaf, et al., 2021, Dodging Attack Using Carefully Crafted Natural Makeup: https://arxiv.org/pdf/2109.06467.pdf

[25] VFrame.io application: https://github.com/vframeio/dface

[26] ZHANG Nana, HUANG Jun, ZHANG Hui, 2020, Interactive Face Liveness Detection Based on OpenVINO and Near Infrared Camera.

[27] SySS, 2017, Biometricks: Bypassing an Enterprise-Grade Biometric Face Authentication System: https://www.syss.de/pentest-blog/2017/syss-2017-027-biometricks-bypassing-an-enterprise-grade-biometric-face-authentication-system/

[28] POVOLNY Steve, 2020, Dopple-ganging up on Facial Recognition Systems: https://www.trellix.com/en-us/about/newsroom/stories/threat-labs/dopple-ganging-up-on-facial-recognition-systems.html

[29] L’Ukraine a commencé à utiliser la reconnaissance faciale de Clearview AI: https://intelligence-artificielle.developpez.com/actu/332747/L-Ukraine-a-commence-a-utiliser-la-reconnaissance-faciale-de-Clearview-AI-pour-scanner-les-visages-des-Russes-decedes-puis-contacte-les-meres/
