We’re now coming closer to the final setup of the OpenStack test environment. Again, looking at the minimum services we need, there are only three of them left:

- Keystone: Identity service (done)

- Glance: Image service (done)

- Placement: Placement service (done)

- Nova: Compute service

- Neutron: Network service

- Horizon: The OpenStack dashboard

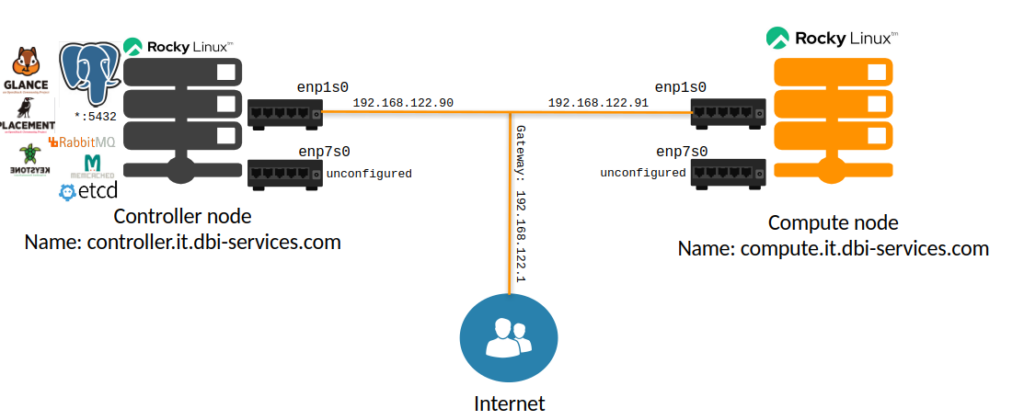

In this post we’ll continue with the Compute Service, which is called Nova. As a short reminder, if you followed the last posts, this is what we have currently:

In addition to that, we need something which is actually providing compute resources, and this is Nova. Nova “interacts with OpenStack Identity for authentication, OpenStack Placement for resource inventory tracking and selection, OpenStack Image service for disk and server images, and OpenStack Dashboard for the user and administrative interface”.

Nova, like the Glance and Placement services, needs the database backend so we’re going to prepare this on the controller node:

[root@controller ~]$ su - postgres -c "psql -c \"create user nova with login password 'admin'\""

CREATE ROLE

[root@controller ~]$ su - postgres -c "psql -c 'create database nova_api with owner=nova'"

CREATE DATABASE

[root@controller ~]$ su - postgres -c "psql -c 'create database nova with owner=nova'"

CREATE DATABASE

[root@controller ~]$ su - postgres -c "psql -c 'create database nova_cell0 with owner=nova'"

CREATE DATABASE
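If you prefer to review the statements before running them, the four commands above can be generated by a small helper. This is only a sketch: `nova_db_sql` is a hypothetical function name, and the role, password and database names are simply the ones used in this post.

```shell
# Hypothetical helper: print the SQL for the Nova role and its three databases.
# The function body runs in a subshell, so the variables stay local to it.
nova_db_sql() (
  role="$1"
  password="$2"
  printf "create user %s with login password '%s';\n" "$role" "$password"
  for db in nova_api nova nova_cell0; do
    printf 'create database %s with owner=%s;\n' "$db" "$role"
  done
)

# Print the statements for review.
nova_db_sql nova admin

# To apply them, the output could be piped into psql as the postgres user:
# nova_db_sql nova admin | su - postgres -c "psql"
```

Generating the statements in a loop keeps the three database names in one place, which helps if you want a different owner or password in your own environment.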

Again, we need to create the service credentials and the endpoints for the service:

[root@controller ~]$ . admin-openrc

[root@controller ~]$ openstack user create --domain default --password-prompt nova

User Password:

Repeat User Password:

No password was supplied, authentication will fail when a user does not have a password.

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| default_project_id | None |

| domain_id | default |

| email | None |

| enabled | True |

| id | 5f096012f1d74232a55d0bd76faad3e5 |

| name | nova |

| description | None |

| password_expires_at | None |

+---------------------+----------------------------------+

[root@controller ~]$ openstack role add --project service --user nova admin

[root@controller ~]$ openstack service create --name nova --description "OpenStack Compute" compute

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| id | bcbf978986834cbca430a1a5c7b6d9b3 |

| name | nova |

| type | compute |

| enabled | True |

| description | OpenStack Compute |

+-------------+----------------------------------+

[root@controller ~]$ openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 09cb340bc9014ee69435360e8555a998 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | bcbf978986834cbca430a1a5c7b6d9b3 |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]$ openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 7e90b9431c48442d805e43e9af65ef8e |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | bcbf978986834cbca430a1a5c7b6d9b3 |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]$ openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | a04227f6f4d84011a186d2eda98b0c8b |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | bcbf978986834cbca430a1a5c7b6d9b3 |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+
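The three endpoint commands above only differ in the interface name, so they can also be produced in a loop. The following is a minimal sketch which prints the commands instead of running them; `endpoint_commands` is a hypothetical helper, and the region and URL are the values from this post.

```shell
# Hypothetical helper: print one "openstack endpoint create" command
# per interface (public, internal, admin) for a given service type and URL.
endpoint_commands() (
  service_type="$1"
  url="$2"
  for interface in public internal admin; do
    printf 'openstack endpoint create --region RegionOne %s %s %s\n' \
      "$service_type" "$interface" "$url"
  done
)

# Print the commands for review.
endpoint_commands compute http://controller:8774/v2.1

# After sourcing admin-openrc, the output could be executed like this:
# endpoint_commands compute http://controller:8774/v2.1 | sh
```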

Having this ready, we can continue to install the required packages on the controller node:

[root@controller ~]$ dnf install openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler -y

The configuration for Nova is a bit longer than for the previous services, but there is nothing special in this simple case: We need the connection to the Message Queue (RabbitMQ), the details of the controller node (same host), the database connection details for Nova itself, the details of how to reach the Keystone service, the details for the Placement service, and a few VNC related settings:

[root@controller ~]$ egrep -v "^#|^$" /etc/nova/nova.conf

[DEFAULT]

my_ip=192.168.122.90

enabled_apis = osapi_compute,metadata

transport_url=rabbit://openstack:admin@controller:5672/

[api]

auth_strategy = keystone

[api_database]

connection=postgresql+psycopg2://nova:admin@localhost/nova_api

[barbican]

[barbican_service_user]

[cache]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[cyborg]

[database]

connection=postgresql+psycopg2://nova:admin@localhost/nova

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://controller:9292

[guestfs]

[healthcheck]

[image_cache]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = admin

[libvirt]

[metrics]

[mks]

[neutron]

[notifications]

[os_vif_linux_bridge]

[os_vif_ovs]

[oslo_concurrency]

[oslo_limit]

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[pci]

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = admin

[privsep]

[profiler]

[profiler_jaeger]

[profiler_otlp]

[quota]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

send_service_user_token = true

auth_url = https://controller/identity

auth_strategy = keystone

auth_type = password

project_domain_name = Default

project_name = service

user_domain_name = Default

username = nova

password = admin

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled = true

server_listen = $my_ip

server_proxyclient_address = $my_ip

[workarounds]

[wsgi]

[zvm]
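Most of the sections in the listing above are empty, which makes it hard to spot what was actually changed. As a sketch, a small awk filter can hide the comments, the blank lines and all sections without any setting; `nonempty_sections` is a hypothetical helper name and not something which comes with Nova.

```shell
# Hypothetical helper: print only those sections of an INI style file
# which contain at least one setting.
nonempty_sections() {
  awk '
    # Skip comments and blank lines.
    /^[ \t]*(#|$)/ { next }
    # Remember the section header, but do not print it yet.
    /^\[/ { section = $0; next }
    # A setting: print the pending header once, then the setting itself.
    {
      if (section != "") { print section; section = "" }
      print
    }
  ' "$1"
}

# Usage on the controller node:
# nonempty_sections /etc/nova/nova.conf
```

The header of a section is only printed once its first setting shows up, so sections like [barbican] or [zvm] disappear from the output.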

Time to populate the databases for Nova (the following will also create a Cell, which is a concept used by Nova for sharding):

[root@controller ~]$ sh -c "nova-manage api_db sync" nova

2025-01-22 08:42:39.389 7200 INFO alembic.runtime.migration [-] Context impl PostgresqlImpl.

2025-01-22 08:42:39.390 7200 INFO alembic.runtime.migration [-] Will assume transactional DDL.

2025-01-22 08:42:39.397 7200 INFO alembic.runtime.migration [-] Running upgrade -> d67eeaabee36, Initial version

2025-01-22 08:42:39.670 7200 INFO alembic.runtime.migration [-] Running upgrade d67eeaabee36 -> b30f573d3377, Remove unused build_requests columns

2025-01-22 08:42:39.673 7200 INFO alembic.runtime.migration [-] Running upgrade b30f573d3377 -> cdeec0c85668, Drop legacy migrate_version table

[root@controller ~]$ sh -c "nova-manage cell_v2 map_cell0" nova

[root@controller ~]$ sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

--transport-url not provided in the command line, using the value [DEFAULT]/transport_url from the configuration file

--database_connection not provided in the command line, using the value [database]/connection from the configuration file

804d576e-ac59-4fc5-b83a-018088ea8c11

[root@controller ~]$ sh -c "nova-manage db sync" nova

2025-01-22 08:44:07.177 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Context impl PostgresqlImpl.

2025-01-22 08:44:07.178 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Will assume transactional DDL.

2025-01-22 08:44:07.192 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Running upgrade -> 8f2f1571d55b, Initial version

2025-01-22 08:44:07.819 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Running upgrade 8f2f1571d55b -> 16f1fbcab42b, Resolve shadow table diffs

2025-01-22 08:44:07.821 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Running upgrade 16f1fbcab42b -> ccb0fa1a2252, Add encryption fields to BlockDeviceMapping

2025-01-22 08:44:07.823 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Running upgrade ccb0fa1a2252 -> 960aac0e09ea, de-duplicate_indexes_in_instances__console_auth_tokens

2025-01-22 08:44:07.824 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Running upgrade 960aac0e09ea -> 1b91788ec3a6, Drop legacy migrate_version table

2025-01-22 08:44:07.825 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Running upgrade 1b91788ec3a6 -> 1acf2c98e646, Add compute_id to instance

2025-01-22 08:44:07.829 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Running upgrade 1acf2c98e646 -> 13863f4e1612, create_share_mapping_table

2025-01-22 08:44:07.855 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Context impl PostgresqlImpl.

2025-01-22 08:44:07.856 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Will assume transactional DDL.

2025-01-22 08:44:07.867 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Running upgrade -> 8f2f1571d55b, Initial version

2025-01-22 08:44:08.706 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Running upgrade 8f2f1571d55b -> 16f1fbcab42b, Resolve shadow table diffs

2025-01-22 08:44:08.707 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Running upgrade 16f1fbcab42b -> ccb0fa1a2252, Add encryption fields to BlockDeviceMapping

2025-01-22 08:44:08.709 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Running upgrade ccb0fa1a2252 -> 960aac0e09ea, de-duplicate_indexes_in_instances__console_auth_tokens

2025-01-22 08:44:08.710 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Running upgrade 960aac0e09ea -> 1b91788ec3a6, Drop legacy migrate_version table

2025-01-22 08:44:08.712 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Running upgrade 1b91788ec3a6 -> 1acf2c98e646, Add compute_id to instance

2025-01-22 08:44:08.716 7262 INFO alembic.runtime.migration [None req-69377931-e25d-40d5-afe0-0eadfadd9ed4 - - - - - -] Running upgrade 1acf2c98e646 -> 13863f4e1612, create_share_mapping_table

[root@controller ~]$ sh -c "nova-manage cell_v2 list_cells" nova

+-------+--------------------------------------+------------------------------------------+------------------------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+------------------------------------------+------------------------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | postgresql+psycopg2://nova:****@localhost/nova_cell0 | False |

| cell1 | 804d576e-ac59-4fc5-b83a-018088ea8c11 | rabbit://openstack:****@controller:5672/ | postgresql+psycopg2://nova:****@localhost/nova | False |

+-------+--------------------------------------+------------------------------------------+------------------------------------------------------+----------+
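Note that we never configured a connection for the nova_cell0 database, but it shows up in the list above anyway. As far as we understand the nova-manage documentation, "map_cell0" takes the value of [database]/connection and appends "_cell0" to the database name when no connection is given on the command line. The following sketch only illustrates this naming rule, it is not the code Nova uses, and `cell0_connection` is a hypothetical name:

```shell
# Hypothetical illustration: derive the cell0 connection from the main one
# by appending "_cell0" to the database name (in front of any "?options").
cell0_connection() {
  case "$1" in
    *\?*) printf '%s_cell0?%s\n' "${1%%\?*}" "${1#*\?}" ;;
    *)    printf '%s_cell0\n' "$1" ;;
  esac
}

cell0_connection "postgresql+psycopg2://nova:admin@localhost/nova"
```

This is the reason why the database had to be created with exactly this name at the beginning of this post.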

The final steps on the controller node are to enable and start the services:

[root@controller ~]$ systemctl enable \
openstack-nova-api.service \
openstack-nova-scheduler.service \
openstack-nova-conductor.service \
openstack-nova-novncproxy.service

Created symlink /etc/systemd/system/multi-user.target.wants/openstack-nova-api.service → /usr/lib/systemd/system/openstack-nova-api.service.

Created symlink /etc/systemd/system/multi-user.target.wants/openstack-nova-scheduler.service → /usr/lib/systemd/system/openstack-nova-scheduler.service.

Created symlink /etc/systemd/system/multi-user.target.wants/openstack-nova-conductor.service → /usr/lib/systemd/system/openstack-nova-conductor.service.

Created symlink /etc/systemd/system/multi-user.target.wants/openstack-nova-novncproxy.service → /usr/lib/systemd/system/openstack-nova-novncproxy.service.

[root@controller ~]$ systemctl start \
openstack-nova-api.service \
openstack-nova-scheduler.service \
openstack-nova-conductor.service \
openstack-nova-novncproxy.service

As the controller node will not run any compute resources, Nova is the first service which needs to be configured on the compute node(s) as well. In our configuration KVM will be used to run the virtual machines. Before we start with that, make sure that the host running the compute node can use hardware acceleration:

[root@compute ~]$ egrep -c '(vmx|svm)' /proc/cpuinfo

8

Check the documentation for additional details. If the output is greater than 0 you should be fine. If it is 0, there is more configuration to do which is not in the scope of this post.
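The check above can be wrapped into a small function which suggests a value for "virt_type". This is a sketch under one assumption taken from the official installation guide: without hardware acceleration libvirt has to use QEMU instead of KVM. `suggest_virt_type` is a hypothetical helper name.

```shell
# Hypothetical helper: suggest a virt_type based on the CPU flags.
# The optional argument is only there to make the function testable,
# by default /proc/cpuinfo is used.
suggest_virt_type() (
  cpuinfo="${1:-/proc/cpuinfo}"
  # grep -c prints 0 and returns a non-zero exit code if nothing matches.
  count="$(grep -E -c '(vmx|svm)' "$cpuinfo" || true)"
  if [ "${count:-0}" -gt 0 ]; then
    echo kvm
  else
    echo qemu
  fi
)

# Usage on the compute node:
# suggest_virt_type
```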

Of course, we need to install the Nova package on the compute node before we can do anything:

[root@compute ~]$ dnf install openstack-nova-compute -y

The configuration of Nova on the compute node is not much different from the one we’ve done on the controller node, but we do not need to specify any connection details for the Nova databases. We need, however, to tell Nova how to virtualize by setting the driver and the type of virtualization:

[root@compute ~]$ egrep -v "^#|^$" /etc/nova/nova.conf

[DEFAULT]

compute_driver=libvirt.LibvirtDriver

my_ip=192.168.122.91

state_path=/var/lib/nova

enabled_apis = osapi_compute,metadata

transport_url=rabbit://openstack:admin@controller

[api]

auth_strategy = keystone

[api_database]

[barbican]

[barbican_service_user]

[cache]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[cyborg]

[database]

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://controller:9292

[guestfs]

[healthcheck]

[image_cache]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = admin

[libvirt]

virt_type=kvm

[metrics]

[mks]

[neutron]

[notifications]

[os_vif_linux_bridge]

[os_vif_ovs]

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[oslo_limit]

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[pci]

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = admin

[privsep]

[profiler]

[profiler_jaeger]

[profiler_otlp]

[quota]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

send_service_user_token = true

auth_url = https://controller/identity

auth_strategy = keystone

auth_type = password

project_domain_name = Default

project_name = service

user_domain_name = Default

username = nova

password = admin

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

[workarounds]

[wsgi]

[zvm]
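If the compute node configuration was copied over from the controller node, it is easy to leave a database connection in there by mistake. As a sketch, the following function reports if [database] or [api_database] contain a "connection" setting; `has_db_connection` is a hypothetical helper name and not part of Nova.

```shell
# Hypothetical helper: succeed if [database] or [api_database]
# contain a "connection" setting, fail otherwise.
has_db_connection() {
  awk '
    # Track if we are inside one of the two database sections.
    /^\[/ { in_db = ($0 == "[database]" || $0 == "[api_database]"); next }
    in_db && /^[ \t]*connection[ \t]*=/ { found = 1 }
    END { exit (found ? 0 : 1) }
  ' "$1"
}

# Usage on the compute node:
# if has_db_connection /etc/nova/nova.conf; then
#   echo "database connection found, this is not needed on a compute node"
# fi
```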

As we want to use libvirt, this needs to be installed as well (this was not required on the controller node):

[root@compute ~]$ dnf install libvirt -y

Enable and start the services on the compute node:

[root@compute ~]$ systemctl enable libvirtd.service openstack-nova-compute.service

Created symlink /etc/systemd/system/multi-user.target.wants/libvirtd.service → /usr/lib/systemd/system/libvirtd.service.

Created symlink /etc/systemd/system/sockets.target.wants/libvirtd.socket → /usr/lib/systemd/system/libvirtd.socket.

Created symlink /etc/systemd/system/sockets.target.wants/libvirtd-ro.socket → /usr/lib/systemd/system/libvirtd-ro.socket.

Created symlink /etc/systemd/system/sockets.target.wants/libvirtd-admin.socket → /usr/lib/systemd/system/libvirtd-admin.socket.

Created symlink /etc/systemd/system/multi-user.target.wants/openstack-nova-compute.service → /usr/lib/systemd/system/openstack-nova-compute.service.

[root@compute ~]$ systemctl start libvirtd.service openstack-nova-compute.service

Compute nodes need to be attached to a cell (see above), so let’s do this on the controller node:

[root@controller ~]$ . admin-openrc

[root@controller ~]$ openstack compute service list --service nova-compute

+--------------------------------------+--------------+-----------------------------+------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+--------------------------------------+--------------+-----------------------------+------+---------+-------+----------------------------+

| 3997f0ab-f9b1-4a7a-b635-01d71c805220 | nova-compute | compute.it.dbi-services.com | nova | enabled | up | 2025-01-22T08:41:19.727723 |

+--------------------------------------+--------------+-----------------------------+------+---------+-------+----------------------------+

[root@controller ~]$ sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting computes from cell 'cell1': 804d576e-ac59-4fc5-b83a-018088ea8c11

Checking host mapping for compute host 'compute.it.dbi-services.com': 04bfd9d9-df04-4479-843e-e97457c0ab67

Creating host mapping for compute host 'compute.it.dbi-services.com': 04bfd9d9-df04-4479-843e-e97457c0ab67

Found 1 unmapped computes in cell: 804d576e-ac59-4fc5-b83a-018088ea8c11

If all is fine, the compute node should show up when we ask for the compute service list:

[root@controller ~]$ openstack compute service list

+--------------------------------------+----------------+--------------------------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+--------------------------------------+----------------+--------------------------------+----------+---------+-------+----------------------------+

| 3997f0ab-f9b1-4a7a-b635-01d71c805220 | nova-compute | compute.it.dbi-services.com | nova | enabled | up | 2025-01-22T08:43:49.725537 |

| 29cc3084-1066-4a1f-b9f6-6f0c2187d6b5 | nova-scheduler | controller.it.dbi-services.com | internal | enabled | up | 2025-01-22T08:43:51.402825 |

| aea131c6-96b6-466f-802e-58018c931ec1 | nova-conductor | controller.it.dbi-services.com | internal | enabled | up | 2025-01-22T08:43:51.702004 |

+--------------------------------------+----------------+--------------------------------+----------+---------+-------+----------------------------+
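Instead of reading the table, the state of the services can also be checked in a script. The sketch below assumes that the list is requested with "-f value -c Binary -c State", which gives one line per service with the binary and the state; `check_services_up` is a hypothetical helper name.

```shell
# Hypothetical helper: read "binary state" lines from stdin, report every
# service which is not "up" and fail if there is at least one of them.
check_services_up() {
  awk '
    NF == 0 { next }
    $2 != "up" { print "not up: " $1; bad = 1 }
    END { exit (bad ? 1 : 0) }
  '
}

# Usage on the controller node, after sourcing admin-openrc:
# openstack compute service list -f value -c Binary -c State | check_services_up
```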

Nova should show up in the catalog:

[root@controller ~]$ openstack catalog list

+-----------+-----------+-----------------------------------------+

| Name | Type | Endpoints |

+-----------+-----------+-----------------------------------------+

| keystone | identity | RegionOne |

| | | public: http://controller:5000/v3/ |

| | | RegionOne |

| | | internal: http://controller:5000/v3/ |

| | | RegionOne |

| | | admin: http://controller:5000/v3/ |

| | | |

| glance | image | RegionOne |

| | | public: http://controller:9292 |

| | | RegionOne |

| | | internal: http://controller:9292 |

| | | RegionOne |

| | | admin: http://controller:9292 |

| | | |

| placement | placement | RegionOne |

| | | public: http://controller:8778 |

| | | RegionOne |

| | | internal: http://controller:8778 |

| | | RegionOne |

| | | admin: http://controller:8778 |

| | | |

| nova | compute | RegionOne |

| | | public: http://controller:8774/v2.1 |

| | | RegionOne |

| | | internal: http://controller:8774/v2.1 |

| | | RegionOne |

| | | admin: http://controller:8774/v2.1 |

| | | |

| glance | image | |

| glance | image | |

| glance | image | |

+-----------+-----------+-----------------------------------------+

Once more (see last post), verify the image service (Glance):

[root@controller ~]$ openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| 150fd48b-8ed4-4170-ad98-213d9eddcba0 | cirros | active |

+--------------------------------------+--------+--------+

… and finally check that the cells and placement API are working properly:

[root@controller ~]$ nova-status upgrade check

+-------------------------------------------+

| Upgrade Check Results |

+-------------------------------------------+

| Check: Cells v2 |

| Result: Success |

| Details: None |

+-------------------------------------------+

| Check: Placement API |

| Result: Success |

| Details: None |

+-------------------------------------------+

| Check: Cinder API |

| Result: Success |

| Details: None |

+-------------------------------------------+

| Check: Policy File JSON to YAML Migration |

| Result: Success |

| Details: None |

+-------------------------------------------+

| Check: Older than N-1 computes |

| Result: Success |

| Details: None |

+-------------------------------------------+

| Check: hw_machine_type unset |

| Result: Success |

| Details: None |

+-------------------------------------------+

| Check: Service User Token Configuration |

| Result: Success |

| Details: None |

+-------------------------------------------+
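When this check should run in a script, the exit code is easier to work with than the table. As far as we know from the nova-status documentation, 0 means all checks passed, 1 means at least one warning, 2 means at least one failure and 255 is an unexpected error. A minimal sketch, with `describe_upgrade_check` as a hypothetical helper name:

```shell
# Hypothetical helper: translate the exit code of "nova-status upgrade check"
# into a short message.
describe_upgrade_check() {
  case "$1" in
    0) echo "all checks passed" ;;
    1) echo "warning: at least one check needs investigation" ;;
    2) echo "failure: at least one check failed" ;;
    *) echo "unexpected error (exit code $1)" ;;
  esac
}

# Usage on the controller node:
# nova-status upgrade check > /dev/null; describe_upgrade_check "$?"
describe_upgrade_check 0
```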

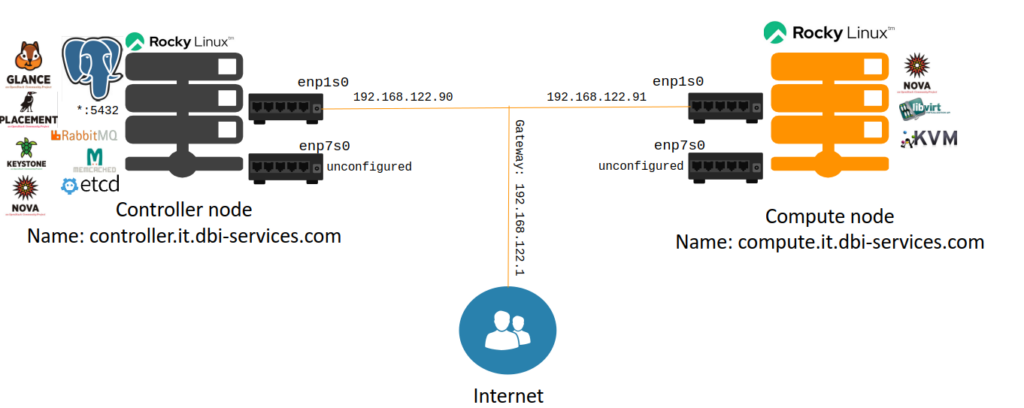

That’s it for configuring Nova on the controller and the compute nodes and this leaves us with the following setup:

As you can see, this is getting more and more complex as there are many pieces which make up our final OpenStack deployment. In the next post we’ll create and configure the Network Service (Neutron).
