In the last post we prepared the controller and the compute node so that we are ready to deploy the first OpenStack service: Keystone, the Identity Service. Before we dive into the details, let's quickly talk about why we need such a service. An OpenStack setup needs some form of authentication and service discovery. This is the task of Keystone, which exposes an API for both.

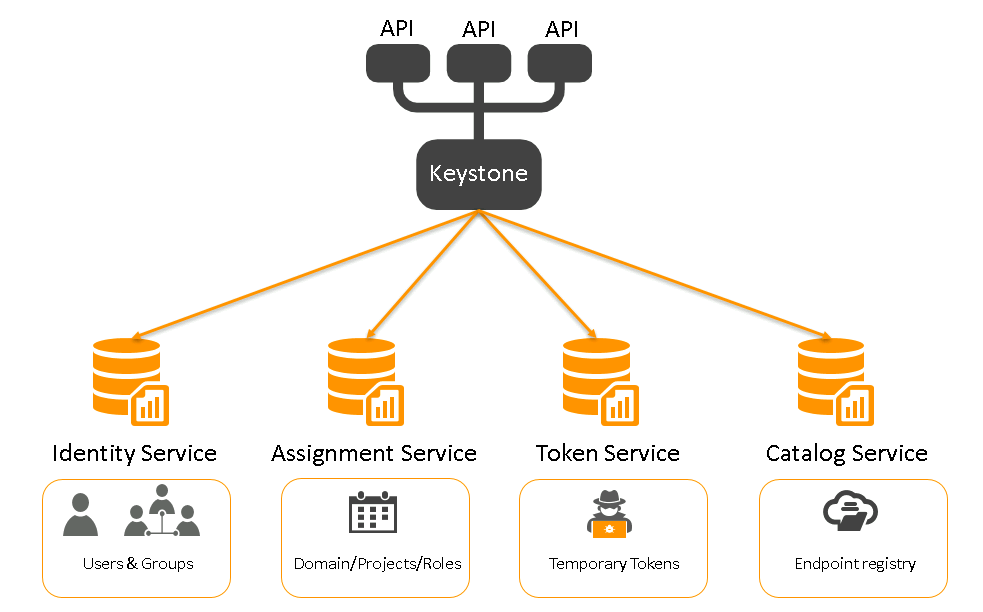

Keystone is not a single service but a combination of multiple internal services which expose endpoints:

Identity service: Provides authentication credentials for users and groups

- Users: Represent an individual API consumer (those are not globally unique, only in their domain)

- Groups: A collection of users

- Domains: A container for users, projects and groups

Assignment Service: Provides data about roles and role assignments

- Roles: The level of authorization the end user can obtain

- Role Assignments: A 3-tuple that has a Role, a Resource and an Identity

Token Service: Validates and manages tokens used for authenticating requests

Catalog Service: Provides an endpoint registry used for endpoint discovery

To visualize this, it looks like this:

Now that we have an overview of Keystone, let’s start setting up the service. Before Keystone can be set up, we need a message queue, which OpenStack uses to coordinate operations and exchange status information among services. The message queue is typically installed on the controller node, and we’ll use RabbitMQ for this. The steps are quite simple:

[root@controller ~]$ dnf install rabbitmq-server -y

[root@controller ~]$ systemctl enable rabbitmq-server.service

[root@controller ~]$ systemctl start rabbitmq-server.service

[root@controller ~]$ rabbitmqctl add_user openstack admin

[root@controller ~]$ rabbitmqctl set_permissions openstack ".*" ".*" ".*"

The second-to-last line creates the “openstack” user with the password “admin”, and the last line grants the “openstack” user full configure, write, and read permissions on the default vhost “/”:

set_permissions [-p vhost] user conf write read

vhost The name of the virtual host to which to grant the user access, defaulting to "/".

user The name of the user to grant access to the specified virtual host.

conf A regular expression matching resource names for which the user is granted configure permissions.

write A regular expression matching resource names for which the user is granted write permissions.

read A regular expression matching resource names for which the user is granted read permissions.
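To get a feeling for what the three patterns do, the following sketch checks a few resource names against sample patterns. It is only an approximation: `grep -E` stands in for RabbitMQ's own regular expression engine, and the resource names and the `would_allow` helper are made up for illustration.

```shell
#!/bin/sh
# Illustration only: grep -E approximates how RabbitMQ matches a permission
# pattern against a resource (queue or exchange) name.

# would_allow PATTERN RESOURCE
# Returns 0 if PATTERN matches RESOURCE, 1 otherwise.
would_allow() {
  wa_pattern=$1
  wa_resource=$2
  # RabbitMQ documents the empty pattern '' as a synonym for '^$',
  # which matches no named resource.
  [ -n "$wa_pattern" ] || wa_pattern='^$'
  printf '%s\n' "$wa_resource" | grep -Eq -- "$wa_pattern"
}

# Hypothetical resource names, checked against three sample patterns.
for resource in notifications.info compute.node1; do
  for pattern in '.*' '^compute\.' ''; do
    if would_allow "$pattern" "$resource"; then
      verdict=allow
    else
      verdict=deny
    fi
    printf '%-20s %-14s %s\n' "$resource" "'$pattern'" "$verdict"
  done
done
```

With `".*"` for all three permissions, as used above, every resource name matches.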

We also need something to cache the authentication tokens, and for this we use Memcached:

[root@controller ~]$ dnf install memcached python3-memcached -y

[root@controller ~]$ grep -i options /etc/sysconfig/memcached

OPTIONS="-l 192.168.122.90,::1"

[root@controller ~]$ systemctl enable memcached

[root@controller ~]$ systemctl start memcached
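The `grep` above only shows the resulting `OPTIONS` line. One way to get there is to rewrite the line with `sed`. The sketch below does this on a scratch copy so it can be tried safely. The file contents are an assumption about what the package ships by default (loopback only), and `192.168.122.90` is the controller address used throughout this post.

```shell
#!/bin/sh
# Work on a scratch file instead of the real /etc/sysconfig/memcached.
conf=$(mktemp)

# Assumed package default: Memcached listens on loopback only.
cat > "$conf" <<'EOF'
PORT="11211"
USER="memcached"
MAXCONN="1024"
CACHESIZE="64"
OPTIONS="-l 127.0.0.1,::1"
EOF

# Replace the loopback IPv4 address with the controller's address so that
# other nodes can reach the cache; keep ::1 for local access.
controller_ip=192.168.122.90
sed "s/^OPTIONS=.*/OPTIONS=\"-l ${controller_ip},::1\"/" "$conf" > "$conf.new"
mv "$conf.new" "$conf"

# Show the rewritten line.
grep '^OPTIONS=' "$conf"
```

Applied to the real file, the change only takes effect once Memcached is (re)started. Afterwards, `ss -tln` should show a listener on port 11211, Memcached's default port, bound to the controller address.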

Memcached needs to listen on an externally reachable address, which is why the “OPTIONS” parameter has to be adjusted.

On top of that we need a distributed configuration store, which OpenStack uses for distributed key locking, storing configuration, keeping track of service liveness, and other scenarios. etcd is used for that purpose. In a production setup it would be set up as a cluster to make it highly available; here, we just run a single instance on the controller node:

[root@controller ~]$ dnf install etcd -y

[root@controller ~]$ cat /etc/etcd/etcd.conf

name: controller

data-dir: /var/lib/etcd/default.etcd

max-wals: 2

auto-compaction-retention: 5m

auto-compaction-mode: revision

initial-advertise-peer-urls: http://192.168.122.90:2380

listen-peer-urls: http://192.168.122.90:2380

listen-client-urls: http://192.168.122.90:2379,http://localhost:2379

advertise-client-urls: http://192.168.122.90:2379

initial-cluster: controller=http://192.168.122.90:2380

initial-cluster-token: etcd-cluster-01

initial-cluster-state: new

[root@controller ~]$ cat /etc/systemd/system/etcd.service

[Unit]

Description=dbi services etcd service

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

User=etcd

Type=notify

WorkingDirectory=/var/lib/etcd/

ExecStart=/usr/bin/etcd --config-file /etc/etcd/etcd.conf

Restart=always

RestartSec=10s

LimitNOFILE=40000

[Install]

WantedBy=multi-user.target

[root@controller ~]$ systemctl enable etcd

[root@controller ~]$ systemctl start etcd
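Before moving on, it can be worth confirming that etcd actually answers on the client URL configured above. A minimal check, assuming the `etcdctl` client is installed alongside the server (the `ETCD_ENDPOINT` variable name is just for illustration):

```shell
#!/bin/sh
# Client URL taken from listen-client-urls in /etc/etcd/etcd.conf.
ETCD_ENDPOINT="http://192.168.122.90:2379"

# Only needed for etcdctl releases older than 3.4, which default to the v2 API.
export ETCDCTL_API=3

if command -v etcdctl >/dev/null 2>&1; then
  # Ask the endpoint whether it is healthy, then list the cluster members
  # (a single member named "controller" in this setup).
  etcdctl --endpoints="$ETCD_ENDPOINT" endpoint health \
    || echo "etcd did not report healthy on $ETCD_ENDPOINT"
  etcdctl --endpoints="$ETCD_ENDPOINT" member list \
    || echo "could not list members on $ETCD_ENDPOINT"
else
  echo "etcdctl not found, skipping the check"
fi
```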

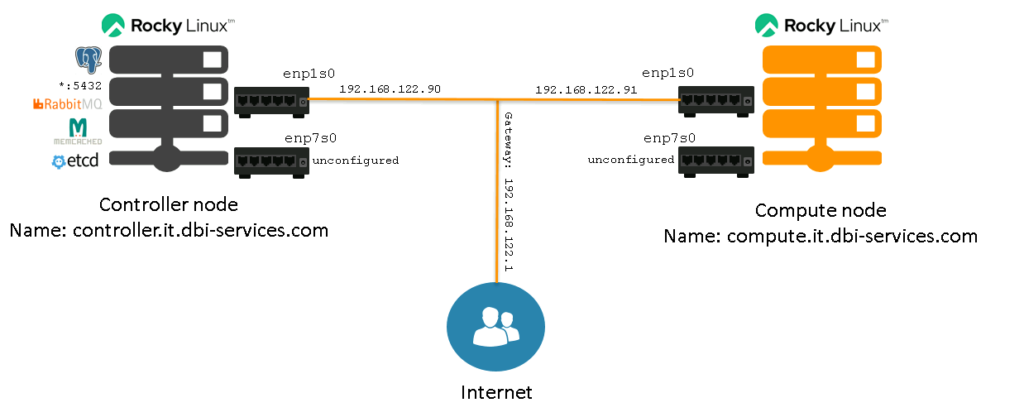

This makes our current setup look like this:

Quite a few services already, and we do not even have an OpenStack service running yet.

Keystone is one of the components which needs the database as a backend to store its configuration, so we need to create a user and a database:

[root@controller ~]$ su - postgres -c "psql -c \"create user keystone with login password 'admin'\""

CREATE ROLE

[root@controller ~]$ su - postgres -c "psql -c 'create database keystone with owner=keystone'"

CREATE DATABASE

Now we’re ready to install Keystone itself, the Apache HTTP server with mod_wsgi, and the Python driver for the PostgreSQL backend:

[root@controller ~]$ dnf install openstack-keystone httpd python3-mod_wsgi python3-psycopg2

The configuration for Keystone is quite simple for this demo setup (basically the connection to the database to use and the token provider):

[root@ostack-controller ~]$ egrep "\@localhost|provider = fernet|backend =" /etc/keystone/keystone.conf | egrep -v "^#"

backend = postgresql

connection = postgresql+psycopg2://keystone:admin@localhost/keystone

provider = fernet
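The `egrep` output above drops the section headers, so it is not obvious where each setting belongs. The following sketch prints the section next to every matching option. It runs against a small sample file: the `[database]` and `[token]` sections follow the upstream Keystone installation guide, the sample is not a complete `keystone.conf`, and `show_settings` is a helper made up for this post.

```shell
#!/bin/sh
# Sample file standing in for /etc/keystone/keystone.conf.
conf=$(mktemp)
cat > "$conf" <<'EOF'
[DEFAULT]

[database]
#connection = <None>
connection = postgresql+psycopg2://keystone:admin@localhost/keystone

[token]
provider = fernet
EOF

# show_settings FILE
# Prints "[section] option = value" for the options of interest.
# Commented-out lines are skipped because the match is anchored at line start.
show_settings() {
  awk '
    /^\[/ { section = $0; next }
    /^(connection|provider) *=/ { print section " " $0 }
  ' "$1"
}

show_settings "$conf"
```

Pointing `show_settings` at `/etc/keystone/keystone.conf` gives the same view for the real configuration.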

If configured correctly, the database can be populated:

[root@controller ~]$ su -s /bin/sh -c "keystone-manage db_sync" keystone

2025-01-20 12:17:45.497 24424 INFO alembic.runtime.migration [-] Context impl PostgresqlImpl.

2025-01-20 12:17:45.498 24424 INFO alembic.runtime.migration [-] Will assume transactional DDL.

2025-01-20 12:17:45.524 24424 INFO alembic.runtime.migration [-] Running upgrade -> 27e647c0fad4, Initial version.

2025-01-20 12:17:45.905 24424 INFO alembic.runtime.migration [-] Running upgrade 27e647c0fad4 -> 29e87d24a316, Initial no-op Yoga expand migration.

2025-01-20 12:17:45.906 24424 INFO alembic.runtime.migration [-] Running upgrade 29e87d24a316 -> b4f8b3f584e0, Fix incorrect constraints.

2025-01-20 12:17:45.910 24424 INFO alembic.runtime.migration [-] Running upgrade b4f8b3f584e0 -> 11c3b243b4cb, Remove service_provider.relay_state_prefix server default.

2025-01-20 12:17:45.910 24424 INFO alembic.runtime.migration [-] Running upgrade 11c3b243b4cb -> 47147121, Add Identity Federation attribute mapping schema version.

2025-01-20 12:17:45.911 24424 INFO alembic.runtime.migration [-] Running upgrade 27e647c0fad4 -> e25ffa003242, Initial no-op Yoga contract migration.

2025-01-20 12:17:45.912 24424 INFO alembic.runtime.migration [-] Running upgrade e25ffa003242 -> 99de3849d860, Fix incorrect constraints.

Initialize the Fernet key repositories:

[root@controller ~]$ keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

2025-01-20 12:19:38.571 24512 INFO keystone.common.utils [-] /etc/keystone/fernet-keys/ does not appear to exist; attempting to create it

2025-01-20 12:19:38.571 24512 INFO keystone.common.fernet_utils [-] Created a new temporary key: /etc/keystone/fernet-keys/0.tmp

2025-01-20 12:19:38.571 24512 INFO keystone.common.fernet_utils [-] Become a valid new key: /etc/keystone/fernet-keys/0

2025-01-20 12:19:38.571 24512 INFO keystone.common.fernet_utils [-] Starting key rotation with 1 key files: ['/etc/keystone/fernet-keys/0']

2025-01-20 12:19:38.571 24512 INFO keystone.common.fernet_utils [-] Created a new temporary key: /etc/keystone/fernet-keys/0.tmp

2025-01-20 12:19:38.571 24512 INFO keystone.common.fernet_utils [-] Current primary key is: 0

2025-01-20 12:19:38.572 24512 INFO keystone.common.fernet_utils [-] Next primary key will be: 1

2025-01-20 12:19:38.572 24512 INFO keystone.common.fernet_utils [-] Promoted key 0 to be the primary: 1

2025-01-20 12:19:38.572 24512 INFO keystone.common.fernet_utils [-] Become a valid new key: /etc/keystone/fernet-keys/0

[root@controller ~]$ keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

2025-01-20 12:20:37.554 24557 INFO keystone.common.utils [-] /etc/keystone/credential-keys/ does not appear to exist; attempting to create it

2025-01-20 12:20:37.554 24557 INFO keystone.common.fernet_utils [-] Created a new temporary key: /etc/keystone/credential-keys/0.tmp

2025-01-20 12:20:37.554 24557 INFO keystone.common.fernet_utils [-] Become a valid new key: /etc/keystone/credential-keys/0

2025-01-20 12:20:37.554 24557 INFO keystone.common.fernet_utils [-] Starting key rotation with 1 key files: ['/etc/keystone/credential-keys/0']

2025-01-20 12:20:37.554 24557 INFO keystone.common.fernet_utils [-] Created a new temporary key: /etc/keystone/credential-keys/0.tmp

2025-01-20 12:20:37.554 24557 INFO keystone.common.fernet_utils [-] Current primary key is: 0

2025-01-20 12:20:37.554 24557 INFO keystone.common.fernet_utils [-] Next primary key will be: 1

2025-01-20 12:20:37.555 24557 INFO keystone.common.fernet_utils [-] Promoted key 0 to be the primary: 1

2025-01-20 12:20:37.555 24557 INFO keystone.common.fernet_utils [-] Become a valid new key: /etc/keystone/credential-keys/0
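Both commands create a key repository below `/etc/keystone`. These keys protect tokens and stored credentials, so they should be readable by the `keystone` user only. A small sketch for checking that, to be run as root. The `check_key_repo` helper is made up for this post and is not part of Keystone:

```shell
#!/bin/sh
# check_key_repo DIR
# Lists the key files in DIR and returns 1 if any of them is readable by
# group or others, 2 if DIR does not exist.
check_key_repo() {
  repo=$1
  if [ ! -d "$repo" ]; then
    echo "$repo: no such directory"
    return 2
  fi
  ls -l "$repo"
  # -perm -040 matches group-readable files, -perm -004 world-readable ones.
  exposed=$(find "$repo" -type f \( -perm -040 -o -perm -004 \))
  if [ -n "$exposed" ]; then
    echo "too permissive:"
    echo "$exposed"
    return 1
  fi
  echo "$repo: ok"
}

# The two repositories created by fernet_setup and credential_setup.
for repo in /etc/keystone/fernet-keys /etc/keystone/credential-keys; do
  check_key_repo "$repo" || true
done
```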

Bootstrap the identity service:

[root@controller ~]$ keystone-manage bootstrap --bootstrap-password admin \

--bootstrap-admin-url http://controller:5000/v3/ \

--bootstrap-internal-url http://controller:5000/v3/ \

--bootstrap-public-url http://controller:5000/v3/ \

--bootstrap-region-id RegionOne

2025-01-20 12:22:04.344 24637 INFO keystone.cmd.bootstrap [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Created domain default

2025-01-20 12:22:04.362 24637 INFO keystone.cmd.bootstrap [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Created project admin

2025-01-20 12:22:04.372 24637 WARNING keystone.common.password_hashing [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Truncating password to algorithm specific maximum length 72 characters.: keystone.exception.UserNotFound: Could not find user: admin.

2025-01-20 12:22:04.557 24637 INFO keystone.cmd.bootstrap [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Created user admin

2025-01-20 12:22:04.562 24637 INFO keystone.cmd.bootstrap [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Created role reader

2025-01-20 12:22:04.565 24637 INFO keystone.cmd.bootstrap [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Created role member

2025-01-20 12:22:04.573 24637 INFO keystone.cmd.bootstrap [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Created implied role where 07a25a2a31414277b7ca44513762d417 implies 7440218252584bcc9218dfdb35b26edf

2025-01-20 12:22:04.577 24637 INFO keystone.cmd.bootstrap [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Created role manager

2025-01-20 12:22:04.583 24637 INFO keystone.cmd.bootstrap [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Created implied role where 8095ebea194a4a4a80b1bffb5a75449e implies 07a25a2a31414277b7ca44513762d417

2025-01-20 12:22:04.586 24637 INFO keystone.cmd.bootstrap [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Created role admin

2025-01-20 12:22:04.590 24637 INFO keystone.cmd.bootstrap [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Created implied role where 778315a577de45ccbbbf9ed553ccd525 implies 8095ebea194a4a4a80b1bffb5a75449e

2025-01-20 12:22:04.594 24637 INFO keystone.cmd.bootstrap [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Created role service

2025-01-20 12:22:04.606 24637 INFO keystone.cmd.bootstrap [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Granted role admin on project admin to user admin.

2025-01-20 12:22:04.612 24637 INFO keystone.cmd.bootstrap [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Granted role admin on the system to user admin.

2025-01-20 12:22:04.617 24637 WARNING py.warnings [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] /usr/lib/python3.9/site-packages/pycadf/identifier.py:71: UserWarning: Invalid uuid: RegionOne. To ensure interoperability, identifiers should be a valid uuid.

warnings.warn(('Invalid uuid: %s. To ensure interoperability, '

2025-01-20 12:22:04.619 24637 INFO keystone.cmd.bootstrap [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Created region RegionOne

2025-01-20 12:22:04.635 24637 INFO keystone.cmd.bootstrap [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Created public endpoint http://controller:5000/v3/

2025-01-20 12:22:04.642 24637 INFO keystone.cmd.bootstrap [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Created internal endpoint http://controller:5000/v3/

2025-01-20 12:22:04.647 24637 INFO keystone.cmd.bootstrap [None req-26262c3e-e5c4-426f-9c5d-28c6fff534af - - - - - -] Created admin endpoint http://controller:5000/v3/
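The “implied role” lines in the log build a chain between the default roles. Read together with the order in which the roles are created, they suggest that admin implies manager, manager implies member, and member implies reader. The sketch below walks such a chain to list the roles a user effectively holds. The role names come from the log; the `implies` and `effective_roles` helpers are purely illustrative and hard-code the chain instead of asking Keystone.

```shell
#!/bin/sh
# implies ROLE
# Prints the role directly implied by ROLE (nothing if there is none).
implies() {
  case "$1" in
    admin)   echo manager ;;
    manager) echo member ;;
    member)  echo reader ;;
  esac
}

# effective_roles ROLE
# Prints ROLE followed by every role it implies, directly or transitively,
# one per line.
effective_roles() {
  current=$1
  while [ -n "$current" ]; do
    echo "$current"
    current=$(implies "$current")
  done
}

effective_roles admin
```

On a running deployment, `openstack implied role list` shows the actual relationships.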

Configure, enable, and start the Apache web server:

[root@controller ~]$ grep ServerName /etc/httpd/conf/httpd.conf

# ServerName gives the name and port that the server uses to identify itself.

ServerName controller:80

[root@controller ~]$ ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

[root@controller ~]$ systemctl enable httpd.service

[root@controller ~]$ systemctl start httpd.service

Now is the time to check whether we can really obtain an authentication token (using the password “admin” we set during the bootstrap above):

[root@controller ~]$ openstack --os-auth-url http://controller:5000/v3 \

--os-project-domain-name Default --os-user-domain-name Default \

--os-project-name admin --os-username admin token issue

Password:

+------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2025-01-20T12:27:21+0000 |

| id | gAAAAABnjjMZGHCbtGpg6vGrPfc69LKUTljP7rnfZycu9CmUC-qQftBo_r2jhKBo5GoYenm7-yLCE2eAtondTKCxa0FfA0hzxPCzUFS89wSptyHTI-OR- |

| | ayeldbMYrubT0G7snPAcgqhkx38Km3m_64tPGXtiAvtDvBAj7NoLmPQPf39mqDUJ3o |

| project_id | 920bf34a6c88454f90d405124ca1076d |

| user_id | 3d6998879b6c4fdd91ba4b6ec00b7157 |

+------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------+

All fine, this works as expected.

It is recommended to create a small environment script that sets the environment for us:

[root@controller ~]$ echo "export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=admin

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2" > ~/admin-openrc
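Since the script contains the admin password in clear text, it makes sense to restrict its permissions. A variant of the step above that does this, sketched as a small function (`write_openrc` is a hypothetical helper, and the demo writes to a scratch file rather than `~/admin-openrc`):

```shell
#!/bin/sh
# write_openrc FILE
# Writes the admin environment to FILE, readable by the owner only.
write_openrc() {
  target=$1
  # Create the file with restrictive permissions before the password lands in it.
  ( umask 077; : > "$target" ) || return 1
  cat > "$target" <<'EOF'
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=admin
export OS_USERNAME=admin
export OS_PASSWORD=admin
export OS_AUTH_URL=http://controller:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
  # Also covers the case where FILE already existed with wider permissions.
  chmod 600 "$target"
}

demo=$(mktemp)
write_openrc "$demo"

# Source the file and show two of the variables it sets.
. "$demo"
echo "OS_USERNAME=$OS_USERNAME OS_AUTH_URL=$OS_AUTH_URL"
```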

After sourcing that, asking for another token should no longer prompt for a password:

[root@controller ~]$ . admin-openrc

[root@controller ~]$ openstack token issue

+------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2025-01-20T12:31:10+0000 |

| id | gAAAAABnjjP-sTJYCN3CE-6kA1AGOmfI8yPTeNCTKkyYj4c4pXqmCbF4FPO_-Snhw1NSN2CKi9WDGrTZtQQ2O4f_PsgZngNgvnza4-AHcj2ku4lUCt-A- |

| | nbBQCK_oP2f0FT5wDVUYZ3oVRLQPccbfdwHXf8C2MTMDaSmoQbXHZlItkQ_CkrS5_4 |

| project_id | 920bf34a6c88454f90d405124ca1076d |

| user_id | 3d6998879b6c4fdd91ba4b6ec00b7157 |

+------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------+
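With the environment in place, the other Keystone services described at the beginning can be queried as well: the catalog with its endpoints, and the role assignments created by the bootstrap. A short sketch, assuming `admin-openrc` has been sourced in the current shell (the `show_keystone_state` function name is made up for this post):

```shell
#!/bin/sh
# show_keystone_state
# Queries the catalog and the role assignments, or prints a hint if the
# client or the environment is missing.
show_keystone_state() {
  if command -v openstack >/dev/null 2>&1 && [ -n "${OS_AUTH_URL:-}" ]; then
    # Catalog Service: registered services and their endpoints
    openstack catalog list || echo "catalog query failed"
    openstack endpoint list || echo "endpoint query failed"
    # Assignment Service: who holds which role on what (names instead of IDs)
    openstack role assignment list --names || echo "role assignment query failed"
  else
    echo "openstack client not available or admin-openrc not sourced, skipping"
  fi
}

show_keystone_state
```

Right after the bootstrap, the role assignment list is expected to contain the two grants reported in the bootstrap log: admin on the project admin and admin on the system.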

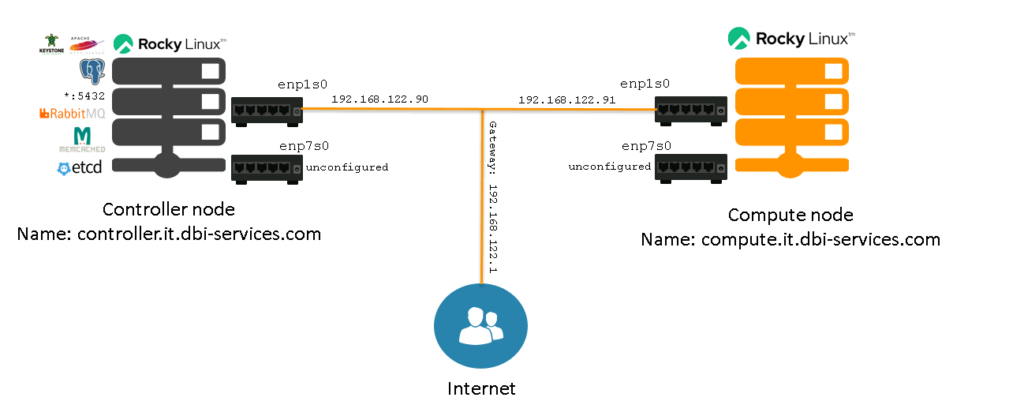

That was quite some stuff to follow for getting the first OpenStack service up and running. This leaves us with the following setup:

As you can see, we already have six components on the controller node:

- The PostgreSQL instance

- RabbitMQ

- Memcached

- etcd

- The Apache web server

- Keystone (served by Apache)

In the next post we’ll set up the Image (Glance) and the Placement service.
