This is the second part of my journey with Tanzu (in case you missed it, the first part is here: VMware Tanzu Kubernetes: Introduction). This time, I’ll describe how to create our first managed cluster, then how to log in to it and deploy our first pod.

If you followed my first post regarding Tanzu, you may remember that I described Tanzu Kubernetes for vSphere aka TKGs.

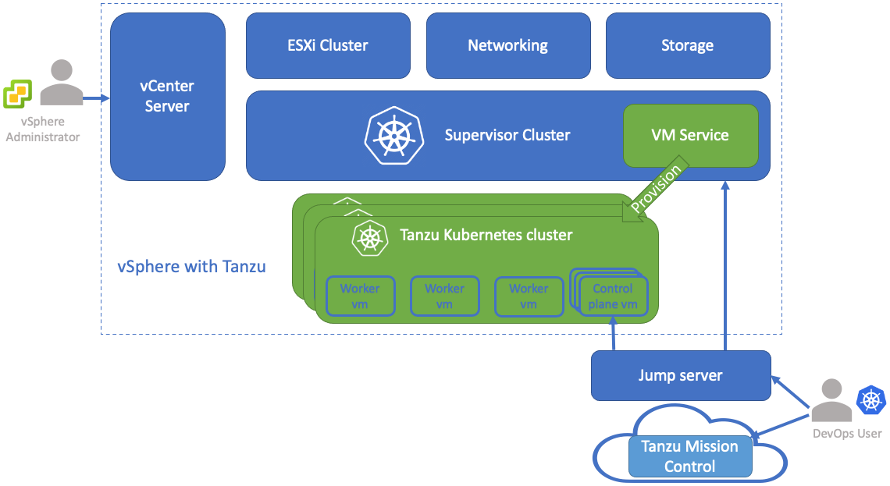

Infrastructure example

Before creating our first cluster, let’s talk about the topology I used for my infrastructure.

I decided to provision a VM as my “Jump server” for all commands to the supervisor and my provisioned cluster.

To run commands to administer our supervisor, we need to install Kubernetes CLI Tools for vSphere in the jump server.

Configure Tanzu CLI

The following link details exactly how to install kubectl and its vSphere plugin.

Once installed in our environment, let’s try it.

$ kubectl vsphere

    vSphere Plugin for kubectl.

    Usage:
      kubectl-vsphere [command]

    Available Commands:
      help        Help about any command
      login       Authenticate user with vCenter Namespaces
      logout      Destroys current sessions with all vCenter Namespaces clusters.
      version     Prints the version of the plugin.

    Flags:
      -h, --help                     help for kubectl-vsphere
          --request-timeout string   Request timeout for HTTP client.
      -v, --verbose int              Print verbose logging information.

    Use "kubectl-vsphere [command] --help" for more information about a command.

Nice, now we are ready to connect to the supervisor.

Connection to the Supervisor

To connect to the supervisor, you will need three things:

- An account in the supervisor

- The vCenter Server root CA installed on the jump server (this can be bypassed with the --insecure-skip-tls-verify flag for test purposes)

- Supervisor server name (it can be an IP address)

$ kubectl-vsphere login --insecure-skip-tls-verify --server 172.18.100.102 --vsphere-username myaccount

KUBECTL_VSPHERE_PASSWORD environment variable is not set. Please enter the password below

Password:

Logged in successfully.

You have access to the following contexts:

172.18.100.102

tkg-test

If the context you wish to use is not in this list, you may need to try

logging in again later, or contact your cluster administrator.

To change context, use `kubectl config use-context <workload name>`

Tip: for convenience, you can set your password in the KUBECTL_VSPHERE_PASSWORD environment variable.

$ export KUBECTL_VSPHERE_PASSWORD=mypassword
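Typing the password directly in an `export` command usually leaves it in the shell history. Here is a minimal sketch of an alternative, assuming a POSIX shell on the jump server. The function name `read_vsphere_password` is hypothetical; only the `KUBECTL_VSPHERE_PASSWORD` variable comes from the plugin.

```shell
# Hypothetical helper: prompt for the password and export it for kubectl-vsphere
# without typing it on the command line (so it stays out of the shell history).
read_vsphere_password() {
    printf 'vSphere password: ' >&2
    # Hide the typed characters only when stdin is a real terminal.
    if [ -t 0 ]; then stty -echo; fi
    IFS= read -r KUBECTL_VSPHERE_PASSWORD
    if [ -t 0 ]; then stty echo; printf '\n' >&2; fi
    export KUBECTL_VSPHERE_PASSWORD
}
```

Call `read_vsphere_password` once in the session, then run the `kubectl-vsphere login` command as before.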

We can see two available contexts, which are in fact vSphere namespaces.

The first one (172.18.100.102) represents the Supervisor namespace and the second one is the vSphere namespace created by our vSphere admin.

Let’s switch to it.

$ kubectl config use-context tkg-test

Switched to context "tkg-test".

Describe our cluster

At this point, the vSphere admin has already set up everything required to create our first cluster. Our task as DevOps engineers is to reference these resources in a YAML description file.

Let’s take a look at it.

    apiVersion: run.tanzu.vmware.com/v1alpha2
    kind: TanzuKubernetesCluster
    metadata:
      name: tkgs-cluster-1
      namespace: tkg-test
    spec:
      topology:
        controlPlane:
          replicas: 3
          vmClass: best-effort-medium
          storageClass: tkg-gold-storagepolicy
          tkr:
            reference:
              name: v1.21.6---vmware.1-tkg.1.b3d708a
        nodePools:
        - name: worker-pool
          replicas: 3
          vmClass: best-effort-large
          storageClass: tkg-gold-storagepolicy
          tkr:
            reference:
              name: v1.21.6---vmware.1-tkg.1.b3d708a

In this example, I used the kind TanzuKubernetesCluster to describe my cluster. It requires the following information:

- replicas: number of control plane (master) nodes and number of worker nodes

- vmClass: Instance type available

- storageClass: kind of storage at vSphere layer

- Kubernetes version to install

As my vSphere is version 7.0 U3, the highest compatible Kubernetes version available to me is v1.21.6.
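The values used in the manifest can be looked up from the vSphere namespace context before writing the file. The sketch below is only an illustration: the function name `list_cluster_inputs` is hypothetical, and the resource names `tanzukubernetesreleases` and `virtualmachineclassbindings` are the ones I would expect on a vSphere 7.0 U3 supervisor, so they may differ on other versions.

```shell
# Hypothetical helper: list the values a TanzuKubernetesCluster manifest refers to.
# Run it while the vSphere namespace context (tkg-test here) is selected.
list_cluster_inputs() {
    echo "== Tanzu Kubernetes releases (tkr.reference.name) =="
    kubectl get tanzukubernetesreleases
    echo "== VM classes bound to this namespace (vmClass) =="
    kubectl get virtualmachineclassbindings
    echo "== Storage classes (storageClass) =="
    kubectl get storageclasses
}
```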

Let’s create it.

$ kubectl apply -f test-cluster.yml

tanzukubernetescluster.run.tanzu.vmware.com/tkgs-cluster-1 created

Now, let’s check the state of our cluster.

$ kubectl get tanzukubernetesclusters

NAME CONTROL PLANE WORKER TKR NAME AGE READY TKR COMPATIBLE UPDATES AVAILABLE

tkgs-cluster-1 3 4 v1.21.6---vmware.1-tkg.1.b3d708a 53s True True
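Provisioning the node VMs takes some time, so rather than re-running the command above by hand, we can block until the cluster is ready. This is a minimal sketch, assuming the TanzuKubernetesCluster object exposes a `Ready` condition (as the READY column suggests); the function name `wait_for_cluster` and the 30-minute default are my own choices.

```shell
# Hypothetical helper: block until the cluster reports Ready, or fail after a timeout.
# Usage: wait_for_cluster <cluster-name> <namespace> [timeout]
wait_for_cluster() {
    cluster="$1"
    namespace="$2"
    timeout="${3:-30m}"
    kubectl wait --for=condition=Ready \
        "tanzukubernetescluster/${cluster}" \
        --namespace "${namespace}" \
        --timeout="${timeout}"
}
```

For the cluster above, that would be `wait_for_cluster tkgs-cluster-1 tkg-test`.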

And voilà! Creating a Kubernetes cluster is very easy, isn’t it? On top of that, we got a multi-master Kubernetes cluster with far less effort than with the kubeadm approach.

Jump into our cluster

To jump into our freshly deployed Kubernetes cluster, we will use the kubectl vsphere command again.

$ kubectl-vsphere login --insecure-skip-tls-verify \
    --server 172.18.100.102 \
    --vsphere-username myaccount \
    --tanzu-kubernetes-cluster-namespace tkg-test \
    --tanzu-kubernetes-cluster-name tkgs-cluster-1

Logged in successfully.

You have access to the following contexts:

172.18.100.102

tkg-test

tkgs-cluster-1

If the context you wish to use is not in this list, you may need to try

logging in again later, or contact your cluster administrator.

To change context, use `kubectl config use-context <workload name>`
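The login output now lists the new `tkgs-cluster-1` context. If it is not already the current one, it can be selected explicitly. Below is a small sketch of that step, with a hypothetical function name `use_cluster_context`; it only relies on the standard `kubectl config get-contexts` and `kubectl config use-context` subcommands.

```shell
# Hypothetical helper: switch to a kubeconfig context only if it exists.
# Usage: use_cluster_context <context-name>
use_cluster_context() {
    target="$1"
    # "get-contexts -o name" prints one context name per line.
    if kubectl config get-contexts -o name | grep -Fqx -- "${target}"; then
        kubectl config use-context "${target}"
    else
        echo "context '${target}' not found; log in again first" >&2
        return 1
    fi
}
```

Here we would call `use_cluster_context tkgs-cluster-1`.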

We’re in! Our cluster is composed of 3 control plane (master) nodes and 3 worker nodes. Let’s check that.

$ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

tkgs-cluster-1-control-plane-4rrqm Ready control-plane,master 15m v1.21.6+vmware.1 172.18.100.30 <none> VMware Photon OS/Linux 4.19.198-1.ph3-esx containerd://1.4.11

tkgs-cluster-1-control-plane-btvq5 Ready control-plane,master 15m v1.21.6+vmware.1 172.18.100.26 <none> VMware Photon OS/Linux 4.19.198-1.ph3-esx containerd://1.4.11

tkgs-cluster-1-control-plane-ftr6f Ready control-plane,master 15m v1.21.6+vmware.1 172.18.100.29 <none> VMware Photon OS/Linux 4.19.198-1.ph3-esx containerd://1.4.11

tkgs-cluster-1-worker-pool-bngdt-56b65999cd-6lqs5 Ready <none> 15m v1.21.6+vmware.1 172.18.100.24 <none> VMware Photon OS/Linux 4.19.198-1.ph3-esx containerd://1.4.11

tkgs-cluster-1-worker-pool-bngdt-56b65999cd-7xkzp Ready <none> 15m v1.21.6+vmware.1 172.18.100.23 <none> VMware Photon OS/Linux 4.19.198-1.ph3-esx containerd://1.4.11

tkgs-cluster-1-worker-pool-bngdt-56b65999cd-ggx7x Ready <none> 15m v1.21.6+vmware.1 172.18.100.62 <none> VMware Photon OS/Linux 4.19.198-1.ph3-esx containerd://1.4.11
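Instead of counting lines by eye, the same check can be scripted. This sketch assumes the column layout shown above (STATUS in the second column, ROLES in the third, `<none>` for workers); the function name `count_ready_nodes` is hypothetical.

```shell
# Hypothetical helper: count Ready nodes per role from "kubectl get nodes".
count_ready_nodes() {
    kubectl get nodes --no-headers | awk '
        $2 == "Ready" && $3 ~ /control-plane/ { cp++ }
        $2 == "Ready" && $3 == "<none>"       { workers++ }
        END { printf "control-plane=%d workers=%d\n", cp, workers }'
}
```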

Test our storage

Let’s list the storage classes available in our cluster before creating our Persistent Volume Claim (PVC).

$ kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

tkg-gold-storagepolicy csi.vsphere.vmware.com Delete Immediate true 5h38m

We can create our PVC using the storage class mentioned above.

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: tkgs-test-pvc
    spec:
      accessModes:
        - ReadWriteOnce
      storageClassName: tkg-gold-storagepolicy
      resources:
        requests:
          storage: 2Gi

Once applied, we can check that it is bound.

$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

tkgs-test-pvc Bound pvc-232d636d-8904-40c5-b22b-283e21ecdb9f 2Gi RWO tkg-gold-storagepolicy 6s
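A Bound claim shows that the volume was provisioned, but to really test the storage we can mount it in a pod and write to it. The sketch below is an assumption on my side, not part of the original setup: the function name `pvc_test_pod`, the pod name `pvc-test-pod` and the `busybox` image are illustrative choices.

```shell
# Hypothetical helper: print a Pod manifest that mounts a PVC and writes a file to it.
# Usage: pvc_test_pod <pvc-name> | kubectl apply -f -
pvc_test_pod() {
    cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: pvc-test-pod
spec:
  containers:
  - name: writer
    image: busybox
    command: ["sh", "-c", "echo hello > /data/hello.txt && sleep 3600"]
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: $1
EOF
}
```

After `pvc_test_pod tkgs-test-pvc | kubectl apply -f -`, running `kubectl exec pvc-test-pod -- cat /data/hello.txt` should print the file written to the volume.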

Deploy our first pod

Maybe you’re wondering how I can already deploy my first pod without configuring the Kubernetes network (CNI). This is the magic of a managed Kubernetes: everything is pre-configured by the vSphere administrator, and by default TKGs clusters are provisioned with the Antrea CNI.

$ kubectl get deploy -n kube-system

NAME READY UP-TO-DATE AVAILABLE AGE

antrea-controller 1/1 1 1 30m

antrea-resource-init 1/1 1 1 30m

coredns 2/2 2 2 30m

OK, now let’s deploy our first “test pod”.

$ kubectl run mypod --image=nginx

pod/mypod created

Is the pod functional and correctly scheduled?

$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mypod 1/1 Running 0 19s 192.168.5.40 tkgs-cluster-1-worker-pool-bngdt-56b65999cd-xwgwp <none> <none>
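To go one step further than the Running status, we can check that nginx actually answers. This is a minimal sketch, assuming `curl` is installed on the jump server and local port 8080 is free; the function name `check_pod_http` is hypothetical.

```shell
# Hypothetical helper: wait for a pod to be Ready, then fetch its HTTP front page
# through a temporary port-forward.
# Usage: check_pod_http <pod-name> [local-port]
check_pod_http() {
    pod="$1"
    port="${2:-8080}"
    kubectl wait --for=condition=Ready "pod/${pod}" --timeout=60s || return 1
    # Forward a local port to port 80 of the pod, in the background.
    kubectl port-forward "pod/${pod}" "${port}:80" >/dev/null 2>&1 &
    pf_pid=$!
    sleep 2   # give the tunnel a moment to come up
    if curl -fsS "http://127.0.0.1:${port}/" >/dev/null; then rc=0; else rc=1; fi
    # Stop the port-forward whatever the result.
    kill "${pf_pid}" 2>/dev/null || true
    wait "${pf_pid}" 2>/dev/null || true
    return "${rc}"
}
```

`check_pod_http mypod && echo "nginx is answering"` then gives a quick yes/no answer.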

Conclusion

Our cluster is now ready. Next, we can look further into Tanzu Kubernetes specifics, such as the components available depending on your edition and how to initialize Tanzu package management. Stay tuned for the next blog.
