A few months ago I attended Franck Pachot’s session about microservices and databases at SOUG Romandie in Lausanne on May 21, 2019. He covered some of the performance challenges that a microservices architecture can introduce, especially when database components come into play with chatty applications. One year ago, I was in a situation where a customer had installed some SQL Server 2017 on Linux containers in a Docker infrastructure, with the user applications located outside of this infrastructure. It is probably an uncommon way to start with containers, but when you dive into the Docker world you quickly notice there are a lot of network drivers and considerations to be aware of. Out of curiosity, I proposed to my customer that we run some network benchmark tests to get a clear picture of these network drivers and their related overhead, in order to design the Docker infrastructure correctly from a performance standpoint.

The customer’s initial scenario was a standalone Docker infrastructure, and we compared different network configurations for the applications from a performance perspective. We did the same for a second scenario, a Docker Swarm infrastructure that we installed in a second step.

The initial reference – Host network and Docker host network

The first step was to get an initial reference directly from the host network, with no network management overhead. We used the iperf3 tool for the tests. I use this kind of tool with virtual environments as well, to make sure the network throughput is what we really expect, and I have sometimes had surprises on this topic. Back to the container world: each test was performed from a Linux host outside the Docker infrastructure, in line with the customer scenario.

The link speed of the network card attached to the Docker host is supposed to be 10 Gbits/sec …

$ sudo ethtool eth0 | grep "Speed"

Speed: 10000Mb/s

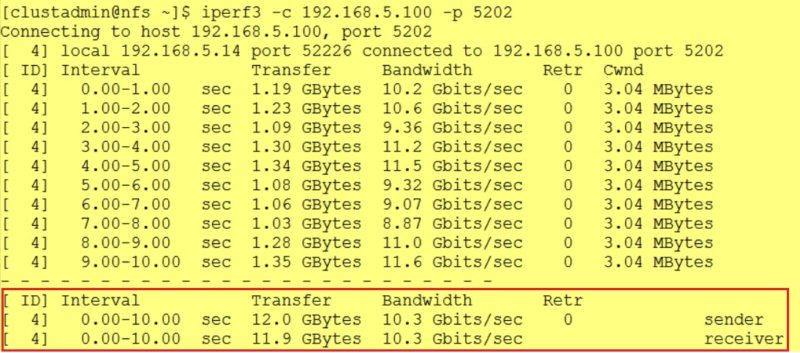

… and it is confirmed by the first iperf3 output below:

We tested the Docker host network driver as well and got similar results.

$ docker run -it --rm --name=iperf3-server --net=host networkstatic/iperf3 -s
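The commands in this post show only the server side of the benchmark. As a rough sketch of the client side, the helper below wraps the iperf3 client call. The function name iperf3_client, the DRY_RUN switch and the address 192.0.2.10 are assumptions made for illustration; they are not the exact commands used during the tests.

```shell
# Client side of the benchmark, meant to run from a Linux host outside
# the Docker infrastructure.
# Usage: iperf3_client <server-address> [port] [duration-seconds]
iperf3_client() {
    server="$1"
    port="${2:-5201}"       # 5201 is the iperf3 default port
    duration="${3:-10}"     # test duration in seconds
    set -- iperf3 -c "$server" -p "$port" -t "$duration"
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"           # hypothetical dry-run switch: print the command only
    else
        "$@"                # run the actual benchmark
    fi
}

# Hypothetical usage (192.0.2.10 stands for the Docker host address):
#   iperf3_client 192.0.2.10
#   DRY_RUN=1 iperf3_client 192.0.2.10 5204
```

With a published port, as in the bridge test later in this post, the second argument would be the host port (5204) rather than the container port.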

Docker bridge mode

By default, a Docker host creates a virtual Ethernet bridge (called docker0), attaches each container’s network interface to the bridge, and uses network address translation (NAT) when containers need to make themselves visible to the Docker host and beyond. Unless specified otherwise, a Docker container uses this bridge network, and this is exactly the network driver used by the containers at my customer. In fact, we used a user-defined bridge network, but it doesn’t matter for the tests we performed here.

$ ip addr show docker0

5: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:70:0a:e8:7a brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:70ff:fe0a:e87a/64 scope link

valid_lft forever preferred_lft forever

The iperf3 Docker container I ran for my tests uses the default bridge network, as shown below. The interface with index 24 (eth0 inside the container) is the peer of the veth0bfc2dc interface on the host.

$ docker run -d --name=iperf3-server -p 5204:5201 networkstatic/iperf3 -s

…

$ docker ps | grep iperf

5c739940e703 networkstatic/iperf3 "iperf3 -s" 38 minutes ago Up 38 minutes 0.0.0.0:5204->5201/tcp iperf3-server

$ docker exec -ti 5c7 ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

24: eth0@if25: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

[clustadmin@docker1 ~]$ ethtool -S veth0bfc2dc

NIC statistics:

peer_ifindex: 24
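The matching between the container interface and its host-side veth can also be done from the interface name itself. This is only a sketch: the function name peer_ifindex_from_name is hypothetical, and it relies on the name@ifN notation printed by ip addr show, where N is the index of the peer interface.

```shell
# Extract the peer interface index from a name such as "eth0@if25",
# as printed by `ip addr show` inside the container.
peer_ifindex_from_name() {
    case "$1" in
        *@if*) echo "${1##*@if}" ;;   # keep what follows the last "@if"
        *)     return 1 ;;            # no peer suffix, e.g. "lo"
    esac
}

# Hypothetical usage on the Docker host: show the host-side interface
# whose index matches the peer index of the container interface.
#   idx=$(peer_ifindex_from_name "eth0@if25")
#   ip -o link | grep "^${idx}: "
```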

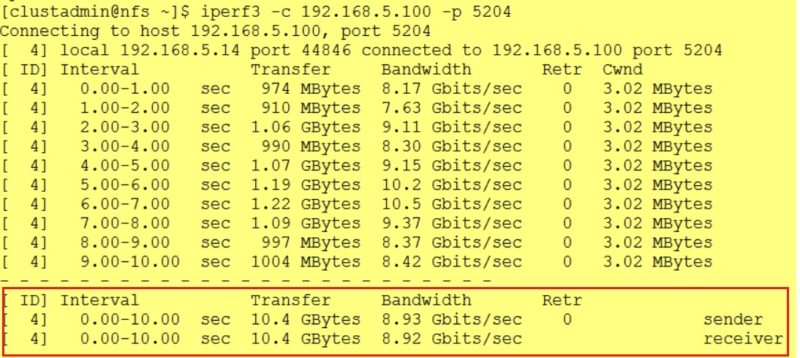

Here is the output after running the iperf3 benchmark:

It’s worth noting that the bridge network adds some overhead, with an impact of about 13% in my tests. To be honest, this is an expected outcome, especially if we refer to the Docker documentation:

Compared to the default bridge mode, the host mode gives significantly better networking performance since it uses the host’s native networking stack whereas the bridge has to go through one level of virtualization through the docker daemon.

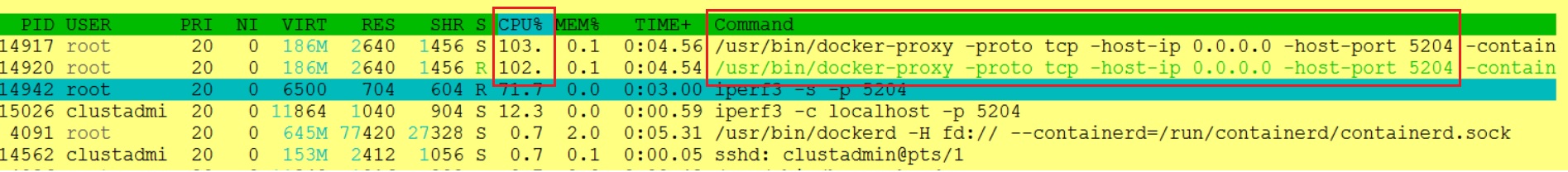

When the docker-proxy comes into play

The next scenario we wanted to test concerned the closest network proximity we may have between the user applications and the SQL Server containers in the Docker infrastructure. In other words, we assumed the application resides on the same host as the SQL Server container, and we got some surprises from the docker-proxy itself.

Before looking at the iperf3 result, I think we have to answer the million-dollar question: what is the docker-proxy? Have you ever paid attention to this process on your Docker host? Let’s run a pstree command:

$ pstree

systemd─┬─NetworkManager───2*[{NetworkManager}]

├─agetty

├─auditd───{auditd}

├─containerd─┬─containerd-shim─┬─npm─┬─node───9*[{node}]

│ │ │ └─9*[{npm}]

│ │ └─12*[{containerd-shim}]

│ ├─containerd-shim─┬─registry───9*[{registry}]

│ │ └─10*[{containerd-shim}]

│ ├─containerd-shim─┬─iperf3

│ │ └─9*[{containerd-shim}]

│ └─16*[{containerd}]

├─crond

├─dbus-daemon

├─dockerd─┬─docker-proxy───7*[{docker-proxy}]

│ └─20*[{dockerd}]

Well, if I understand the Docker documentation correctly, the purpose of this process is to enable a service consumer to communicate with the container providing the service … but it is only used in particular circumstances. Bear in mind that access to a container’s service is mostly controlled through the host netfilter framework, in both the NAT and filter tables, and the docker-proxy mechanism is required only when this method of control is not available:

- When the Docker daemon is started with --iptables=false or --ip-forward=false, or when the Linux host cannot act as a router because the kernel parameter net.ipv4.ip_forward is set to 0. This is not my case here.

- When you use localhost in the connection string of your application, which implies the loopback interface (127.0.0.0/8), and the kernel doesn’t allow routing traffic from it. Therefore, it’s not possible to apply netfilter NAT rules; instead, netfilter sends packets through the filter table’s INPUT chain to the local docker-proxy process listening on the published port.

$ sudo iptables -L -n -t nat | grep 127.0.0.0

DOCKER all -- 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL
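The rule above can be summarized as a small decision helper. This is a simplified sketch that assumes the default daemon settings (iptables and IP forwarding enabled) and IPv4 only; the function name published_port_path is hypothetical, and the pattern match on 127.* is a shortcut rather than a real address parser.

```shell
# Rough classification of the path taken to reach a published container port
# from the Docker host itself.
published_port_path() {
    case "$1" in
        # Loopback destinations are excluded from the DOCKER NAT chain
        # (!127.0.0.0/8), so the userland proxy handles them.
        localhost|127.*) echo "docker-proxy" ;;
        # Any other local address matches the DOCKER chain and is NATed.
        *)               echo "netfilter NAT" ;;
    esac
}

published_port_path localhost     # docker-proxy
published_port_path 172.17.0.1    # netfilter NAT
```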

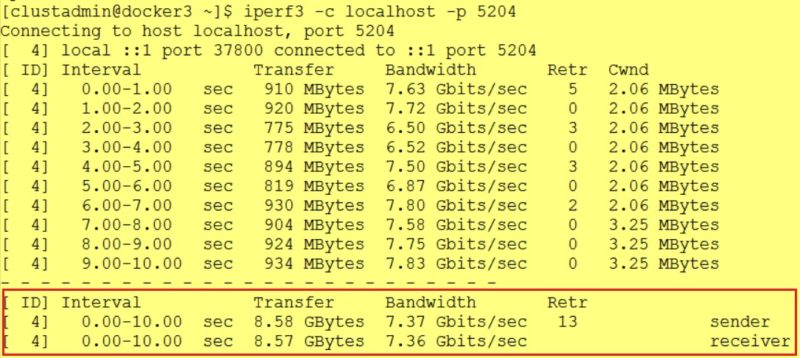

In the picture below you will notice I’m using the localhost key word in my connection string so the docker-proxy comes into play.

A huge performance impact for sure which is about 28%. This performance drop may be explained by the fact the docker-proxy process is consuming 100% of my CPUs:

The docker-proxy operates in userland and I may simply disable it with the docker daemon parameter – “userland-proxy”: false – but I would say this is a case we would not encounter in practice because applications will never use localhost in their connection strings. By the way, changing the connection string from localhost to the IP address of the host container gives a very different outcome similar to the Docker bridge network scenario.

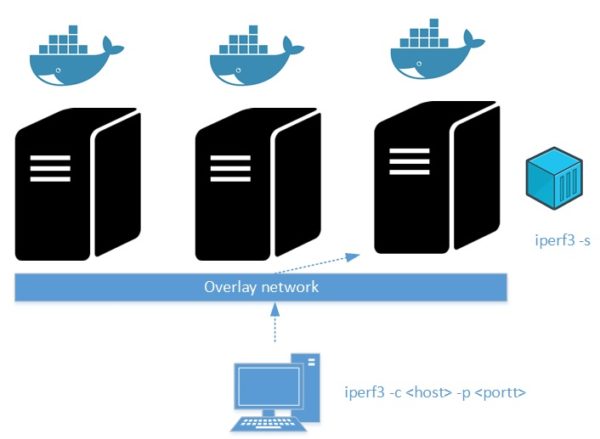

Using an overlay network

A single Docker host doesn’t fit well with HA or scalability requirements, and in a mission-critical environment I strongly doubt any customer would go this way. I recommended that my customer consider an orchestrator like Docker Swarm or K8s to anticipate the container workloads coming from future projects. The customer picked Docker Swarm because it is easier to implement than K8s.

After implementing a proof of concept for testing purposes (3 nodes: one manager and two workers), we took the opportunity to measure the potential overhead implied by the overlay network, which is the common driver used by containers through stacks and services in such a situation. According to the Docker documentation, overlay networks manage communications among the Docker daemons participating in the swarm and are used by the services deployed on it. Here are the Docker nodes in the swarm infrastructure:

$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

vvdofx0fjzcj8elueoxoh2irj * docker1.dbi-services.test Ready Active Leader 18.09.5

njq5x23dw2ubwylkc7n6x63ly docker2.dbi-services.test Ready Active 18.09.5

ruxyptq1b8mdpqgf0zha8zqjl docker3.dbi-services.test Ready Active 18.09.5

An ingress overlay network is created by default when setting up a swarm cluster. User-defined overlay networks may be created afterwards and are extended to the other nodes only when containers need them.

$ docker network ls | grep overlay

NETWORK ID NAME DRIVER SCOPE

ehw16ycy980s ingress overlay swarm

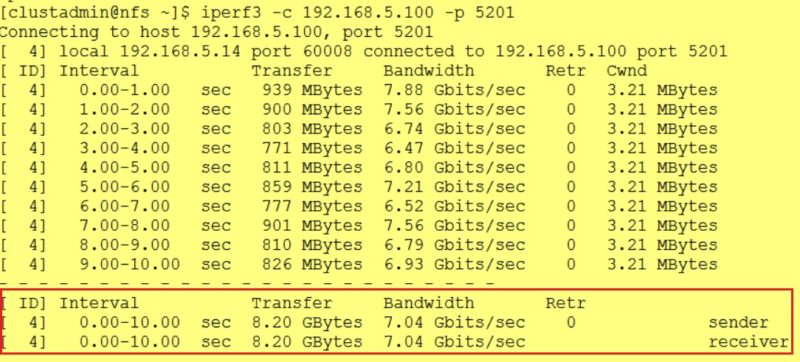

Here the result of the iperf3 benchmark:

Well, this is nearly the same result as the previous test, with a performance drop of roughly 30%. Compared to the initial reference, this is again an expected outcome, but I didn’t imagine how significant the impact could be in such a case. Behind the scenes, an overlay network introduces additional overhead by putting together a VXLAN tunnel (a virtual Layer 2 network on top of an existing Layer 3 infrastructure) and VTEP endpoints for encapsulation and de-encapsulation. Note that swarm management traffic is encrypted by default, whereas application data on the overlay is encrypted only when the network is created with the --opt encrypted option.
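Part of the overlay overhead comes from the extra headers that VXLAN wraps around every packet. The figures below are a back-of-the-envelope sketch that assumes an IPv4 underlay with a 1500-byte MTU and no VLAN tagging; the variable and function names are mine. The header bytes alone account for only about 3% of each packet (50 out of 1500), so they cannot explain the whole drop measured here; the rest presumably comes from the per-packet encapsulation work.

```shell
# Per-packet VXLAN encapsulation overhead, counted against the underlay MTU.
# Assumption: IPv4 underlay, no VLAN tag.
INNER_ETH=14        # inner Ethernet header carried inside the tunnel
VXLAN_HDR=8         # VXLAN header
OUTER_UDP=8         # outer UDP header
OUTER_IP=20         # outer IPv4 header
UNDERLAY_MTU=1500   # assumed MTU of the physical network

vxlan_overhead() {
    echo $(( INNER_ETH + VXLAN_HDR + OUTER_UDP + OUTER_IP ))
}

overlay_mtu() {
    echo $(( UNDERLAY_MTU - $(vxlan_overhead) ))
}

echo "overhead: $(vxlan_overhead) bytes, overlay MTU: $(overlay_mtu)"
# prints: overhead: 50 bytes, overlay MTU: 1450
```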

Here is a summary of the different scenarios and their performance impact:

| Scenario | Throughput (Gbits/sec) | Performance impact (ratio to host network) |
| --- | --- | --- |
| Host network | 10.3 | |
| Docker host network | 10.3 | |
| Docker bridge network | 8.93 | 0.87 |
| Docker proxy | 7.37 | 0.71 |
| Docker overlay network | 7.04 | 0.68 |
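The relative impact of each scenario can be recomputed from the throughput figures in the table. The awk helper below is only a sketch; the function name throughput_ratio is hypothetical, and the host network value of 10.3 is taken as the reference.

```shell
# Print the throughput ratio and the percentage drop against a reference.
# Usage: throughput_ratio <measured> <reference>
throughput_ratio() {
    awk -v m="$1" -v r="$2" \
        'BEGIN { printf "%.3f %.1f%%\n", m / r, (1 - m / r) * 100 }'
}

throughput_ratio 8.93 10.3   # Docker bridge network  -> 0.867 13.3%
throughput_ratio 7.37 10.3   # Docker proxy           -> 0.716 28.4%
throughput_ratio 7.04 10.3   # Docker overlay network -> 0.683 31.7%
```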

In the particular case of my customer, where SQL Server instances sit on the Docker infrastructure and applications reside outside of it, it’s clear that using the Docker host network directly may be a good option from a performance standpoint, assuming the infrastructure remains simple with few SQL Server containers. But in this case, we have to change the SQL Server default listen port with the MSSQL_TCP_PORT parameter, because Docker host networking doesn’t provide port mapping capabilities. In our tests, we didn’t find any evidence of improved application response time from one Docker network driver to another, probably because those applications are not network bound. But I can imagine scenarios where they would be.

Finally, the kind of scenario encountered here is probably uncommon, and I more often see containerized apps with database components outside the Docker infrastructure, but it doesn’t change the game at all and the same considerations apply … Today I’m very curious to test real microservices scenarios where database and application components all sit on a Docker infrastructure.

See you!

By David Barbarin
