Introduction

The Intelligent Query Processing (IQP) feature family is extended with SQL Server 2022.

One of the most anticipated features is the Parameter Sensitive Plan optimization.

I started to test this new feature. In this post, you will find some information to understand how it works and make your first tests too.

The issue with Parameter Sensitive Plan

Parameter Sensitive Plan, also known as “Parameter Sniffing” is a scenario caused by non-uniform data distribution where a single cached execution plan for a parameterized query performs poorly for some parameter values.

A few options are available to deal with a Parameter Sensitive Plan query:

- Use the RECOMPILE query hint to force a new plan compilation for all executions

- Use the OPTIMIZE FOR hint to generate an execution plan for a specific parameter value

- Force the last known good plan with Query Store

All the methods mentioned below require manual intervention, either at the level of the SQL code to add a query hint or by a DBA to force a particular execution plan.

New feature: PSP Optimization

This new PSP optimization feature will be introduced with SQL Server 2022 and enabled by default with Compatibility Level 160.

Even if the Query Store will be enabled by default with 2022, PSP optimization does not require to have Query Store enabled, unlike some other IQP features.

This feature introduces 2 new concepts. To quote the documentation:

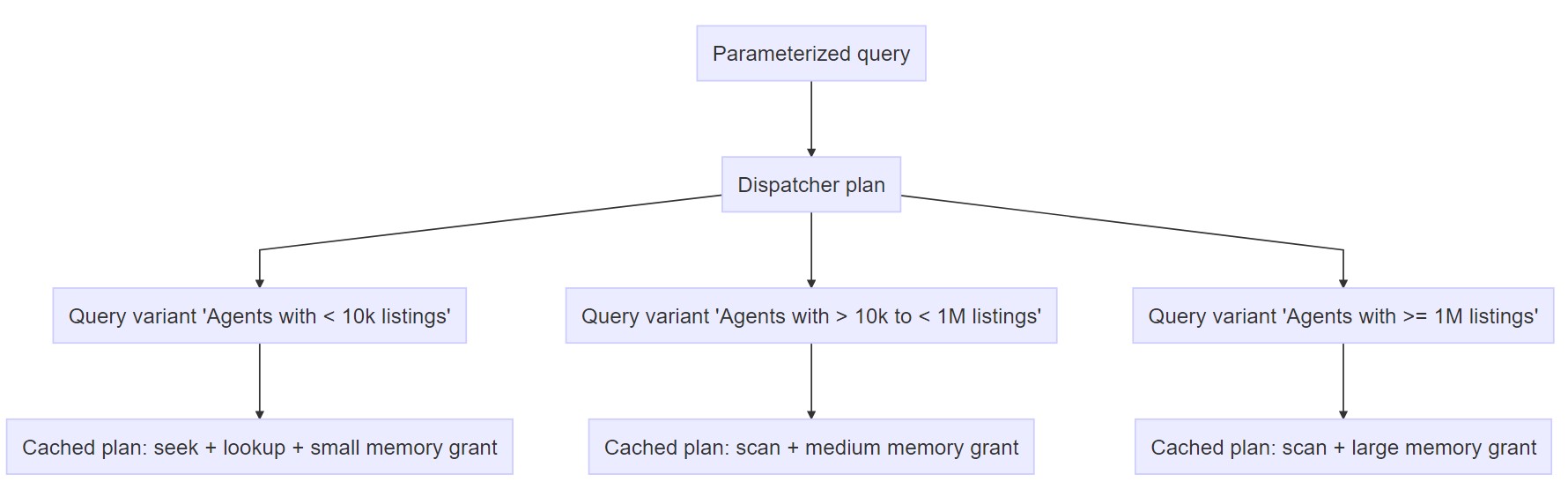

For eligible plans, the initial compilation produces a dispatcher plan that contains the PSP optimization logic called a dispatcher expression. A dispatcher plan maps to query variants based on the cardinality range boundary values predicates.

The idea is as follows; an eligible query will get a dispatcher plan containing the dispatcher expression. Each significant set of parameters has its query variant, an execution plan optimized for these parameters

PSP Optimization Demo

Prerequisites

SQL Server 2022 and Compatibility Level 160

For this demo, you obviously need SQL Server 2022. I’m now using the first public preview, CTP 2.0.

As just mentioned above the prerequisite for this feature is the Compatibility Level 160.

Enable the feature

The PSP Optimization feature is enabled by default. You can enable/disable it with the following command:

ALTER DATABASE SCOPED CONFIGURATION SET PARAMETER_SENSITIVE_PLAN_OPTIMIZATION = ONReason for PSP optimization being skipped

I had difficulty producing a scenario that triggers PSP optimization.

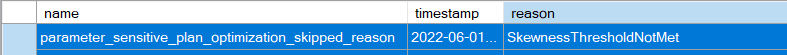

Using the documented XE events I found some reasons for PSP skipping my queries: SkewnessThresholdNotMet, UnsupportedComparisonType or ConjunctThresholdNotMet

I do not know what these thresholds are. I just used a larger table and a simpler query for the demo.

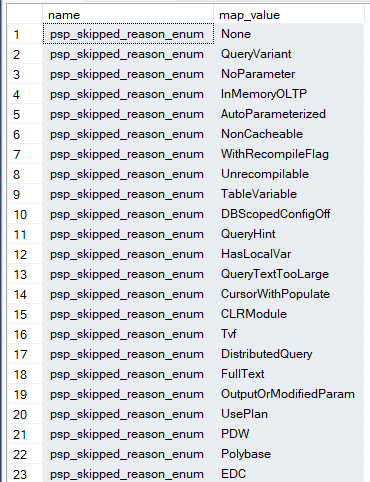

There are currently 32 reasons listed in the XE “psp_skipped_reason_enum” that you can get with this query:

SELECT name, map_value

FROM sys.dm_xe_map_values

WHERE name ='psp_skipped_reason_enum'

ORDER BY map_key

Demo

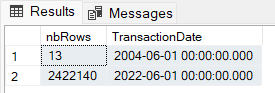

I used the bigTransactionHistory table that I slightly modified to get the following data distribution producing a parameter sniffing scenario.

I run the following query twice with different parameters and PSP optimization enabled.

EXEC sp_executesql

N'

select TransactionId, Quantity, ActualCost, TransactionDate

from dbo.bigTransactionHistory

where TransactionDate = @date'

, N'@date datetime'

, '2004-06-01 00:00:00';

GO

EXEC sp_executesql

N'

select TransactionId, Quantity, ActualCost, TransactionDate

from dbo.bigTransactionHistory

where TransactionDate = @date'

, N'@date datetime'

, '2022-06-01 00:00:00';

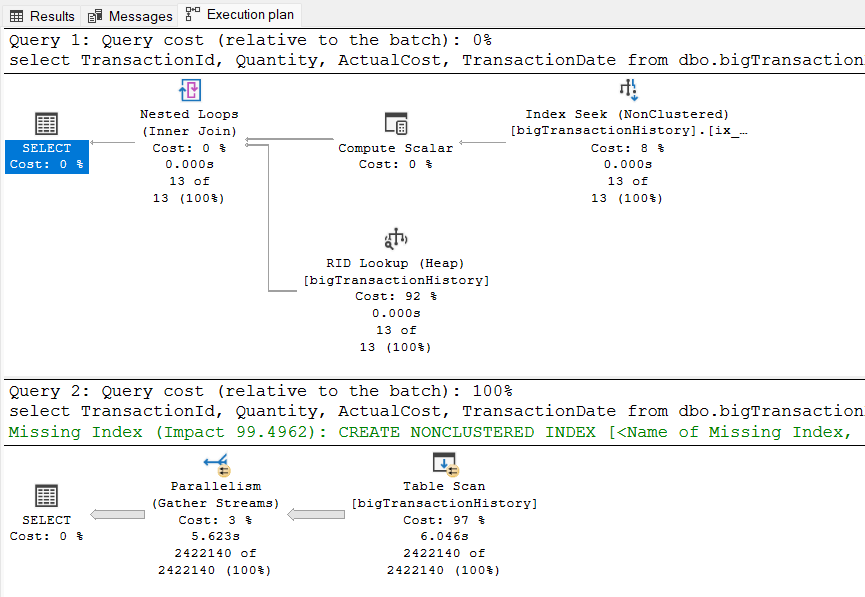

GOI get 2 different execution plans, without forcing a Recompile or forcing a plan myself.

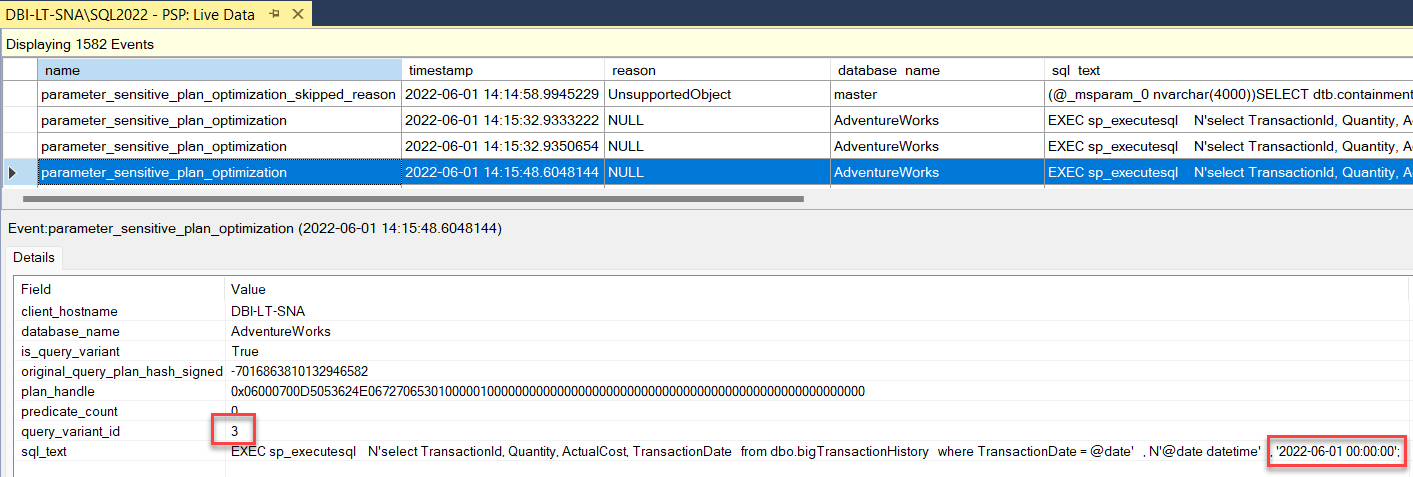

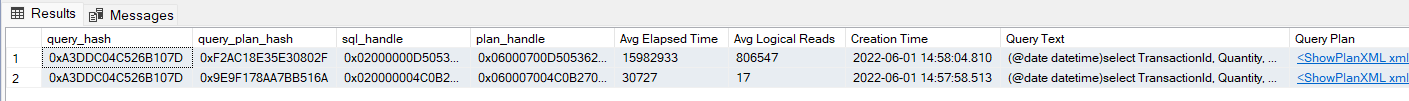

The paramter_sensitive_plan_optimization Extended Event was fired during both executions of the query. We can notice the variant_id information.

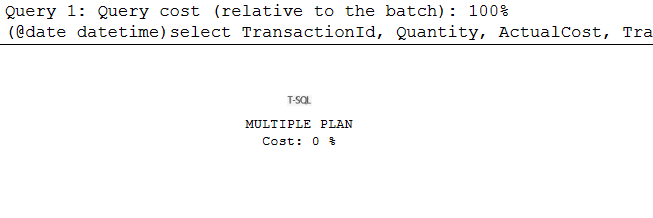

The execution plan shows a new hint option “PLAN PER VALUE” added to the query text:

select TransactionId, Quantity, ActualCost, TransactionDate

from dbo.bigTransactionHistory

where TransactionDate = @date option (PLAN PER VALUE(QueryVariantID = 1, predicate_range([AdventureWorks].[dbo].[bigTransactionHistory].[TransactionDate] = @date, 100.0, 10000.0)))

select TransactionId, Quantity, ActualCost, TransactionDate

from dbo.bigTransactionHistory

where TransactionDate = @date option (PLAN PER VALUE(QueryVariantID = 3, predicate_range([AdventureWorks].[dbo].[bigTransactionHistory].[TransactionDate] = @date, 100.0, 10000.0)))

Based on the parameter value provided when running the query SQL Server will choose the plan to be used at runtime.

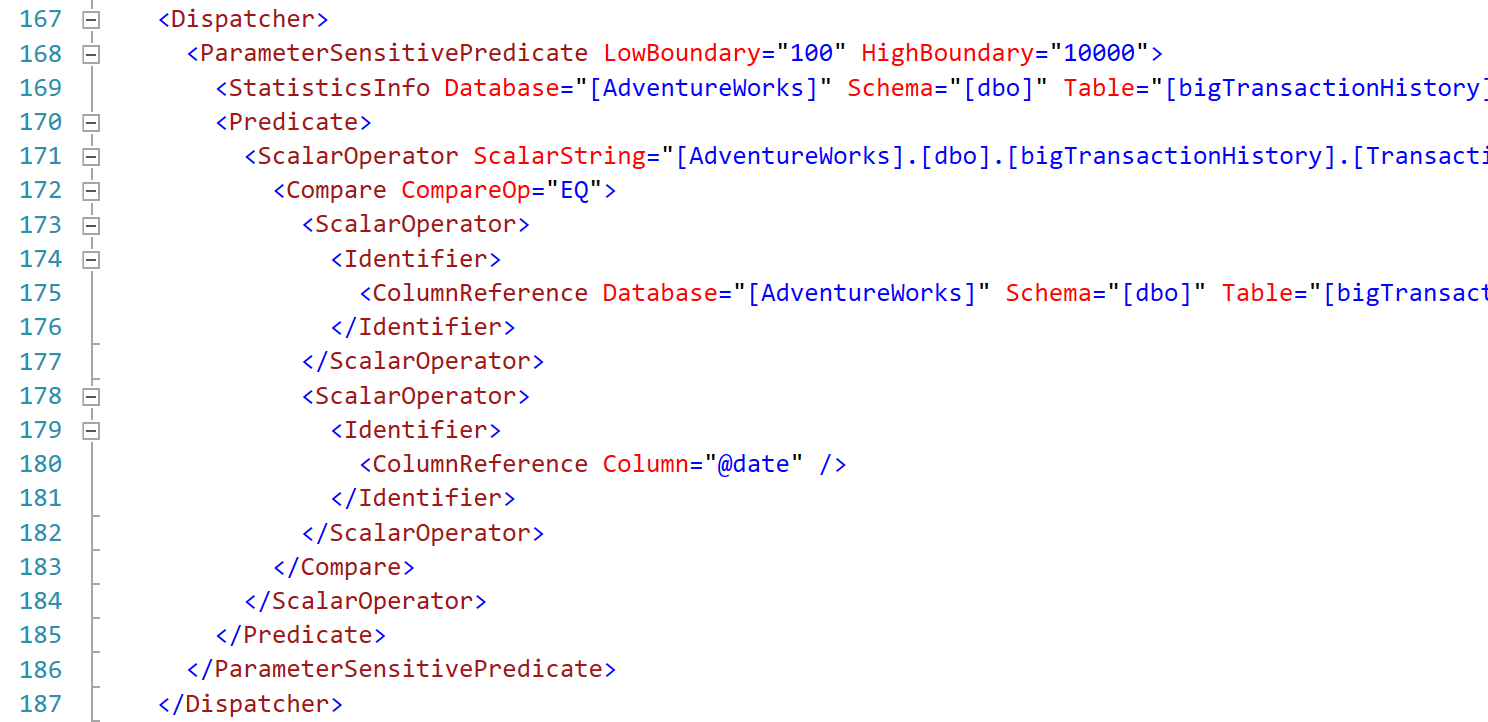

There’s a new “Dispatcher” section in the XML execution plan containing the dispatcher “expression”.

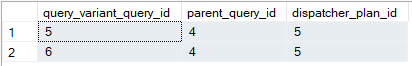

Even though the Query Store is not required for PSP to be working it is useful to have it enabled because you will get information about your query variants in a new DMV: sys.query_store_query_variant

The Query Store report doesn’t show an aggregated view of all variants at once. Looking for query_id 4 doesn’t show anything. That’s something that could be useful in the next versions of SSMS.

The query_hash in DMV sys.dm_exec_query_stats is common to all variants, so it’s possible to determine aggregate resource usage for queries that differ only by input parameter values.

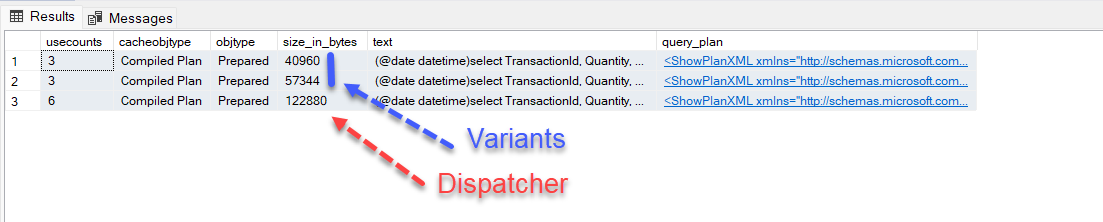

The plan cache shows the plan for each variant and the dispatcher.

SELECT

p.usecounts, p.cacheobjtype

, p.objtype, p.size_in_bytes

, t.[text]

, qp.query_plan

FROM sys.dm_exec_cached_plans p

CROSS APPLY sys.dm_exec_sql_text(p.plan_handle) t

CROSS APPLY sys.dm_exec_query_plan(p.plan_handle) AS qp

WHERE t.[text] like '%TransactionDate%'

AND p.objtype = 'Prepared'

ORDER BY p.objtype DESC

Although the Dispatcher plan is the largest, it only contains the XML dispatcher section mentioned above.

Conclusion

The Parameter Sensitive Plan optimization is working as described in SQL Server 2022 CTP2.0. There’s a lot to learn about this feature.

We do not yet know precisely what are the conditions for a request to be eligible for this feature. We don’t know yet what the side effects are if there are any.

This is a very promising feature that could help stabilize and make database performance more predictable in some cases.

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/09/SNA_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/10/STS_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/STH_web-min-scaled.jpg)