Yesterday we got a Pure Storage All Flash Array for testing. As the name implies, this is all about flash storage. What makes Pure Storage different from other vendors is that you don’t just buy a storage box and then pay the usual maintenance costs. Instead you pay for a storage subscription that should keep your storage up to date all the time. The promise is that all components of the array are replaced by the then-current versions over time, without forcing you to buy the array again. Check the link above for more details on the available subscriptions. This first post describes the setup we used to connect a VMware-based PostgreSQL machine to the Pure Storage box: the PostgreSQL server runs as a virtual machine in VMware ESX and connects to the storage system over iSCSI.

As usual we used CentOS 7 for the PostgreSQL server:

```
[root@pgpurestorage ~]$ cat /etc/centos-release
CentOS Linux release 7.3.1611 (Core)
[root@pgpurestorage ~]$ uname -a
Linux pgpurestorage.it.dbi-services.com 3.10.0-514.el7.x86_64 #1 SMP Tue Nov 22 16:42:41 UTC 2016 x86_64 x86_64 x86_64 GNU/Linux
```

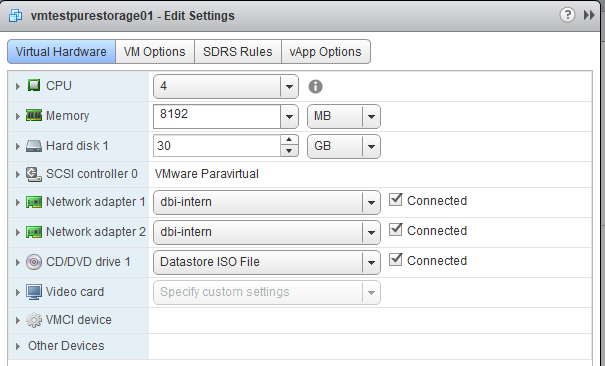

We have 4 vCPUs:

```
[root@pgpurestorage ~]$ lscpu
Architecture:          x86_64
CPU op-mode(s):        32-bit, 64-bit
Byte Order:            Little Endian
CPU(s):                4
On-line CPU(s) list:   0-3
Thread(s) per core:    1
Core(s) per socket:    1
Socket(s):             4
NUMA node(s):          1
Vendor ID:             GenuineIntel
CPU family:            6
Model:                 79
Model name:            Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz
Stepping:              1
CPU MHz:               2399.583
BogoMIPS:              4799.99
Hypervisor vendor:     VMware
Virtualization type:   full
L1d cache:             32K
L1i cache:             32K
L2 cache:              256K
L3 cache:              35840K
NUMA node0 CPU(s):     0-3
```

… and 8GB of memory:

```
[root@pgpurestorage ~]$ cat /proc/meminfo | head -5
MemTotal:        7994324 kB
MemFree:         7508232 kB
MemAvailable:    7528048 kB
Buffers:            1812 kB
Cached:           233648 kB
```

When you install CentOS in a virtualized environment you get the “virtual-guest” tuned profile by default, so we created our own profile and switched to it:

```
root@:/home/postgres/ [] tuned-adm active
Current active profile: virtual-guest
root@:/home/postgres/ [] tuned-adm profile dbi-postgres
root@:/home/postgres/ [] tuned-adm active
Current active profile: dbi-postgres
root@:/home/postgres/ [] cat /usr/lib/tuned/dbi-postgres/tuned.conf | egrep -v "^#|^$"
[main]
summary=dbi services tuned profile for PostgreSQL servers
[cpu]
governor=performance
energy_perf_bias=performance
min_perf_pct=100
[disk]
readahead=>4096
[sysctl]
kernel.sched_min_granularity_ns = 10000000
kernel.sched_wakeup_granularity_ns = 15000000
vm.overcommit_memory=2
vm.swappiness=0
vm.dirty_ratio=2
vm.dirty_background_ratio=1
vm.nr_hugepages=1024
```
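`tuned-adm active` only tells you which profile is selected, not whether its kernel settings really took effect. One way to check is to compare the `[sysctl]` section of the profile with what `sysctl -n` reports. The following is a minimal sketch; `tuned_sysctl` and `check_tuned_sysctl` are hypothetical helper names, and the profile path is the one used above:

```bash
#!/usr/bin/env bash
# Hypothetical helpers, not part of tuned.

# Print the [sysctl] entries of a tuned.conf as "key=value", one per line,
# with blanks around "=" removed. Comments and other sections are skipped.
tuned_sysctl() {
  awk '
    /^[ \t]*#/ || /^[ \t]*$/ { next }                # comments, empty lines
    /^\[/ { in_sysctl = ($0 ~ /^\[sysctl\]/); next } # track current section
    in_sysctl && index($0, "=") {
      i = index($0, "=")
      key = substr($0, 1, i - 1); val = substr($0, i + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", key)
      gsub(/^[ \t]+|[ \t]+$/, "", val)
      print key "=" val
    }
  ' "$1"
}

# Compare every profile value with the value the running kernel reports.
check_tuned_sysctl() {
  tuned_sysctl "$1" | while IFS='=' read -r key want; do
    have=$(sysctl -n "$key" 2>/dev/null)
    if [ "$have" = "$want" ]; then
      echo "OK       $key=$have"
    else
      echo "MISMATCH $key: profile=$want kernel=${have:-?}"
    fi
  done
}
```

Usage would be `check_tuned_sysctl /usr/lib/tuned/dbi-postgres/tuned.conf`. Only the `[sysctl]` section is compared; settings such as the `[disk]` readahead would need a different check.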

To gather system statistics (sysstat/sar) we created a cron job:

```
root@:/home/postgres/ [] crontab -l
* * * * * /usr/lib64/sa/sa1 -S XALL 60 1
```

PostgreSQL was built from source, using what had been committed to the source tree as of today, with the following options:

```
[postgres@pgpurestorage postgresql]$ PGHOME=/u01/app/postgres/product/10/db_0
[postgres@pgpurestorage postgresql]$ SEGSIZE=2
[postgres@pgpurestorage postgresql]$ BLOCKSIZE=8
[postgres@pgpurestorage postgresql]$ WALSEGSIZE=64
[postgres@pgpurestorage postgresql]$ ./configure --prefix=${PGHOME} \
> --exec-prefix=${PGHOME} \
> --bindir=${PGHOME}/bin \
> --libdir=${PGHOME}/lib \
> --sysconfdir=${PGHOME}/etc \
> --includedir=${PGHOME}/include \
> --datarootdir=${PGHOME}/share \
> --datadir=${PGHOME}/share \
> --with-pgport=5432 \
> --with-perl \
> --with-python \
> --with-tcl \
> --with-openssl \
> --with-pam \
> --with-ldap \
> --with-libxml \
> --with-libxslt \
> --with-segsize=${SEGSIZE} \
> --with-blocksize=${BLOCKSIZE} \
> --with-wal-segsize=${WALSEGSIZE} \
> --with-extra-version=" dbi services build"
```
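The three size variables go straight into `configure`. As far as we know, the PostgreSQL 10 sources only accept 1, 2, 4, 8, 16 or 32 (kB) for the block size and 1, 2, 4, 8, 16, 32 or 64 (MB) for the WAL segment size, while the segment size is a number of GB. A small, hypothetical pre-check (`check_pg10_sizes` is our own name) can catch a typo before a long build; treat the allowed lists as an assumption to verify against the `configure` of your source tree:

```bash
#!/usr/bin/env bash
# Hypothetical pre-check for the values passed to configure above.

# Return 0 if the first argument equals one of the remaining arguments.
is_one_of() {
  v=$1; shift
  for a in "$@"; do
    [ "$v" = "$a" ] && return 0
  done
  return 1
}

# check_pg10_sizes SEGSIZE_GB BLOCKSIZE_KB WALSEGSIZE_MB
check_pg10_sizes() {
  rc=0
  is_one_of "$2" 1 2 4 8 16 32 ||
    { echo "invalid --with-blocksize: $2" >&2; rc=1; }
  is_one_of "$3" 1 2 4 8 16 32 64 ||
    { echo "invalid --with-wal-segsize: $3" >&2; rc=1; }
  case $1 in
    ''|*[!0-9]*|0) echo "invalid --with-segsize: $1" >&2; rc=1 ;;
  esac
  return $rc
}
```

For example, `check_pg10_sizes "$SEGSIZE" "$BLOCKSIZE" "$WALSEGSIZE" && ./configure ...` only starts the build when all three values pass.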

To connect to the Pure Storage box you need the iSCSI initiator name (IQN) of the host:

```
root@:/home/postgres/ [] cat /etc/iscsi/initiatorname.iscsi
InitiatorName=iqn.1994-05.com.redhat:185a3499ac9
```
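When the host is registered from a script rather than by hand, the IQN can be pulled out of that file directly. A minimal sketch; `read_iqn` is a hypothetical helper name and the default path is the one shown above:

```bash
#!/usr/bin/env bash
# Print the initiator name (IQN) from an open-iscsi initiatorname file.
# Commented lines ("#InitiatorName=...") are ignored; only the first
# active InitiatorName= line is used.
read_iqn() {
  file=${1:-/etc/iscsi/initiatorname.iscsi}
  sed -n 's/^[[:space:]]*InitiatorName=[[:space:]]*//p' "$file" | head -n 1
}
```

On this host `read_iqn` prints the same `iqn.1994-05.com.redhat:185a3499ac9` that is used for `purehost create`.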

With the IQN (InitiatorName) known, we can log on to the Pure Storage console to add our host, create a volume and connect the volume to the host:

```
Thu May 04 11:44:10 2017
Welcome pureuser. This is Purity Version 4.8.8 on FlashArray dbipure01
http://www.purestorage.com/
pureuser@dbipure01> purehost create --iqn iqn.1994-05.com.redhat:185a3499ac9 pgpurestorage
Name           WWN  IQN
pgpurestorage  -    iqn.1994-05.com.redhat:185a3499ac9
pureuser@dbipure01> purevol create --size 500G volpgtest
Name       Size  Source  Created                   Serial
volpgtest  500G  -       2017-05-04 11:46:58 CEST  BA56B4A72DE94A4400011012
pureuser@dbipure01> purehost connect --vol volpgtest pgpurestorage
Name           Vol        LUN
pgpurestorage  volpgtest  1
```
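If you set up more than one test host, it can help to generate these three Purity commands instead of typing them. The sketch below only prints the commands exactly as used above (nothing is executed against the array); `purity_commands` is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# Print the Purity CLI commands used above for one host/volume pair.
# Arguments: HOST IQN VOLUME SIZE. The output is meant to be pasted
# into the Purity console.
purity_commands() {
  host=$1 iqn=$2 vol=$3 size=$4
  printf 'purehost create --iqn %s %s\n' "$iqn" "$host"
  printf 'purevol create --size %s %s\n' "$size" "$vol"
  printf 'purehost connect --vol %s %s\n' "$vol" "$host"
}
```

For this post that would be `purity_commands pgpurestorage iqn.1994-05.com.redhat:185a3499ac9 volpgtest 500G`.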

The Pure Storage system has two controllers (10.10.1.93 and 10.10.1.94), so we should be able to ping both:

```
root@:/home/postgres/ [] ping 10.10.1.93
PING 10.10.1.93 (10.10.1.93) 56(84) bytes of data.
64 bytes from 10.10.1.93: icmp_seq=1 ttl=63 time=2.53 ms
64 bytes from 10.10.1.93: icmp_seq=2 ttl=63 time=0.816 ms
64 bytes from 10.10.1.93: icmp_seq=3 ttl=63 time=0.831 ms
...
root@:/u02/pgdata/pgpure/ [] ping 10.10.1.94
PING 10.10.1.94 (10.10.1.94) 56(84) bytes of data.
64 bytes from 10.10.1.94: icmp_seq=1 ttl=63 time=0.980 ms
64 bytes from 10.10.1.94: icmp_seq=2 ttl=63 time=0.848 ms
...
```

Connectivity is fine, so discovery should work as well:

```
root@:/home/postgres/ [] iscsiadm -m discovery -t st -p 10.10.1.93
10.10.1.93:3260,1 iqn.2010-06.com.purestorage:flasharray.516cdd52f827bd21
10.10.1.94:3260,1 iqn.2010-06.com.purestorage:flasharray.516cdd52f827bd21
root@:/home/postgres/ [] iscsiadm -m node
10.10.1.93:3260,1 iqn.2010-06.com.purestorage:flasharray.516cdd52f827bd21
10.10.1.94:3260,1 iqn.2010-06.com.purestorage:flasharray.516cdd52f827bd21
```
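Discovery against one controller returned both portals, one per controller. To check this in a script, the `portal,tpgt target` lines can be filtered for the array's target name. A minimal sketch; `discovered_portals` is a hypothetical helper that reads the discovery output on stdin:

```bash
#!/usr/bin/env bash
# Read "ip:port,tpgt target" lines (iscsiadm discovery/node output) on
# stdin and print the "ip:port" of every portal offering TARGET.
discovered_portals() {
  awk -v target="$1" '$2 == target { split($1, p, ","); print p[1] }'
}
```

Usage: `iscsiadm -m discovery -t st -p 10.10.1.93 | discovered_portals iqn.2010-06.com.purestorage:flasharray.516cdd52f827bd21` should list 10.10.1.93:3260 and 10.10.1.94:3260.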

That works too, so log in:

```
root@:/home/postgres/ [] iscsiadm -m node --login
Logging in to [iface: default, target: iqn.2010-06.com.purestorage:flasharray.516cdd52f827bd21, portal: 10.10.1.93,3260] (multiple)
Logging in to [iface: default, target: iqn.2010-06.com.purestorage:flasharray.516cdd52f827bd21, portal: 10.10.1.94,3260] (multiple)
Login to [iface: default, target: iqn.2010-06.com.purestorage:flasharray.516cdd52f827bd21, portal: 10.10.1.93,3260] successful.
Login to [iface: default, target: iqn.2010-06.com.purestorage:flasharray.516cdd52f827bd21, portal: 10.10.1.94,3260] successful.
root@:/home/postgres/ [] iscsiadm -m session -o show
tcp: [13] 10.10.1.93:3260,1 iqn.2010-06.com.purestorage:flasharray.516cdd52f827bd21 (non-flash)
tcp: [14] 10.10.1.94:3260,1 iqn.2010-06.com.purestorage:flasharray.516cdd52f827bd21 (non-flash)
```
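With two controllers we expect one session per portal, i.e. two sessions to the array's target. A sketch of a check that fails when fewer sessions are logged in; `check_sessions` is a hypothetical helper that parses the `tcp: [id] portal target` lines of `iscsiadm -m session` on stdin:

```bash
#!/usr/bin/env bash
# Count sessions to TARGET in "iscsiadm -m session" output (stdin) and
# return non-zero if there are fewer than EXPECTED.
check_sessions() {
  target=$1 expected=$2
  n=$(awk -v t="$target" '$1 == "tcp:" && $4 == t { c++ } END { print c + 0 }')
  echo "$n session(s) to $target"
  [ "$n" -ge "$expected" ]
}
```

Usage: `iscsiadm -m session | check_sessions iqn.2010-06.com.purestorage:flasharray.516cdd52f827bd21 2`.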

From now on the new device (sdb) is available:

```
root@:/home/postgres/ [] ls -la /dev/sd*
brw-rw----. 1 root disk 8,  0 May  4 13:23 /dev/sda
brw-rw----. 1 root disk 8,  1 May  4 13:23 /dev/sda1
brw-rw----. 1 root disk 8,  2 May  4 13:23 /dev/sda2
brw-rw----. 1 root disk 8, 16 May  4 13:23 /dev/sdb
brw-rw----. 1 root disk 8, 32 May  4 13:23 /dev/sdc
```
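With two iSCSI sessions the same volume is usually visible once per path, so sdc may well be the same Pure volume as sdb. The listing alone does not tell. One quick way to see which disks come from the array is the SCSI vendor string in sysfs. This sketch assumes the array reports the vendor as `PURE` (the string Pure's multipath settings match on, as far as we know). `pure_disks` is a hypothetical helper, and the sysfs root is a parameter only so it can be tried on a fake directory tree:

```bash
#!/usr/bin/env bash
# List /dev/sd* disks whose SCSI vendor is "PURE" (assumed vendor string).
# Optional argument: sysfs block directory (default /sys/block).
pure_disks() {
  root=${1:-/sys/block}
  for dev in "$root"/sd*; do
    [ -r "$dev/device/vendor" ] || continue
    # read strips the blank padding of the 8-character vendor field
    read -r vendor < "$dev/device/vendor"
    if [ "$vendor" = "PURE" ]; then
      echo "/dev/${dev##*/}"
    fi
  done
  return 0
}
```

If both sdb and sdc show up, the volume is reachable over both controllers. In that case a multipath device would normally be used instead of a single path.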

LVM setup:

```
root@:/home/postgres/ [] pvcreate /dev/sdb
Physical volume "/dev/sdb" successfully created.
root@:/home/postgres/ [] vgcreate vgpure /dev/sdb
Volume group "vgpure" successfully created
root@:/home/postgres/ [] lvcreate -L 450G -n lvpure vgpure
Logical volume "lvpure" created.
root@:/home/postgres/ [] mkdir -p /u02/pgdata
root@:/home/postgres/ [] mkfs.xfs /dev/mapper/vgpure-lvpure
meta-data=/dev/mapper/vgpure-lvpure isize=512    agcount=4, agsize=29491200 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=1        finobt=0, sparse=0
data     =                       bsize=4096   blocks=117964800, imaxpct=25
         =                       sunit=0      swidth=0 blks
naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
log      =internal log           bsize=4096   blocks=57600, version=2
         =                       sectsz=512   sunit=0 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0
root@:/home/postgres/ [] echo "/dev/mapper/vgpure-lvpure /u02/pgdata xfs defaults,noatime 0 0" >> /etc/fstab
root@:/home/postgres/ [] mount -a
root@:/home/postgres/ [] df -h
Filesystem                         Size  Used Avail Use% Mounted on
/dev/mapper/cl_pgpurestorage-root   26G  2.0G   25G   8% /
devtmpfs                           3.9G     0  3.9G   0% /dev
tmpfs                              3.9G     0  3.9G   0% /dev/shm
tmpfs                              3.9G  8.5M  3.9G   1% /run
tmpfs                              3.9G     0  3.9G   0% /sys/fs/cgroup
/dev/sda1                         1014M  183M  832M  19% /boot
tmpfs                              781M     0  781M   0% /run/user/1000
/dev/mapper/vgpure-lvpure          450G   33M  450G   1% /u02/pgdata
root@:/home/postgres/ [] chown postgres:postgres /u02/pgdata
```
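Appending to `/etc/fstab` with `>>` adds a duplicate entry every time the step is repeated. A minimal idempotent variant, sketched with a hypothetical `ensure_line` helper that appends a line only if an identical line is not already there:

```bash
#!/usr/bin/env bash
# Append LINE to FILE unless FILE already contains exactly that line.
# Usage: ensure_line FILE LINE
ensure_line() {
  file=$1 line=$2
  # -x: whole-line match, -F: fixed string (no regex), -q: quiet
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

For this setup: `ensure_line /etc/fstab "/dev/mapper/vgpure-lvpure /u02/pgdata xfs defaults,noatime 0 0" && mount -a`.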

Then we initialized the PostgreSQL cluster:

```
postgres@pgpurestorage:/home/postgres/ [pg10] initdb -D /u02/pgdata/
The files belonging to this database system will be owned by user "postgres".
This user must also own the server process.

The database cluster will be initialized with locales
  COLLATE:  en_US.UTF-8
  CTYPE:    en_US.UTF-8
  MESSAGES: en_US.UTF-8
  MONETARY: de_CH.UTF-8
  NUMERIC:  de_CH.UTF-8
  TIME:     en_US.UTF-8
The default database encoding has accordingly been set to "UTF8".
The default text search configuration will be set to "english".

Data page checksums are disabled.

fixing permissions on existing directory /u02/pgdata ... ok
creating subdirectories ... ok
selecting default max_connections ... 100
selecting default shared_buffers ... 128MB
selecting dynamic shared memory implementation ... posix
creating configuration files ... ok
running bootstrap script ... ok
performing post-bootstrap initialization ... ok
syncing data to disk ... ok

WARNING: enabling "trust" authentication for local connections
You can change this by editing pg_hba.conf or using the option -A, or
--auth-local and --auth-host, the next time you run initdb.

Success. You can now start the database server using:

    pg_ctl -D /u02/pgdata/ -l logfile start
```

These are the settings we changed from the default configuration:

```
postgres@pgpurestorage:/u02/pgdata/pgpure/ [pgpure] cat postgresql.auto.conf
# Do not edit this file manually!
# It will be overwritten by the ALTER SYSTEM command.
listen_addresses = '*'
logging_collector = 'on'
log_truncate_on_rotation = 'on'
log_filename = 'postgresql-%a.log'
log_rotation_age = '8d'
log_line_prefix = '%m - %l - %p - %h - %u@%d '
log_directory = 'pg_log'
log_min_messages = 'WARNING'
log_autovacuum_min_duration = '360s'
log_min_error_statement = 'error'
log_min_duration_statement = '5min'
log_checkpoints = 'on'
log_statement = 'ddl'
log_lock_waits = 'on'
log_temp_files = '1'
log_timezone = 'Europe/Zurich'
client_min_messages = 'WARNING'
wal_level = 'replica'
hot_standby_feedback = 'on'
max_wal_senders = '10'
cluster_name = 'pgpure'
max_replication_slots = '10'
shared_buffers=2048MB
work_mem=128MB
effective_cache_size=6144MB
maintenance_work_mem=512MB
max_wal_size=10GB
```
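Since `postgresql.auto.conf` is maintained by `ALTER SYSTEM`, the same settings can be reproduced on another instance by turning each line into an `ALTER SYSTEM SET` statement. A sketch with a hypothetical `to_alter_system` filter; it handles both the quoted (`name = 'value'`) and unquoted (`name=value`) forms that appear in the file, and doubles any single quote inside a value:

```bash
#!/usr/bin/env bash
# Read postgresql.auto.conf-style lines on stdin and print one
# ALTER SYSTEM statement per setting. Comments and empty lines are skipped.
to_alter_system() {
  while IFS= read -r line; do
    case $line in ''|'#'*) continue ;; esac
    name=${line%%=*}                 # text before the first "="
    value=${line#*=}                 # text after the first "="
    name=$(printf '%s' "$name" | tr -d '[:space:]')
    # trim blanks, drop the surrounding quotes, escape inner quotes
    value=$(printf '%s' "$value" | sed -e "s/^[[:space:]]*//" -e "s/[[:space:]]*$//" \
      -e "s/^'//" -e "s/'$//" -e "s/'/''/g")
    printf "ALTER SYSTEM SET %s = '%s';\n" "$name" "$value"
  done
}
```

Usage: `to_alter_system < postgresql.auto.conf | psql` against the target instance. Parameters such as `shared_buffers` still need a restart to take effect.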

Next we calculated the minimum number of huge pages the PostgreSQL instance needs: the VmPeak of the postmaster (in kB) divided by the huge page size of 2048 kB:

```
postgres@pgpurestorage:/u02/pgdata/pgpure/ [pgpure] head -1 $PGDATA/postmaster.pid
3662
postgres@pgpurestorage:/u02/pgdata/pgpure/ [pgpure] grep ^VmPeak /proc/3662//status
VmPeak:	 2415832 kB
postgres@pgpurestorage:/u02/pgdata/pgpure/ [pgpure] echo "2415832/2048" | bc
1179
```
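Note that `bc` truncates. 2415832/2048 is about 1179.6, and 1179 pages (2414592 kB) fall just short of VmPeak, so the exact minimum is 1180. Setting the value slightly higher, as done next, covers this anyway. The same calculation with rounding up, as a sketch. The function names are hypothetical, and it assumes `$PGDATA` is set and the instance is running:

```bash
#!/usr/bin/env bash
# Number of huge pages needed to cover KB kilobytes, rounded up.
# Usage: hugepages_for_kb KB PAGE_KB
hugepages_for_kb() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

# The calculation from the transcript for the running postmaster:
# VmPeak from /proc/<pid>/status, page size from /proc/meminfo (both kB).
hugepages_for_postmaster() {
  pid=$(head -n 1 "$PGDATA/postmaster.pid")
  peak=$(awk '/^VmPeak:/ { print $2 }' "/proc/$pid/status")
  page=$(awk '/^Hugepagesize:/ { print $2 }' /proc/meminfo)
  hugepages_for_kb "$peak" "$page"
}
```

With the numbers above, `hugepages_for_kb 2415832 2048` gives 1180 instead of 1179.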

We set it slightly higher, to 1200:

```
postgres@pgpurestorage:/u02/pgdata/pgpure/ [pgpure] sudo sed -i 's/vm.nr_hugepages=1024/vm.nr_hugepages=1200/g' /usr/lib/tuned/dbi-postgres/tuned.conf
postgres@pgpurestorage:/u02/pgdata/pgpure/ [pgpure] sudo tuned-adm profile dbi-postgres
postgres@pgpurestorage:/u02/pgdata/pgpure/ [pgpure] cat /proc/meminfo | grep Huge
AnonHugePages:      6144 kB
HugePages_Total:    1200
HugePages_Free:     1200
HugePages_Rsvd:        0
HugePages_Surp:        0
Hugepagesize:       2048 kB
```
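The `sed` expression above only matches while the current value is exactly 1024. Run a second time with another value, it silently changes nothing. A sketch that replaces whatever value is set; `set_nr_hugepages` is a hypothetical helper, and it relies on GNU `sed -i` as on CentOS:

```bash
#!/usr/bin/env bash
# Set vm.nr_hugepages in a tuned.conf to N, whatever the current value is.
# Usage: set_nr_hugepages FILE N  (edits FILE in place with GNU sed)
set_nr_hugepages() {
  file=$1 n=$2
  case $n in ''|*[!0-9]*) echo "not a number: $n" >&2; return 1 ;; esac
  if grep -q '^vm\.nr_hugepages[[:space:]]*=' "$file"; then
    sed -i "s/^vm\.nr_hugepages[[:space:]]*=.*/vm.nr_hugepages=$n/" "$file"
  else
    echo "vm.nr_hugepages not found in $file" >&2
    return 1
  fi
}
```

After changing the file, `tuned-adm profile dbi-postgres` has to be run again to apply it, as above.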

To disable transparent huge pages we created a file called “disable-thp.service” (from here):

```
postgres@pgpurestorage:/u02/pgdata/pgpure/ [pgpure] cat /etc/systemd/system/disable-thp.service
# Disable transparent huge pages
# put this file under:
#   /etc/systemd/system/disable-thp.service
# Then:
#   sudo systemctl daemon-reload
#   sudo systemctl start disable-thp
#   sudo systemctl enable disable-thp
[Unit]
Description=Disable Transparent Huge Pages (THP)

[Service]
Type=simple
ExecStart=/bin/sh -c "echo 'never' > /sys/kernel/mm/transparent_hugepage/enabled && echo 'never' > /sys/kernel/mm/transparent_hugepage/defrag"

[Install]
WantedBy=multi-user.target
```

Then reload the systemd daemon and start and enable the service:

```
postgres@pgpurestorage:/u02/pgdata/pgpure/ [pgpure] sudo systemctl daemon-reload
postgres@pgpurestorage:/u02/pgdata/pgpure/ [pgpure] sudo systemctl start disable-thp
postgres@pgpurestorage:/u02/pgdata/pgpure/ [pgpure] sudo systemctl enable disable-thp
```

To verify:

```
postgres@pgpurestorage:/u02/pgdata/pgpure/ [pgpure] cat /sys/kernel/mm/transparent_hugepage/enabled
always madvise [never]
postgres@pgpurestorage:/u02/pgdata/pgpure/ [pgpure] cat /sys/kernel/mm/transparent_hugepage/defrag
always madvise [never]
```
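The active value is the word in square brackets. For a scripted check (for example after a reboot), a sketch with two hypothetical helpers; the sysfs directory is a parameter so the check can also be tried on copies of the files:

```bash
#!/usr/bin/env bash
# Print the active value of a THP sysfs setting, i.e. the bracketed word:
# "always madvise [never]" -> "never".
thp_mode() {
  sed -n 's/.*\[\([^]]*\)\].*/\1/p' "$1"
}

# Succeed only if both "enabled" and "defrag" are set to never.
thp_disabled() {
  base=${1:-/sys/kernel/mm/transparent_hugepage}
  [ "$(thp_mode "$base/enabled")" = never ] &&
    [ "$(thp_mode "$base/defrag")" = never ]
}
```

`thp_disabled && echo "THP is off"` prints the message only when both settings show `[never]`.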

To make sure that PostgreSQL really uses the huge pages, set huge_pages to ‘on’. With this setting PostgreSQL refuses to start when the required pages cannot be allocated:

```
pgpurestorage/postgres MASTER (postgres@5432) # alter system set huge_pages='on';
ALTER SYSTEM
Time: 2.417 ms
```

… and then restart the instance. If everything is fine, PostgreSQL comes up.

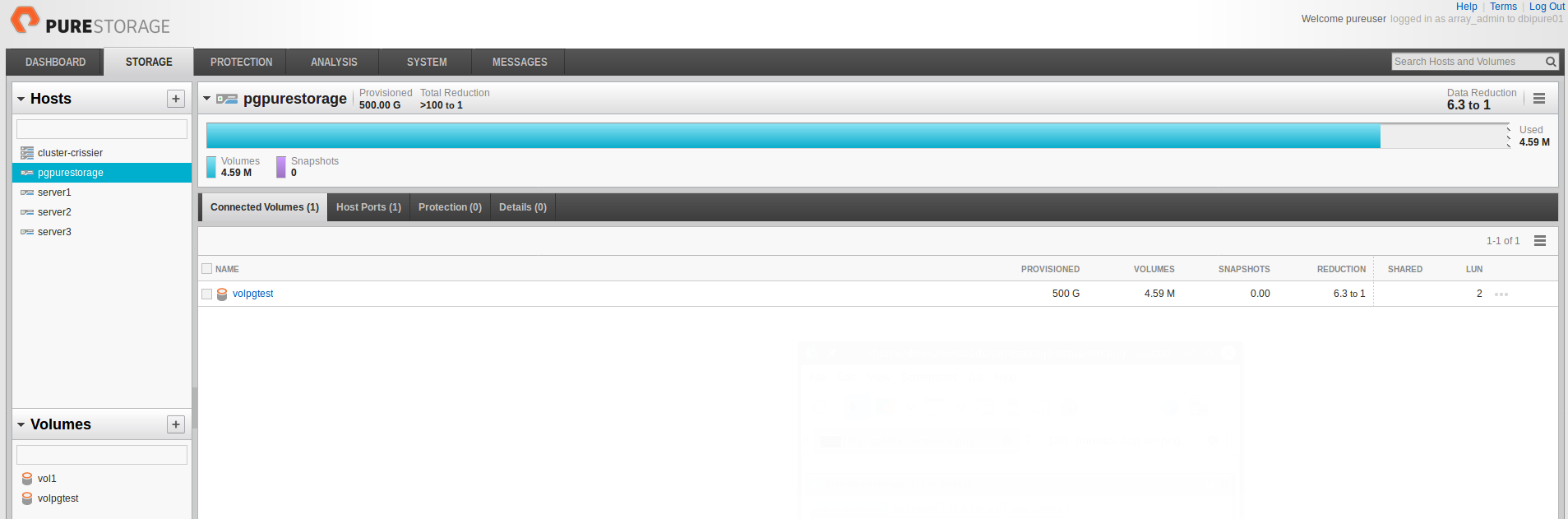

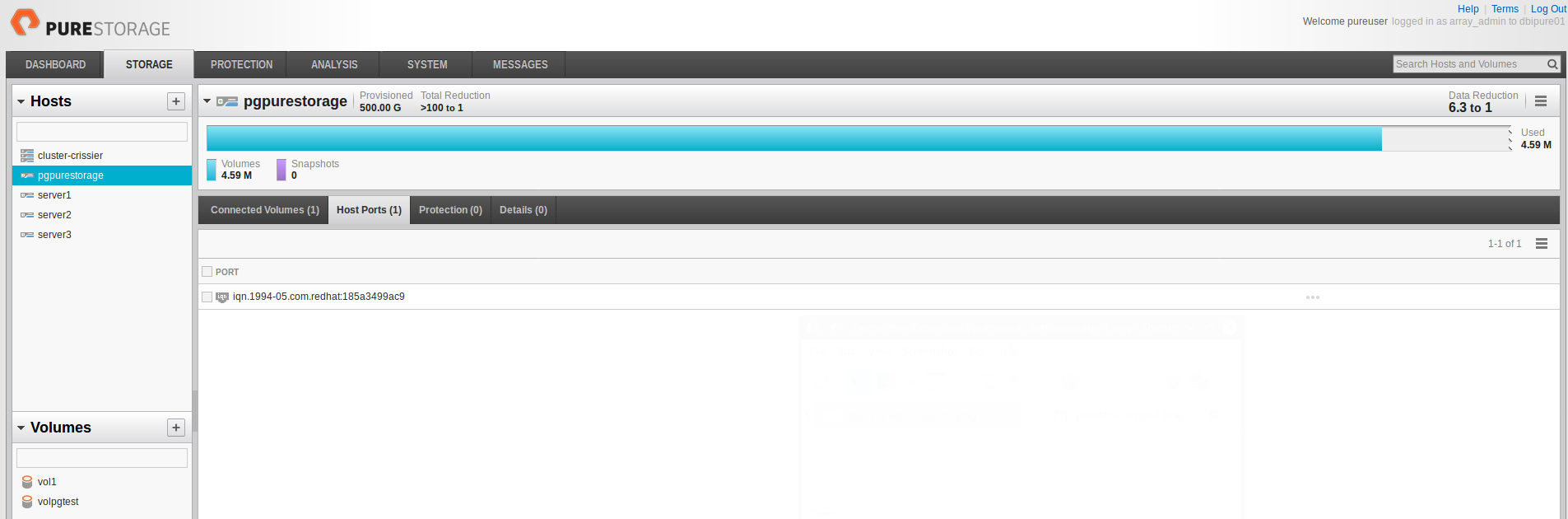
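After the restart, `/proc/meminfo` should show that the pool is being used. Our understanding of the kernel accounting is that pages already faulted in are `HugePages_Total - HugePages_Free`, while pages promised to a mapping but not yet touched are counted in `HugePages_Rsvd`. Before the restart above this was 1200 - 1200 + 0 = 0. A sketch of that calculation; `hugepages_committed` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Huge pages claimed from the pool: faulted in (Total - Free) plus
# reserved but not yet touched (Rsvd). Reads /proc/meminfo by default.
hugepages_committed() {
  awk '
    /^HugePages_Total:/ { t = $2 }
    /^HugePages_Free:/  { f = $2 }
    /^HugePages_Rsvd:/  { r = $2 }
    END { print t - f + r }
  ' "${1:-/proc/meminfo}"
}
```

With `huge_pages=on` and a running instance, this number should be well above zero. If it stays at 0, the shared memory is not coming from the huge page pool.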

Finally, to close this setup post, here are some screenshots of the Pure Storage Management Web Console. The first one shows the “Storage” tab, where you can see that the volume “volpgtest” is mapped to my host “pgpurestorage”.

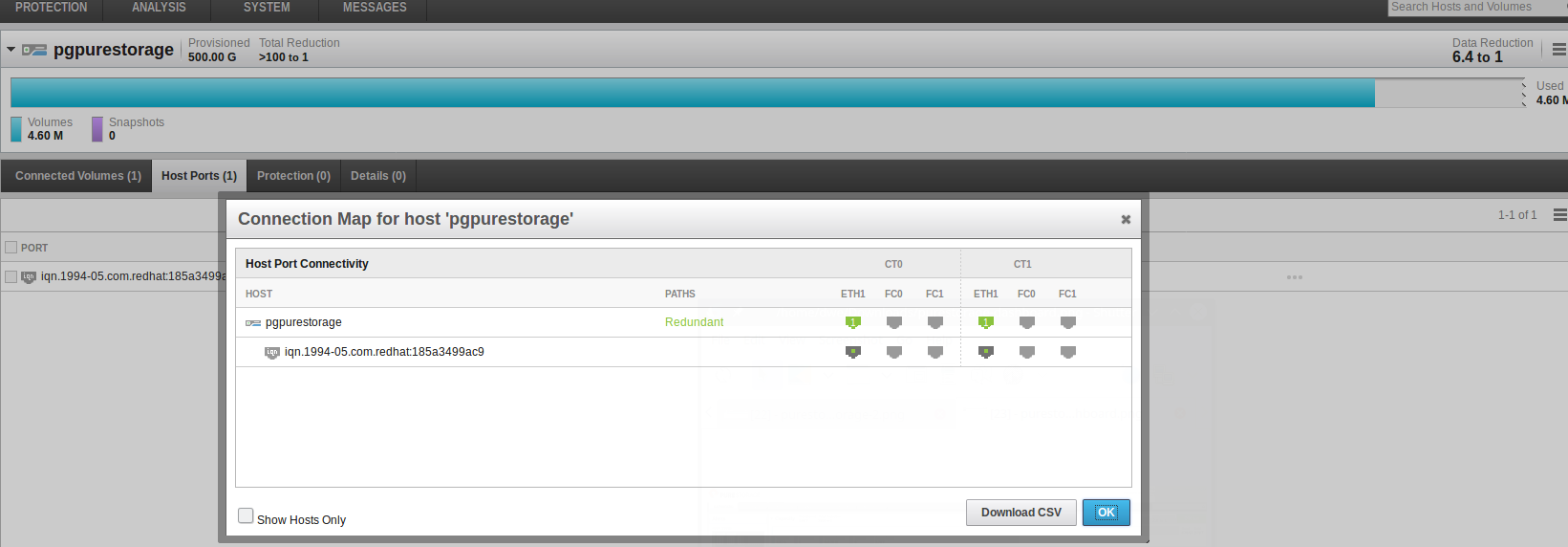

The name you give the host is not important. What matters is the “Host Port” mapping, which you can see here (this is the iSCSI IQN):

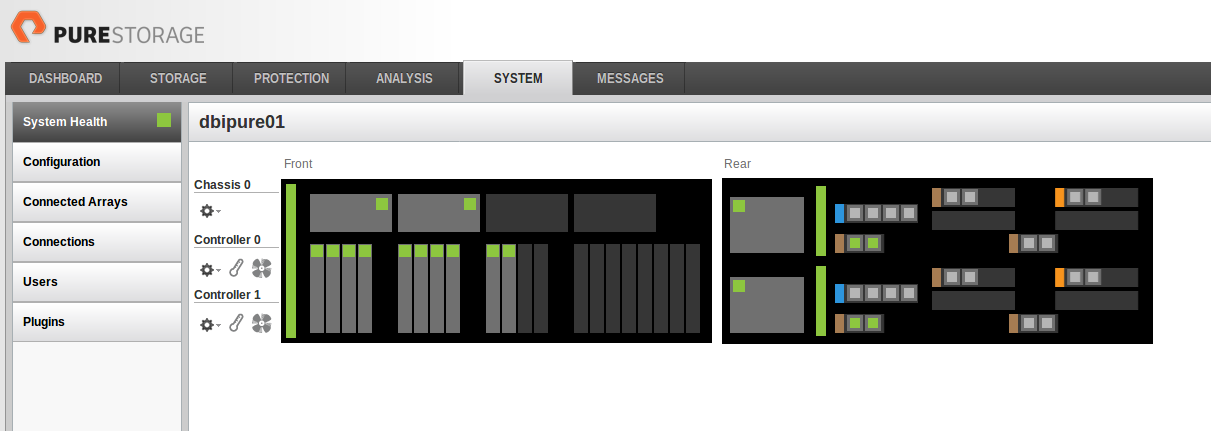

Once your server is connected, you can see it in the host's connection map in the console:

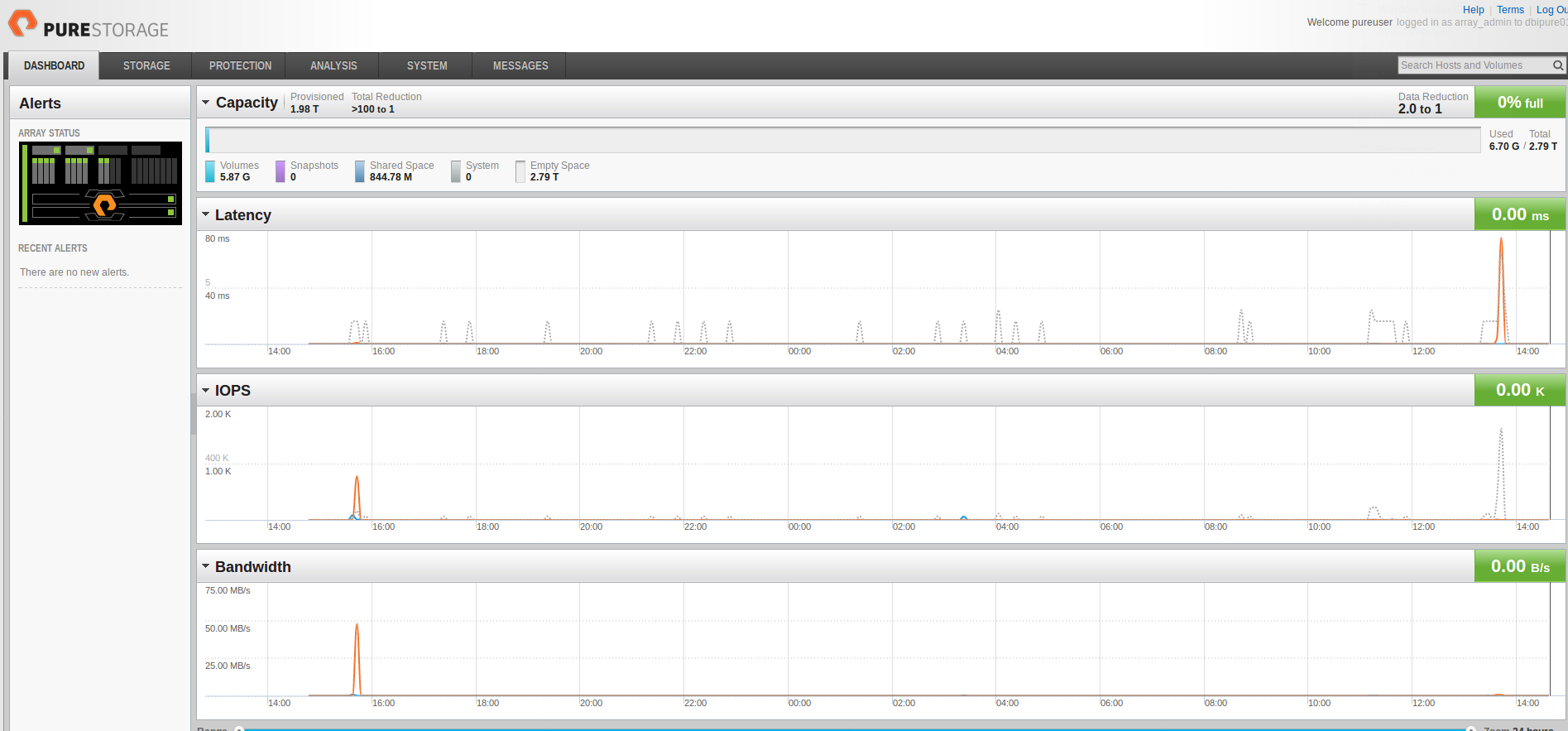

Last, but not least, here is the dashboard:

Not much traffic right now but we’ll be changing that in the next post.
