By Mouhamadou Diaw

Patroni is one the most famous tool that can be used to setup a high avalaibilty for PostgreSQL. In this blog I am describing a test I did.

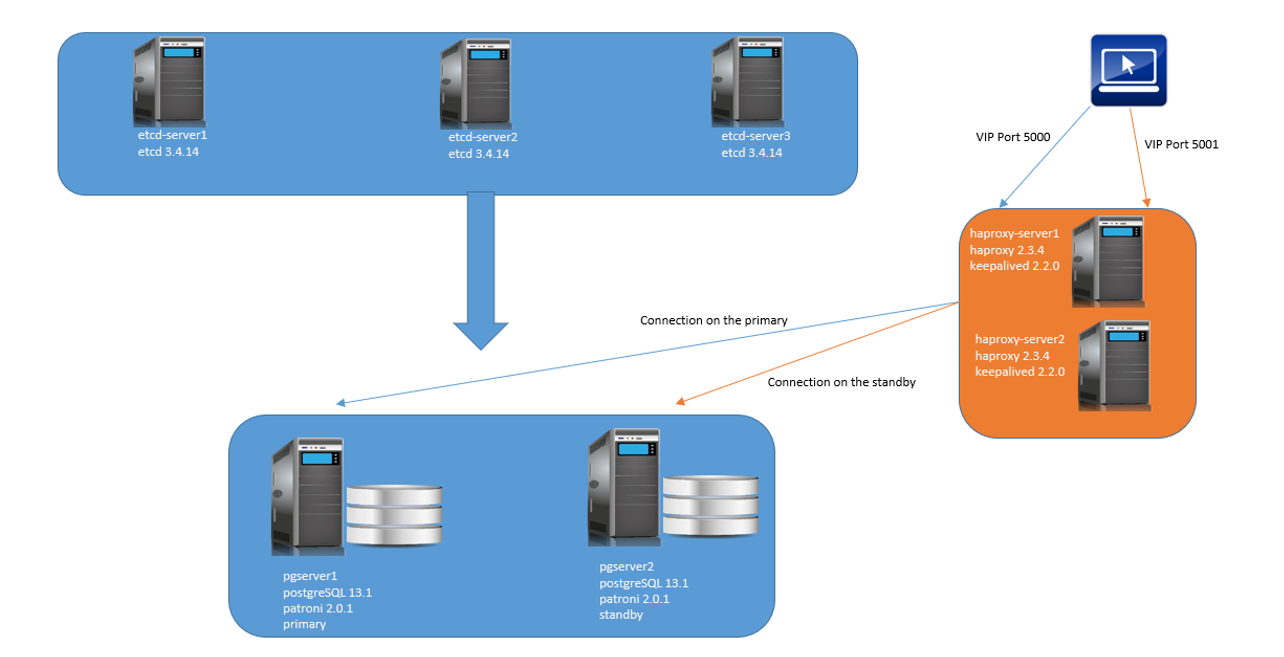

Below the environment we will use

The etcd cluster will contains 3 servers

etcd-server1: 192.168.56.100

etcd-server2: 192.168.56.101

etcd-server3: 192.168.56.106

HAProxy and Keepalived will be configured in this 2 servers.

haproxy-server1: 192.168.56.102

haproxy-server2: 192.168.56.103

A VIP will be configured

haproxyvip 192.168.56.108

We have 2 databases servers

pgserver1: 192.168.56.104 (initial primary server)

pgserver2: 192.168.56.105

Below a resume of all IP used in this blog

|

1

2

3

4

5

6

7

8

|

etcd-server1: 192.168.56.100etcd-server2: 192.168.56.101etcd-server3: 192.168.56.106haproxy-server1: 192.168.56.102haproxy-server2: 192.168.56.103pgserver1: 192.168.56.104pgserver2: 192.168.56.105haproxyvip 192.168.56.108 |

All servers are ppc64le architecture. But the steps will not change if you are using X64 architecture

Etcd Install

The first steps is to install etcd on all etcd servers

etcd-server1

etcd-server2

etcd-server3

For the installation of etcd, the latest archive is downloaded here

The following steps were done on all 3 etc servers

Unpack the etcd downloaded archive

|

1

|

[root@etcd-server1 ~]# tar xvzf etcd-v3.4.14-linux-ppc64le.tar.gz |

And then copy following executables to /usr/local/bin

|

1

2

3

|

[root@etcd-server1 etcd-v3.4.14-linux-ppc64le]# pwd/root/etcd-v3.4.14-linux-ppc64le[root@etcd-server1 etcd-v3.4.14-linux-ppc64le]# cp etcd etcdctl /usr/local/bin/ |

For the startup a etcd service is created for both servers. This service will contain the configuration values of the etcd cluster.

Below the contents of each service

-etcd.service etcd-server1

|

1

2

3

4

5

6

7

8

9

10

11

12

13

|

[root@etcd-server1 ~]# cat /etc/systemd/system/etcd.service[Unit]Description=etcd serviceDocumentation=https://github.com/coreos/etcd[Service]Type=notifyExecStart=/usr/local/bin/etcd --name=etcd0 --data-dir=/var/lib/etcd/default.etcd --initial-advertise-peer-urls=http://192.168.56.100:2380 --listen-peer-urls=http://192.168.56.100:2380 --listen-client-urls=http://192.168.56.100:2379,http://127.0.0.1:2379 --advertise-client-urls=http://192.168.56.100:2379 --initial-cluster-token=etcd-cluster-1 --initial-cluster=etcd0=http://192.168.56.100:2380,etcd1=http://192.168.56.101:2380,etcd2=http://192.168.56.106:2380 --initial-cluster-state=newRestart=on-failureRestartSec=5[Install]WantedBy=multi-user.target[root@etcd-server1 ~]# |

-etcd.service etcd-server2

|

1

2

3

4

5

6

7

8

9

10

11

12

|

[root@etcd-server2 ~]# cat /etc/systemd/system/etcd.serviceDescription=etcd serviceDocumentation=https://github.com/coreos/etcd[Service]Type=notifyExecStart=/usr/local/bin/etcd --name=etcd1 --data-dir=/var/lib/etcd/default.etcd --initial-advertise-peer-urls=http://192.168.56.101:2380 --listen-peer-urls=http://192.168.56.101:2380 --listen-client-urls=http://192.168.56.101:2379,http://127.0.0.1:2379 --advertise-client-urls=http://192.168.56.101:2379 --initial-cluster-token=etcd-cluster-1 --initial-cluster=etcd0=http://192.168.56.100:2380,etcd1=http://192.168.56.101:2380,etcd2=http://192.168.56.106:2380 --initial-cluster-state=newRestart=on-failureRestartSec=5[Install]WantedBy=multi-user.target[root@etcd-server2 ~]# |

-etcd.service etcd-server3

|

1

2

3

4

5

6

7

8

9

10

11

12

13

|

[root@etcd-server3 ~]# cat /etc/systemd/system/etcd.service[Unit]Description=etcd serviceDocumentation=https://github.com/coreos/etcd[Service]Type=notifyExecStart=/usr/local/bin/etcd --name=etcd2 --data-dir=/var/lib/etcd/default.etcd --initial-advertise-peer-urls=http://192.168.56.106:2380 --listen-peer-urls=http://192.168.56.106:2380 --listen-client-urls=http://192.168.56.106:2379,http://127.0.0.1:2379 --advertise-client-urls=http://192.168.56.106:2379 --initial-cluster-token=etcd-cluster-1 --initial-cluster=etcd0=http://192.168.56.100:2380,etcd1=http://192.168.56.101:2380,etcd2=http://192.168.56.106:2380 --initial-cluster-state=newRestart=on-failureRestartSec=5[Install]WantedBy=multi-user.target[root@etcd-server3 ~]# |

Now let’s start etcd services on both servers (stop your firewall or do requested rules)

|

1

2

3

4

5

6

|

[root@etcd-server1 ~]# systemctl start etcd.service[root@etcd-server2 ~]# systemctl start etcd.service[root@etcd-server3 ~]# systemctl start etcd.service[root@etcd-server1 ~]# systemctl enable etcd.service[root@etcd-server2 ~]# systemctl enable etcd.service[root@etcd-server3 ~]# systemctl enable etcd.service |

If everything is OK, you can query the state of the etcd cluster

|

1

2

3

4

5

6

7

8

|

[root@etcd-server2 ~]# etcdctl endpoint status --cluster -w table+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+| http://192.168.56.101:2379 | 56fde5fc4136bc8b | 3.4.14 | 328 kB | false | false | 18 | 14 | 14 | || http://192.168.56.100:2379 | fa26cebd7281c24 | 3.4.14 | 328 kB | false | false | 18 | 14 | 14 | || http://192.168.56.106:2379 | b75652c4850bb9d2 | 3.4.14 | 328 kB | true | false | 18 | 14 | 14 | |+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+ |

Keepalived and HAProxy Install

These components are installed on following servers

haproxy-server1

haproxy-server2

To install Keepalived download the latest archive here

You will need maybe to install some linux required packages.

Unpack the archive and do the configure

|

1

2

3

|

[root@ haproxy-server1 ~]# tar xvzf keepalived-2.2.0.tar.gz[root@ haproxy-server1 ~]# cd keepalived-2.2.0/[root@ haproxy-server1 keepalived-2.2.0]# ./configure |

If there is no error then run make and make install

|

1

2

|

[root@ haproxy-server1 keepalived-2.2.0]# make[root@ haproxy-server1 keepalived-2.2.0]# make install |

For Keepalived startup the same service is created on both servers.

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

|

[root@ haproxy-server1 ~]# cat /etc/systemd/system/keepalived.service[Unit]Description=LVS and VRRP High Availability MonitorAfter=network-online.target syslog.targetWants=network-online.target[Service]Type=forkingPIDFile=/run/keepalived.pidKillMode=processEnvironmentFile=-/usr/local/etc/sysconfig/keepalivedExecStart=/usr/local/sbin/keepalived $KEEPALIVED_OPTIONSExecReload=/bin/kill -HUP $MAINPID[Install]WantedBy=multi-user.target[root@etcd- haproxy-server1 ~]# |

Before starting keepalived, we have to configure a configuration file on both servers.

-haproxy-server1

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

|

[root@haproxy-server1 ]# cat /etc/keepalived/keepalived.confglobal_defs {}vrrp_script chk_haproxy { # Requires keepalived-1.1.13 script "killall -0 haproxy" # widely used idiom interval 2 # check every 2 seconds weight 2 # add 2 points of prio if OK}vrrp_instance VI_1 { interface eth0 state MASTER # or "BACKUP" on backup priority 101 # 101 on master, 100 on backup virtual_router_id 51 authentication { auth_type PASS auth_pass 1234 } virtual_ipaddress { 192.168.56.108 } track_script { chk_haproxy }}[root@haproxy-server1 ]# |

-haproxy-server2

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

|

[root@haproxy-server2 ]# cat /etc/keepalived/keepalived.confglobal_defs {}vrrp_script chk_haproxy { # Requires keepalived-1.1.13 script "killall -0 haproxy" # widely used idiom interval 2 # check every 2 seconds weight 2 # add 2 points of prio if OK}vrrp_instance VI_1 { interface eth0 state BACKUP # or "BACKUP" on backup priority 100 # 101 on master, 100 on backup virtual_router_id 51 authentication { auth_type PASS auth_pass 1234 } virtual_ipaddress { 192.168.56.108 } track_script { chk_haproxy }} [root@haproxy-server2 ]# |

Now it’s time to start Keepalived on both servers can be started on both servers

|

1

2

3

4

5

6

7

|

[root@haproxy-server1 keepalived]# systemctl start keepalived[root@haproxy-server1 keepalived]# systemctl status keepalived[root@haproxy-server1 keepalived]# systemctl enable keepalived[root@haproxy-server2 keepalived]# systemctl start keepalived[root@haproxy-server2 keepalived]# systemctl status keepalived[root@haproxy-server2 keepalived]# systemctl enable keepalived |

If everything is fine, the VIP 192.168.56.108 should be run on one server and will automatically failover to the the second server if there is any issue with the server.

Now let’s install the HAProxy on both servers haproxy-server1 and haproxy-server2. We have downloaded the latest HAProxy archive here

Then unpack the archive and run the make and make install commands (Maybe you will have to install some linux packages)

|

1

2

3

4

|

[root@etcd-server2 ~]# tar xzzf haproxy-2.3.4.tar.gz[root@etcd-server2 ~]# cd haproxy-2.3.4/[root@haproxy-server1 haproxy-2.1.5]# make TARGET=linux-glibc[root@haproxy-server1 haproxy-2.1.5]# make install |

Following directories are created in my case

|

1

2

3

|

[root@haproxy-server1 haproxy-2.1.5]# mkdir -p /etc/haproxy[root@haproxy-server1 haproxy-2.1.5]# mkdir -p /var/lib/haproxy[root@haproxy-server1 haproxy-2.1.5]# touch /var/lib/haproxy/stats |

To startup HAProxy a service is created on both HAProxy servers

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

|

[root@haproxy-server1 system]# cat haproxy.service[Unit]Description=HAProxy Load BalancerDocumentation=man:haproxy(1)Documentation=file:/usr/share/doc/haproxy/configuration.txt.gz# allows us to do millisecond level restarts without triggering alert in Systemd#StartLimitInterval=0#StartLimitBurst=0After=network.target syslog.serviceWants=syslog.service[Service]Environment="CONFIG=/etc/haproxy/haproxy.cfg" "PIDFILE=/run/haproxy.pid"# EXTRAOPTS and RELOADOPS come from this default file# EnvironmentFile=-/etc/default/haproxyExecStartPre=/usr/local/sbin/haproxy -f $CONFIG -c -qExecStart=/usr/local/sbin/haproxy -W -f $CONFIG -p $PIDFILE $EXTRAOPTSExecReload=/usr/local/sbin/haproxy -f $CONFIG -c -q $EXTRAOPTS $RELOADOPTSExecReload=/bin/kill -USR2 $MAINPIDKillMode=mixed#Restart=always#Type=forkingRestart=on-failureRestartSec=5[Install]WantedBy=multi-user.target[root@haproxy-server1 system]# |

The same haproxy.cfg file is configured on both HAProxy servers like

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

|

[root@haproxy-server1 ]# cat /etc/haproxy/haproxy.cfgglobal maxconn 100defaults log global mode tcp retries 2 timeout client 30m timeout connect 4s timeout server 30m timeout check 5slisten stats mode http bind *:7000 stats enable stats uri /listen production bind 192.168.56.108:5000 option httpchk OPTIONS/master http-check expect status 200 default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions server postgresql_192.168.56.104_5432 192.168.56.104:5432 maxconn 100 check port 8008 server postgresql_192.168.56.105_5432 192.168.56.105:5432 maxconn 100 check port 8008listen standby bind 192.168.56.108:5001 option httpchk OPTIONS/replica http-check expect status 200 default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions server postgresql_192.168.56.104_5432 192.168.56.104:5432 maxconn 100 check port 8008 server postgresql_192.168.56.105_5432 192.168.56.105:5432 maxconn 100 check port 8008[root@haproxy-server1 ]# |

Let’s start HAProxy

|

1

2

3

4

5

6

7

8

9

|

[root@haproxy-server1 haproxy-2.1.5]# setsebool -P haproxy_connect_any=1[root@haproxy-server1 system]# systemctl enable haproxy.service[root@haproxy-server1 system]# systemctl start haproxy.service[root@haproxy-server1 system]# systemctl status haproxy.service[root@haproxy-server2 system]# setsebool -P haproxy_connect_any=1[root@haproxy-server2 system]# systemctl start haproxy.service[root@haproxy-server2 system]# systemctl status haproxy.service[root@haproxy-server2 system]# systemctl enable haproxy.service |

Patroni Install

Patroni will be installed on both PostgreQL servers pgserver1 and pgserver2. Some components will be installed with root and some components with the user postgres

In my case I had to install some linux packages

|

1

2

|

[root@pgserver1 ~]# yum install python3-pip.noarch[root@pgserver1 ~]# yum install python36-devel.x86_64 |

With the user root install following component

|

1

|

[root@pgserver1 ~]# pip3 install --upgrade setuptools |

With the user postgres install these components

|

1

2

3

4

|

[postgres@pgserver1 ~]$ pip3 install --user psycopg2-binary[postgres@pgserver1 ~]$ pip3 install --user python-etcd[postgres@pgserver1 ~]$ pip3 install --user wheel[postgres@pgserver1 ~]$ pip3 install --user patroni |

If all components are installed without error, we can now configure a configuration file for each patroni

-pgserver1

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

|

[postgres@pgserver1 cat /etc/patroni.yamlscope: postgres_pocname: pg-patroni1restapi: listen: 192.168.56.104:8008 connect_address: 192.168.56.104:8008etcd3: hosts: 192.168.56.100:2379,192.168.56.101:2379,192.168.56.106:2379bootstrap: dcs: ttl: 30 loop_wait: 10 retry_timeout: 10 maximum_lag_on_failover: 1048576 postgresql: use_pg_rewind: truepostgresql: listen: 192.168.56.104:5432 bin_dir: /u01/app/postgres/product/13/db_1/bin connect_address: 192.168.56.104:5432 data_dir: /u02/pgdata pgpass: /tmp/pgpass authentication: replication: username: postgres password: postgres superuser: username: postgres password: postgres parameters: unix_socket_directories: '.'tags: nofailover: false noloadbalance: false clonefrom: false nosync: false[postgres@pgserver1 ~]$ |

-pgserver1

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

|

[postgres@pgserver2 ~]$ cat /etc/patroni.yamlscope: postgres_pocname: pg-patroni2restapi: listen: 192.168.56.105:8008 connect_address: 192.168.56.105:8008etcd3: hosts: 192.168.56.100:2379,192.168.56.101:2379,192.168.56.106:2379bootstrap: dcs: ttl: 30 loop_wait: 10 retry_timeout: 10 maximum_lag_on_failover: 1048576 postgresql: use_pg_rewind: truepostgresql: listen: 192.168.56.105:5432 bin_dir: /u01/app/postgres/product/13/db_1/bin connect_address: 192.168.56.105:5432 data_dir: /u02/pgdata pgpass: /tmp/pgpass authentication: replication: username: postgres password: postgres superuser: username: postgres password: postgres parameters: unix_socket_directories: '.'tags: nofailover: false noloadbalance: false clonefrom: false nosync: false[postgres@pgserver2 ~]$ |

The pg_hba.conf file should be configured on both databse servers to allow connections from the different servers. Then patroni can be started on both servers.

On pgserver1 which is actullaly the primary, we will see outputs like

|

1

2

3

4

5

6

7

|

[postgres@pgserver1 PG1]$ patroni /etc/patroni.yaml……2020-01-15 20:31:45,898 INFO: acquired session lock as a leader2020-01-15 20:31:55,862 INFO: Lock owner: pg-patroni1; I am pg-patroni12020-01-15 20:31:55,871 INFO: Lock owner: pg-patroni1; I am pg-patroni12020-01-15 20:31:55,905 INFO: no action. i am the leader with the lock |

On pgserver2 which is the standby server we will have outputs like

|

1

2

3

4

5

|

[postgres@pgserver2 PG1]$ patroni /etc/patroni.yaml..2020-01-15 20:41:25,941 INFO: Lock owner: pg-patroni1; I am pg-patroni22020-01-15 20:41:25,941 INFO: does not have lock2020-01-15 20:41:25,952 INFO: no action. i am a secondary and i am following a leader |

For the automatic startup of Patroni a service can be created on both databases servers

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

|

[root@pgserver1 ~]# cat /etc/systemd/system/patroni.service[Service]Type=simpleUser=postgresGroup=postgres#ExecStart=/usr/local/bin/patroni /opt/patroni/patroni.ymlExecStart=/home/postgres/.local/bin/patroni /etc/patroni.yamlKillMode=processTimeoutSec=30Restart=no[Install]WantedBy=multi-user.target[root@pgserver1 ~]# |

The command patronictl will be used to manage Patroni

-List the members of the configuration

|

1

2

3

4

5

6

7

8

|

[postgres@pgserver1 ~]$ patronictl -c /etc/patroni.yaml list+----------+-------------+----------------+--------+---------+----+-----------+| Cluster | Member | Host | Role | State | TL | Lag in MB |+----------+-------------+----------------+--------+---------+----+-----------+| postgres | pg-patroni1 | 192.168.56.104 | Leader | running | 1 | || postgres | pg-patroni2 | 192.168.56.105 | Replica| running | 1 | 0 |+----------+-------------+----------------+--------+---------+----+-----------+ |

Perform a switchover

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

|

[postgres@pgserver1 ~]$ patronictl -c /etc/patroni.yaml switchoverMaster [pg-patroni1]:Candidate ['pg-patroni2'] []:When should the switchover take place (e.g. 2021-01-15T11:16 ) [now]:Current cluster topology+ Cluster: postgres_poc (6917537380726361322) ----+----+-----------+| Member | Host | Role | State | TL | Lag in MB |+-------------+---------------+---------+---------+----+-----------+| pg-patroni1 | 192.168.56.104| Leader | running | 1 | || pg-patroni2 | 192.168.56.105| Replica | running | 1 | 0 |+-------------+---------------+---------+---------+----+-----------+Are you sure you want to switchover cluster postgres_poc, demoting current master pg-patroni1? [y/N]: y2021-01-15 10:16:35.39948 Successfully switched over to "pg-patroni2"+ Cluster: postgres_poc (6917537380726361322) ----+----+-----------+| Member | Host | Role | State | TL | Lag in MB |+-------------+---------------+---------+---------+----+-----------+| pg-patroni1 | 192.168.56.104| Replica | stopped | | unknown || pg-patroni2 | 192.168.56.105| Leader | running | 1 | |+-------------+---------------+---------+---------+----+-----------+[postgres@pgserver1 ~]$ patronictl -c /etc/patroni.yaml list+ Cluster: postgres_poc (6917537380726361322) ----+----+-----------+| Member | Host | Role | State | TL | Lag in MB |+-------------+---------------+---------+---------+----+-----------+| pg-patroni1 | 192.168.56.104| Replica | running | 2 | 0 || pg-patroni2 | 192.168.56.105| Leader | running | 2 | |+-------------+---------------+---------+---------+----+-----------+ |

If the primary crash, the replica will be automatically promoted.

For the connection to the databases, client can use the VIP configured via HAProxy

-To connect to the standby the port 5001 is used

|

1

2

3

4

5

6

7

8

9

10

|

[postgres@ [~]> psql -h 192.168.56.108 -p 5001 -U postgresPassword for user postgres:psql (13.1)SSL connection (protocol: TLSv1.2, cipher: ECDHE-RSA-AES256-GCM-SHA384, bits: 256, compression: off)Type "help" for help.postgres=# select pg_is_in_recovery(); pg_is_in_recovery------------------- t(1 row) |

-To connect to the primary the port 5000 is used

|

1

2

3

4

5

6

7

8

9

10

|

[postgres@ [~]> psql -h 192.168.56.108 -p 5000 -U postgresPassword for user postgres:psql (13.1)SSL connection (protocol: TLSv1.2, cipher: ECDHE-RSA-AES256-GCM-SHA384, bits: 256, compression: off)Type "help" for help.postgres=# select pg_is_in_recovery(); pg_is_in_recovery------------------- f(1 row) |

Conclusion

Hope this may help

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/05/open-source-author.png)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/10/STS_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/STH_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/09/SNA_web-min-scaled.jpg)

Nadir

28.08.2024In this document i am unable to understand that incase of failure how HAProxy will come to know which etcd node is leader and it has to route the R/W connections to that node?