In the last post about SUSE Multi Linux Manager we had a look at how you can schedule OpenSCAP reports using the API. In this post we’ll look into something very basic: how to patch the server components of SUSE Multi Linux Manager. We speak about components because you need to patch both the host (SLE Micro in this case) and the container hosting the application.

Looking at the host operating system we can see this is a SLE Micro 5.5:

suma:~ $ cat /etc/os-release

NAME="SLE Micro"

VERSION="5.5"

VERSION_ID="5.5"

PRETTY_NAME="SUSE Linux Enterprise Micro 5.5"

ID="sle-micro"

ID_LIKE="suse"

ANSI_COLOR="0;32"

CPE_NAME="cpe:/o:suse:sle-micro:5.5"

As this comes with a read-only root file system, we cannot use zypper directly to patch the system. The tool to use in this case is transactional-update. It still uses zypper in the background, but the updates are installed into a new Btrfs snapshot. With this approach the running system is not touched at all, and the updates only become active when the system is rebooted into the new snapshot, which becomes the default for the next boot. If something is wrong with the new snapshot, the system can be booted from the old snapshot and is back to what it was before patching.
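
If you want a script to decide between zypper and transactional-update on its own, one option is to check whether the root file system is mounted read-only. The following is only a minimal sketch: the function name `root_is_readonly` is made up for this post, and the optional file argument exists only so the function can be tried against a sample mounts table instead of /proc/mounts.

```shell
# Succeeds when "/" is mounted read-only.
# Reads a mounts table in /proc/mounts format (hypothetical helper,
# the file argument is only there for trying it on sample data).
root_is_readonly() {
    mounts_file="${1:-/proc/mounts}"
    awk '
        # Remember the mount options of the last entry for "/".
        $2 == "/" { opts = $4 }
        END {
            n = split(opts, o, ",")
            for (i = 1; i <= n; i++) if (o[i] == "ro") exit 0
            exit 1
        }
    ' "$mounts_file"
}
```

On a host like the one in this post, `root_is_readonly && echo "use transactional-update"` should print the hint, while on a system with a writable root it prints nothing.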

Before we patch the host system let’s have a look at the snapshots we currently have available:

suma:~ $ snapper list

# | Type | Pre # | Date | User | Used Space | Cleanup | Description | Userdata

---+--------+-------+----------------------------------+------+------------+---------+-----------------------+--------------

0 | single | | | root | | | current |

1 | single | | Fri 08 Mar 2024 10:45:41 AM CET | root | 1.30 GiB | number | first root filesystem | important=yes

2 | single | | Mon 07 Jul 2025 12:18:08 PM CEST | root | 1.51 MiB | number | Snapshot Update of #1 | important=yes

3 | single | | Mon 07 Jul 2025 12:30:01 PM CEST | root | 1.02 MiB | number | Snapshot Update of #2 | important=yes

4 | single | | Tue 08 Jul 2025 05:33:39 AM CEST | root | 39.78 MiB | number | Snapshot Update of #3 | important=yes

5 | single | | Wed 16 Jul 2025 09:25:23 AM CEST | root | 45.07 MiB | | Snapshot Update of #4 |

6* | single | | Wed 23 Jul 2025 04:13:09 PM CEST | root | 58.62 MiB | | Snapshot Update of #5 |

Let’s patch and compare what we’ll have afterwards:

suma:~ $ zypper ref

Warning: The gpg key signing file 'repomd.xml' has expired.

Repository: SLE-Micro-5.5-Updates

Key Fingerprint: FEAB 5025 39D8 46DB 2C09 61CA 70AF 9E81 39DB 7C82

Key Name: SuSE Package Signing Key <build@suse.de>

Key Algorithm: RSA 2048

Key Created: Mon 21 Sep 2020 10:21:47 AM CEST

Key Expires: Fri 20 Sep 2024 10:21:47 AM CEST (EXPIRED)

Rpm Name: gpg-pubkey-39db7c82-5f68629b

Retrieving repository 'SLE-Micro-5.5-Updates' metadata ..............................................................................................................[done]

Building repository 'SLE-Micro-5.5-Updates' cache ...................................................................................................................[done]

Warning: The gpg key signing file 'repomd.xml' has expired.

Repository: SUSE-Manager-Server-5.0-Updates

Key Fingerprint: FEAB 5025 39D8 46DB 2C09 61CA 70AF 9E81 39DB 7C82

Key Name: SuSE Package Signing Key <build@suse.de>

Key Algorithm: RSA 2048

Key Created: Mon 21 Sep 2020 10:21:47 AM CEST

Key Expires: Fri 20 Sep 2024 10:21:47 AM CEST (EXPIRED)

Rpm Name: gpg-pubkey-39db7c82-5f68629b

Retrieving repository 'SUSE-Manager-Server-5.0-Updates' metadata ....................................................................................................[done]

Building repository 'SUSE-Manager-Server-5.0-Updates' cache .........................................................................................................[done]

Repository 'SLE-Micro-5.5-Pool' is up to date.

Repository 'SUSE-Manager-Server-5.0-Pool' is up to date.

All repositories have been refreshed.

suma:~ $ transactional-update

Checking for newer version.

transactional-update 4.1.9 started

Options:

Separate /var detected.

2025-07-30 09:42:32 tukit 4.1.9 started

2025-07-30 09:42:32 Options: -c6 open

2025-07-30 09:42:33 Using snapshot 6 as base for new snapshot 7.

2025-07-30 09:42:33 /var/lib/overlay/6/etc

2025-07-30 09:42:33 Syncing /etc of previous snapshot 5 as base into new snapshot "/.snapshots/7/snapshot"

2025-07-30 09:42:33 SELinux is enabled.

ID: 7

2025-07-30 09:42:36 Transaction completed.

Calling zypper up

2025-07-30 09:42:38 tukit 4.1.9 started

2025-07-30 09:42:38 Options: callext 7 zypper -R {} up -y --auto-agree-with-product-licenses

2025-07-30 09:42:39 Executing `zypper -R /tmp/transactional-update-JsIr01 up -y --auto-agree-with-product-licenses`:

Refreshing service 'SUSE_Linux_Enterprise_Micro_5.5_x86_64'.

Refreshing service 'SUSE_Manager_Server_Extension_5.0_x86_64'.

Loading repository data...

Reading installed packages...

The following 21 packages are going to be upgraded:

boost-license1_66_0 libboost_system1_66_0 libboost_thread1_66_0 libpolkit-agent-1-0 libpolkit-gobject-1-0 mgradm mgradm-bash-completion mgrctl mgrctl-bash-completion polkit python3-pyparsing python3-pytz python3-PyYAML python3-requests python3-salt python3-simplejson python3-urllib3 salt salt-minion salt-transactional-update uyuni-storage-setup-server

21 packages to upgrade.

Package download size: 16.8 MiB

Package install size change:

| 71.4 MiB required by packages that will be installed

654.0 KiB | - 70.8 MiB released by packages that will be removed

Backend: classic_rpmtrans

Continue? [y/n/v/...? shows all options] (y): y

...

2025-07-30 09:44:40 New default snapshot is #7 (/.snapshots/7/snapshot).

2025-07-30 09:44:40 Transaction completed.

Please reboot your machine to activate the changes and avoid data loss.

New default snapshot is #7 (/.snapshots/7/snapshot).

transactional-update finished

As the output notes, we must reboot the system for the updates to become active. Before we do that, let’s have another look at the snapshots:

suma:~ $ snapper list

# | Type | Pre # | Date | User | Used Space | Cleanup | Description | Userdata

---+--------+-------+----------------------------------+------+------------+---------+-----------------------+--------------

0 | single | | | root | | | current |

1 | single | | Fri 08 Mar 2024 10:45:41 AM CET | root | 1.30 GiB | number | first root filesystem | important=yes

2 | single | | Mon 07 Jul 2025 12:18:08 PM CEST | root | 1.51 MiB | number | Snapshot Update of #1 | important=yes

3 | single | | Mon 07 Jul 2025 12:30:01 PM CEST | root | 1.02 MiB | number | Snapshot Update of #2 | important=yes

4 | single | | Tue 08 Jul 2025 05:33:39 AM CEST | root | 39.78 MiB | number | Snapshot Update of #3 | important=yes

5 | single | | Wed 16 Jul 2025 09:25:23 AM CEST | root | 45.07 MiB | | Snapshot Update of #4 |

6- | single | | Wed 23 Jul 2025 04:13:09 PM CEST | root | 4.11 MiB | | Snapshot Update of #5 |

7+ | single | | Wed 30 Jul 2025 09:42:32 AM CEST | root | 88.39 MiB | | Snapshot Update of #6 |
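
The markers after the snapshot number carry the interesting information here: as far as the snapper man page describes it, `-` is the snapshot the system is currently running from, `+` is the default for the next boot, and `*` means both are the same. As a rough sketch (the function names are made up for this post, and the parsing assumes the column layout shown above), this can be turned into a "reboot pending" check:

```shell
# All functions read `snapper list` output on stdin.
# Marker after the snapshot number:
#   -  currently running   +  default for next boot   *  both

# Print the number of the currently running snapshot.
running_snapshot() {
    awk -F'|' '{ gsub(/ /, "", $1) }
        $1 ~ /^[0-9]+[-*]$/ { sub(/[-*]$/, "", $1); print $1 }'
}

# Print the number of the snapshot that will be booted next.
default_snapshot() {
    awk -F'|' '{ gsub(/ /, "", $1) }
        $1 ~ /^[0-9]+[+*]$/ { sub(/[+*]$/, "", $1); print $1 }'
}

# Succeeds when the running snapshot differs from the default one.
reboot_pending() {
    listing=$(cat)
    running=$(printf '%s\n' "$listing" | running_snapshot)
    next_default=$(printf '%s\n' "$listing" | default_snapshot)
    [ -n "$running" ] && [ "$running" != "$next_default" ]
}
```

With the listing above, `snapper list | reboot_pending && echo "reboot required"` should report that a reboot is required, because the system runs from snapshot 6 while snapshot 7 is the new default.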

We got a new snapshot (number 7) which is not yet active. Let’s reboot and check again:

suma:~ $ reboot

...

suma:~ $ snapper list

# | Type | Pre # | Date | User | Used Space | Cleanup | Description | Userdata

---+--------+-------+----------------------------------+------+------------+---------+-----------------------+--------------

0 | single | | | root | | | current |

1 | single | | Fri 08 Mar 2024 10:45:41 AM CET | root | 1.30 GiB | number | first root filesystem | important=yes

2 | single | | Mon 07 Jul 2025 12:18:08 PM CEST | root | 1.51 MiB | number | Snapshot Update of #1 | important=yes

3 | single | | Mon 07 Jul 2025 12:30:01 PM CEST | root | 1.02 MiB | number | Snapshot Update of #2 | important=yes

4 | single | | Tue 08 Jul 2025 05:33:39 AM CEST | root | 39.78 MiB | number | Snapshot Update of #3 | important=yes

5 | single | | Wed 16 Jul 2025 09:25:23 AM CEST | root | 45.07 MiB | | Snapshot Update of #4 |

6 | single | | Wed 23 Jul 2025 04:13:09 PM CEST | root | 4.11 MiB | | Snapshot Update of #5 |

7* | single | | Wed 30 Jul 2025 09:42:32 AM CEST | root | 88.39 MiB | | Snapshot Update of #6 |

The new snapshot became active and we’re fully patched on the host system.
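
To summarize the host part, the three steps from above can be wrapped into a small script. This is just a sketch under a few assumptions: the `run` and `patch_host` helpers and the `DRY_RUN` variable are made up for this post, and the script defaults to only printing what it would do, so nothing happens until you set `DRY_RUN=0` on purpose.

```shell
# Host patching steps from this post, wrapped so they can be previewed.
# DRY_RUN defaults to 1: commands are printed, not executed.
DRY_RUN="${DRY_RUN:-1}"

# Run a command, or only print it when DRY_RUN is 1.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf 'would run: %s\n' "$*"
    else
        "$@"
    fi
}

patch_host() {
    # Refresh the repository metadata.
    run zypper ref || return 1
    # Install the updates into a new snapshot; the running system stays untouched.
    run transactional-update || return 1
    # Boot into the new snapshot.
    run reboot
}
```

Calling `patch_host` with the default settings prints the three commands in order. If the new snapshot misbehaves after the reboot, `transactional-update rollback` is the command to look at for going back to an earlier snapshot.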

Now that the host system is fully patched, we can proceed with patching the SUSE Multi Linux Manager application. Before we do that, let’s check what we currently have:

suma:~ $ mgradm inspect

10:40AM INF Welcome to mgradm

10:40AM INF Executing command: inspect

10:40AM INF Computed image name is registry.suse.com/suse/manager/5.0/x86_64/server:5.0.4.1

10:40AM INF Ensure image registry.suse.com/suse/manager/5.0/x86_64/server:5.0.4.1 is available

WARN[0002] Path "/etc/SUSEConnect" from "/etc/containers/mounts.conf" doesn't exist, skipping

10:40AM INF

{

"CurrentPgVersion": "16",

"ImagePgVersion": "16",

"DBUser": "spacewalk",

"DBPassword": "<REDACTED>",

"DBName": "susemanager",

"DBPort": 5432,

"UyuniRelease": "",

"SuseManagerRelease": "5.0.4.1",

"Fqdn": "suma.dwe.local"

}
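
If you only need the release, for example to record it before patching, it can be pulled out of this output with sed. This is a minimal sketch: the function name `suma_release` is made up for this post, and it matches on the `SuseManagerRelease` key as shown above instead of parsing the JSON properly, because the JSON is mixed with log lines.

```shell
# Print the SuseManagerRelease value from `mgradm inspect` output on stdin.
# Log lines around the JSON are ignored; only the matching key is used.
suma_release() {
    sed -n 's/.*"SuseManagerRelease":[[:space:]]*"\([^"]*\)".*/\1/p'
}
```

On the server this would be used as `mgradm inspect 2>&1 | suma_release`.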

The currently running version is “5.0.4.1”. Patching is quite simple as this just updates the container:

suma:~ $ mgradm upgrade podman

10:41AM INF Welcome to mgradm

10:41AM INF Use of this software implies acceptance of the End User License Agreement.

10:41AM INF Executing command: podman

...

10:41AM INF No changes requested for hub. Keep 0 replicas.

10:41AM INF Computed image name is registry.suse.com/suse/manager/5.0/x86_64/server-hub-xmlrpc-api:5.0.5

10:41AM INF Ensure image registry.suse.com/suse/manager/5.0/x86_64/server-hub-xmlrpc-api:5.0.5 is available

10:42AM INF Cannot find RPM image for registry.suse.com/suse/manager/5.0/x86_64/server-hub-xmlrpc-api:5.0.5

Checking the version again:

suma:~ $ mgradm inspect

10:36AM INF Welcome to mgradm

10:36AM INF Use of this software implies acceptance of the End User License Agreement.

10:36AM INF Executing command: inspect

10:36AM INF Computed image name is registry.suse.com/suse/manager/5.0/x86_64/server:5.0.5

10:36AM INF Ensure image registry.suse.com/suse/manager/5.0/x86_64/server:5.0.5 is available

10:36AM ??? time="2025-07-30T10:36:20+02:00" level=warning msg="Path \"/etc/SUSEConnect\" from \"/etc/containers/mounts.conf\" doesn't exist, skipping"

10:36AM INF

{

"CurrentPgVersion": "16",

"ImagePgVersion": "16",

"DBUser": "spacewalk",

"DBPassword": "<REDACTED>",

"DBName": "susemanager",

"DBPort": 5432,

"UyuniRelease": "",

"SuseManagerRelease": "5.0.5",

"Fqdn": "suma.dwe.local"

}
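
If the upgrade runs from a script, you probably want to verify that the release really moved forward. One way to compare two release strings is the version sort of GNU `sort`. Again only a sketch: `version_lt` is a made-up helper name, and it assumes `sort -V` is available on the system.

```shell
# Succeeds when version $1 is strictly older than version $2.
# Relies on `sort -V` (version sort) from GNU coreutils.
version_lt() {
    [ "$1" != "$2" ] &&
        [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}
```

With the releases from this post, `version_lt 5.0.4.1 5.0.5` succeeds, while swapping the arguments or passing the same release twice fails.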

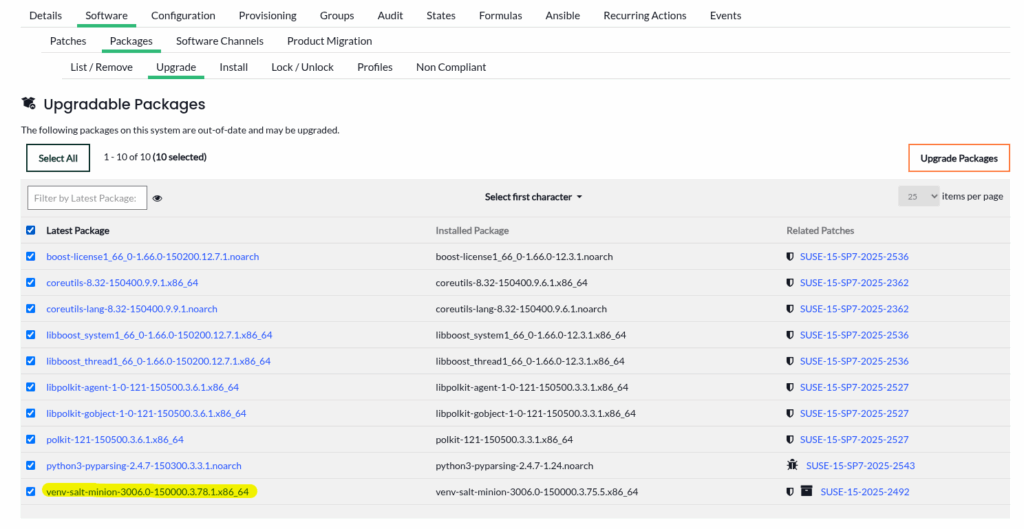

Now we are on version “5.0.5” and we’re done with our patching for the server part. Clients also should be upgraded, especially the Salt client as SUSE Multi Linux Manager uses Salt to manage the clients. You can either do that manually by using the package manager of the distributions you’re managing or you can do that from the WebUI:

That’s it: patching is not hard and the process is easy to follow.
