In contrary of views, materialized views avoid executing the SQL query for every access by storing the result set of the query.

When a master table is modified, the related materialized view becomes stale and a refresh is necessary to have the materialized view up to date.

I will not show you the materialized view concepts, the Oracle Datawarehouse Guide is perfect for that.

I will show you, from a user real case, all steps you have to follow to investigate and tune your materialized view refresh.

And, as very often in performance and tuning task, most of the performance issue comes from the way to write and design your SQL (here the SQL statement loading the materialized view).

First of all, I’m saying that spending almost 50 mins (20% of my DWH Load) to refresh materialized view is too much :

The first step is to check which materialized view has the highest refresh time :

SELECT *

FROM (

SELECT OWNER,

MVIEW_NAME,

CONTAINER_NAME,

REFRESH_MODE,

REFRESH_METHOD,

LAST_REFRESH_TYPE,

STALENESS,

ROUND((LAST_REFRESH_END_TIME-LAST_REFRESH_DATE)*24*60,2) as REFRESH_TIME_MINS

FROM ALL_MVIEWS

WHERE LAST_REFRESH_TYPE IN ('FAST','COMPLETE')

)

ORDER BY REFRESH_TIME_MINS DESC;

OWNER MVIEW_NAME CONTAINER_NAME REFRESH_MODE REFRESH_METHOD LAST_REFRESH_TYPE STALENESS REFRESH_TIME_MINS

------- ------------------------------- ------------------------------- ------------- -------------- ----------------- --------------------------------

SCORE MV$SCORE_ST_SI_MESSAGE_HISTORY MV$SCORE_ST_SI_MESSAGE_HISTORY DEMAND FAST FAST FRESH 32.52

SCORE MV$SCORE_ST_SI_MESSAGE MV$SCORE_ST_SI_MESSAGE DEMAND FAST FAST FRESH 16.38

SCORE MV$SC1_MYHIST2_STOP MV$SC1_MYHIST2_STOP DEMAND FORCE COMPLETE NEEDS_COMPILE .03

SCORE MV$SC1_MYHIST2_START MV$SC1_MYHIST2_START DEMAND FORCE COMPLETE NEEDS_COMPILE .03

SCORE MV$SC1_RWQ_FG_TOPO MV$SC1_RWQ_FG_TOPO DEMAND FORCE COMPLETE NEEDS_COMPILE .02

All the refresh time comes from the mview : MV$SCORE_ST_SI_MESSAGE_HISTORY and MV$SCORE_ST_SI_MESSAGE.

Thanks to columns ALL_MVIEWS.LAST_REFRESH_DATE and ALL_MVIEWS.LAST_REFRESH_END_TIME, we got the sql statements and the executions plans related to the refresh operation :

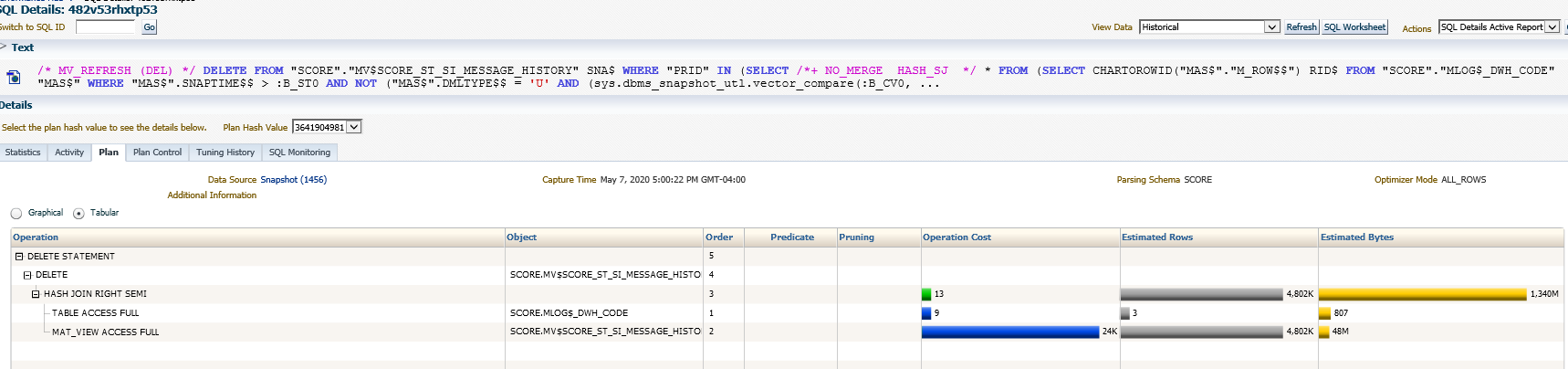

The first operation is a “Delete“:

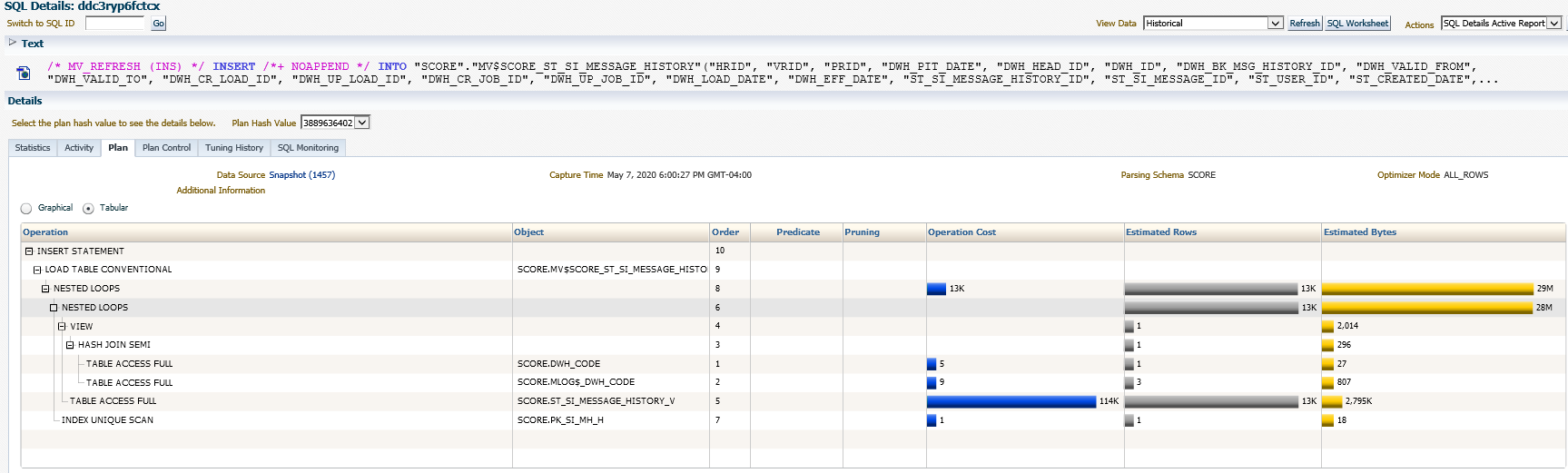

The second operation is an “Insert“:

Let’s extract the PL/SQL procedure doing the refresh used by the ETL tool :

dbms_mview.refresh('SCORE.'||l_mview||'','?',atomic_refresh=>FALSE);

--'?' = Force : If possible, a fast refresh is attempted, otherwise a complete refresh.

Being given that, here all questions which come to me :

- My materialized view can be fast-refreshed, so why it takes more than 48 mins to refresh ?

- With atomic_refresh set to false, oracle normally optimize refresh by using parallel DML and truncate DDL, so why a “Delete” operation is done instead a “Truncate” more faster ?

To answer to the first point, to be sure that my materialized view can be fast refresh, we can also use explain_mview procedure and check the capability_name called “REFRESH_FAST”:

SQL> truncate table mv_capabilities_table;

Table truncated.

SQL> exec dbms_mview.explain_mview('MV$SCORE_ST_SI_MESSAGE_HISTORY');

PL/SQL procedure successfully completed.

SQL> select capability_name,possible,related_text,msgtxt from mv_capabilities_table;

CAPABILITY_NAME POSSIBLE RELATED_TEXT MSGTXT

------------------------------ -------------------- ----------------------- ----------------------------------------------------------------------------

PCT N

REFRESH_COMPLETE Y

REFRESH_FAST Y

REWRITE Y

PCT_TABLE N ST_SI_MESSAGE_HISTORY_H relation is not a partitioned table

PCT_TABLE N ST_SI_MESSAGE_HISTORY_V relation is not a partitioned table

PCT_TABLE N DWH_CODE relation is not a partitioned table

REFRESH_FAST_AFTER_INSERT Y

REFRESH_FAST_AFTER_ONETAB_DML Y

REFRESH_FAST_AFTER_ANY_DML Y

REFRESH_FAST_PCT N PCT is not possible on any of the detail tables in the materialized view

REWRITE_FULL_TEXT_MATCH Y

REWRITE_PARTIAL_TEXT_MATCH Y

REWRITE_GENERAL Y

REWRITE_PCT N general rewrite is not possible or PCT is not possible on any of the detail tables

PCT_TABLE_REWRITE N ST_SI_MESSAGE_HISTORY_H relation is not a partitioned table

PCT_TABLE_REWRITE N ST_SI_MESSAGE_HISTORY_V relation is not a partitioned table

PCT_TABLE_REWRITE N DWH_CODE relation is not a partitioned table

18 rows selected.

Let’s try to force a complete refresh with atomic_refresh set to FALSE in order to check if the “Delete” operation is replaced by a “Truncate” operation:

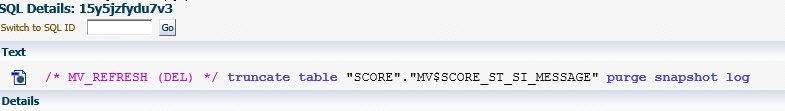

Now we have a “Truncate“:

--c = complete refresh

dbms_mview.refresh('SCORE.'||l_mview||'','C',atomic_refresh=>FALSE);

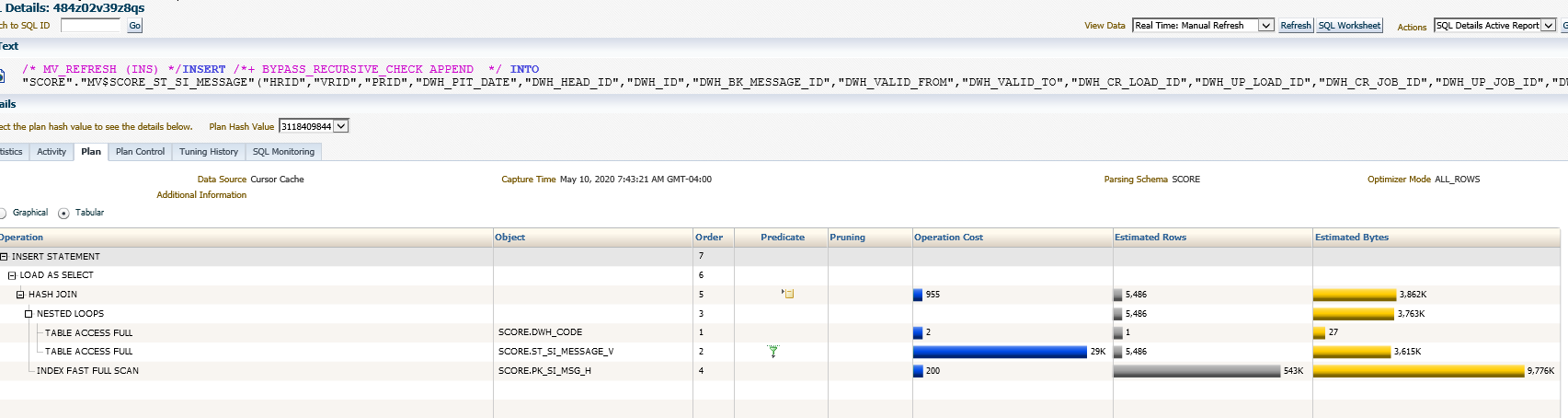

Plus an “Insert” :

Let’s check now the refresh time :

SELECT *

FROM ( SELECT OWNER,

MVIEW_NAME,

CONTAINER_NAME,

REFRESH_MODE,

LAST_REFRESH_TYPE,

STALENESS,

round((LAST_REFRESH_END_TIME-LAST_REFRESH_DATE)*24*60,2) as REFRESH_TIME_MINS

FROM ALL_MVIEWS

WHERE LAST_REFRESH_TYPE IN ('FAST','COMPLETE')

)

ORDER BY REFRESH_TIME_MINS DESC;

OWNER MVIEW_NAME CONTAINER_NAME REFRESH_MODE LAST_REFRESH_TYPE STALENESS REFRESH_TIME_MINS

------- -------------------------------- -------------------------------- ------------ ----------------- -------------------------------------

SCORE MV$SCORE_ST_SI_MESSAGE MV$SCORE_ST_SI_MESSAGE FAST COMPLETE FRESH 6.75

SCORE MV$SCORE_ST_SI_MESSAGE_HISTORY MV$SCORE_ST_SI_MESSAGE_HISTORY FAST COMPLETE FRESH 1

Conclusion (for my environment) :

- The “Complete” refresh (7.75 mins) is more faster than the “Fast” refresh (48.9 mins),

- The parameter “atomic_refresh=FALSE” works only with “complete” refresh, so “truncate” is only possible with “complete“.

- It’s not a surprise to have “Complete” more faster than “Fast” since the materialized views are truncated instead of being deleted.

Now, I want to understand why “Fast refresh” is very long (48.9 mins).

In order to be fast refreshed, materialized view requires materialized view logs storing the modifications propagated from the base tables to the container tables (regular table with same name as materialized view which stores the results set returned by the query).

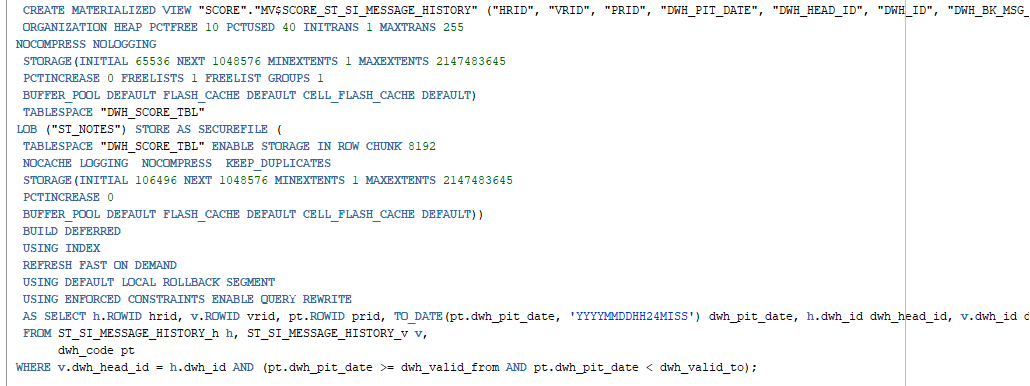

Let’s check the base tables used into the SQL statement loading the materialized view :

Be focus on the table names after the clause “FROM“:

- ST_SI_MESSAGE_HISTORY_H

- ST_SI_MESSAGE_HISTORY_V

- DWH_CODE

Let’s check the number of rows which exist on each tables sources :

SQL> SELECT 'DWH_CODE' as TABLE_NAME,NUM_ROWS FROM USER_TABLES WHERE TABLE_NAME = 'DWH_CODE' 2 UNION ALL 3 SELECT 'ST_SI_MESSAGE_HISTORY_H' as TABLE_NAME,NUM_ROWS FROM USER_TABLES WHERE TABLE_NAME = 'ST_SI_MESSAGE_HISTORY_H' 4 UNION ALL 5 SELECT 'ST_SI_MESSAGE_HISTORY_V' as TABLE_NAME,NUM_ROWS FROM USER_TABLES WHERE TABLE_NAME = 'ST_SI_MESSAGE_HISTORY_V'; TABLE_NAME NUM_ROWS ----------------------- ---------- DWH_CODE 1 ST_SI_MESSAGE_HISTORY_H 4801733 ST_SI_MESSAGE_HISTORY_V 5081578

To be fast refreshed, the MV$SCORE_ST_SI_MESSAGE_HISTORY materialized view requires materialized logs on the ST_SI_MESSAGE_HISTORY_H, ST_SI_MESSAGE_HISTORY_V and DWH_CODE tables:

SQL> SELECT LOG_OWNER,MASTER,LOG_TABLE

2 FROM all_mview_logs

3 WHERE MASTER IN ('DWH_CODE','ST_SI_MESSAGE_H','ST_SI_MESSAGE_V');

LOG_OWNER MASTER LOG_TABLE

----------- ------------------ ----------------------------

SCORE ST_SI_MESSAGE_V MLOG$_ST_SI_MESSAGE_V

SCORE ST_SI_MESSAGE_H MLOG$_ST_SI_MESSAGE_H

SCORE DWH_CODE MLOG$_DWH_CODE

As, the materialized view logs contains only the modifications during a fast refresh, let’s check the contents (number of rows modified coming from the base tables) just before to execute the fast-refresh :

SQL> SELECT 2 owner, 3 mview_name, 4 container_name, 5 refresh_mode, 6 last_refresh_type, 7 staleness 8 FROM 9 all_mviews 10 WHERE 11 last_refresh_type IN ( 12 'FAST', 13 'COMPLETE' 14 ) 15 ; OWNER MVIEW_NAME CONTAINER_NAME REFRESH_MODE LAST_REFRESH_TYPE STALENESS ------- ------------------------------ --------------------------------------------------------------------------- SCORE MV$SCORE_ST_SI_MESSAGE MV$SCORE_ST_SI_MESSAGE DEMAND COMPLETE NEEDS_COMPILE SCORE MV$SCORE_ST_SI_MESSAGE_HISTORY MV$SCORE_ST_SI_MESSAGE_HISTORY DEMAND COMPLETE NEEDS_COMPILE

STALENESS = NEEDS_COMPILE means the materialized view need to be refreshed because base tables have been modified. It’s normal since we have stopped the ETL process just before the execution of the refresh mview procedure in order to see the content of the mview logs.

The contents of materialized view logs are :

SQL> SELECT * FROM "SCORE"."MLOG$_DWH_CODE"; M_ROW$$ SNAPTIME$ DMLTYPE$$ OLD_NEW$$ CHANGE_VECTOR$$ XID$$ --------------------- --------- --------- --------- ---------------- ---------------- AAAbvUAAUAAABtjAAA 01-JAN-00 U U 02 1125921382632021 AAAbvUAAUAAABtjAAA 01-JAN-00 U N 02 1125921382632021 SQL> SELECT * FROM "SCORE"."MLOG$_ST_SI_MESSAGE_V"; no rows selected SQL> SELECT * FROM "SCORE"."MLOG$_ST_SI_MESSAGE_H";

no rows selected SQL>

I’m a little bit surprised because:

- Being given my refresh time, I expected to have a lot of modifications coming from the big tables : ST_SI_MESSAGE_V (5081578 rows) and ST_SI_MESSAGE_H (4801733 rows) instead of DWH_CODE (1 row).

After analyzing the ETL process, it appears that only this table (DWH_CODE) is modified every day with the sysdate. This table is a metadata table which contents only one row identifying the loading date.

If we check the SQL statement loading the materialized view, this table is used to populate the column DWH_PIT_DATE (see print screen above).

But since this table is joined with ST_SI_MESSAGE_H and ST_SI_MESSAGE_V, the oracle optimizer must do a full scan on the materialized view MV$SCORE_ST_SI_MESSAGE_HISTORY (more than 500K rows) to populate each row with exactly the same value:

SQL> select distinct dwh_pit_date from score.mv$score_st_si_message_history; DWH_PIT_DATE ------------ 09-MAY-20

There is no sense to have a column having always the same value, here we have definitely a materialized view design problem.Whatever the refresh mode using : “Complete” or “Fast”, we always scan all the materialized view logs to populate column DWH_PIT_DATE.

To solve this issue, let’s check the materialized view logs dependencies :

SQL> SELECT DISTINCT NAME

2 FROM ALL_DEPENDENCIES

3 WHERE TYPE = 'VIEW'

4 AND REFERENCED_OWNER = 'DWH_LOAD'

5 AND REFERENCED_NAME IN ('MV$SCORE_ST_SI_MESSAGE','MV$SCORE_ST_SI_MESSAGE_HISTORY')

6 ORDER BY NAME;

NAME

--------------------------------------------------------------------------------

V$LOAD_CC_DMSG

V$LOAD_CC_FMSG

V$LOAD_CC_MSG_ACK

V$LOAD_CC_MSG_BOOKEDIN_BY

V$LOAD_CC_MSG_PUSHEDBACK

V$LOAD_CC_MSG_SCENARIO

V$LOAD_CC_MSG_SOURCE

V$LOAD_CC_MSG_SRC

V$LOAD_CC_MSG_TRIAGED_BY

9 rows selected.

SQL>

In my environment, only this objects (oracle views) use the materialized views, so I can safely remove the column DWH_CODE.DWH_PIT_DATE (the column not the join with the table DWH_CODE) from the materialized views and move it to the dependent objects.

After this design modifications, let’s execute the refresh and check the refresh time :

SQL> SELECT *

2 FROM ( SELECT OWNER,

3 MVIEW_NAME,

4 CONTAINER_NAME,

5 REFRESH_MODE,

6 REFRESH_METHOD,

7 LAST_REFRESH_TYPE,

8 STALENESS,

9 ROUND((LAST_REFRESH_END_TIME-LAST_REFRESH_DATE)*24*60,2) as REFRESH_TIME_MINS

10 FROM ALL_MVIEWS

11 WHERE LAST_REFRESH_TYPE IN ('FAST','COMPLETE')

12 )

13 ORDER BY REFRESH_TIME_MINS DESC;

OWNER MVIEW_NAME CONTAINER_NAME REFRES REFRESH_ LAST_REF STALENESS REFRESH_TIME_MINS

--------- --------------------------------- ------------------------------------ ------ -------- -------- ------------------- ---------------------

SCORE MV$SCORE_ST_SI_MESSAGE MV$SCORE_ST_SI_MESSAGE DEMAND FAST COMPLETE FRESH 1.58

SCORE MV$SCORE_ST_SI_MESSAGE_HISTORY MV$SCORE_ST_SI_MESSAGE_HISTORY DEMAND FAST COMPLETE FRESH .28

The refresh time is faster (1.86 mins) than the last one (7.75 mins) and now oracle optimizer does not full scan the materialized view to populate each row with same value (DWH_CODE.DWH_PIT_DATE).

Conclusion :

- We have reduced the refresh time from 50mins to 1.86 mins.

- Fast Refresh is not always more faster than Complete Refresh, it depends of the SQL statement loading the view and the number of rows propagated from the base tables to the container tables within the materialized view logs.

- To decrease the refresh time, act only on the refresh option (Fast, Complete, Index,etc.) is not enough, we have to also analyze and modify the SQL statement loading the materialized view.

- If you have design problem, never be afraid to modify the SQL statement and even some part of your architecture (like here the dependent objects). Of course you have to know very well the impact on your application and on your ETL process.

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/12/oracle-square.png)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/09/SNA_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/STH_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2024/01/HME_web.jpg)