After having fun with my ACE friends at the previous day's ACE dinner and at the Oracle CloudWorld party (pictures might speak for themselves), it was time to get back to sessions and meetings for the last day in Las Vegas.

Not forgetting the great concert given by the Steve Miller Band.

Oracle Database In-Memory: Best Practices and Use Cases [LRN3515]

This session was presented by Andy Rivenes, Senior Principal Product Manager at Oracle, and Donna Cooksey, Solution Specialist, Cloud Business Group at Oracle.

They talked about the different use cases for Oracle Database In-Memory, highlighted how several key customers have used it to accelerate their analytic workloads, and discussed best practices for implementing and using it in our environments.

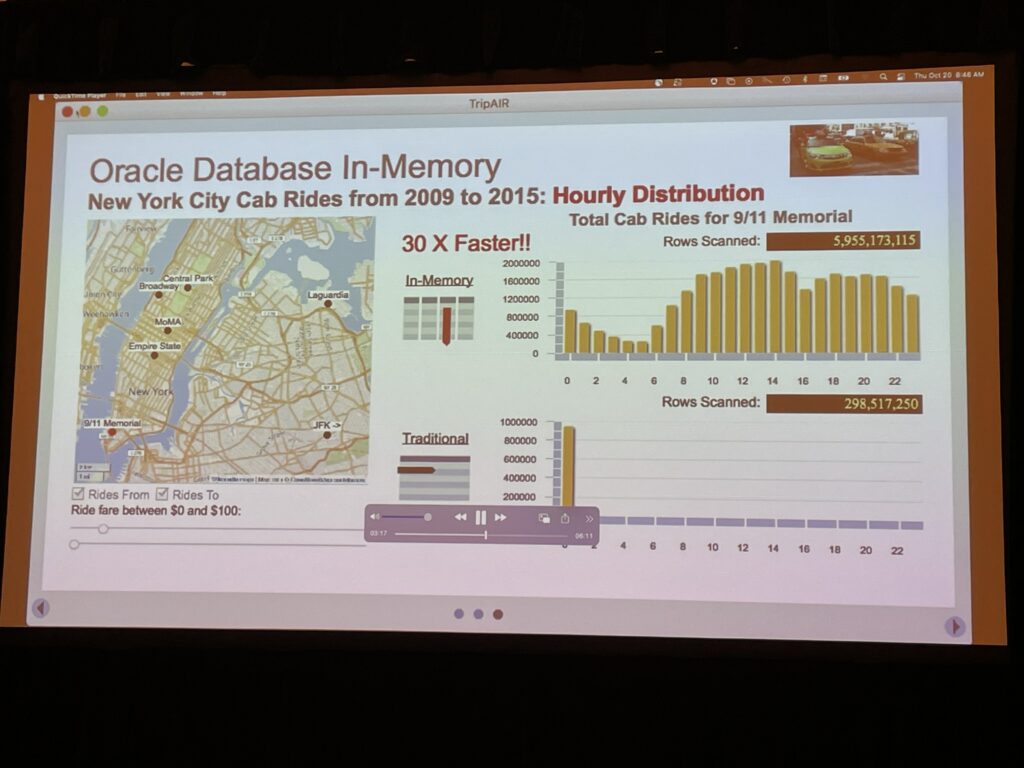

Analytics are critical, and we need real-time analytics to get immediate answers. Database In-Memory helps here because queries only consume CPU and memory, so they are not constrained by storage access to the data.

What Database In-Memory provides:

- 10x faster reporting

- Real-time analytics

- Improved efficiency, for example reduced CPU and I/O consumption for queries

Transaction processing versus analytic queries:

- Row format database (fast for OLTP, slower for analytics)

- Column format (fast for analytics, much slower for OLTP)

Database In-Memory is a column-format store.

We still have both row and column formats for the same table.
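To make the trade-off concrete, here is a toy Python model, not Oracle's implementation: the same hypothetical `sales` rows are kept in row format and copied into a column format. Counting the values each side reads is a deliberate simplification (a real row store reads whole blocks), but it shows why a one-column aggregate favours the column format while a single-row insert favours the row format.

```python
# Toy model of the dual format: not how Oracle stores data internally.
NAMES = ("order_id", "customer", "amount")

# Hypothetical "sales" table in row format: one tuple per row.
ROWS = [
    (1, "ACME", 120.0),
    (2, "Globex", 75.5),
    (3, "ACME", 300.0),
    (4, "Initech", 42.0),
]


def to_columns(rows, names):
    """Build the column-format copy: one list per column."""
    return {name: [row[i] for row in rows] for i, name in enumerate(names)}


def sum_amount_row_format(rows):
    """Analytic query on row format: whole rows are fetched, so every field counts as read."""
    values_read = sum(len(row) for row in rows)
    return sum(row[2] for row in rows), values_read


def sum_amount_column_format(columns):
    """Same query on column format: only the 'amount' column is scanned."""
    amounts = columns["amount"]
    return sum(amounts), len(amounts)


def insert_row_format(rows, row):
    """OLTP insert on row format: one write of the complete row."""
    rows.append(row)
    return 1  # structures written


def insert_column_format(columns, names, row):
    """OLTP insert on column format: one write per column."""
    for name, value in zip(names, row):
        columns[name].append(value)
    return len(names)  # structures written


columns = to_columns(ROWS, NAMES)
print(sum_amount_row_format(ROWS))        # (537.5, 12)
print(sum_amount_column_format(columns))  # (537.5, 4)
```

The analytic query reads 4 values instead of 12, while an insert costs 3 writes instead of 1: the same asymmetry as in the list above.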

How to implement Oracle Database In-Memory? Easy:

- Set the inmemory_size parameter, which configures the memory capacity of the In-Memory column store (alter system)

- Configure tables or partitions to be in-memory (alter table|partition …inmemory;)

- Later, drop analytic indexes to speed up OLTP
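The three steps above can be sketched as a small Python helper that only builds the SQL text; it does not connect to a database. The 4G size, the `sh.sales` and `sh.costs` tables, the `costs_q1` partition and the `sales_amount_idx` index are hypothetical placeholders. `SCOPE=SPFILE` is used because, as far as I know, enabling the column store from a zero size requires an instance restart; check the documentation of your release before running anything.

```python
import re

# Unquoted Oracle identifiers, optionally schema-qualified (e.g. SH.SALES).
_IDENTIFIER = re.compile(r"[A-Za-z][A-Za-z0-9_$#]*")
_SIZE = re.compile(r"\d+[KMGT]")


def _ident(name):
    """Validate a simple identifier and return it upper-cased."""
    if not all(_IDENTIFIER.fullmatch(part) for part in name.split(".")):
        raise ValueError(f"unsupported identifier: {name!r}")
    return name.upper()


def inmemory_setup_script(size, tables=(), partitions=(), drop_indexes=()):
    """Return the DDL for the steps listed above, in execution order."""
    size = size.upper()
    if not _SIZE.fullmatch(size):
        raise ValueError(f"unsupported size: {size!r}")
    # Step 1: give the In-Memory column store its memory capacity.
    statements = [f"ALTER SYSTEM SET INMEMORY_SIZE = {size} SCOPE=SPFILE;"]
    # Step 2: mark whole tables or individual partitions as in-memory.
    statements += [f"ALTER TABLE {_ident(t)} INMEMORY;" for t in tables]
    statements += [
        f"ALTER TABLE {_ident(t)} MODIFY PARTITION {_ident(p)} INMEMORY;"
        for t, p in partitions
    ]
    # Step 3 (later, once the analytic indexes are confirmed unused).
    statements += [f"DROP INDEX {_ident(i)};" for i in drop_indexes]
    return statements


for stmt in inmemory_setup_script(
    "4G",
    tables=["sh.sales"],
    partitions=[("sh.costs", "costs_q1")],
    drop_indexes=["sh.sales_amount_idx"],
):
    print(stmt)
```

Keeping the index drops last mirrors the "later" in the list: they should only go once the workload has been checked against the column store.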

Database In-Memory is an option of Oracle EE: it must be paid for on-premises, but it is already included in the Cloud, so free of charge there.

Oracle In-Memory Advisor:

- The In-Memory Advisor is free of charge, but the Oracle Diagnostics Pack and Tuning Pack must be licensed.

- It analyzes the existing database workload via the AWR and ASH repositories

- It provides lists of objects that would benefit most from being populated into the IM column store

What about In-Memory and Exadata?

- Exadata further accelerates Database In-Memory analytics using flash cache

- On Exadata, a single query can span storage tiers, achieving the speed of DRAM, the I/O of flash and the cost of disk

- Exadata Smart Scan uniquely improves performance: analyze data faster using fewer OCPUs

- Lowers costs by using storage cores for CPU-intensive processing, reducing OCPU spend: it uses 20-30% less OCPU and gets answers faster (less data transfer between the database server and storage)

- Increases the ROI of Active Data Guard standby databases: run real-time reporting on standby databases using the same in-memory tables as the primary database, or different ones

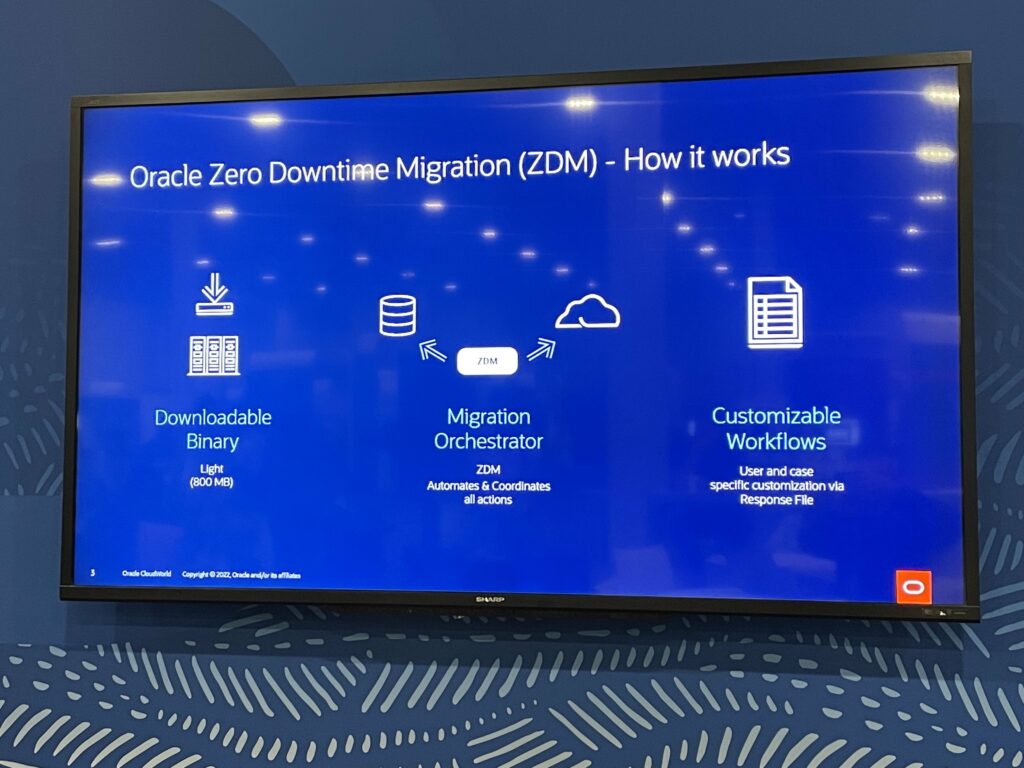

Migrate with Ease—Zero Downtime Migration to Oracle Autonomous Database [LIT4034]

I had the opportunity to attend a 20-minute session by Ludovico Caldara, Senior Principal Product Manager at Oracle.

Ludovico presented the Oracle Zero Downtime Migration (ZDM) tool, Oracle's premier solution for simplified and automated database migration. It results in zero to negligible downtime when migrating a production system to or between any Oracle-owned infrastructure.

This tool is particularly beneficial when migrating databases from on-premises to the cloud.

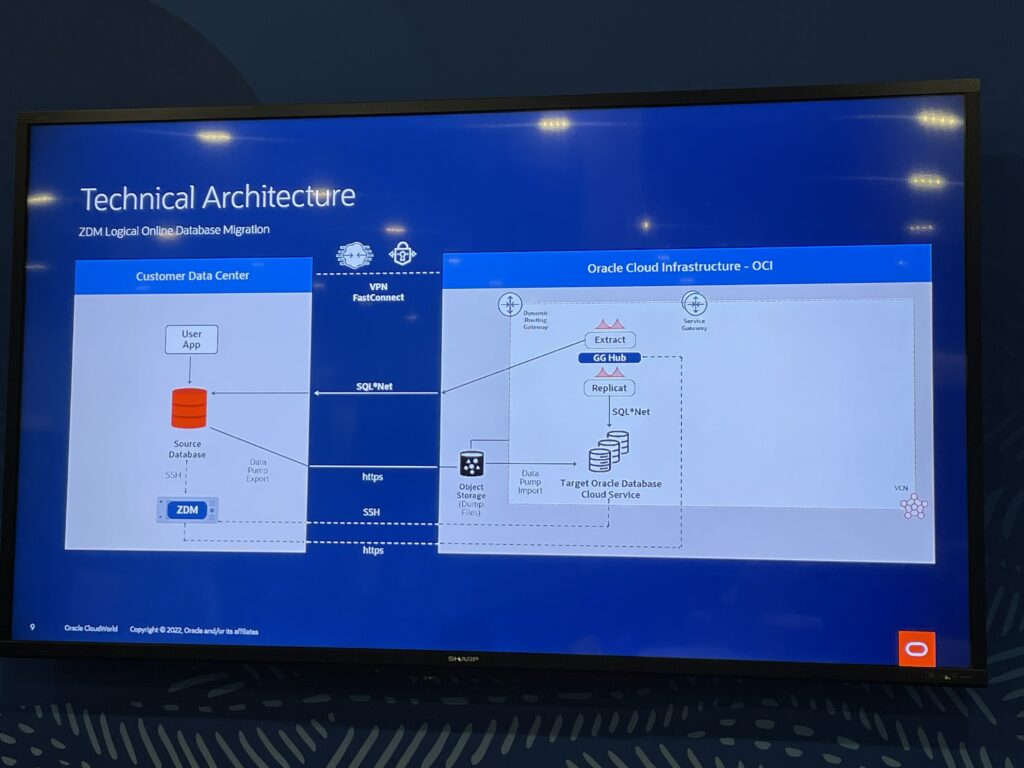

As we can see in the picture, the migration orchestrator connects to the source and destination databases. It is important to mention that the destination needs to have Grid Infrastructure.

The software is simple and free, and it allows end-to-end automation. It works with SE2 and EE, cloud to cloud and on-premises to cloud, for all versions from 11.2.0.4 to 21c. When the destination database is an Autonomous Database in the cloud, GoldenGate comes free for 6 months.

Here is a picture of the technical architecture:

With a physical migration, the source and destination platforms must be the same. When migrating to Autonomous Database, we have no visibility of the hardware and cannot touch it, so a physical migration is not possible: we need to use a logical migration.

ZDM logical online database migration scenario:

- Download and configure ZDM (a GoldenGate Hub needs to be provisioned as well)

- ZDM starts the database migration

- ZDM configures an OGG (Oracle GoldenGate) Extract microservice

- ZDM starts a Data Pump export job

- ZDM starts a Data Pump import job

- ZDM configures an OGG Replicat microservice

- ZDM monitors OGG replication

- ZDM switches over (this is where the short downtime occurs)

- ZDM validates the migration, cleans up all required connectivity and finalizes the migration process
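The ordering of this scenario is the interesting part: Extract is configured before the Data Pump export so that, as I understand the usual GoldenGate initial-load pattern, changes made during export and import are captured and later applied by Replicat, and only the switchover requires downtime. The Python sketch below models just that ordering; the `Phase` names and the `handlers` mapping are hypothetical, and this is not how ZDM itself is invoked (ZDM is driven from its own CLI).

```python
from enum import Enum


class Phase(Enum):
    # Definition order is the execution order of the scenario above.
    START = "ZDM starts the database migration"
    CONFIGURE_EXTRACT = "configure the OGG Extract microservice"
    EXPORT = "Data Pump export job"
    IMPORT = "Data Pump import job"
    CONFIGURE_REPLICAT = "configure the OGG Replicat microservice"
    MONITOR_REPLICATION = "monitor OGG replication"
    SWITCHOVER = "switch over to the target"
    FINALIZE = "validate, clean up and finalize"


# Only the switchover needs the application to stop writing to the source.
DOWNTIME_PHASES = frozenset({Phase.SWITCHOVER})


def run_logical_online_migration(handlers, goldengate_hub_ready):
    """Run each phase's handler (if any) in order; return (name, downtime) pairs."""
    if not goldengate_hub_ready:
        raise RuntimeError("provision the GoldenGate Hub before starting")
    log = []
    for phase in Phase:  # Enum iteration follows definition order
        handlers.get(phase, lambda: None)()
        log.append((phase.name, phase in DOWNTIME_PHASES))
    return log


for name, downtime in run_logical_online_migration({}, goldengate_hub_ready=True):
    print(f"{name:<20} downtime={downtime}")
```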

Use the following website, http://www.oracle.com/goto/zdm, to get:

- Downloads

- Documentation

- Guides

- LiveLabs

Conclusion: I will have to test this tool in the cloud. 😉

The Least-Known Facts About Oracle Data Guard and Oracle Active Data Guard [LIT4029]

I attended another very interesting 20-minute session by Ludovico about Data Guard features that are not well known by customers.

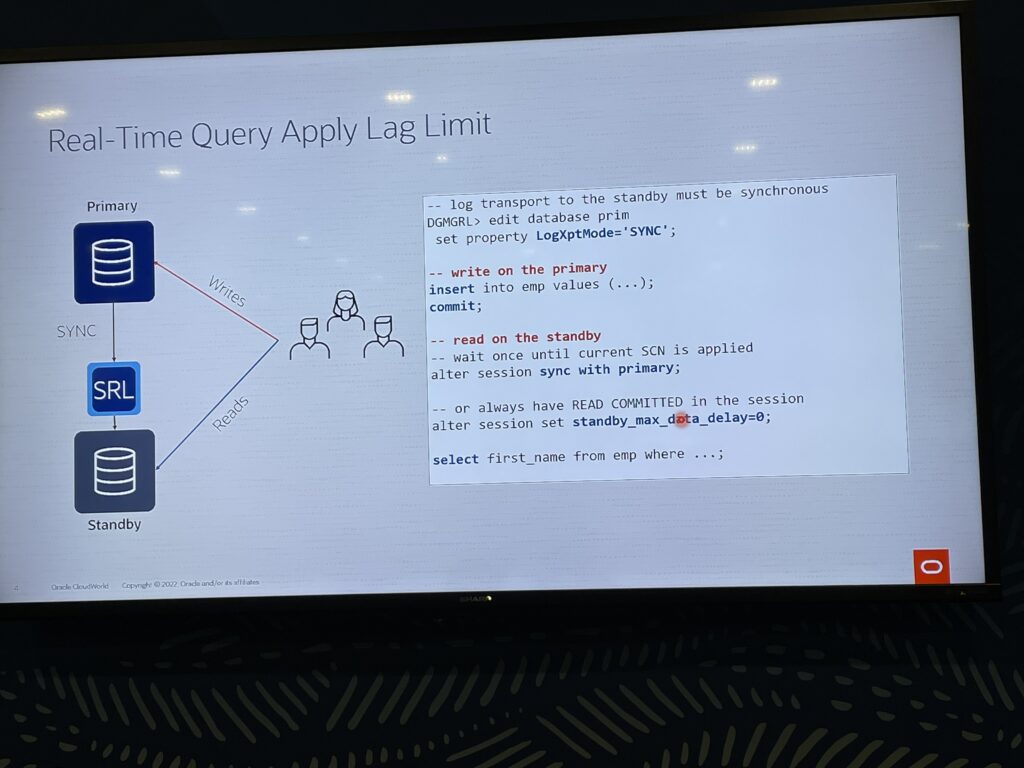

Real-time query apply lag limit with Active Data Guard

The transport mode needs to be SYNC: the standby waits for the transaction to be committed, to avoid consistency issues.

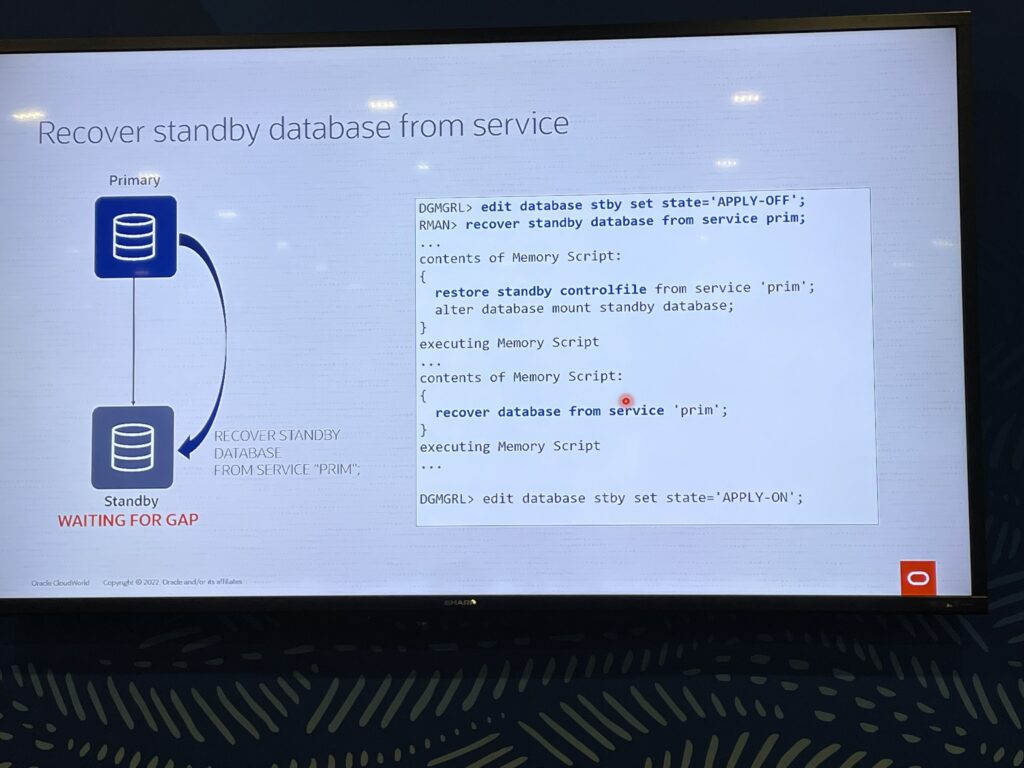
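A toy Python model of the rule, under stated assumptions: as far as I know the limit is set per session on the Active Data Guard standby with the `STANDBY_MAX_DATA_DELAY` parameter, and a query fails instead of returning data that lags by more than the limit. The sketch enforces the SYNC requirement for a zero limit, the strictest case; the function and its arguments are hypothetical.

```python
from typing import Optional


def query_allowed(apply_lag_s: float, max_data_delay_s: Optional[float],
                  transport_mode: str) -> bool:
    """Model whether a query on the standby may run given the apply lag limit.

    max_data_delay_s=None models "no limit" (stale results are accepted).
    """
    if max_data_delay_s is None:
        return True
    if max_data_delay_s == 0 and transport_mode.upper() != "SYNC":
        # Zero lag tolerance is only achievable with synchronous transport.
        raise ValueError("a zero apply lag limit requires SYNC redo transport")
    # Over the limit: the real database raises an error instead of answering.
    return apply_lag_s <= max_data_delay_s


print(query_allowed(5, 10, "ASYNC"))   # True
print(query_allowed(15, 10, "ASYNC"))  # False
print(query_allowed(0, 0, "SYNC"))     # True
```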

19c recover standby database from service

When the standby is out of sync, it needs to resolve the gap. But if the gap is huge, before 19c we had to roll forward manually with an SCN-based incremental backup. Since 19c, the recover standby command does the job automatically using incremental backups.

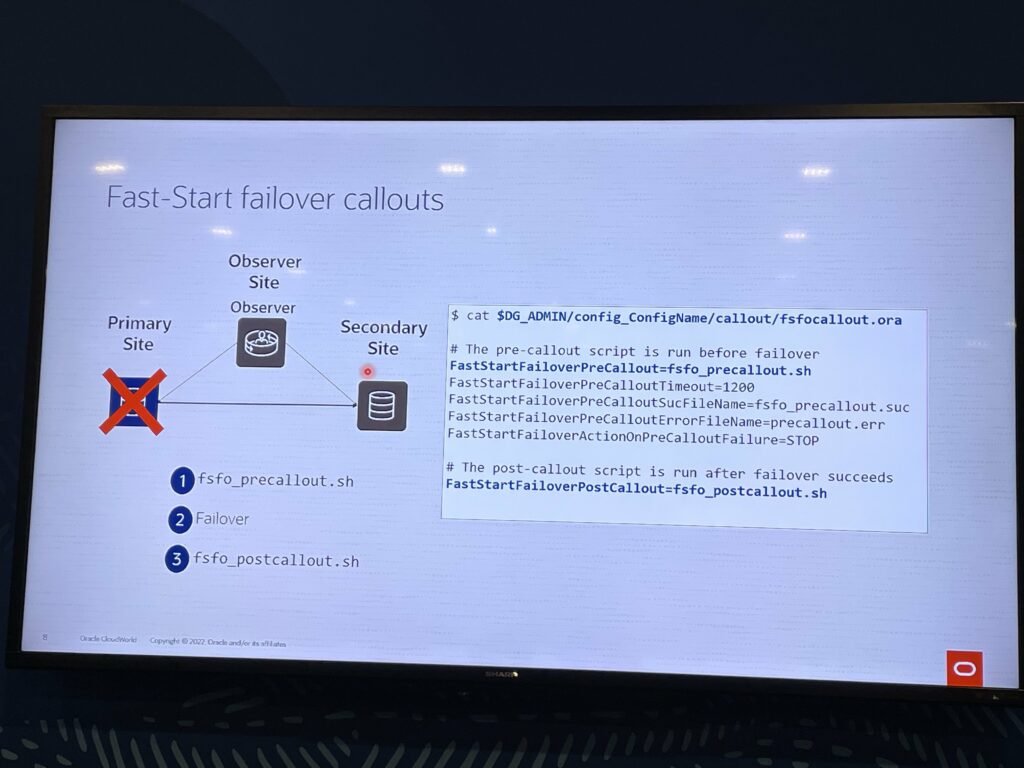
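The decision described above can be sketched in Python. I model a "huge" gap as one where the missing archived logs are no longer available, which is an assumption of mine; `primary_tns` is a hypothetical service name, and the pre-19c steps are a summary of the manual roll-forward, not a complete runbook.

```python
from typing import List, Tuple


def gap_resolution_plan(version: Tuple[int, ...], missing_logs_available: bool,
                        primary_service: str = "primary_tns") -> List[str]:
    """Return the steps to close a standby gap (a summary, not a runbook)."""
    if missing_logs_available:
        # Small gap: redo transport fetches and applies the missing logs itself.
        return ["let redo transport and apply resolve the gap"]
    if version >= (19,):
        # 19c and later, per the session: a single RMAN command rolls forward.
        return [f"RMAN> RECOVER STANDBY DATABASE FROM SERVICE {primary_service};"]
    # Before 19c: manual roll-forward from the standby's current SCN.
    return [
        "standby: SELECT CURRENT_SCN FROM V$DATABASE;",
        "primary: RMAN> BACKUP INCREMENTAL FROM SCN <scn> DATABASE;",
        "standby: catalog the backup, then RECOVER DATABASE NOREDO",
        "standby: refresh the standby control file and restart redo apply",
    ]


for step in gap_resolution_plan((12, 2), missing_logs_available=False):
    print(step)
```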

21c fast-start failover callouts

Possible hooks (callouts) to be executed when a failover occurs.

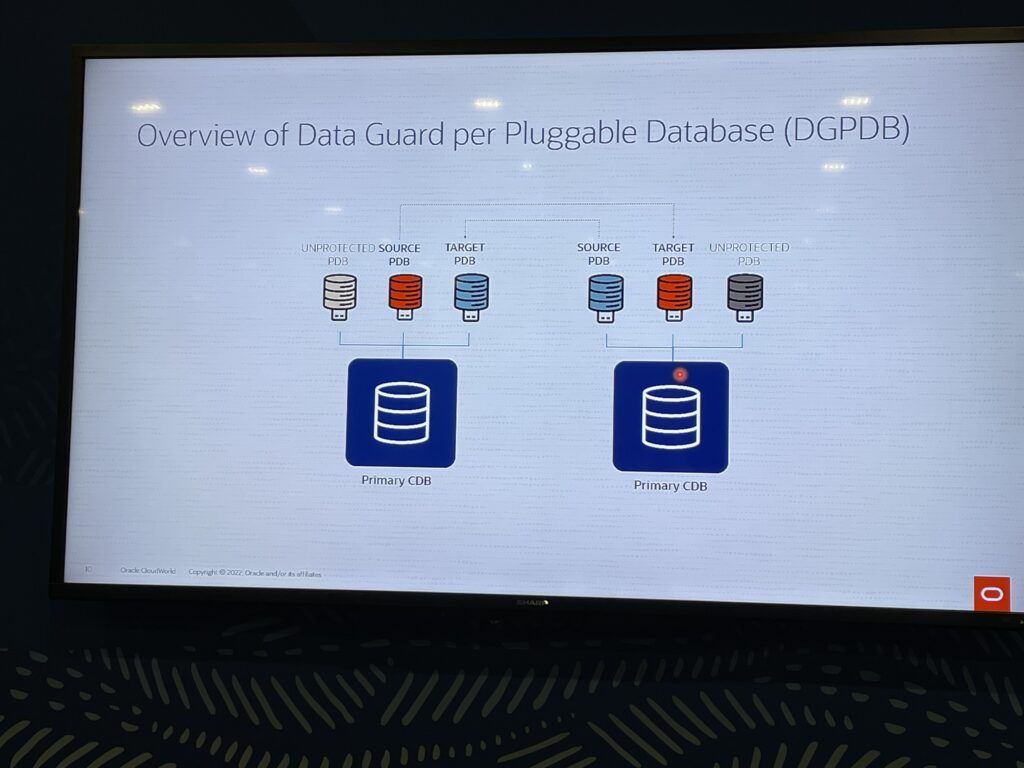

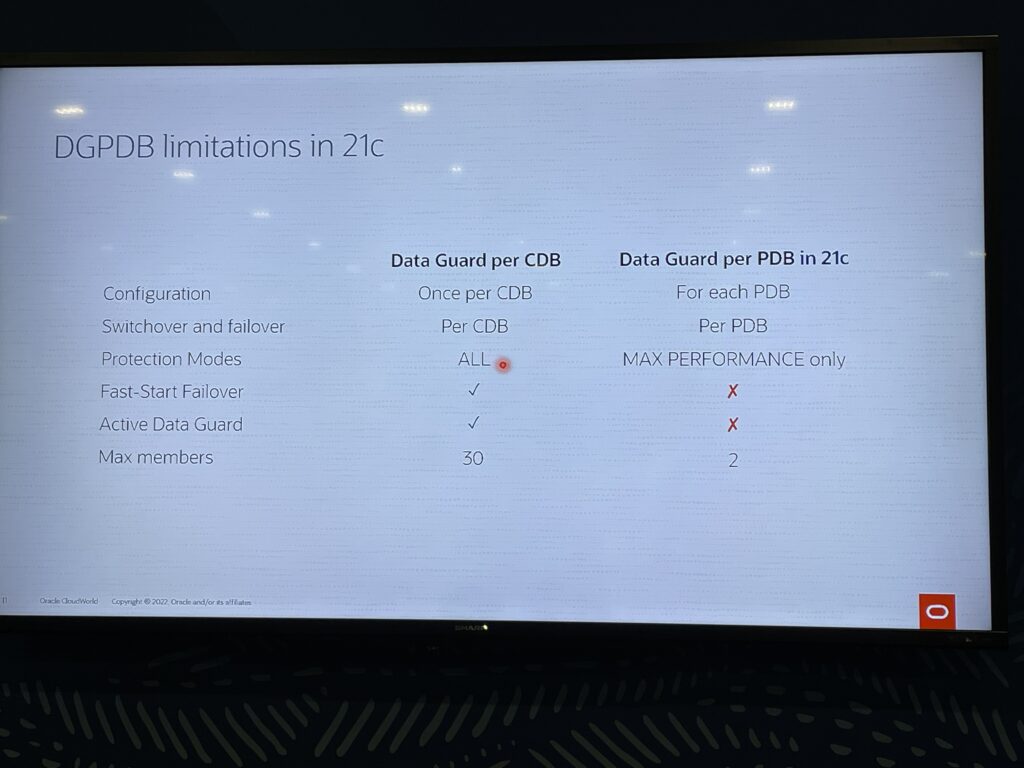
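The callout idea is the classic pre/post hook pattern. The Python sketch below only illustrates that pattern; it is not the broker's configuration format, and the hook names (`stop_batch_jobs`, `update_dns_alias`) are hypothetical examples of what a site might run around a failover. In this sketch a failing post-hook is recorded rather than raised, since the failover has already happened by then.

```python
from typing import Callable, Iterable, List

Hook = Callable[[], None]


def failover_with_callouts(failover: Hook, pre: Iterable[Hook] = (),
                           post: Iterable[Hook] = ()) -> List[str]:
    """Run pre-hooks, the failover itself, then post-hooks; return an event log."""
    events = []
    for hook in pre:
        hook()  # an exception here stops before the failover is attempted
        events.append(f"pre:{hook.__name__}")
    failover()
    events.append("failover")
    for hook in post:
        try:
            hook()
            events.append(f"post:{hook.__name__}")
        except Exception as exc:  # the role change cannot be undone, just report
            events.append(f"post-failed:{hook.__name__}:{exc}")
    return events


def stop_batch_jobs():   # hypothetical pre-failover action
    pass


def update_dns_alias():  # hypothetical post-failover action that fails
    raise RuntimeError("DNS API unreachable")


print(failover_with_callouts(lambda: None, pre=[stop_batch_jobs], post=[update_dns_alias]))
# ['pre:stop_batch_jobs', 'failover', 'post-failed:update_dns_alias:DNS API unreachable']
```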

21.7 Oracle Data Guard per Pluggable Database

Data Guard transport is no longer done at the CDB level but at the PDB level. This means that switchover/failover is possible at the PDB level.

A few requirements are still missing and will be integrated in the next release:

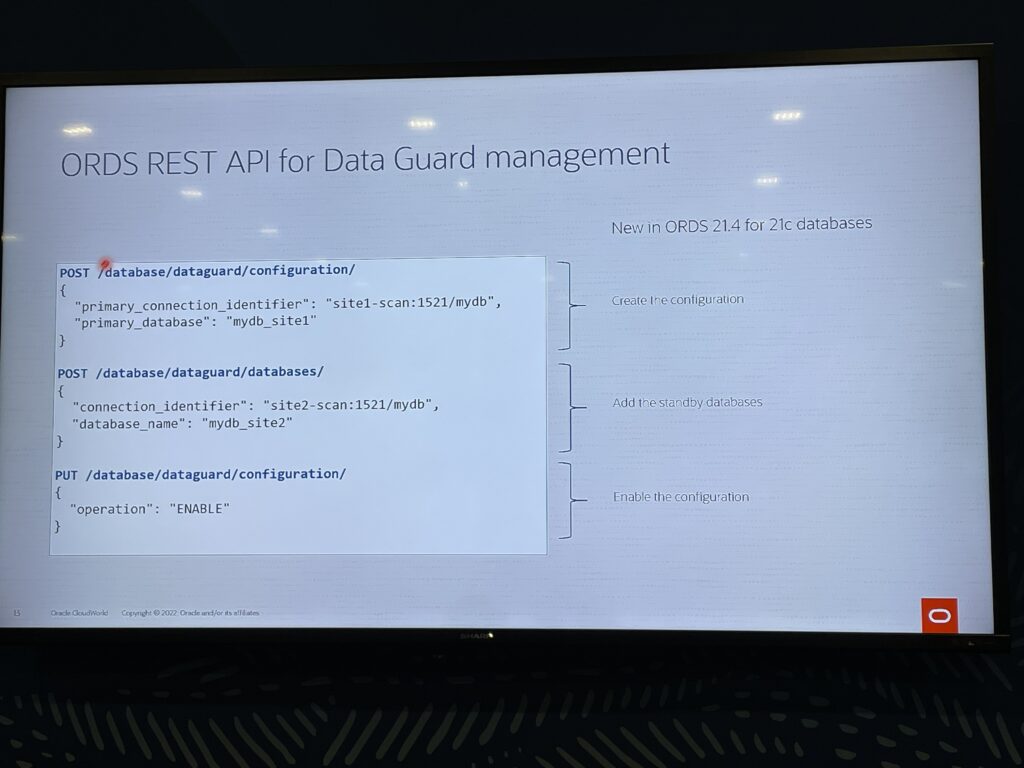
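A minimal Python model of what "per PDB" changes, assuming two hypothetical container databases `CDB1` and `CDB2` that each host both PDBs: the primary/standby role becomes a property of each PDB, so a switchover of one PDB leaves the others untouched. Names and data structure are illustrative only.

```python
from typing import Dict, Tuple

# pdb name -> (CDB holding the primary PDB, CDB holding the standby PDB)
Roles = Dict[str, Tuple[str, str]]


def pdb_switchover(roles: Roles, pdb: str) -> Roles:
    """Swap primary and standby for one PDB only; other PDBs keep their roles."""
    primary_cdb, standby_cdb = roles[pdb]
    updated = dict(roles)
    updated[pdb] = (standby_cdb, primary_cdb)
    return updated


roles = {"SALES": ("CDB1", "CDB2"), "HR": ("CDB1", "CDB2")}
after = pdb_switchover(roles, "SALES")
print(after)  # {'SALES': ('CDB2', 'CDB1'), 'HR': ('CDB1', 'CDB2')}
```

After the switchover each CDB is primary for one PDB and standby for the other, which is not possible when roles are managed at the CDB level.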

21c ORDS REST API for DG management

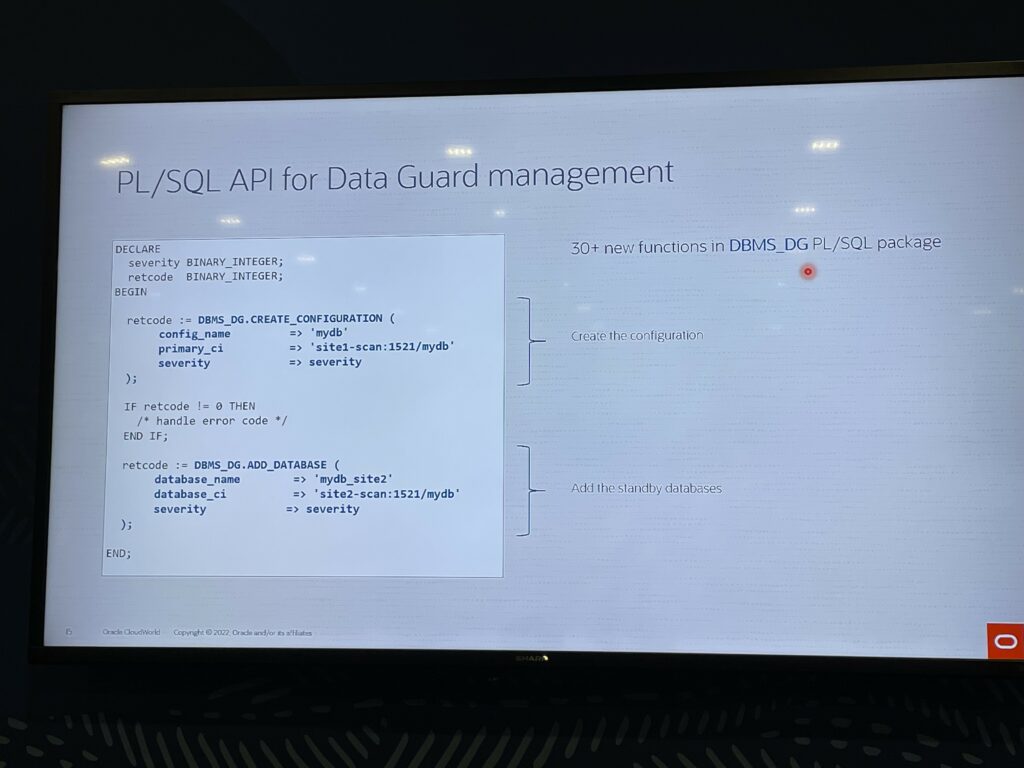

23c PL/SQL API for DG Management

Use case: create a DG configuration programmatically.

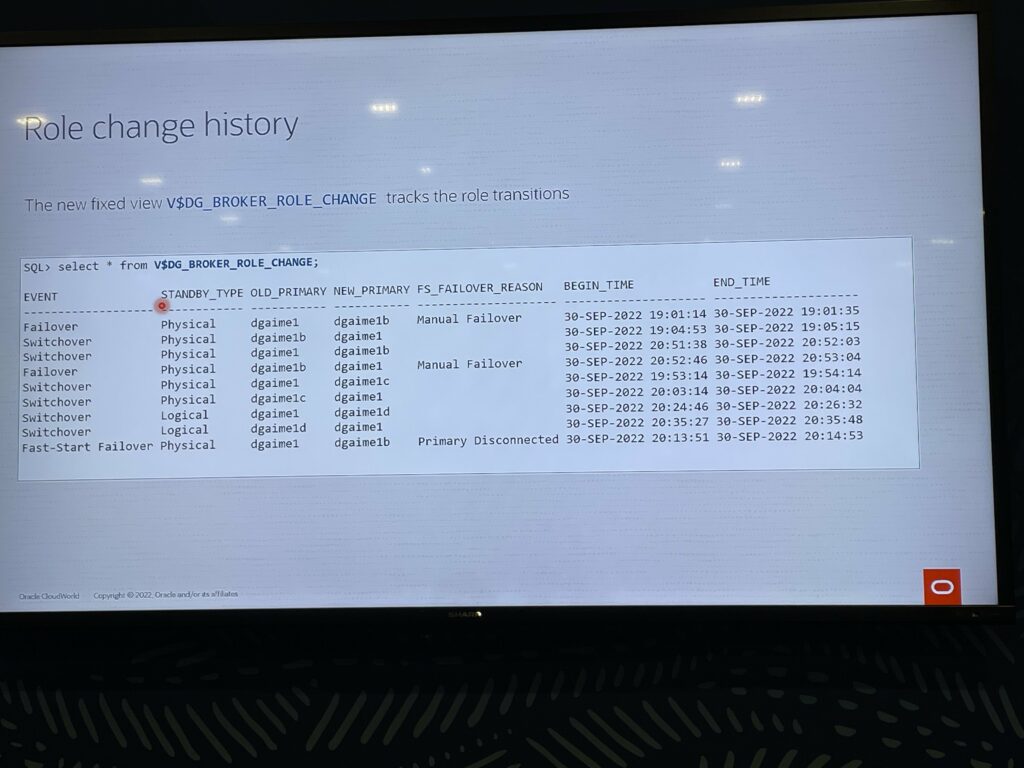

23c role change history

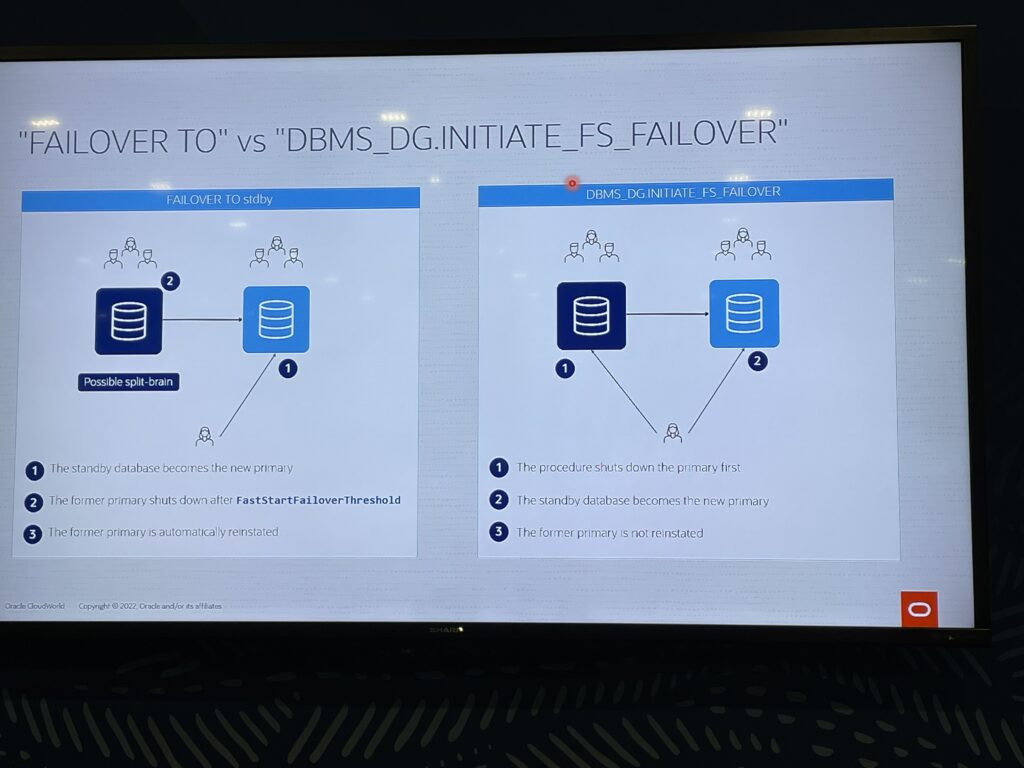

Difference between manual failover (failover to ) vs dbms_dg.initiate_fs_failover

Manual failover: we need to make sure the primary is no longer available, to avoid split brain.

dbms_dg: meant to be called directly from the application.

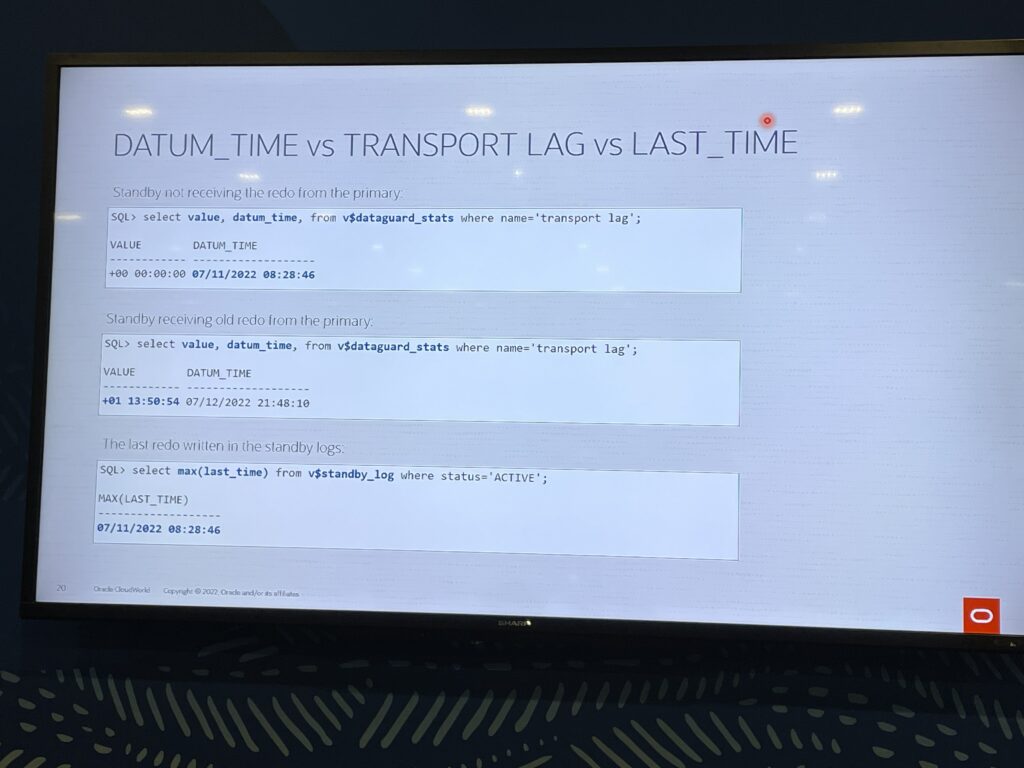
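From the application side, the call could look like the Python sketch below. It assumes a python-oracledb connection to the primary database (`cursor()` and `Cursor.callfunc()` are part of that driver) and that fast-start failover is enabled with an observer running. As far as I know, `DBMS_DG.INITIATE_FS_FAILOVER` takes a condition string and returns an ORA- error number, 0 meaning the request was accepted; the condition text is a hypothetical example.

```python
def request_fast_start_failover(connection, condition: str) -> int:
    """Ask the broker to perform a fast-start failover from application code.

    `connection` is assumed to be a python-oracledb connection to the primary.
    Returns the function's integer status (assumed: 0 = request accepted).
    """
    cursor = connection.cursor()
    try:
        # PL/SQL function call: DBMS_DG.INITIATE_FS_FAILOVER(<condition string>)
        return cursor.callfunc("DBMS_DG.INITIATE_FS_FAILOVER", int, [condition])
    finally:
        cursor.close()


# Usage (needs a real database and an enabled fast-start failover configuration):
# import oracledb
# conn = oracledb.connect(user=..., password=..., dsn=...)
# status = request_fast_start_failover(conn, "application detected a fatal storage error")
# if status != 0:
#     print(f"failover request refused: ORA-{status:05d}")
```

Unlike a manual failover, the decision stays with the broker and the observer, which is what protects against split brain.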

datum_time vs transport lag vs last_time

datum_time: when the last change vector was received from the primary; it stops advancing after a disconnection

If the connection to the primary exists but older redo logs are missing, we have a transport lag.

last_time: the last redo written to the standby logs

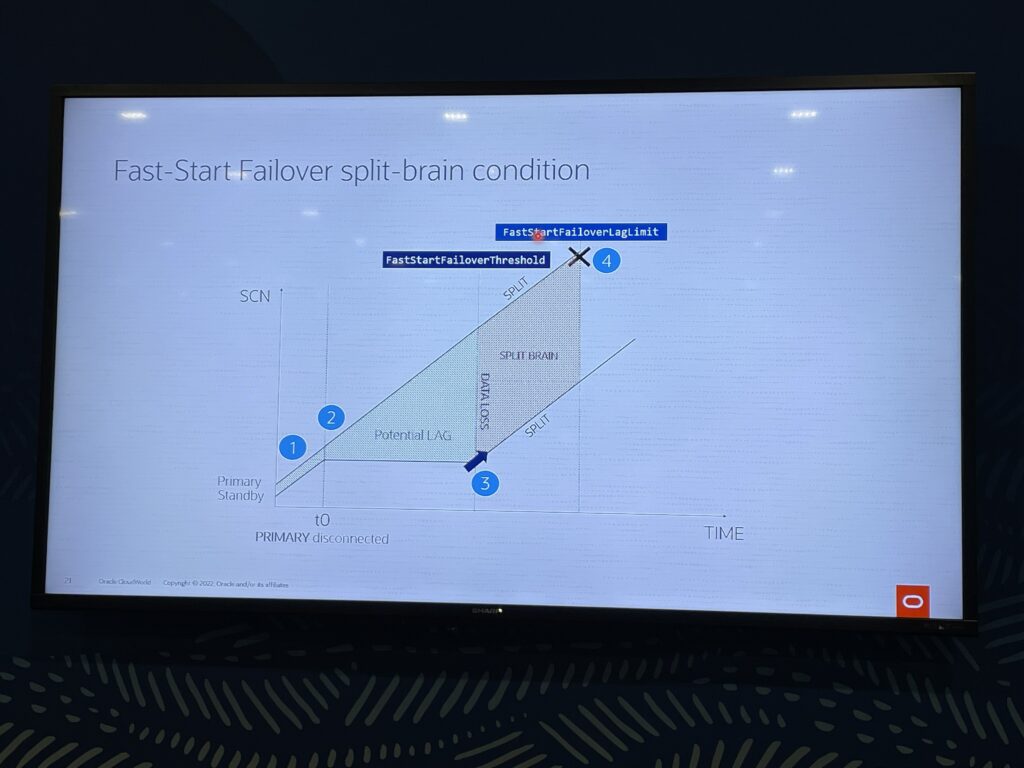
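A practical consequence: a transport lag value is only as fresh as its datum_time. The Python sketch below, assuming the `TIME_COMPUTED` and `DATUM_TIME` values of `V$DATAGUARD_STATS` have already been fetched and parsed into `datetime` objects, flags a reading as stale when no data has arrived from the primary for longer than a threshold; the 30-second threshold is a hypothetical choice, not an Oracle default.

```python
from datetime import datetime, timedelta


def lag_reading_is_stale(time_computed: datetime, datum_time: datetime,
                         max_silence: timedelta = timedelta(seconds=30)) -> bool:
    """True when datum_time lags time_computed by more than max_silence.

    A datum_time that stops advancing means the standby is no longer
    receiving data from the primary, so the reported lag cannot be trusted.
    """
    return time_computed - datum_time > max_silence


now = datetime(2024, 1, 1, 10, 0, 0)
print(lag_reading_is_stale(now, now - timedelta(seconds=2)))   # False
print(lag_reading_is_stale(now, now - timedelta(minutes=10)))  # True
```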

Fast-Start Failover split-brain condition

FastStartFailoverLagLimit should always be lower than FastStartFailoverThreshold to avoid split brain.
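This rule is easy to check automatically, for example in a configuration audit script. A minimal Python sketch, assuming the two broker property values (in seconds) have already been read, e.g. from DGMGRL output; the function names and the sample values are hypothetical.

```python
def fsfo_split_brain_risk(lag_limit_s: int, threshold_s: int) -> bool:
    """True when FastStartFailoverLagLimit is not lower than FastStartFailoverThreshold."""
    return lag_limit_s >= threshold_s


def audit_fsfo(properties: dict) -> list:
    """Return human-readable warnings for a dict of broker property values."""
    lag_limit = properties["FastStartFailoverLagLimit"]
    threshold = properties["FastStartFailoverThreshold"]
    if fsfo_split_brain_risk(lag_limit, threshold):
        return [f"FastStartFailoverLagLimit ({lag_limit}s) should be lower than "
                f"FastStartFailoverThreshold ({threshold}s) to avoid split brain"]
    return []


print(audit_fsfo({"FastStartFailoverLagLimit": 30, "FastStartFailoverThreshold": 30}))
print(audit_fsfo({"FastStartFailoverLagLimit": 20, "FastStartFailoverThreshold": 30}))  # []
```

Note that equal values are flagged too, since the rule asks for the lag limit to be strictly lower.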

Data Guard best practices are available on Oracle Help Center.

Not forgetting the booth!

I was also pleased to take one hour today to walk around the partner booths. I had good discussions with Nutanix about their NDB product (formerly ERA) and with the Quest team. By the way, Quest really has nice products, like TOAD, Foglight, or SharePlex, which can replicate databases in mixed database environments.

ODA Customer Advisory Board

I had the great opportunity to be invited to an ODA CAB meeting with other customers. We addressed many points and discussed what we find great about the ODA, the top features, the missing features, the challenges we face and our expectations. We also attended a presentation of the next ODA features that development is working on. Of course, I'm not authorised to say more here, but I can tell you that some great improvements are coming, like improvements to patching, multiple databases in a DB System, being able to perform some out-of-the-box OS patching,… But I didn't say anything, right? 😉

I would really like to thank Carlos Ortiz, Sr. Principal Product Manager at Oracle, Sanjay Singh, Vice President RACPack MAA, Product Development at Oracle, and Tapan Samaddar, Product Management Director at Oracle, for organizing this meeting and making this collaboration possible.

Meeting with Stefan Diedericks, Senior Director, Partner Development Center of Excellence

It was a real pleasure to close these great 4 days at Oracle CloudWorld by meeting Stefan. We had some great discussions about Oracle Support efficiency for ODA, and I could share my latest experience with an ODA SR. We also discussed how difficult it can be for a company to get the needed expertise every year. Stefan really understood our problem and was willing to help.
