Having the required infrastructure ready now it is time to have a look at the AWS Schema Conversion Tool (SCT). The goal of this tool is to convert a source schema (Oracle in our case) to a target schema (AWS Aurora in our case). From the description this should also migrate stored functions and procedures and we will see later on how well that works. Automatically converting Oracle’s PL/SQL to PostgreSQL’s PL/pgSQL quickly becomes tricky and often there is no way around re-implementing a major part of the business logic in the database. There is EDB Postgres Advanced Server which comes with Oracle compatibility and greatly reduces migration time but this is not the topic of this post.

Quickly coming back to the basic infrastructure, this is what we have now:

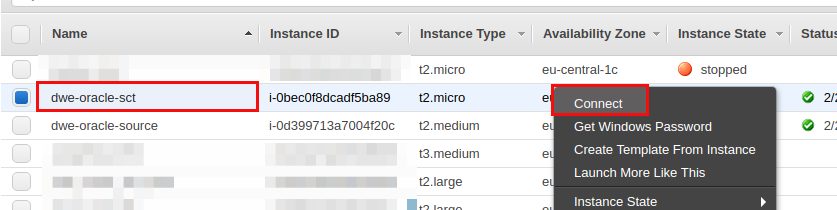

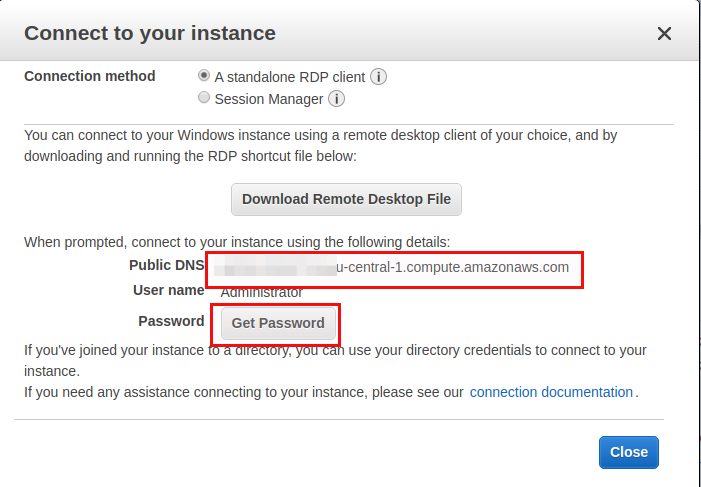

We’ll use the Windows host to install and configure AWS SCT and then convert the Oracle source schema to the Aurora PostgreSQL target schema. To connect to the Windows host you can right-click the Windows instance to get the required information:

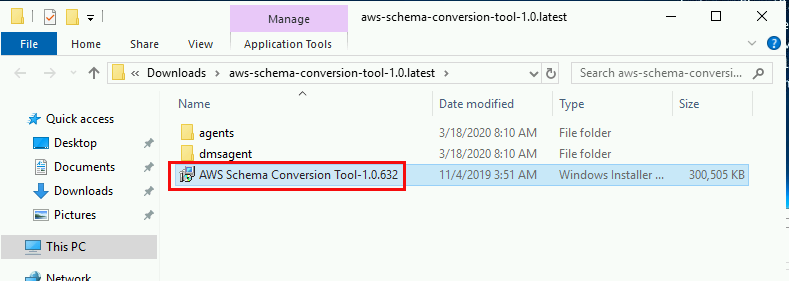

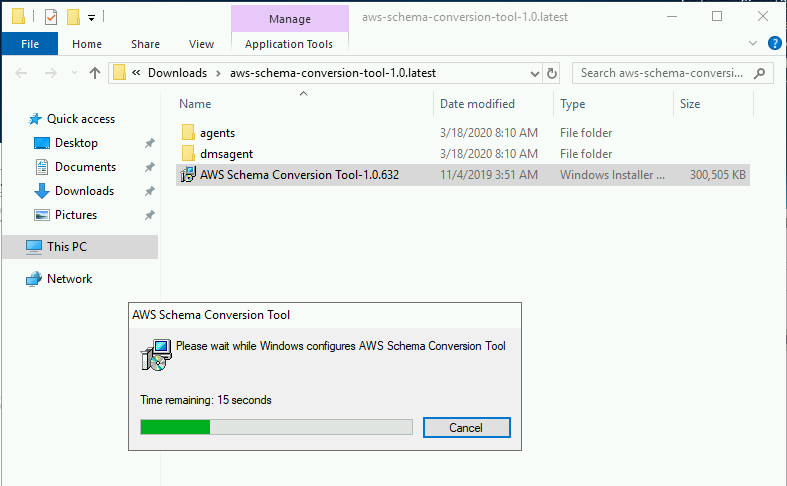

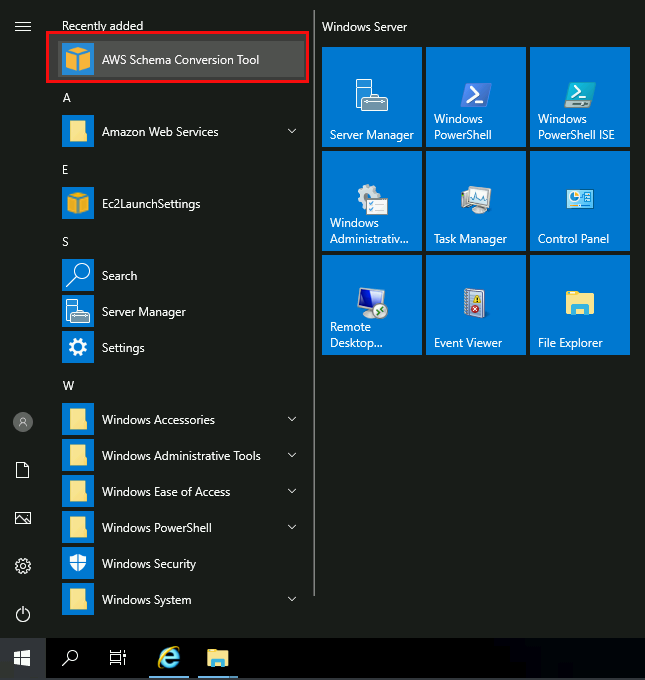

Now that we are connected to the Windows host we can continue with installing AWS SCT. The latest version for Windows can be downloaded here. Having that downloaded and extracted simply execute it, you will not be asked any questions and once completed you will find AWS SCT in the start menu:

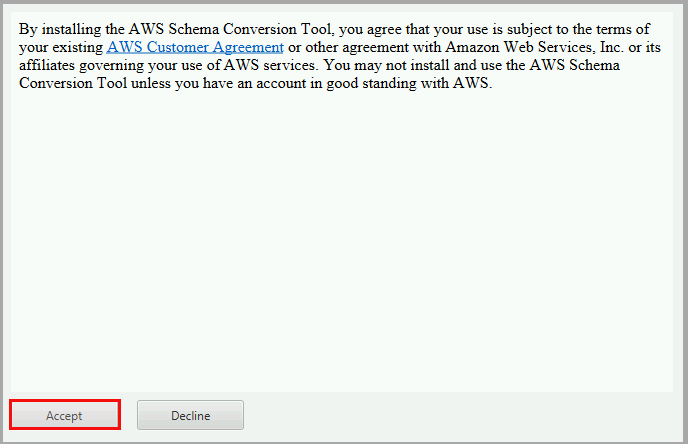

Simply start it and accept the license:

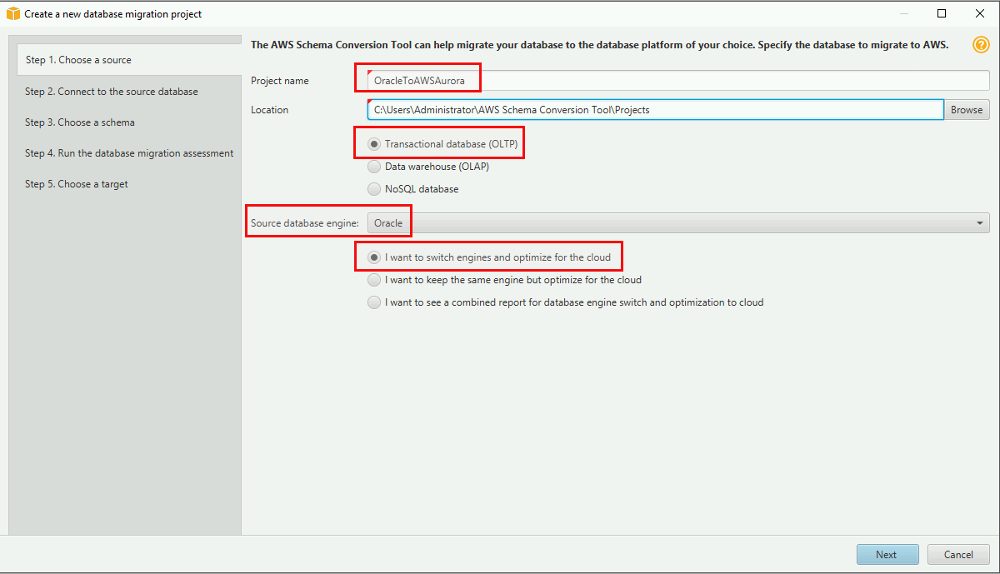

You will be directly asked what type of migration you want to do, so a new migration project can be created:

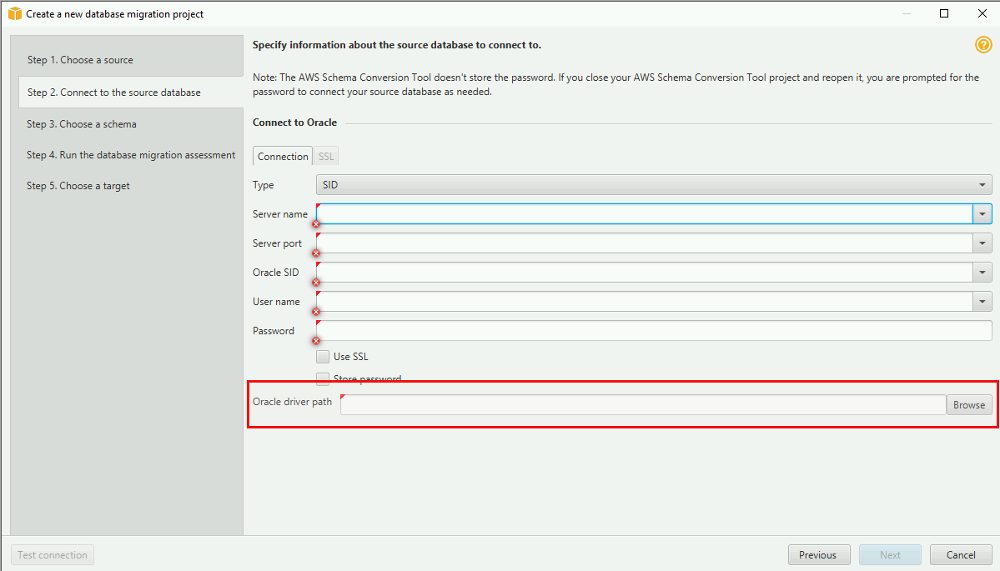

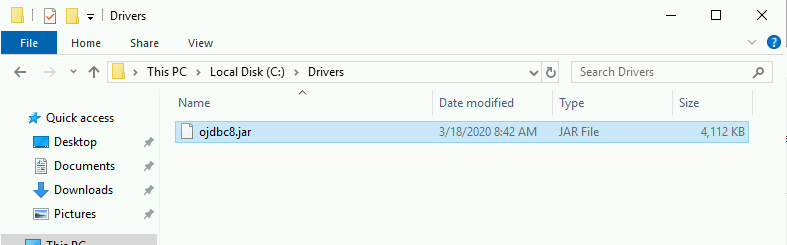

The next screen will directly ask you for the connection details to the Oracle instance. Before you do anything here you need to download the Oracle jdbc driver from here:

,,. and now can select the driver in the SCT screen:

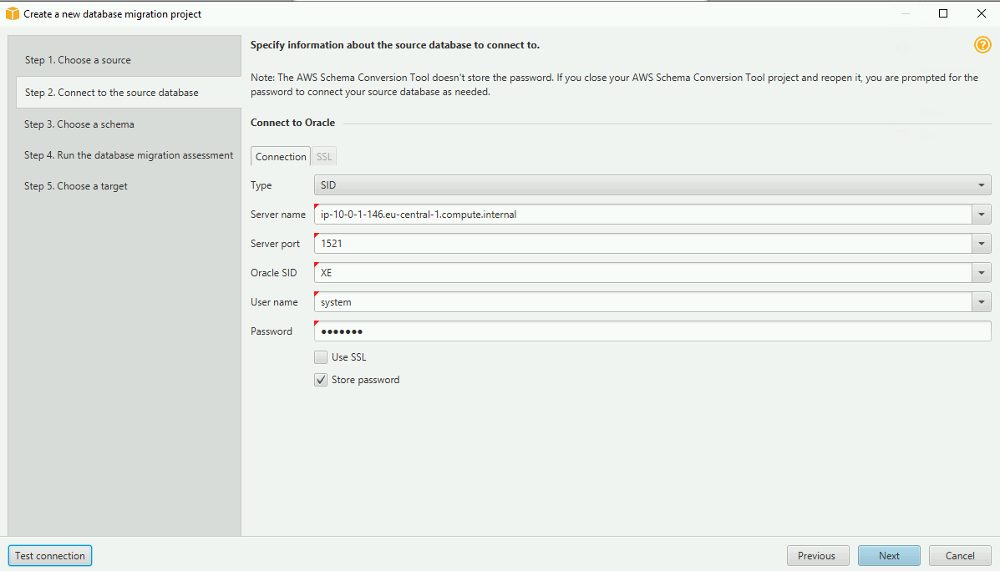

Before your connection test will succeed you will need to adjust the inbound rules of the security which is attached to the Oracle instance:

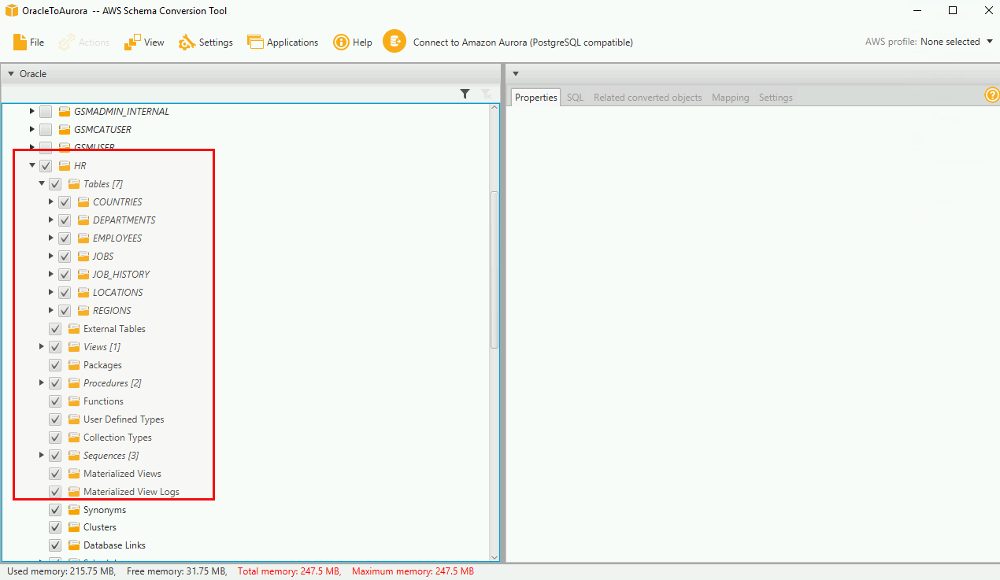

Once the connection fine SCT will load all the meta data of the source (we are only interested in the “HR” schema for now):

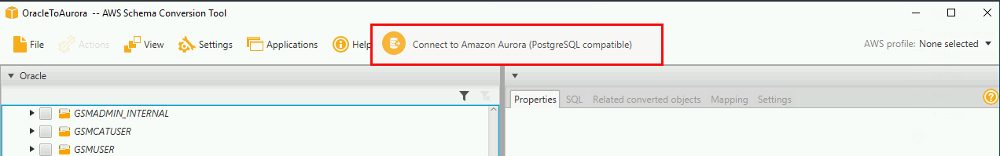

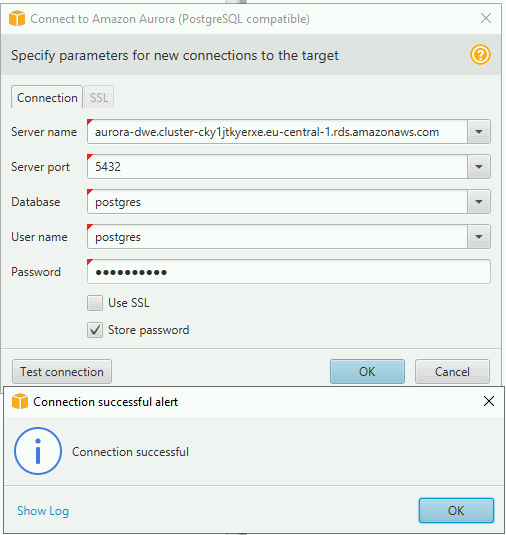

The next step is to connect to the target Aurora instance:

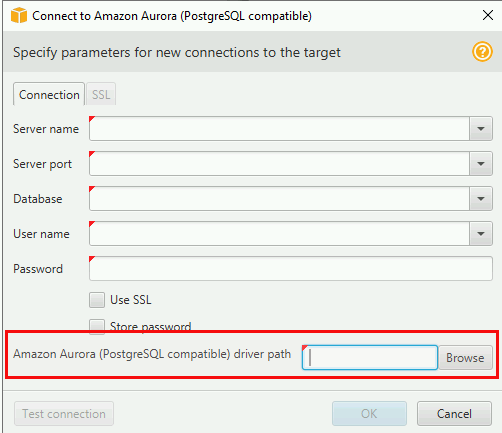

Some procedure here: Before you can connect you’ll need the driver (download from here):

Once ready (and again you need to adjust the security group to allow inbound connection on port 5432):

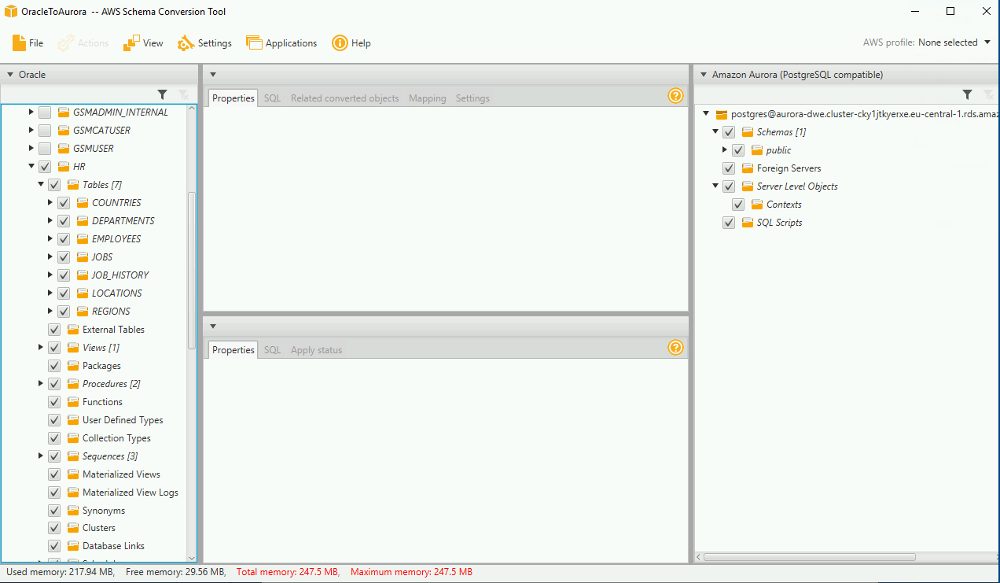

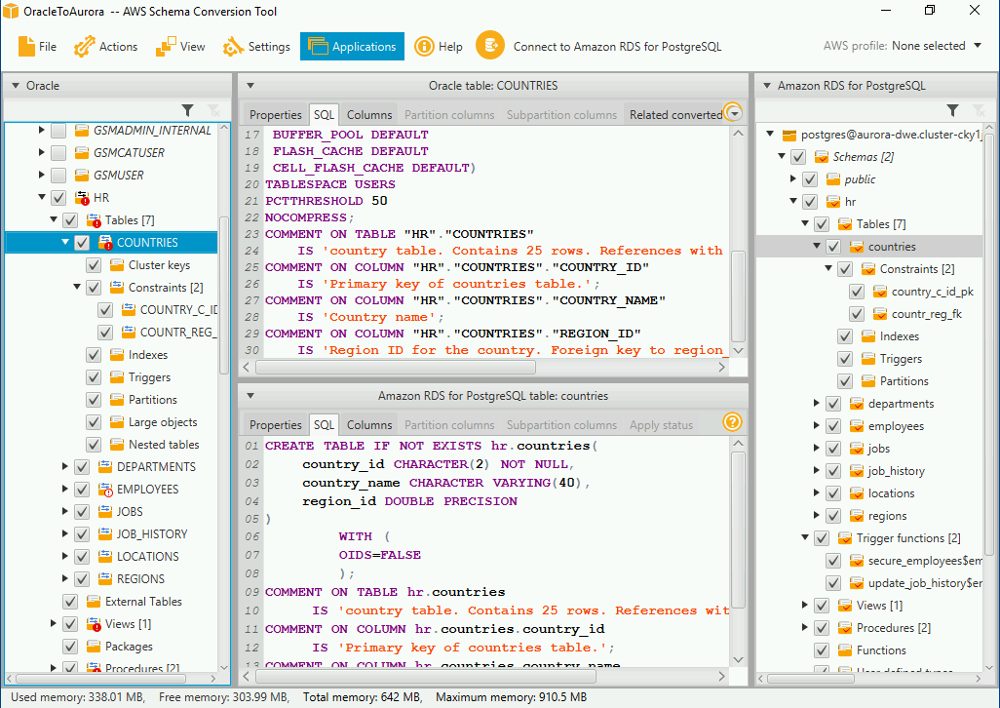

Now we have both sides connected: Oracle on the left and AWS Aurora on the right:

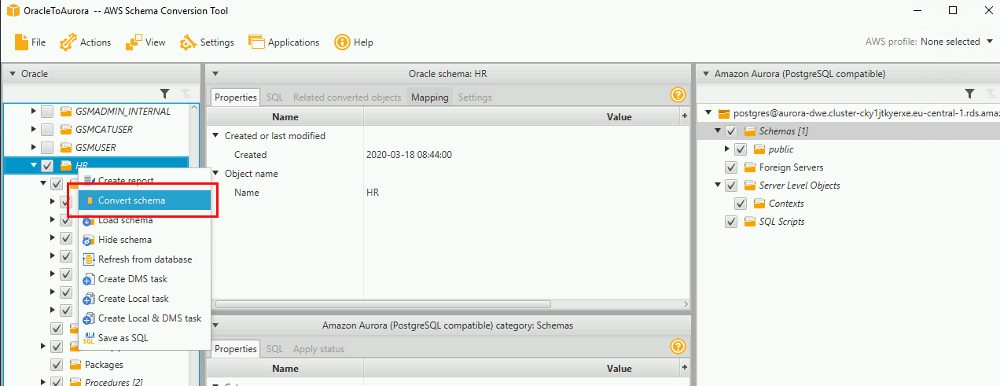

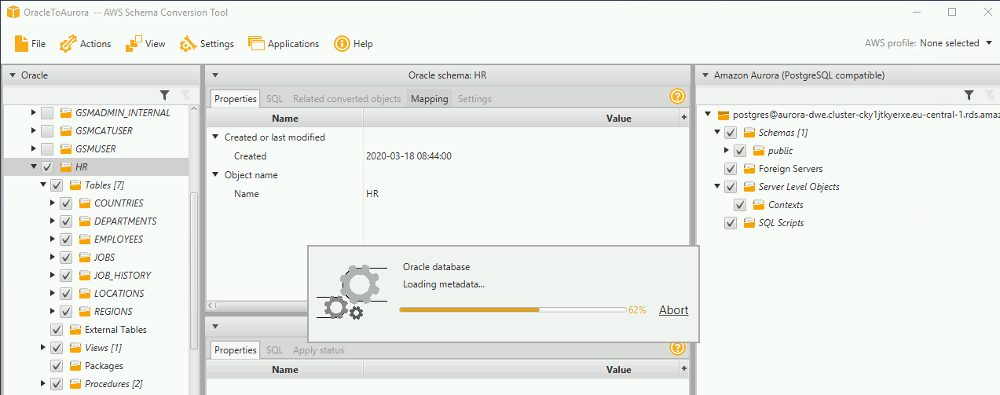

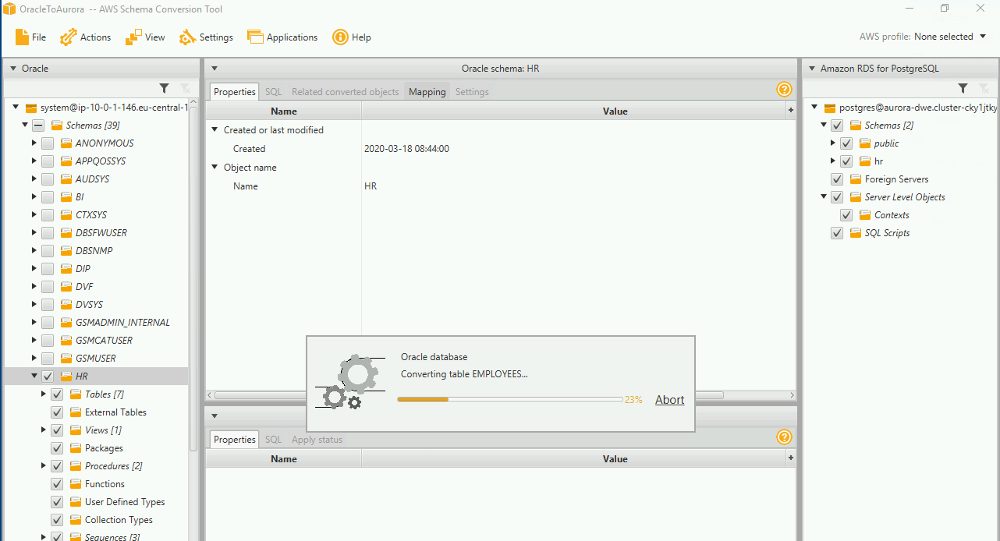

From now on the schema can be migrated from the source to the target:

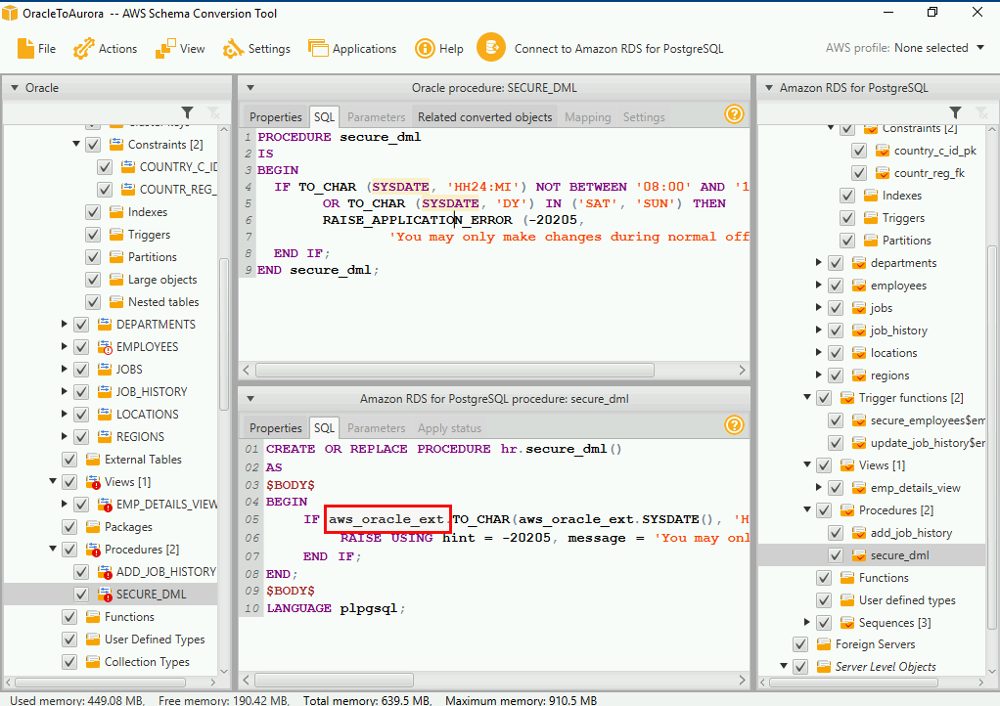

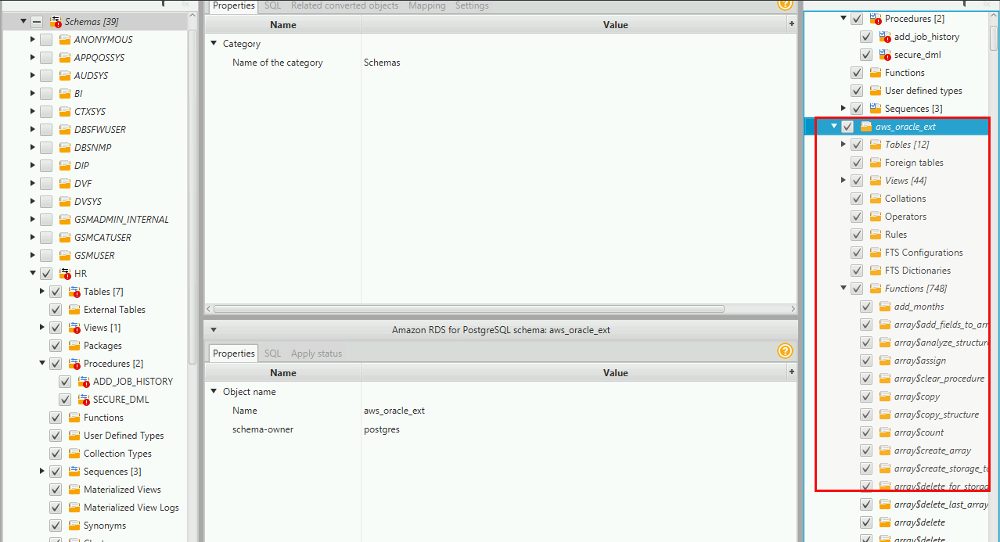

Aurora for PostgreSQL comes with little helpers as you can see in the screenshot below:

You can see that on the right side, there is a new schema “aws_oracle_ext” that holds all these objects:

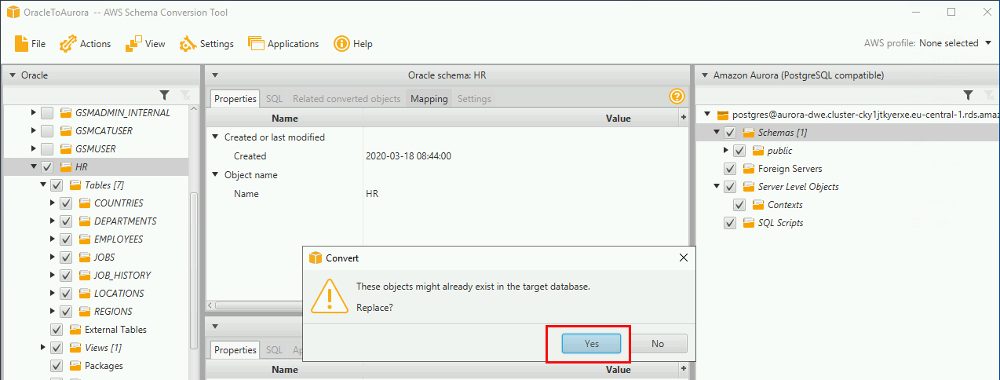

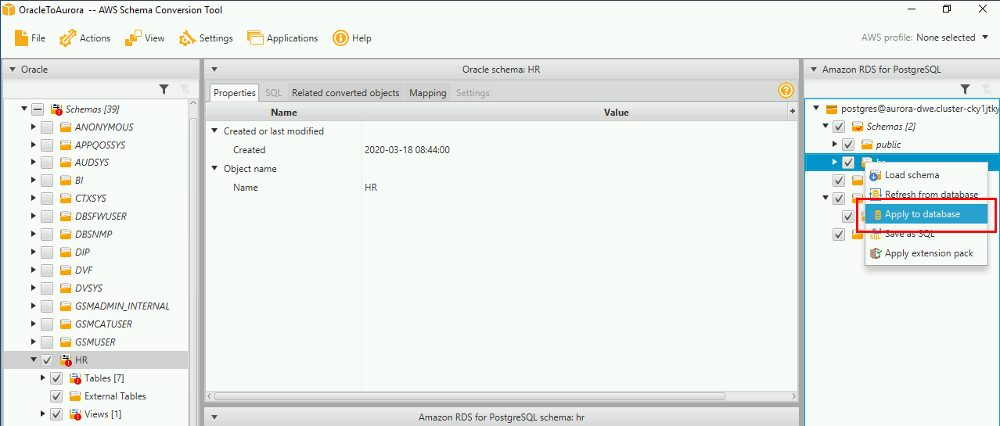

To actually apply the schema to the target database you have to tell SCT to do so:

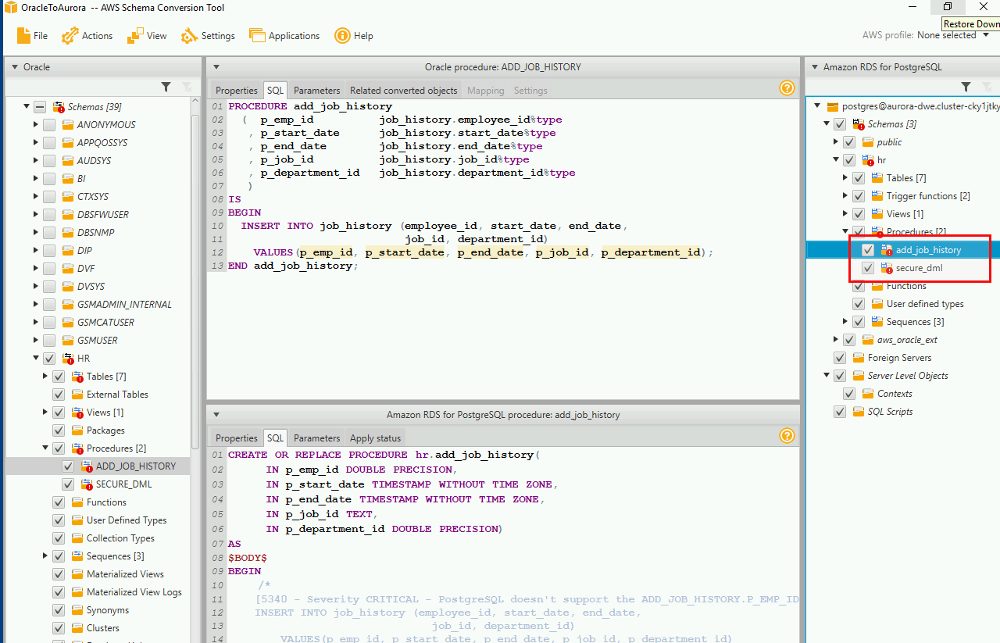

Again, you will need to check the issues on the target side:

By connecting to the Aurora instance with psql you can actually confirm that the objects have been created:

postgres# \dn

List of schemas

Name | Owner

--------------------+----------

aws_oracle_context | postgres

aws_oracle_data | postgres

aws_oracle_ext | postgres

aws_postgis | postgres

hr | postgres

public | postgres

(6 rows)

postgres=> select * from hr.employees;

employee_id | first_name | last_name | email | phone_number | hire_date | job_id | salary | commission_pct | manager_id | department_id

-------------+------------+-----------+-------+--------------+-----------+--------+--------+----------------+------------+---------------

(0 rows)

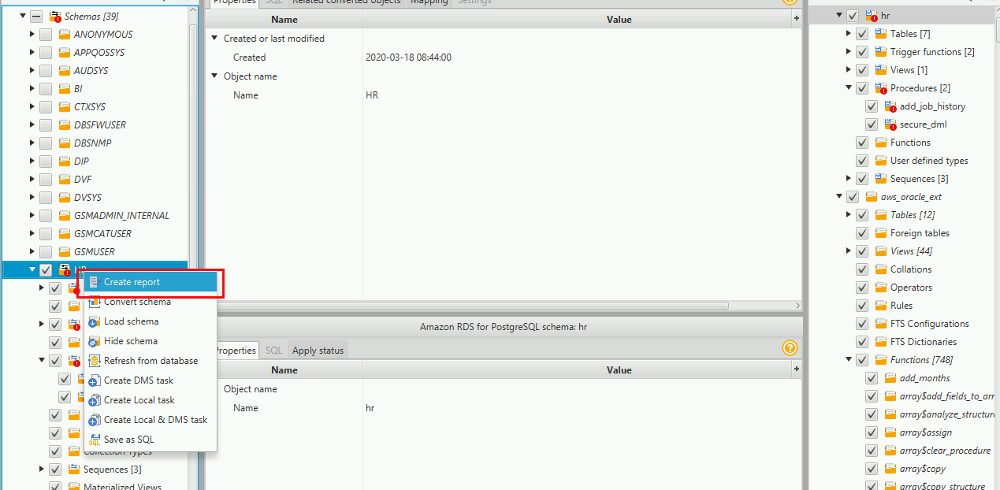

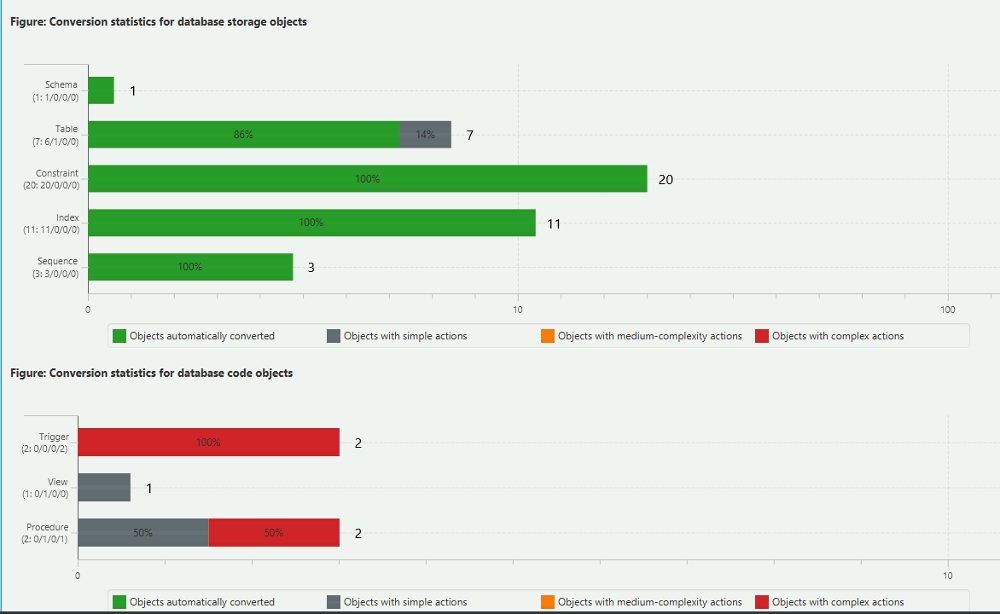

Quite helpful is the report you can generate for getting an idea how many objects can be converted without any issue and how many of them need manual action:

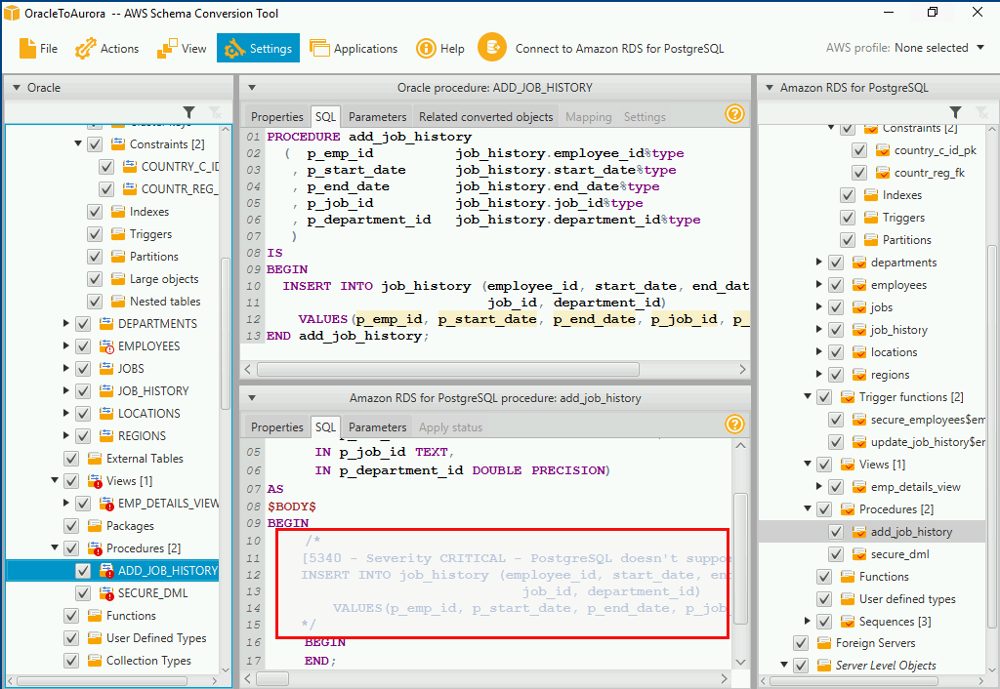

Before proceeding further with loading the data using AWS DMS all the issues reported by SCT should be fixed, like this one:

That’s it for this post. The AWS Schema Conversion Tool in general does a good job. Even functions and procedures are migrated but of course not everything can be done automatically and some of the features/implementations that Oracle provides need to be re-implemented/adjusted on the AWS Aurora side.

In the next post we’ll look at the actual data migration using AWS DMS.

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/DWE_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/10/STS_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/STH_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/09/SNA_web-min-scaled.jpg)