Quick recap: in the previous episode, we saw how to move from a Kubernetes cluster powered by Docker to a future-proof Kubernetes cluster using containerd. Now let’s move on and fully enjoy the power of having this CRI layer sitting below the kubelet.

containerd and OCI runtimes

containerd is a high-level container runtime. It is CRI compliant, CRI (Container Runtime Interface) being the API that Kubernetes uses to talk to container runtimes.

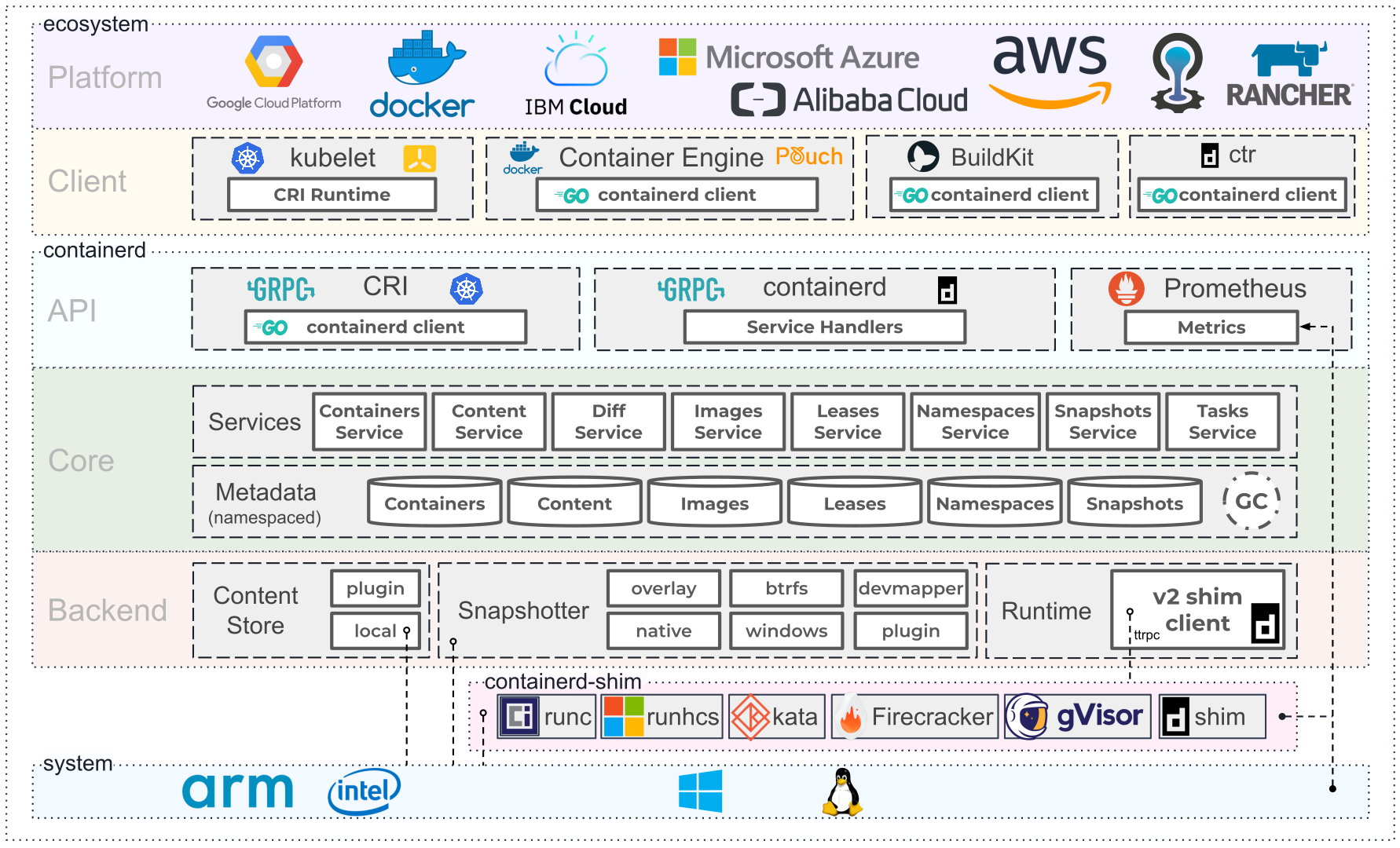

Here is a good picture of the overall containerd architecture:

Source: https://containerd.io/


By design, containerd is capable of addressing multiple OCI runtimes in parallel. Although containerd is bundled with runc, which acts as the default OCI runtime, we can configure containerd to use another implementation, such as Kata Containers.

Why use two different OCI runtimes? The answer is simple: each implementation has its own pros and cons.

runc is the de facto standard OCI runtime. Installed by default with containerd, it is also used on machines where Docker is set up. It relies on the host’s kernel features, such as cgroups and namespaces, which are the basis of containers. It may be obvious, but all containers running on runc share the same kernel, which is the host’s kernel.

Kata Containers, on the other hand, while still an OCI implementation, is a kind of hybrid between what we can call standard containers and classic VMs. Each container spun up with Kata Containers gets its own dedicated kernel, with additional security and isolation: it runs in a dedicated micro VM. It is able to use hardware virtualization extensions such as VT.

Setup of Kata Containers on a Kubernetes cluster

In this setup, we are going to use the same kind of cluster as in the last episode: a Kubernetes cluster running release 1.20.7, with one master and two workers. As we are going to install a new OCI container runtime, the installation needs to be done on each worker node of the cluster, and also on the master(s) if you plan to run pods powered by Kata Containers there.

Let’s start with a worker.

Pick the latest release from the project’s GitHub page, https://github.com/kata-containers/kata-containers/releases, and extract the archive on your node.

    root@worker2:~# wget https://github.com/kata-containers/kata-containers/releases/download/2.2.0/kata-static-2.2.0-x86_64.tar.xz
    --2021-09-10 16:27:59-- https://github.com/kata-containers/kata-containers/releases/download/2.2.0/kata-static-2.2.0-x86_64.tar.xz
    Resolving github.com (github.com)... 140.82.121.4
    Connecting to github.com (github.com)|140.82.121.4|:443... connected.
    HTTP request sent, awaiting response... 302 Found
    Location: https://github-releases.githubusercontent.com/113404957/129c220a-812b-4d75-a5e3-e988c988c306?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20210910%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20210910T162759Z&X-Amz-Expires=300&X-Amz-Signature=7d0032e7353127cd725c9f61e1136177c29702ded6c636cdb076783fbf0ce0b4&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=113404957&response-content-disposition=attachment%3B%20filename%3Dkata-static-2.2.0-x86_64.tar.xz&response-content-type=application%2Foctet-stream [following]
    --2021-09-10 16:27:59-- https://github-releases.githubusercontent.com/113404957/129c220a-812b-4d75-a5e3-e988c988c306?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20210910%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20210910T162759Z&X-Amz-Expires=300&X-Amz-Signature=7d0032e7353127cd725c9f61e1136177c29702ded6c636cdb076783fbf0ce0b4&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=113404957&response-content-disposition=attachment%3B%20filename%3Dkata-static-2.2.0-x86_64.tar.xz&response-content-type=application%2Foctet-stream
    Resolving github-releases.githubusercontent.com (github-releases.githubusercontent.com)... 185.199.108.154, 185.199.111.154, 185.199.109.154, ...
    Connecting to github-releases.githubusercontent.com (github-releases.githubusercontent.com)|185.199.108.154|:443... connected.
    HTTP request sent, awaiting response... 200 OK
    Length: 92535904 (88M) [application/octet-stream]
    Saving to: ‘kata-static-2.2.0-x86_64.tar.xz’
    kata-static-2.2.0-x86_64.tar.xz 100%[==============================================================================================================================================>] 88.25M 28.4MB/s in 3.1s
    2021-09-10 16:28:03 (28.4 MB/s) - ‘kata-static-2.2.0-x86_64.tar.xz’ saved [92535904/92535904]
    root@worker2:~# xz -d kata-static-2.2.0-x86_64.tar.xz
    root@worker2:~# tar -xvf kata-static-2.2.0-x86_64.tar
    ./
    ./opt/kata/
    ./opt/kata/bin/
    ...
    root@worker2:~#

As you may have noticed, the whole archive is extracted into a ./opt/kata folder.

Move the extracted folder into the node’s /opt folder. As /opt/kata/bin is not part of the PATH, symbolic links are needed if you want to keep the containerd configuration file simple, with no folder references.

    root@worker2:~# mv opt/kata/ /opt/
    root@worker2:~# ln -s /opt/kata/bin/kata-runtime /usr/local/bin/kata-runtime
    root@worker2:~# ln -s /opt/kata/bin/containerd-shim-kata-v2 /usr/local/bin/containerd-shim-kata-v2
    root@worker2:~#
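Since the same links have to be created on every node, the step above could be wrapped in a small function. This is only a sketch: the function name link_kata_binaries is hypothetical, and taking both directories as parameters is an assumption made so it can be tried in a scratch directory first.

```shell
# Symlink the two Kata binaries used in this article into a directory on the PATH.
# $1 = directory holding the binaries, $2 = directory that receives the links.
link_kata_binaries() {
  for name in kata-runtime containerd-shim-kata-v2; do
    if [ ! -e "$1/$name" ]; then
      echo "missing binary: $1/$name" >&2
      return 1
    fi
    # -s creates a symbolic link, -f replaces a link left by a previous run
    ln -sf "$1/$name" "$2/$name"
  done
}

# On a node, as root (not executed here):
#   link_kata_binaries /opt/kata/bin /usr/local/bin
```

Because of the -f flag, running the function a second time simply recreates the links instead of failing.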

Let’s check that what we installed is working correctly.

    root@worker2:~# kata-runtime --version
    kata-runtime : 2.2.0
    commit : <<unknown>>
    OCI specs: 1.0.2-dev
    root@worker2:~# kata-runtime check
    WARN[0000] Not running network checks as super user arch=amd64 name=kata-runtime pid=61809 source=runtime
    System is capable of running Kata Containers
    System can currently create Kata Containers

Now we need to update the containerd configuration file and add a reference to the newly installed OCI runtime.

Modify the config.toml file in /etc/containerd so that the two kata lines shown below (the runtimes.kata table and its runtime_type) are added to your file. Pay attention to the nesting!

    root@worker2:~# cat /etc/containerd/config.toml |grep ".containerd.runtimes]" -A 4
    [plugins."io.containerd.grpc.v1.cri".containerd.runtimes]
      [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.kata]
        runtime_type = "io.containerd.kata.v2"
      [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
        runtime_type = "io.containerd.runc.v2"
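To double-check which runtime handlers the file declares, the table headers can be filtered with sed. The sketch below assumes the table names follow exactly the pattern shown in the excerpt above; list_runtime_handlers is a hypothetical helper name, not a containerd tool.

```shell
# Print the runtime handler names declared in a containerd config file,
# one per line, by matching the CRI runtime table headers.
# Deeper tables such as "...runtimes.runc.options" are skipped on purpose.
list_runtime_handlers() {
  sed -n 's/^[[:space:]]*\[plugins\."io\.containerd\.grpc\.v1\.cri"\.containerd\.runtimes\.\([A-Za-z0-9_-]*\)][[:space:]]*$/\1/p' "$1"
}

# On a node (not executed here):
#   list_runtime_handlers /etc/containerd/config.toml
```

For the excerpt shown above, this prints kata and runc, each on its own line. These are the values to reuse later as RuntimeClass handlers.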

Once the file is updated, we are ready to restart the containerd service.

    root@worker2:~# systemctl restart containerd.service
    root@worker2:~# systemctl status containerd.service
    ● containerd.service - containerd container runtime
         Loaded: loaded (/lib/systemd/system/containerd.service; enabled; vendor preset: enabled)
         Active: active (running) since Fri 2021-09-10 16:59:39 UTC; 4s ago
           Docs: https://containerd.io
        Process: 72140 ExecStartPre=/sbin/modprobe overlay (code=exited, status=0/SUCCESS)
       Main PID: 72141 (containerd)
          Tasks: 103
    ...

Repeat those steps on other nodes, as many times as required by your infrastructure.

RuntimeClass in Kubernetes

Now we have our Kubernetes cluster with containerd, set up to run either runc containers or Kata Containers. But one thing is still missing: how do we specify in our workload which OCI runtime we would like to use?

Let’s take a look at the RuntimeClass resource of Kubernetes. Graduated to Stable in release 1.20, the RuntimeClass resource is there to specify which container runtime implementation you want to use. So, we need to create one for each container runtime. Once defined, this resource is referenced from a pod definition.

Creating a RuntimeClass is pretty straightforward (note that RuntimeClass resources are not namespaced). You need to specify a name and a handler. The handler can be found in the containerd configuration file: it is the last part of the key name:

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.kata]

In our cluster, handlers are “kata” and “runc”.

    dbi@master:~$ cat runtimeclass-kata.yml
    apiVersion: node.k8s.io/v1
    kind: RuntimeClass
    metadata:
      name: kata-rc
    handler: kata
    dbi@master:~$ kubectl apply -f runtimeclass-kata.yml
    runtimeclass.node.k8s.io/kata-rc created

Same for the runc RuntimeClass:

    dbi@master:~$ cat runtimeclass-runc.yml
    apiVersion: node.k8s.io/v1
    kind: RuntimeClass
    metadata:
      name: runc-rc
    handler: runc
    dbi@master:~$ kubectl apply -f runtimeclass-runc.yml
    runtimeclass.node.k8s.io/runc-rc created
    dbi@master:~$ kubectl get runtimeclass
    NAME      HANDLER   AGE
    kata-rc   kata      78s
    runc-rc   runc      2d8h
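The two manifests differ only in their name and handler, so they could also be generated rather than written by hand. The function below is a sketch; render_runtimeclass is a hypothetical name, and the output mirrors the manifests shown above.

```shell
# Print a RuntimeClass manifest.
# $1 = resource name, $2 = handler (the last part of the containerd table name).
render_runtimeclass() {
  cat <<EOF
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: $1
handler: $2
EOF
}

# Preview the manifest for the Kata runtime
render_runtimeclass kata-rc kata

# Against a cluster (not executed here):
#   render_runtimeclass kata-rc kata | kubectl apply -f -
```

Keeping the handler as a parameter makes the link with the containerd configuration explicit: whatever handler is declared there is what goes in the second argument.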

Once the RuntimeClass resources are created, let’s spin up our pods. The workload itself is not that important in this context, so we will use an easy, ready-to-go image: the popular web server Nginx.

We will deploy two pods, one for each container runtime. For the runc container runtime:

    dbi@master:~$ cat nginx-on-runc.yml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-on-runc
    spec:
      runtimeClassName: runc-rc
      containers:
      - name: nginx
        image: nginx
    dbi@master:~$ kubectl apply -f nginx-on-runc.yml
    pod/nginx-on-runc created

Then, for the Kata Containers runtime:

    dbi@master:~$ cat nginx-on-kata.yml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-on-kata
    spec:
      runtimeClassName: kata-rc
      containers:
      - name: nginx
        image: nginx
    dbi@master:~$ kubectl apply -f nginx-on-kata.yml
    pod/nginx-on-kata created

As always, some checks! Let’s verify that our two pods are running.

    dbi@master:~$ kubectl get pods -o wide
    NAME            READY   STATUS    RESTARTS   AGE   IP               NODE      NOMINATED NODE   READINESS GATES
    nginx-on-kata   1/1     Running   0          10m   172.16.235.157   worker1   <none>           <none>
    nginx-on-runc   1/1     Running   0          13m   172.16.189.87    worker2   <none>           <none>

We have two pods running Nginx on our cluster, providing the same service. But what is the difference? As said earlier, the Kata pod gets its own isolated kernel, so the kernel version inside that pod should differ from the one on your worker.

Also, the worker running Nginx on Kata Containers will show some QEMU processes running. By default, Kata Containers uses the host’s KVM kernel module and QEMU for the virtualization part.

Print the system information of each worker with the uname command:

    dbi@worker1:~$ uname -a
    Linux worker1 5.4.0-84-generic #94-Ubuntu SMP Thu Aug 26 20:27:37 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux
    dbi@worker2:~$ uname -a
    Linux worker2 5.4.0-84-generic #94-Ubuntu SMP Thu Aug 26 20:27:37 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux

And then, the same system information for each nginx container:

    dbi@master:~$ kubectl exec -it nginx-on-runc -- bash
    root@nginx-on-runc:/# uname -a
    Linux nginx-on-runc 5.4.0-84-generic #94-Ubuntu SMP Thu Aug 26 20:27:37 UTC 2021 x86_64 GNU/Linux
    dbi@master:~$ kubectl exec -it nginx-on-kata -- bash
    root@nginx-on-kata:/# uname -a
    Linux nginx-on-kata 5.10.25 #2 SMP Tue Aug 31 22:48:37 UTC 2021 x86_64 GNU/Linux
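The same comparison could be scripted instead of read by eye. The sketch below only compares two kernel release strings; compare_kernels is a hypothetical helper, and the values passed to it are copied from the uname output above.

```shell
# Report whether a pod appears to share the kernel of its node,
# given the two kernel releases (as printed by "uname -r").
# $1 = release on the node, $2 = release inside the pod.
compare_kernels() {
  if [ "$1" = "$2" ]; then
    echo "shared kernel: $1"
  else
    echo "separate kernels: node=$1 pod=$2"
  fi
}

compare_kernels "5.4.0-84-generic" "5.4.0-84-generic"   # nginx-on-runc on worker2
compare_kernels "5.4.0-84-generic" "5.10.25"            # nginx-on-kata on worker1
```

On a live cluster, the two values could be collected with uname -r on the worker and kubectl exec nginx-on-kata -- uname -r from the master. Identical release strings are only a hint that the kernel is shared, not a proof.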

Here we are 🙂 The container running on Kata Containers has a different (and more recent!) kernel version. On worker1, where the Kata pod runs, the QEMU processes are visible as well:

    dbi@worker1:~$ ps -ef |grep qemu
    root 24617 24609 0 17:44 ? 00:00:00 /opt/kata/libexec/kata-qemu/virtiofsd --syslog -o cache=auto -o no_posix_lock -o source=/run/kata-containers/shared/sandboxes/886de7e8561b601a3c0a2af05b85beb9afaa8c2ac376b61c15a1a71bf49093e6/shared --fd=3 -f --thread-pool-size=1
    root 24623 1 0 17:44 ? 00:00:14 /opt/kata/bin/qemu-system-x86_64 -name sandbox-886de7e8561b601a3c0a2af05b85beb9afaa8c2ac376b61c15a1a71bf49093e6 -uuid 56bd770d-ce6f-4313-8ac1-9429d0bf7a19 -machine q35,accel=kvm,kernel_irqchip=on,nvdimm=on -cpu host,pmu=off -qmp unix:/run/vc/vm/886de7e8561b601a3c0a2af05b85beb9afaa8c2ac376b61c15a1a71bf49093e6/qmp.sock,server=on,wait=off -m 2048M,slots=10,maxmem=3011M -device pci-bridge,bus=pcie.0,id=pci-bridge-0,chassis_nr=1,shpc=on,addr=2 -device virtio-serial-pci,disable-modern=true,id=serial0 -device virtconsole,chardev=charconsole0,id=console0 -chardev socket,id=charconsole0,path=/run/vc/vm/886de7e8561b601a3c0a2af05b85beb9afaa8c2ac376b61c15a1a71bf49093e6/console.sock,server=on,wait=off -device nvdimm,id=nv0,memdev=mem0,unarmed=on -object memory-backend-file,id=mem0,mem-path=/opt/kata/share/kata-containers/kata-clearlinux-latest.image,size=268435456,readonly=on -device virtio-scsi-pci,id=scsi0,disable-modern=true -object rng-random,id=rng0,filename=/dev/urandom -device virtio-rng-pci,rng=rng0 -device vhost-vsock-pci,disable-modern=true,vhostfd=3,id=vsock-1696826128,guest-cid=1696826128 -chardev socket,id=char-6546ada211d5c7ec,path=/run/vc/vm/886de7e8561b601a3c0a2af05b85beb9afaa8c2ac376b61c15a1a71bf49093e6/vhost-fs.sock -device vhost-user-fs-pci,chardev=char-6546ada211d5c7ec,tag=kataShared -netdev tap,id=network-0,vhost=on,vhostfds=4,fds=5 -device driver=virtio-net-pci,netdev=network-0,mac=62:2c:d8:29:1c:f3,disable-modern=true,mq=on,vectors=4 -rtc base=utc,driftfix=slew,clock=host -global kvm-pit.lost_tick_policy=discard -vga none -no-user-config -nodefaults -nographic --no-reboot -daemonize -object memory-backend-file,id=dimm1,size=2048M,mem-path=/dev/shm,share=on -numa node,memdev=dimm1 -kernel /opt/kata/share/kata-containers/vmlinux-5.10.25-85 -append tsc=reliable no_timer_check rcupdate.rcu_expedited=1 i8042.direct=1 i8042.dumbkbd=1 i8042.nopnp=1 i8042.noaux=1 noreplace-smp reboot=k console=hvc0 console=hvc1 cryptomgr.notests net.ifnames=0 pci=lastbus=0 root=/dev/pmem0p1 rootflags=dax,data=ordered,errors=remount-ro ro rootfstype=ext4 quiet systemd.show_status=false panic=1 nr_cpus=2 systemd.unit=kata-containers.target systemd.mask=systemd-networkd.service systemd.mask=systemd-networkd.socket scsi_mod.scan=none -pidfile /run/vc/vm/886de7e8561b601a3c0a2af05b85beb9afaa8c2ac376b61c15a1a71bf49093e6/pid -smp 1,cores=1,threads=1,sockets=2,maxcpus=2
    root 24628 24617 0 17:44 ? 00:00:03 /opt/kata/libexec/kata-qemu/virtiofsd --syslog -o cache=auto -o no_posix_lock -o source=/run/kata-containers/shared/sandboxes/886de7e8561b601a3c0a2af05b85beb9afaa8c2ac376b61c15a1a71bf49093e6/shared --fd=3 -f --thread-pool-size=1
    dbi 44240 42405 0 18:11 pts/0 00:00:00 grep --color=auto qemu
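The QEMU command line is long, but the interesting part here is its -kernel option, which names the guest kernel image booted inside the micro VM (vmlinux-5.10.25-85, matching the 5.10.25 release seen in the pod). A small filter can pull that value out. This is a sketch: guest_kernel_path is a hypothetical helper, and it assumes the path contains no spaces.

```shell
# Print the value that follows the -kernel option in a QEMU command line
# read from standard input; prints nothing if the option is absent.
guest_kernel_path() {
  # Put each argument on its own line, then print the line right after "-kernel"
  tr ' ' '\n' | awk 'found { print; exit } $0 == "-kernel" { found = 1 }'
}

# Shortened sample taken from the ps output above
echo "/opt/kata/bin/qemu-system-x86_64 -numa node,memdev=dimm1 -kernel /opt/kata/share/kata-containers/vmlinux-5.10.25-85 -append tsc=reliable" | guest_kernel_path
```

On a worker, the input could come from something like ps -o args= -C qemu-system-x86_64. Note that the filter stops at the first match, so with several Kata pods on the same node only the first VM would be reported.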

Kubernetes is a very powerful system, with lots of functionality, yet very flexible. Its architecture, its design and the way you configure it allow it to adapt to various needs, topologies or requirements.
