Today, together with my dbi services colleagues Chay Te and Arjen Lankkamp, I attended KCD Zurich. This first edition of the Kubernetes Community Days organized in Switzerland hosted 250 participants. The previous day was devoted to technical workshops, while today was spent on general sessions and talks.

The event took place at the Google offices on Europaallee and ran two parallel streams. The sessions, mostly technical with a few non-technical ones, covered a wide range of topics around Kubernetes: monitoring and observability, eBPF and the Linux kernel, and even AI. It was also a chance to talk directly with the presenters.

In the following sections, I’ve chosen to share with you a few of the sessions I attended.

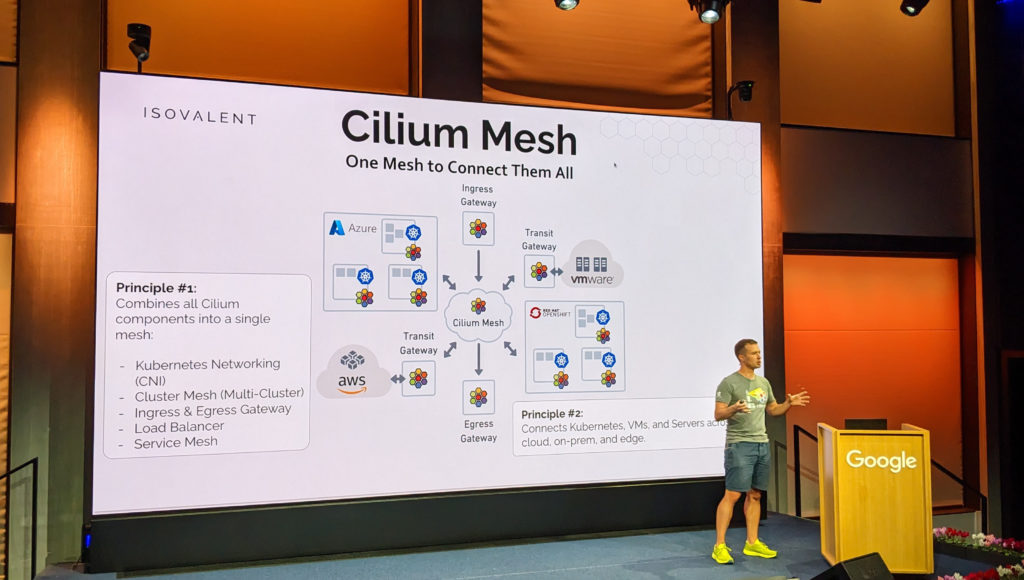

Cilium Mesh – How to Connect Kubernetes with Legacy VM and Server Infrastructure

Thomas Graf, Co-Founder and CTO of Isovalent, opened the day with a 30-minute presentation on Cilium Mesh.

Cilium is a networking and security project from Isovalent that leverages eBPF. Cilium Mesh adds the capacity to connect Kubernetes clusters with existing datacenters and legacy infrastructure.

The transition to Kubernetes is not binary. At most customer sites, some applications can't or won't be migrated to a containerized infrastructure because of technical, political, or compliance constraints. Everything still needs to stay connected, and ideally, as Kubernetes enthusiasts, we want to manage that connectivity the same way we manage Kubernetes.

The goal of Cilium Mesh is to make that connection easier by providing a single central piece of software for it. It combines all Cilium components into one mesh that connects Kubernetes clusters, virtual machines, and servers, whether they run in the cloud or on-premises.

During his talk, Thomas showed that Transit Gateway allows AWS or VMware infrastructure to be connected, while Red Hat OpenShift or vanilla Kubernetes clusters can onboard Cilium components as standard.

Forensic container checkpointing and analysis

The second session was presented by Adrian Reber from Red Hat. The main topic was checkpointing containers in a Kubernetes environment. Checkpointing means saving the current state of a running process. In Kubernetes and other containerized environments, it means copying the container and all its state into an archive, without the container knowing that it has been checkpointed. The archive can then be extracted for later offline analysis.

Kubernetes provides checkpointing functionality starting with version 1.25, with the kubelet as the entry point. Under the hood, the container runtime behind the CRI needs to support it. For the moment, he mentioned, only CRI-O does, while containerd has a PR for it (PR#6465).
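To make the "kubelet as entry point" idea more concrete, here is a minimal sketch of how the checkpoint endpoint is addressed. It assumes the kubelet's `POST /checkpoint/{namespace}/{pod}/{container}` route on the default kubelet port 10250. The node, pod, and container names are hypothetical, and the sketch only builds the URL: an actual request would also need to be a POST authenticated against the kubelet, on a cluster where the feature is enabled.

```python
from urllib.parse import quote

KUBELET_PORT = 10250  # default kubelet HTTPS port (assumption: not overridden)


def checkpoint_url(node: str, namespace: str, pod: str, container: str) -> str:
    """Build the kubelet checkpoint endpoint URL for one container."""
    # Each path segment is percent-encoded separately so that unusual
    # characters cannot break the route.
    path = "/".join(quote(part, safe="") for part in (namespace, pod, container))
    return f"https://{node}:{KUBELET_PORT}/checkpoint/{path}"


# Hypothetical node and workload names, for illustration only.
url = checkpoint_url("worker-1.example.internal", "default", "webserver", "nginx")
print(url)
```

The request goes to the kubelet of the node that runs the pod, not to the Kubernetes API server, which is why the node name is part of the URL.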

Demystifying eBPF – eBPF Firewall from scratch

Then, since I was very interested in the topic, I attended Filip Nicolic's session on eBPF. In half an hour, he gave a good introduction to the eBPF technology and, specifically, to how to code a pretty straightforward firewall.

Well, in fact, it was more pseudo-code with C language syntax, but his explanation was very clear. After a quick intro on the Ethernet frame specification and on where the NIC is handled on Linux (user space or kernel space), we all had the feeling “OMG, that’s easy!”. At least I did; to be honest, it inspired me to try and code something using eBPF.
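The firewall logic can be sketched in a few lines. What follows is not the speaker's code and not an eBPF program: it is a user-space Python simulation of the decision a simple XDP-style filter makes, assuming a plain Ethernet II frame carrying IPv4. The verdict values mirror the kernel's `xdp_action` enum, while the blocklist and the `build_frame` helper are hypothetical and exist only for illustration.

```python
import socket
import struct

# Verdict values mirror the kernel's xdp_action enum.
XDP_DROP = 1
XDP_PASS = 2

ETH_HEADER_LEN = 14        # dst MAC (6) + src MAC (6) + EtherType (2)
ETHERTYPE_IPV4 = 0x0800
IPV4_MIN_HEADER_LEN = 20

# Hypothetical blocklist of source addresses.
BLOCKED_SOURCES = {"10.0.0.66"}


def filter_frame(frame: bytes) -> int:
    """Return XDP_DROP for IPv4 frames from a blocked source, else XDP_PASS."""
    # Check bounds before every read, as a real eBPF program must do
    # to be accepted by the verifier.
    if len(frame) < ETH_HEADER_LEN:
        return XDP_PASS
    (ethertype,) = struct.unpack_from("!H", frame, 12)
    if ethertype != ETHERTYPE_IPV4:
        return XDP_PASS
    if len(frame) < ETH_HEADER_LEN + IPV4_MIN_HEADER_LEN:
        return XDP_PASS
    # The IPv4 source address sits at byte offset 12 of the IP header.
    start = ETH_HEADER_LEN + 12
    src_ip = socket.inet_ntoa(frame[start:start + 4])
    return XDP_DROP if src_ip in BLOCKED_SOURCES else XDP_PASS


def build_frame(src_ip: str, dst_ip: str) -> bytes:
    """Hypothetical helper: build a minimal Ethernet + IPv4 header for testing."""
    eth = bytes(6) + bytes(6) + struct.pack("!H", ETHERTYPE_IPV4)
    ip = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20, 0, 0, 64, 6, 0,
                     socket.inet_aton(src_ip), socket.inet_aton(dst_ip))
    return eth + ip


verdict = filter_frame(build_frame("10.0.0.66", "10.0.0.1"))
print("DROP" if verdict == XDP_DROP else "PASS")
```

A real implementation would be written in restricted C, compiled to eBPF bytecode, and attached to a network interface, but the parse-then-decide structure stays the same.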

Then came the lunch break. After a few Kubernetes cupcakes and a good meal from a catering service that also offered vegan options, I moved on to the next session.

Buzzing Across the Cloud Native Landscape with eBPF

This one was from Bill Mulligan, from Isovalent, discussing eBPF again, but in relation to cloud native environments. After a short introduction to code running in user space vs. in the kernel, he drew attention to the benefits of eBPF: it brings programmability inside the kernel, which led him to compare eBPF to a new JavaScript for the kernel. Because eBPF programs can be loaded into a running kernel, new features no longer have to wait for a kernel release cycle.

We also learned that the Linux kernel embeds safety mechanisms for this. The eBPF verifier ensures that eBPF programs run to completion without crashing or corrupting the system. Memory can also be shared: eBPF maps allow data sharing between eBPF programs and between the Linux kernel and user space. Since eBPF programs can see everything that happens in the kernel, they give Kubernetes clusters a full set of capabilities for observability, network inspection, security, tracing, and application profiling.

eBPF is almost a decade old; it was released in March 2014. Since then, it has grown in popularity, and more and more software built on it is being released, providing new features.

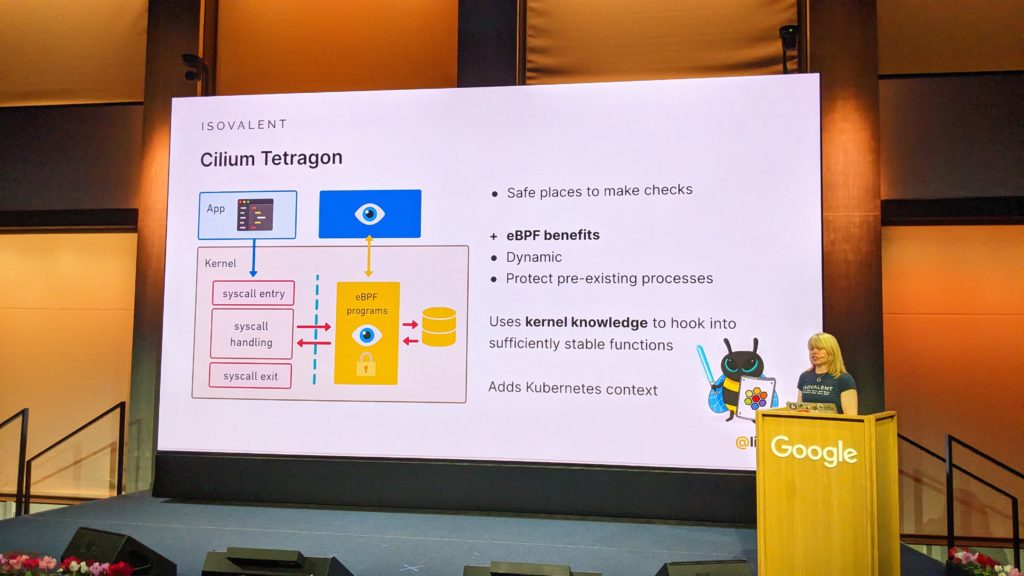

eBPF for Security

The last session was given by Liz Rice in the main auditorium. The room was packed to hear her highlight the capabilities of eBPF, with a focus on security. She concluded the day with demos and presentations of Tetragon, the eBPF-based security enforcement tool, as well as Cilium network policies.

With the sessions I chose to attend, this event had a clear eBPF flavor. That matches reality, though: the technology is being adopted by more and more Kubernetes infrastructures, from vanilla Kubernetes deployments to cloud providers' managed Kubernetes solutions.

At dbi services, we chose Cilium as the CNI for our Kubernetes clusters. Interested in more on Cilium? Please have a look at my colleagues' posts, where they share their experience and troubleshooting stories with that tool.

Also, don’t hesitate to have a look at the blog post of my colleague Chay, who also shared his impressions of this event.
