A few months ago, we started a large project at a customer: building a complete CI/CD platform for Documentum. The final goal is a CI/CD process for platform and software delivery that shortens release delivery time as much as possible.

To achieve this goal, our DevOps team introduced two main products at our customer: Kubernetes (as the new “virtualization” platform) and Jenkins X (for CI/CD). On top of these two tools, several others from the Jenkins X ecosystem are used, such as Kaniko, the container image builder released by the Google Cloud team. Within Jenkins X, Kaniko is the component that builds images in the pipelines.

In this series of blogs, we will introduce and explain the tools used in the Jenkins X ecosystem. Let’s start with Kaniko :-D!

Why Kaniko?

Before Kaniko, we used two components to build a new container image: the Docker daemon and a Dockerfile. As you know, the Docker daemon needs root privileges on the machine on which it runs. This led to security issues in several production environments that cannot grant root access to the Docker daemon: https://github.com/kubernetes/kubernetes/issues/1806

To overcome this security issue, Kaniko builds a container image from a Dockerfile without the need for the Docker daemon (and therefore without root access on the host).

How does Kaniko work?

Kaniko runs as a container (in a pod) within a Kubernetes cluster. It requires three parameters to create a new image:

- a Dockerfile

- a build context

- the destination, i.e. the registry and image name to which the built image is pushed
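In the executor, these three inputs correspond to the `--dockerfile`, `--context` and `--destination` flags, the same ones used in the pod definition later in this post. As a minimal sketch, the hypothetical helper below (`kaniko_args` is our own name, not part of Kaniko, and `my-project` is a placeholder project ID) simply prints the flags for a given set of inputs:

```shell
# Hypothetical helper (not part of Kaniko): prints one executor flag per line
# for the three inputs the kaniko executor needs.
kaniko_args() {
  dockerfile=$1    # path of the Dockerfile inside the build context
  context=$2       # build context, e.g. a git:// URL
  destination=$3   # registry/image:tag to push the result to
  printf '%s\n' \
    "--dockerfile=$dockerfile" \
    "--context=$context" \
    "--destination=$destination"
}

# Example call; "my-project" is a placeholder for your own project ID.
kaniko_args "DockerFile" \
  "git://github.com/MehB/kaniko-test.git#refs/heads/master" \
  "gcr.io/my-project/apache:0.0.1"
```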

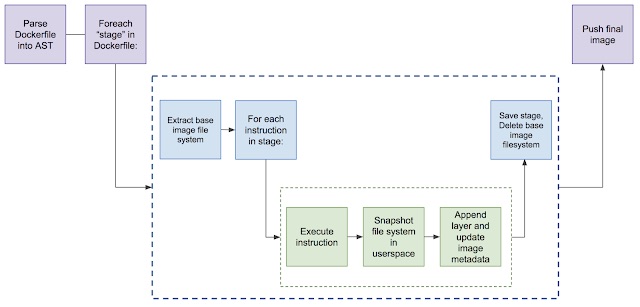

The Kaniko image is called the kaniko executor. Below is the process the kaniko executor uses to build a new image:

- The kaniko executor pulls the base image named in the Dockerfile's “FROM” instruction and extracts it into the container's root filesystem.

- It then executes each Dockerfile command and takes a snapshot of the filesystem after each one.

- Whenever a command changes the filesystem, the changes are added as a new layer on top of the base image and the image metadata is updated.

- At the end, it pushes the new image to the image registry.
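To make the snapshot idea more concrete, here is a toy illustration in plain shell. It is not how Kaniko is implemented; it only mimics the principle described above: run a command, snapshot the filesystem, and count a new layer only when something changed. The `snapshot` and `run_step` names and the sample commands are made up for this sketch.

```shell
# Toy illustration only: NOT Kaniko's implementation.
# A temporary directory plays the role of the image root filesystem.
root=$(mktemp -d)

# A "snapshot" is a sorted list of checksums of all files under $root.
snapshot() {
  (cd "$root" && find . -type f -exec cksum {} + | sort)
}

layers=0
prev=$(snapshot)

# Run one build step, then compare the new snapshot with the previous one.
run_step() {
  (cd "$root" && sh -c "$1")
  cur=$(snapshot)
  if [ "$cur" != "$prev" ]; then
    layers=$((layers + 1))
    echo "layer $layers created by: $1"
  else
    echo "no filesystem change (metadata only): $1"
  fi
  prev=$cur
}

run_step 'echo hello > index.html'   # changes the filesystem
run_step 'true'                      # changes nothing, like ENV or EXPOSE
run_step 'echo v2 >> index.html'     # changes the filesystem again

echo "total layers: $layers"
rm -rf "$root"
```

Commands that only touch metadata (such as ENV or EXPOSE in a Dockerfile) are represented here by `true`, which leaves the files untouched.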

Below is a representation of each step:

Getting started with Kaniko executor

In our getting started example, we will use the following:

- a Kubernetes cluster hosted in GKE

- a personal GitHub repository as a build context

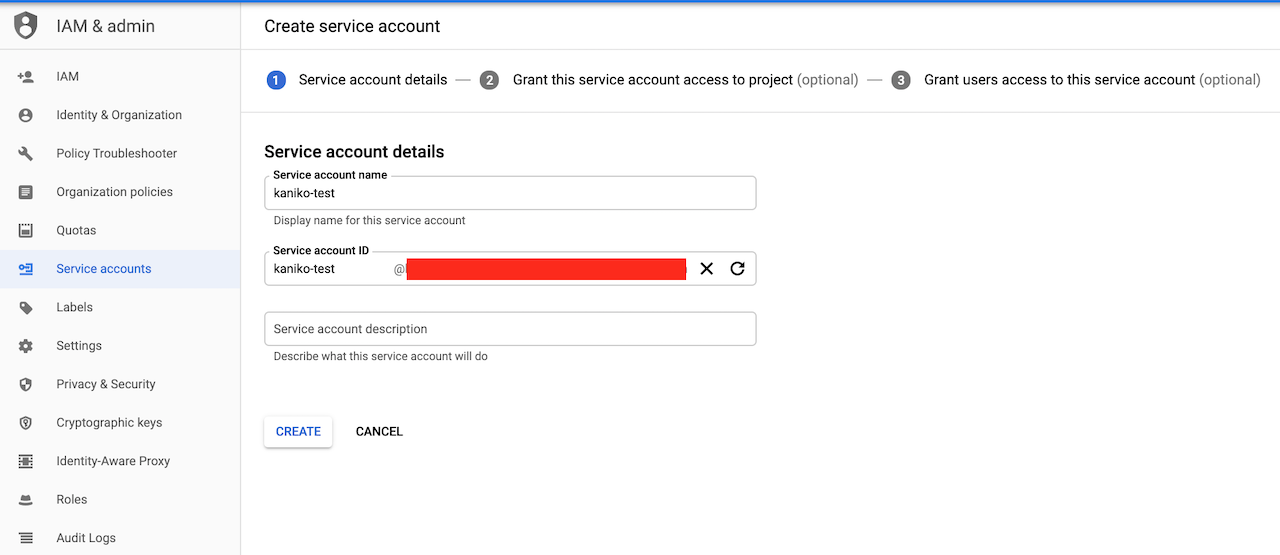

Once your cluster is provisioned and your GitHub account is ready for use, you can start by creating the Google Cloud service account that Kaniko will use, as follows:

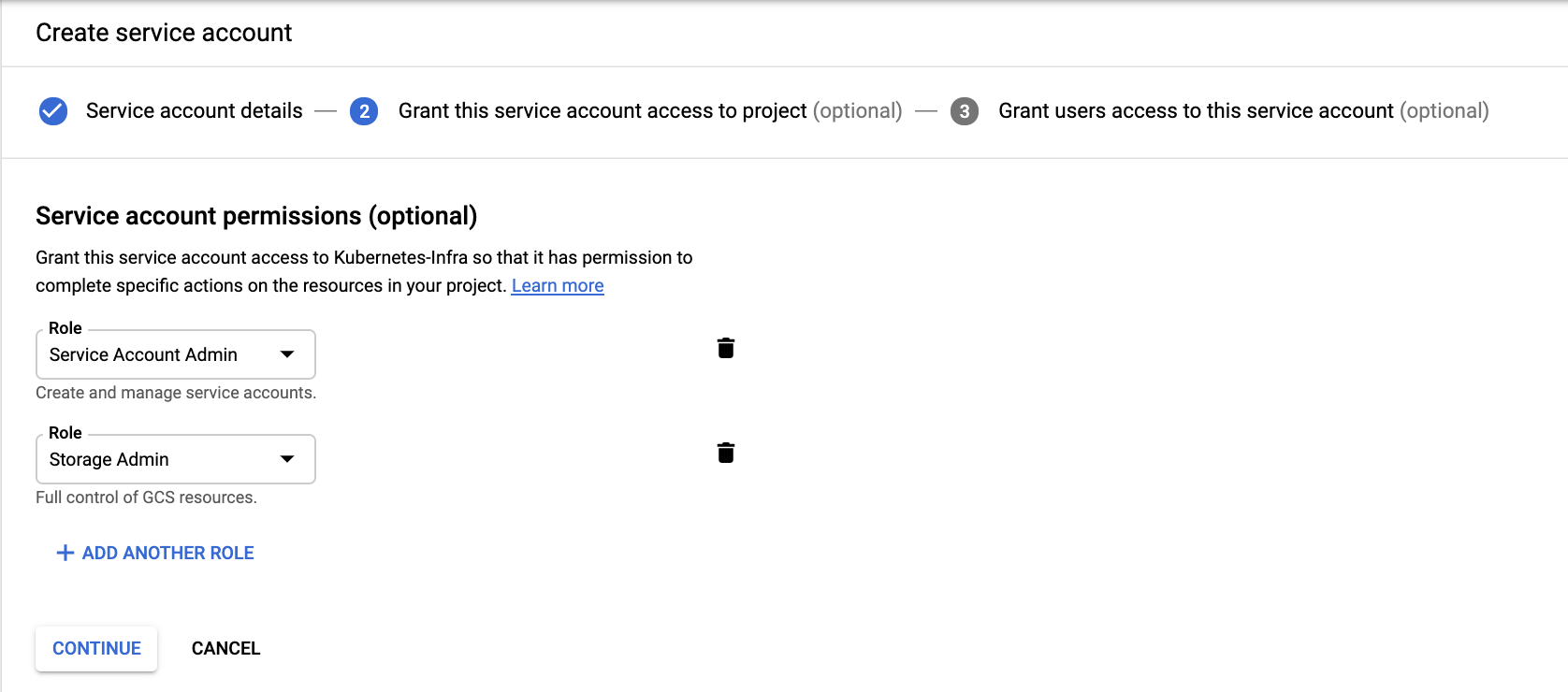

Grant the service account the Storage Admin and Service Account Admin roles, then create it:

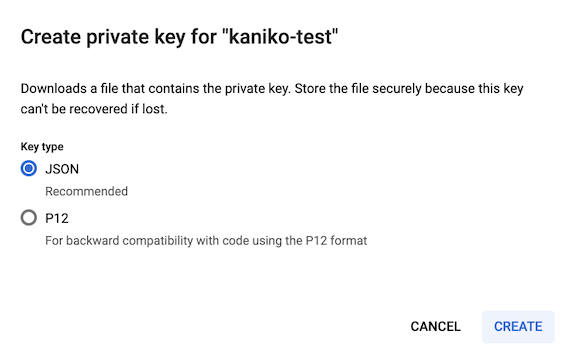

Once this is done, create a JSON key and save it locally as follows:

Rename the key to kaniko-secret.json:

    mehdi@MacBook-Pro: mv [PROJECT_ID]-cd55f597bdf8.json kaniko-secret.json
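Before turning the file into a secret, it can be worth checking that it really is a service account key. The sketch below assumes the usual layout of a Google Cloud JSON key, which contains a `"type": "service_account"` entry; `check_key` is a hypothetical helper name.

```shell
# Hypothetical sanity check: succeeds only if the file exists and looks
# like a Google Cloud service account key.
check_key() {
  key=$1
  if [ ! -f "$key" ]; then
    echo "missing key file: $key" >&2
    return 1
  fi
  if ! grep -q '"type"[[:space:]]*:[[:space:]]*"service_account"' "$key"; then
    echo "not a service account key: $key" >&2
    return 1
  fi
  echo "ok: $key"
}

# Usage (run in the directory that holds the renamed key):
#   check_key kaniko-secret.json
```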

Then create the Kubernetes secret:

    mehdi@MacBook-Pro: kubectl create secret generic kaniko-secret --from-file=kaniko-secret.json
    secret/kaniko-secret created

Now create your Dockerfile (named DockerFile in this example) and push it to your personal GitHub repository: https://github.com/MehB/kaniko-test

    mehdi@MacBook-Pro: echo "# kaniko-test" >> README.md
    mehdi@MacBook-Pro: git init
    mehdi@MacBook-Pro: git add .
    mehdi@MacBook-Pro: git commit -m "Initial Commit"
    mehdi@MacBook-Pro: git remote add origin https://github.com/MehB/kaniko-test.git
    mehdi@MacBook-Pro: git push -u origin master

Create the Kubernetes pod manifest (for example with `vi kaniko-executor-pod.yaml`) with the following parameters:

    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: kaniko
    spec:
      containers:
      - name: kaniko
        image: gcr.io/kaniko-project/executor:latest
        # ${PROJECT_ID} is a placeholder: replace it with your own project ID,
        # kubectl does not substitute shell variables in manifests.
        args: ["--dockerfile=DockerFile",
               "--context=git://github.com/MehB/kaniko-test.git#refs/heads/master",
               "--destination=gcr.io/${PROJECT_ID}/apache:0.0.1"]
        volumeMounts:
        - name: kaniko-secret
          mountPath: /secret
        env:
        # Points to the service account key mounted from the secret below
        - name: GOOGLE_APPLICATION_CREDENTIALS
          value: /secret/kaniko-secret.json
      restartPolicy: Never
      volumes:
      - name: kaniko-secret
        secret:
          secretName: kaniko-secret
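Note that `kubectl` does not expand `${PROJECT_ID}` when it reads a manifest, so the value has to be filled in beforehand. One possible way, sketched here with a hypothetical `render_pod` function that prints the manifest above with the project ID substituted by the shell:

```shell
# Hypothetical helper: prints the kaniko pod manifest with the given
# project ID filled into the --destination argument.
render_pod() {
  project_id=$1
  cat <<EOF
---
apiVersion: v1
kind: Pod
metadata:
  name: kaniko
spec:
  containers:
  - name: kaniko
    image: gcr.io/kaniko-project/executor:latest
    args: ["--dockerfile=DockerFile",
           "--context=git://github.com/MehB/kaniko-test.git#refs/heads/master",
           "--destination=gcr.io/${project_id}/apache:0.0.1"]
    volumeMounts:
    - name: kaniko-secret
      mountPath: /secret
    env:
    - name: GOOGLE_APPLICATION_CREDENTIALS
      value: /secret/kaniko-secret.json
  restartPolicy: Never
  volumes:
  - name: kaniko-secret
    secret:
      secretName: kaniko-secret
EOF
}

# Usage ("my-project" is a placeholder):
#   render_pod my-project > kaniko-executor-pod.yaml
```

The generated file can then be used exactly like a hand-written manifest.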

Start the kaniko-executor pod:

    mehdi@MacBook-Pro: kubectl create -f kaniko-executor-pod.yaml
    pod/kaniko created
    mehdi@MacBook-Pro: kubectl get pods -o wide
    NAME     READY   STATUS    RESTARTS   AGE   IP          NODE                                         NOMINATED NODE   READINESS GATES
    kaniko   1/1     Running   0          7s    10.36.2.6   gke-kanino-test-default-pool-840d3d88-7fct
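Because the pod uses `restartPolicy: Never`, it runs the build once and then ends in the `Succeeded` or `Failed` phase. A small polling loop can wait for that; this is only a sketch, and the function name `wait_for_build`, the retry count and the 5-second delay are arbitrary choices:

```shell
# Hypothetical helper: polls the pod phase until the build pod has
# finished, or until the number of attempts is exhausted.
wait_for_build() {
  pod=$1
  tries=${2:-60}   # number of polling attempts (default: 60)
  while [ "$tries" -gt 0 ]; do
    phase=$(kubectl get pod "$pod" -o jsonpath='{.status.phase}')
    case $phase in
      Succeeded)
        echo "build finished"
        return 0
        ;;
      Failed)
        echo "build failed, check: kubectl logs $pod" >&2
        return 1
        ;;
    esac
    tries=$((tries - 1))
    sleep 5
  done
  echo "timed out waiting for pod $pod" >&2
  return 1
}

# Usage:
#   wait_for_build kaniko
```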

You can look at the pod logs to verify that all Dockerfile commands were executed properly:

    mehdi@MacBook-Pro: kubectl logs kaniko
    Enumerating objects: 10, done.
    Counting objects: 100% (10/10), done.
    Compressing objects: 100% (9/9), done.
    Total 10 (delta 0), reused 10 (delta 0), pack-reused 0
    INFO[0026] Taking snapshot of full filesystem...
    INFO[0028] ENV APACHE_RUN_USER www-data
    INFO[0028] ENV APACHE_RUN_GROUP www-data
    INFO[0028] ENV APACHE_LOG_DIR /var/log/apache2
    INFO[0028] EXPOSE 80
    INFO[0028] cmd: EXPOSE
    INFO[0028] Adding exposed port: 80/tcp
    INFO[0028] CMD ["/usr/sbin/apache2", "-D", "FOREGROUND"]

The image is now available in the private registry, in our case Google Container Registry (gcr.io) 😉
