Is there too much memory for my SQL Server instance? This is definitely an uncommon question that I had to deal with for one of my customers a couple of weeks ago. Usually DBAs complain when they don’t have enough memory for the environments they manage, and the fact is that SQL Server (like other RDBMSs) provides plenty of tools for memory pressure troubleshooting. But what about the opposite? This question arose in the context of an environment that includes a lot of virtual database servers (> 100) on top of VMware, where my customer was asked to lower the SQL Server instance memory reservations when possible in order to free memory on the ESX hosts.

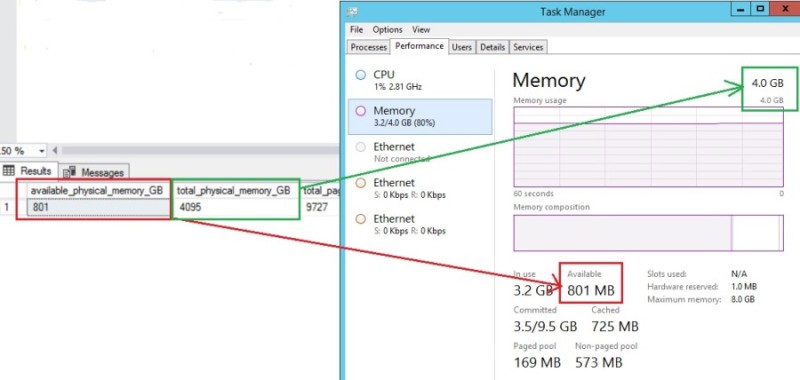

Let’s start with the sys.dm_os_sys_memory DMV, the first one my customer wanted to dig into. This DMV may be helpful to get a picture of the overall system state, including external memory conditions at the operating system level and the physical limits of the underlying hardware.

```sql
SELECT
    available_physical_memory_kb / 1024 AS available_physical_memory_MB,
    total_physical_memory_kb / 1024     AS total_physical_memory_MB,
    total_page_file_kb / 1024           AS total_page_file_MB,
    available_page_file_kb / 1024       AS available_page_file_MB,
    system_cache_kb / 1024              AS system_cache_MB
FROM sys.dm_os_sys_memory;
```

But in my customer’s context, it only partially helped to figure out the memory consumption of the SQL Server instances, because none of the environments was actually under memory pressure.

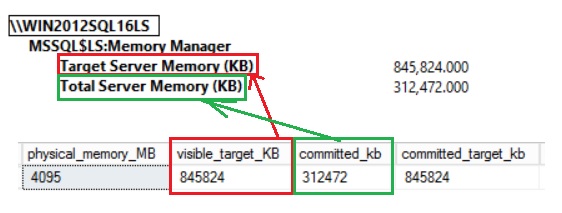
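To confirm that the operating system itself does not report any memory pressure, the same DMV also exposes signal state columns. The query below is a minimal complementary sketch, not the exact query used during this investigation:

```sql
-- Does the operating system itself signal memory pressure?
SELECT
    total_physical_memory_kb / 1024     AS total_physical_memory_MB,
    available_physical_memory_kb / 1024 AS available_physical_memory_MB,
    system_high_memory_signal_state,    -- 1 = OS reports high available memory
    system_low_memory_signal_state,     -- 1 = OS reports low available memory
    system_memory_state_desc            -- e.g. 'Available physical memory is high'
FROM sys.dm_os_sys_memory;
```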

However, another interesting DMV we may rely on is sys.dm_os_sys_info (its perfmon counterparts are the \Memory Manager\Target Server Memory (KB) and \Memory Manager\Total Server Memory (KB) counters):

```sql
SELECT
    physical_memory_kb / 1024 AS physical_memory_MB,
    visible_target_kb,
    committed_kb,
    committed_target_kb
FROM sys.dm_os_sys_info;
```
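The same figures can also be read from inside SQL Server through sys.dm_os_performance_counters: Total Server Memory (KB) should line up with committed_kb and Target Server Memory (KB) with committed_target_kb. A minimal sketch, assuming the LIKE filter is enough to match both default (SQLServer:) and named (MSSQL$...) instances:

```sql
-- Perfmon memory counters exposed through a DMV, converted to MB.
SELECT
    RTRIM([object_name]) AS [object_name],
    RTRIM(counter_name)  AS counter_name,
    cntr_value / 1024    AS value_MB
FROM sys.dm_os_performance_counters
WHERE [object_name] LIKE N'%Memory Manager%'   -- default or named instance
  AND counter_name IN (N'Target Server Memory (KB)', N'Total Server Memory (KB)');
```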

The concepts of committed and target committed memory are important here to figure out how SQL Server manages its memory space. Committed memory represents the physical memory allocated by the SQL Server process, whereas target memory is the amount of memory SQL Server tries to maintain as committed memory, depending on different factors described in the BOL. By the way, from my experience, chances are the latter is close to the max server memory value in most scenarios.
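A quick way to check how close the target is to the configured cap is to put both values side by side. This is a sketch that joins sys.dm_os_sys_info with the max server memory setting from sys.configurations:

```sql
-- Compare committed memory, target memory and the configured max server memory.
SELECT
    si.committed_kb / 1024        AS committed_MB,
    si.committed_target_kb / 1024 AS committed_target_MB,
    CAST(c.value_in_use AS int)   AS max_server_memory_MB  -- value_in_use is sql_variant
FROM sys.dm_os_sys_info AS si
CROSS JOIN sys.configurations AS c
WHERE c.name = N'max server memory (MB)';
```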

But relying blindly on the committed memory may lead to a misinterpretation of what SQL Server really consumes over a specific period of time. Indeed, let’s say my SQL Server instance is capped at 2GB; after the daily business workload, here are the corresponding figures. The values in my customer’s context were of a different order of magnitude, but this demo illustrates the issue that motivated this write-up:

```sql
SELECT
    physical_memory_kb / 1024 AS physical_memory_MB,
    visible_target_kb,
    committed_kb,
    committed_target_kb
FROM sys.dm_os_sys_info;
```

The committed memory is about 365MB, far from the configured max server memory value (2GB). But now let’s say the database maintenance kicks in. Usually this is a nightly job, run on a daily or weekly basis, that generally includes an index rebuild task. Such a task usually reads all the data structures to get external fragmentation values through the DMF sys.dm_db_index_physical_stats(). This operation can touch structures that are not used during daily business and may have a huge impact on the buffer pool. In my case, here is the new memory state after executing this maintenance task:

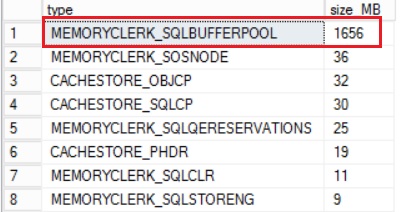

The game has changed here because SQL Server has committed all the memory up to the max server memory value. This time, we may go through the sys.dm_os_memory_clerks DMV to get details about the different memory clerks of my SQL Server instance. The pages_kb column is used because the instances run SQL Server 2014 (this column replaced single_pages_kb and multi_pages_kb starting with SQL Server 2012).

```sql
SELECT [type], pages_kb / 1024 AS size_MB
FROM sys.dm_os_memory_clerks
WHERE memory_node_id = 0
ORDER BY size_MB DESC;
```
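To see which databases the maintenance actually pulled into the buffer pool, a complementary sketch is to aggregate sys.dm_os_buffer_descriptors per database, for instance before and after the maintenance window. Each row of this DMV describes one 8 KB page, hence the `* 8 / 1024` conversion to MB. Be aware that scanning it may take a while on instances with a large buffer pool.

```sql
-- Buffer pool usage per database (one row in the DMV = one 8 KB page).
SELECT
    CASE database_id
        WHEN 32767 THEN N'ResourceDb'   -- hidden resource database
        ELSE DB_NAME(database_id)
    END                     AS database_name,
    COUNT_BIG(*) * 8 / 1024 AS buffer_pool_MB
FROM sys.dm_os_buffer_descriptors
GROUP BY database_id
ORDER BY buffer_pool_MB DESC;
```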

So, from now on, the committed memory is likely to stay close to the max server memory value, while in fact the daily business workload probably won’t need all this memory allocated to the SQL Server process. This is exactly why my customer asked me for a way to get a more realistic picture of the memory consumption of their SQL Server instances during daily business, excluding the nightly database maintenance workload.

We went with a solution that consisted of freeing up the committed memory before the daily business period starts and then letting memory grow gradually until it reaches its maximum usage. It is worth noting that, as far as I know, there is no easy way to free up committed memory: SQL Server may decrease it only if the corresponding target server memory value is lower. From my experience, this situation is more the exception than the rule, and therefore it is difficult to rely on it. One potential workaround might be to restart the SQL Server instance(s), but in my customer’s case restarting the database servers was not an option. Instead, we looked into a solution that forced SQL Server to make room by setting the max server memory close to the min server memory value. Don’t get me wrong, I don’t consider this a best practice but rather an emergency procedure because, like restarting a SQL Server instance, it may lead to a temporary but potentially significant impact, especially if the workload performance is directly tied to the buffer cache state (warm vs cold).

In addition, I would say that sizing the max server memory value for the daily business workload only may be controversial in many ways, and we have to consider some tradeoffs here. In my customer’s context, the main goal was to release “unused memory” from SQL Server instances during daily business to free up memory on the VMware ESX hosts, but there is no free lunch. For instance, the nightly workload may suddenly take longer if there is less room to work in memory. Another direct side effect of working with less memory might be an increase of I/O operations against the storage layer. In a nutshell, there is no black or white solution, and we have to deal with what we consider the best solution for the specific context.
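For reference, the emergency procedure of lowering max server memory close to min server memory and then restoring it can be sketched in T-SQL as follows. The memory values are hypothetical (512 MB as the temporary cap, 2048 MB as the original cap of the demo instance); adapt them to your own settings and keep in mind the side effects described above.

```sql
-- Emergency procedure sketch, not a best practice: shrinking the buffer pool
-- may temporarily hurt performance (cold cache), much like a restart.
-- 'max server memory (MB)' is an advanced option.
EXEC sys.sp_configure N'show advanced options', 1;
RECONFIGURE;

-- 1. Record the current settings so they can be restored afterwards.
SELECT name, value_in_use
FROM sys.configurations
WHERE name IN (N'min server memory (MB)', N'max server memory (MB)');

-- 2. Temporarily lower max server memory close to min server memory
--    (hypothetical value, must stay >= min server memory).
EXEC sys.sp_configure N'max server memory (MB)', 512;
RECONFIGURE;

-- 3. Check that committed memory has actually dropped (this may take a moment).
SELECT committed_kb / 1024        AS committed_MB,
       committed_target_kb / 1024 AS committed_target_MB
FROM sys.dm_os_sys_info;

-- 4. Restore the original cap (hypothetical 2048 MB, as in the demo) and let
--    committed memory grow back with the daily business workload.
EXEC sys.sp_configure N'max server memory (MB)', 2048;
RECONFIGURE;
```

Tracking committed_kb from sys.dm_os_sys_info during the business day after step 4 then gives an idea of how much memory the daily workload really needs, until the next maintenance run fills the buffer pool again.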

See you!

By David Barbarin
