During previous months, I’ve had some time to satisfy my curiosity about databases in containers and I started to test a little bit MySQL in Kubernetes.

This is how it all began…

In January I had the chance to be trained on Kubernetes attending the Docker and Kubernetes essentials Workshop of dbi services. So I decided to prepare a session on this topic at our internal dbi xChange event. And as if by magic, at the same time, a customer asked for our support to migrate a MySQL database to their Kubernetes cluster.

In general, I would like to raise two points before going into the technical details:

1. Is it a good idea to move databases into containers? Here I would use a typical IT answer: “it depends”. I can suggest you to think about your needs and constraints, if you have small images to deploy, about storage and persistence, performances, …

2. There are various solutions for installing, orchestrating and administering MySQL in K8s: MySQL single instance vs MySQL InnoDB Cluster, using MySQL Operator for Kubernetes or Helm Charts, on-premise but also through Oracle Container Engine for Kubernetes on OCI, … I recommend you to think about which are (again) your needs and skills, if you are already working on Cloud technologies, whether you have already set up DevOps processes and which ones, …

Here I will show you how to install a MySQL InnoDB Cluster in OKE using a MySQL Operator.

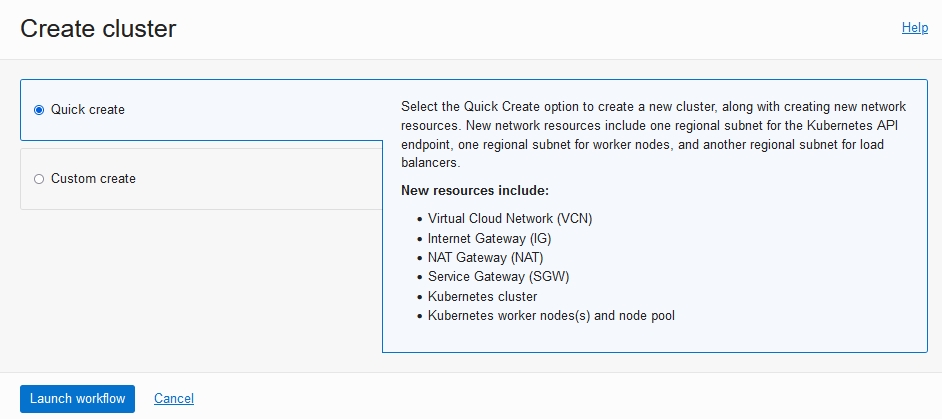

First thing is to have an account on Oracle OCI and have deployed an Oracle Container Engine for Kubernetes in your compartment. You can do it in an easy was using the Quick Create option under “Developer Services > Containers & Artifacts > Kubernetes Clusters (OKE)”:

In this way all the resources you need (VCN, Internet and NAT gateways, a K8s cluster with workers nodes and node pool) are there in one click:

elisa@cloudshell:~ (eu-zurich-1)$ kubectl cluster-info Kubernetes control plane is running at https://xxx.xx.xxx.xxx:6443 CoreDNS is running at https://xxx.xx.xxx.xxx:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'. elisa@cloudshell:~ (eu-zurich-1)$ kubectl get nodes -o wide NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME 10.0.10.36 Ready node 6m7s v1.22.5 10.0.10.36 yyy.yyy.yyy.yyy Oracle Linux Server 7.9 5.4.17-2136.304.4.1.el7uek.x86_64 cri-o://1.22.3-1.ci.el7 10.0.10.37 Ready node 6m1s v1.22.5 10.0.10.37 kkk.kkk.kkk.kk Oracle Linux Server 7.9 5.4.17-2136.304.4.1.el7uek.x86_64 cri-o://1.22.3-1.ci.el7 10.0.10.42 Ready node 6m v1.22.5 10.0.10.42 jjj.jj.jjj.jj Oracle Linux Server 7.9 5.4.17-2136.304.4.1.el7uek.x86_64 cri-o://1.22.3-1.ci.el7

As a second step, you can install the MySQL Operator for Kubernetes using kubectl:

elisa@cloudshell:~ (eu-zurich-1)$ kubectl apply -f https://raw.githubusercontent.com/mysql/mysql-operator/trunk/deploy/deploy-crds.yaml customresourcedefinition.apiextensions.k8s.io/innodbclusters.mysql.oracle.com created customresourcedefinition.apiextensions.k8s.io/mysqlbackups.mysql.oracle.com created customresourcedefinition.apiextensions.k8s.io/clusterkopfpeerings.zalando.org created customresourcedefinition.apiextensions.k8s.io/kopfpeerings.zalando.org created elisa@cloudshell:~ (eu-zurich-1)$ kubectl apply -f https://raw.githubusercontent.com/mysql/mysql-operator/trunk/deploy/deploy-operator.yaml serviceaccount/mysql-sidecar-sa created clusterrole.rbac.authorization.k8s.io/mysql-operator created clusterrole.rbac.authorization.k8s.io/mysql-sidecar created clusterrolebinding.rbac.authorization.k8s.io/mysql-operator-rolebinding created clusterkopfpeering.zalando.org/mysql-operator created namespace/mysql-operator created serviceaccount/mysql-operator-sa created deployment.apps/mysql-operator created

You can check the health of the MySQL Operator:

elisa@cloudshell:~ (eu-zurich-1)$ kubectl get deployment -n mysql-operator mysql-operator NAME READY UP-TO-DATE AVAILABLE AGE mysql-operator 1/1 1 1 24s elisa@cloudshell:~ (eu-zurich-1)$ kubectl get pods --show-labels -n mysql-operator NAME READY STATUS RESTARTS AGE LABELS mysql-operator-869d4b4b8d-slr4t 1/1 Running 0 113s name=mysql-operator,pod-template-hash=869d4b4b8d

To isolate resources, you can create a dedicated namespace for the MySQL InnoDB Cluster:

elisa@cloudshell:~ (eu-zurich-1)$ kubectl create namespace mysql-cluster namespace/mysql-cluster created

You should also create a Secret using kubectl to store MySQL user credentials that will be created and then required by pods to access to the MySQL server:

elisa@cloudshell:~ (eu-zurich-1)$ kubectl create secret generic elisapwd --from-literal=rootUser=root --from-literal=rootHost=% --from-literal=rootPassword="pwd" -n mysql-cluster secret/elisapwd created

You can check that the Secret was corrected created:

elisa@cloudshell:~ (eu-zurich-1)$ kubectl get secrets -n mysql-cluster NAME TYPE DATA AGE default-token-t2c47 kubernetes.io/service-account-token 3 2m elisapwd Opaque 3 34s elisa@cloudshell:~ (eu-zurich-1)$ kubectl describe secret/elisapwd -n mysql-cluster Name: elisapwd Namespace: mysql-cluster Labels: Annotations: Type: Opaque Data ==== rootHost: 1 bytes rootPassword: 7 bytes rootUser: 4 bytes

Now you have to write a .yaml configuration file to define how the MySQL InnoDB Cluster should be created. Here is a simple example:

elisa@cloudshell:~ (eu-zurich-1)$ vi InnoDBCluster_config.yaml

apiVersion: mysql.oracle.com/v2alpha1

kind: InnoDBCluster

metadata:

name: elisacluster

namespace: mysql-cluster

spec:

secretName: elisapwd

instances: 3

router:

instances: 1

At this point you can run a MySQL InnoDB Cluster applying the configuration that you just created:

elisa@cloudshell:~ (eu-zurich-1)$ kubectl apply -f InnoDBCluster_config.yaml innodbcluster.mysql.oracle.com/elisacluster created

You can finally check if the MySQL InnoDB Cluster has been successfully created:

elisa@cloudshell:~ (eu-zurich-1)$ kubectl get innodbcluster --watch --namespace mysql-cluster NAME STATUS ONLINE INSTANCES ROUTERS AGE elisacluster PENDING 0 3 1 12s elisacluster PENDING 0 3 1 103s elisacluster INITIALIZING 0 3 1 103s elisacluster INITIALIZING 0 3 1 103s elisacluster INITIALIZING 0 3 1 103s elisacluster INITIALIZING 0 3 1 104s elisacluster INITIALIZING 0 3 1 106s elisacluster ONLINE 1 3 1 107s elisa@cloudshell:~ (eu-zurich-1)$ kubectl get all -n mysql-cluster NAME READY STATUS RESTARTS AGE pod/elisacluster-0 2/2 Running 0 4h44m pod/elisacluster-1 2/2 Running 0 4h42m pod/elisacluster-2 2/2 Running 0 4h41m pod/elisacluster-router-7686457f5f-hwfcv 1/1 Running 0 4h42m NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE service/elisacluster ClusterIP 10.96.9.203 6446/TCP,6448/TCP,6447/TCP,6449/TCP 4h44m service/elisacluster-instances ClusterIP None 3306/TCP,33060/TCP,33061/TCP 4h44m NAME READY UP-TO-DATE AVAILABLE AGE deployment.apps/elisacluster-router 1/1 1 1 4h44m NAME DESIRED CURRENT READY AGE replicaset.apps/elisacluster-router-7686457f5f 1 1 1 4h44m NAME READY AGE statefulset.apps/elisacluster 3/3 4h44m

You can use port forwarding in the following way:

elisa@cloudshell:~ (eu-zurich-1)$ kubectl port-forward service/elisacluster mysql --namespace=mysql-cluster Forwarding from 127.0.0.1:6446 -> 6446

to access your MySQL InnoDB Cluster on a second terminal in order to check its health:

elisa@cloudshell:~ (eu-zurich-1)$ mysqlsh -h127.0.0.1 -P6446 -uroot -p Please provide the password for '[email protected]:6446': ******* Save password for '[email protected]:6446'? [Y]es/[N]o/Ne[v]er (default No): N MySQL Shell 8.0.28-commercial Copyright (c) 2016, 2022, Oracle and/or its affiliates. Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners. Type 'help' or '?' for help; 'quit' to exit. Creating a session to '[email protected]:6446' Fetching schema names for autocompletion... Press ^C to stop. Your MySQL connection id is 36651 Server version: 8.0.28 MySQL Community Server - GPL No default schema selected; type use to set one. MySQL 127.0.0.1:6446 ssl JS > MySQL 127.0.0.1:6446 ssl JS > dba.getCluster().status(); { "clusterName": "elisacluster", "defaultReplicaSet": { "name": "default", "primary": "elisacluster-0.elisacluster-instances.mysql-cluster.svc.cluster.local:3306", "ssl": "REQUIRED", "status": "OK", "statusText": "Cluster is ONLINE and can tolerate up to ONE failure.", "topology": { "elisacluster-0.elisacluster-instances.mysql-cluster.svc.cluster.local:3306": { "address": "elisacluster-0.elisacluster-instances.mysql-cluster.svc.cluster.local:3306", "memberRole": "PRIMARY", "memberState": "(MISSING)", "mode": "n/a", "readReplicas": {}, "role": "HA", "shellConnectError": "MySQL Error 2005: Could not open connection to 'elisacluster-0.elisacluster-instances.mysql-cluster.svc.cluster.local:3306': Unknown MySQL server host 'elisacluster-0.elisacluster-instances.mysql-cluster.svc.cluster.local' (-2)", "status": "ONLINE", "version": "8.0.28" }, "elisacluster-1.elisacluster-instances.mysql-cluster.svc.cluster.local:3306": { "address": "elisacluster-1.elisacluster-instances.mysql-cluster.svc.cluster.local:3306", "memberRole": "SECONDARY", "memberState": "(MISSING)", "mode": "n/a", "readReplicas": {}, "role": "HA", "shellConnectError": "MySQL Error 2005: Could not open connection to 'elisacluster-1.elisacluster-instances.mysql-cluster.svc.cluster.local:3306': Unknown MySQL server host 'elisacluster-1.elisacluster-instances.mysql-cluster.svc.cluster.local' (-2)", "status": "ONLINE", "version": "8.0.28" }, "elisacluster-2.elisacluster-instances.mysql-cluster.svc.cluster.local:3306": { "address": "elisacluster-2.elisacluster-instances.mysql-cluster.svc.cluster.local:3306", "memberRole": "SECONDARY", "memberState": "(MISSING)", "mode": "n/a", "readReplicas": {}, "role": "HA", "shellConnectError": "MySQL Error 2005: Could not open connection to 'elisacluster-2.elisacluster-instances.mysql-cluster.svc.cluster.local:3306': Unknown MySQL server host 'elisacluster-2.elisacluster-instances.mysql-cluster.svc.cluster.local' (-2)", "status": "ONLINE", "version": "8.0.28" } }, "topologyMode": "Single-Primary" }, "groupInformationSourceMember": "elisacluster-0.elisacluster-instances.mysql-cluster.svc.cluster.local:3306" } MySQL 127.0.0.1:6446 ssl JS > sql Switching to SQL mode... Commands end with ; MySQL 127.0.0.1:6446 ssl SQL > select @@hostname; +----------------+ | @@hostname | +----------------+ | elisacluster-0 | +----------------+ 1 row in set (0.0018 sec) MySQL 127.0.0.1:6446 ssl SQL > SELECT * FROM performance_schema.replication_group_members; +---------------------------+--------------------------------------+-----------------------------------------------------------------------+-------------+--------------+-------------+----------------+----------------------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK | +---------------------------+--------------------------------------+-----------------------------------------------------------------------+-------------+--------------+-------------+----------------+----------------------------+ | group_replication_applier | 717dbe17-ba71-11ec-8a91-3665daa9c822 | elisacluster-0.elisacluster-instances.mysql-cluster.svc.cluster.local | 3306 | ONLINE | PRIMARY | 8.0.28 | XCom | | group_replication_applier | b02c3c9a-ba71-11ec-8b65-5a93db09dda5 | elisacluster-1.elisacluster-instances.mysql-cluster.svc.cluster.local | 3306 | ONLINE | SECONDARY | 8.0.28 | XCom | | group_replication_applier | eb06aadd-ba71-11ec-8aac-aa31e5d7e08b | elisacluster-2.elisacluster-instances.mysql-cluster.svc.cluster.local | 3306 | ONLINE | SECONDARY | 8.0.28 | XCom | +---------------------------+--------------------------------------+-----------------------------------------------------------------------+-------------+--------------+-------------+----------------+----------------------------+ 3 rows in set (0.0036 sec)

Easy, right?

Yes, but databases containers is still a tricky subject. As we said above, many topics need to be addressed: deployment type, performances, backups, storage and persistence, … So stay tuned, more blog posts about MySQL on K8s will come soon…

By Elisa Usai

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/10/ELU_web-min-1-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/10/STS_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/STH_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/09/SNA_web-min-scaled.jpg)