What is Apache Kafka?

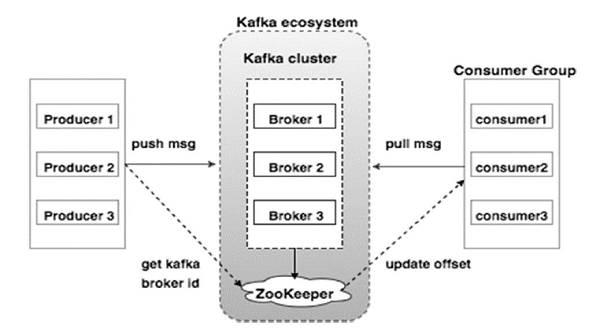

No, Kafka is not only the famous author (en.wikipedia.org/wiki/Franz_Kafka); it is also an open-source, distributed publish-subscribe messaging system with strong scalability and fault tolerance. Beyond messaging, it is a near-real-time stream processing platform for streaming data sources. Its design is strongly influenced by commit logs. Apache Kafka was originally developed at LinkedIn and was subsequently open-sourced in early 2011.
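The commit-log idea can be illustrated with a toy Bash sketch. It is only an illustration of the concept, not how Kafka actually stores or serves data: records are only ever appended to the end of a file, and each reader keeps its own position (its offset), so a new reader can replay the log from the start while another resumes where it stopped. The temporary file and the `append` and `read_from` functions are hypothetical.

```shell
#!/usr/bin/env bash
# Toy illustration of an append-only commit log (hypothetical names, not Kafka's storage format).
set -euo pipefail

log_file=$(mktemp)
trap 'rm -f "$log_file"' EXIT

# append <record>: add a record at the end; existing records are never modified
append() {
  printf '%s\n' "$1" >> "$log_file"
}

# read_from <offset>: print every record from a 0-based offset to the end of the log
read_from() {
  tail -n "+$(( $1 + 1 ))" "$log_file"
}

append "be passionate"
append "be successful"
append "be responsible"

read_from 0   # a new reader replays the whole log
read_from 2   # a reader that has already consumed offsets 0 and 1
```

`read_from 0` corresponds loosely to the `--from-beginning` option used with the console consumer later in this post.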

The installation is fairly simple, but it needs to be done rigorously.

Binary installation


- Prerequisites

Get a Linux server (I chose CentOS 7.3.1611); it can run on a small configuration (1 GB of memory minimum).

Connect as root or as a user with sudo rights, then update your system and reboot:

```
[root@osboxes ~]# yum update
Loaded plugins: fastestmirror, langpacks
Loading mirror speeds from cached hostfile
 * base: mirror.switch.ch
 * epel: mirror.uni-trier.de
 * extras: mirror.switch.ch
 * updates: mirror.switch.ch
No packages marked for update
```


- Install the latest OpenJDK 8 package and set up your environment:

```
[root@osboxes ~]# yum install java-1.8.0-openjdk
Loaded plugins: fastestmirror, langpacks
Loading mirror speeds from cached hostfile
 * base: mirror.switch.ch
 * epel: mirror.imt-systems.com
 * extras: mirror.switch.ch
 * updates: mirror.switch.ch
Package 1:java-1.8.0-openjdk-1.8.0.131-3.b12.el7_3.x86_64 already installed and latest version
Nothing to do
```

```
# Check it:
[root@osboxes ~]# java -version
openjdk version "1.8.0_131"
OpenJDK Runtime Environment (build 1.8.0_131-b12)
OpenJDK 64-Bit Server VM (build 25.131-b12, mixed mode)
```

```
# Update your .bash_profile:
export JAVA_HOME=/usr/lib/jvm/jre-1.8.0-openjdk
export JRE_HOME=/usr/lib/jvm/jre

# and source your profile:
[root@osboxes ~]# . ./.bash_profile
[root@osboxes ~]# echo $JAVA_HOME
/usr/lib/jvm/jre-1.8.0-openjdk
[root@osboxes ~]# echo $JRE_HOME
/usr/lib/jvm/jre
```
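Hard-coding the JVM path works, but the versioned directory behind it changes with each OpenJDK update. A minimal Bash sketch, assuming GNU `readlink -f` (as shipped with CentOS 7), that derives `JAVA_HOME` from whichever `java` binary is on the `PATH` by following the `/etc/alternatives` symlink chain. The `java_home_for` function is a hypothetical helper; on a CentOS 7 OpenJDK 8 install it should resolve to the versioned `.../jre` directory that `/usr/lib/jvm/jre-1.8.0-openjdk` points to.

```shell
#!/usr/bin/env bash
# Sketch: derive JAVA_HOME from the java binary on the PATH (java_home_for is a hypothetical helper).
# Assumes GNU readlink, whose -f option resolves every symlink in the chain.
set -euo pipefail

# java_home_for <path-to-java>: resolve symlinks, then strip the trailing "bin/java"
java_home_for() {
  local real_bin
  real_bin=$(readlink -f "$1")
  dirname "$(dirname "$real_bin")"
}

if command -v java >/dev/null 2>&1; then
  JAVA_HOME=$(java_home_for "$(command -v java)")
  export JAVA_HOME
  echo "JAVA_HOME=$JAVA_HOME"
fi
```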

- The Confluent Platform is an open-source platform that contains all the components you need to build a scalable data platform around Apache Kafka. Confluent Open Source is freely downloadable.

- Install Confluent's public key:

```
rpm --import http://packages.confluent.io/rpm/3.2/archive.key
```

- Add a confluent.repo file to /etc/yum.repos.d with the following content:

```
[Confluent.dist]
name=Confluent repository (dist)
baseurl=http://packages.confluent.io/rpm/3.2/7
gpgcheck=1
gpgkey=http://packages.confluent.io/rpm/3.2/archive.key
enabled=1

[Confluent]
name=Confluent repository
baseurl=http://packages.confluent.io/rpm/3.2
gpgcheck=1
gpgkey=http://packages.confluent.io/rpm/3.2/archive.key
enabled=1
```
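Instead of editing the file by hand, the same two repository definitions can be written from a script, which is handy when several servers need the same setup. A minimal Bash sketch; `write_confluent_repo` is a hypothetical helper that takes the target path as an argument, and writing into `/etc/yum.repos.d` requires root.

```shell
#!/usr/bin/env bash
# Sketch: write the Confluent 3.2 yum repository definitions to a .repo file.
# write_confluent_repo is a hypothetical helper; the target path is passed as an argument.
set -euo pipefail

# write_confluent_repo <target-file>
write_confluent_repo() {
  # quoted delimiter ('EOF'): the content is written literally, with no variable expansion
  cat > "$1" <<'EOF'
[Confluent.dist]
name=Confluent repository (dist)
baseurl=http://packages.confluent.io/rpm/3.2/7
gpgcheck=1
gpgkey=http://packages.confluent.io/rpm/3.2/archive.key
enabled=1

[Confluent]
name=Confluent repository
baseurl=http://packages.confluent.io/rpm/3.2
gpgcheck=1
gpgkey=http://packages.confluent.io/rpm/3.2/archive.key
enabled=1
EOF
}
```

Saved as, for example, `confluent-repo.sh` (an arbitrary name), it could be run with `sudo bash -c '. ./confluent-repo.sh && write_confluent_repo /etc/yum.repos.d/confluent.repo'`.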

- Clean your yum caches:

```
yum clean all
```

- And finally, install the open-source version of Confluent:

```
yum install confluent-platform-oss-2.11

Transaction Summary
================================================================================
Install  1 Package (+11 Dependent packages)

Total download size: 391 M
Installed size: 446 M
Is this ok [y/d/N]: y
Downloading packages:
(1/12): confluent-common-3.2.1-1.noarch.rpm | 2.0 MB 00:00:06
(2/12): confluent-camus-3.2.1-1.noarch.rpm | 20 MB 00:00:28
(3/12): confluent-kafka-connect-elasticsearch-3.2.1-1.noarch.rpm | 4.3 MB 00:00:06
(4/12): confluent-kafka-2.11-0.10.2.1-1.noarch.rpm | 38 MB 00:00:28
(5/12): confluent-kafka-connect-jdbc-3.2.1-1.noarch.rpm | 6.0 MB 00:00:07
(6/12): confluent-kafka-connect-hdfs-3.2.1-1.noarch.rpm | 91 MB 00:01:17
(7/12): confluent-kafka-connect-s3-3.2.1-1.noarch.rpm | 92 MB 00:01:18
(8/12): confluent-kafka-rest-3.2.1-1.noarch.rpm | 16 MB 00:00:16
(9/12): confluent-platform-oss-2.11-3.2.1-1.noarch.rpm | 6.7 kB 00:00:00
(10/12): confluent-rest-utils-3.2.1-1.noarch.rpm | 7.1 MB 00:00:06
(11/12): confluent-schema-registry-3.2.1-1.noarch.rpm | 27 MB 00:00:23
(12/12): confluent-kafka-connect-storage-common-3.2.1-1.noarch.rpm | 89 MB 00:01:08
--------------------------------------------------------------------------------
Total 2.2 MB/s | 391 MB 00:03:00
Running transaction check
Running transaction test
Transaction test succeeded
Running transaction
  Installing : confluent-common-3.2.1-1.noarch 1/12
  Installing : confluent-kafka-connect-storage-common-3.2.1-1.noarch 2/12
  Installing : confluent-rest-utils-3.2.1-1.noarch 3/12
  Installing : confluent-kafka-rest-3.2.1-1.noarch 4/12
  Installing : confluent-schema-registry-3.2.1-1.noarch 5/12
  Installing : confluent-kafka-connect-s3-3.2.1-1.noarch 6/12
  Installing : confluent-kafka-connect-elasticsearch-3.2.1-1.noarch 7/12
  Installing : confluent-kafka-connect-jdbc-3.2.1-1.noarch 8/12
  Installing : confluent-kafka-connect-hdfs-3.2.1-1.noarch 9/12
  Installing : confluent-kafka-2.11-0.10.2.1-1.noarch 10/12
  Installing : confluent-camus-3.2.1-1.noarch 11/12
  Installing : confluent-platform-oss-2.11-3.2.1-1.noarch 12/12
  Verifying  : confluent-kafka-connect-storage-common-3.2.1-1.noarch 1/12
  Verifying  : confluent-platform-oss-2.11-3.2.1-1.noarch 2/12
  Verifying  : confluent-rest-utils-3.2.1-1.noarch 3/12
  Verifying  : confluent-kafka-connect-elasticsearch-3.2.1-1.noarch 4/12
  Verifying  : confluent-kafka-connect-s3-3.2.1-1.noarch 5/12
  Verifying  : confluent-kafka-rest-3.2.1-1.noarch 6/12
  Verifying  : confluent-camus-3.2.1-1.noarch 7/12
  Verifying  : confluent-kafka-connect-jdbc-3.2.1-1.noarch 8/12
  Verifying  : confluent-schema-registry-3.2.1-1.noarch 9/12
  Verifying  : confluent-kafka-2.11-0.10.2.1-1.noarch 10/12
  Verifying  : confluent-kafka-connect-hdfs-3.2.1-1.noarch 11/12
  Verifying  : confluent-common-3.2.1-1.noarch 12/12

Installed:
  confluent-platform-oss-2.11.noarch 0:3.2.1-1

Dependency Installed:
  confluent-camus.noarch 0:3.2.1-1
  confluent-common.noarch 0:3.2.1-1
  confluent-kafka-2.11.noarch 0:0.10.2.1-1
  confluent-kafka-connect-elasticsearch.noarch 0:3.2.1-1
  confluent-kafka-connect-hdfs.noarch 0:3.2.1-1
  confluent-kafka-connect-jdbc.noarch 0:3.2.1-1
  confluent-kafka-connect-s3.noarch 0:3.2.1-1
  confluent-kafka-connect-storage-common.noarch 0:3.2.1-1
  confluent-kafka-rest.noarch 0:3.2.1-1
  confluent-rest-utils.noarch 0:3.2.1-1
  confluent-schema-registry.noarch 0:3.2.1-1

Complete!
```

OK, the binaries are now installed. The next step is to configure and launch ZooKeeper and then Kafka itself!


- First, take a look at the ZooKeeper configuration:

```
[root@osboxes kafka]# cat /etc/kafka/zookeeper.properties
# Licensed to the Apache Software Foundation (ASF) under one or more
# contributor license agreements. See the NOTICE file distributed with
# this work for additional information regarding copyright ownership.
# The ASF licenses this file to You under the Apache License, Version 2.0
# (the "License"); you may not use this file except in compliance with
# the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# the directory where the snapshot is stored.
dataDir=/var/lib/zookeeper
# the port at which the clients will connect
clientPort=2181
# disable the per-ip limit on the number of connections since this is a non-production config
maxClientCnxns=0
```
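When a script needs one of these values, for example `clientPort` to build the `--zookeeper localhost:2181` argument used later, it can read it from the file instead of hard-coding it. A minimal Bash/awk sketch with a hypothetical `prop` helper; it only handles simple `key=value` lines and is not a full Java properties parser (no `:` separators, no line continuations).

```shell
#!/usr/bin/env bash
# Sketch: read one value from a simple key=value properties file (prop is a hypothetical helper).
# Not a full Java .properties parser: ":" separators and line continuations are not handled.
set -euo pipefail

# prop <file> <key>: print the value of the last "key=value" line for <key>; exit 1 if absent
prop() {
  awk -v key="$2" '
    /^[ \t]*[#!]/ { next }                  # skip comment lines
    index($0, "=") > 0 {
      k = substr($0, 1, index($0, "=") - 1)
      v = substr($0, index($0, "=") + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)        # trim surrounding blanks
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      if (k == key) { val = v; found = 1 }
    }
    END { if (!found) exit 1; print val }
  ' "$1"
}
```

With the default file shown above, `prop /etc/kafka/zookeeper.properties clientPort` prints `2181`.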

- Leave the configuration file unchanged (the default values are fine to start with) and launch ZooKeeper:

```
/usr/bin/zookeeper-server-start /etc/kafka/zookeeper.properties
...
[2017-06-08 14:05:02,051] INFO binding to port 0.0.0.0/0.0.0.0:2181 (org.apache.zookeeper.server.NIOServerCnxnFactory)
```

- Keep the ZooKeeper session running, open a new terminal and start the Kafka broker:

```
/usr/bin/kafka-server-start /etc/kafka/server.properties
...
[2017-06-08 14:11:31,333] INFO Kafka version : 0.10.2.1-cp1 (org.apache.kafka.common.utils.AppInfoParser)
[2017-06-08 14:11:31,334] INFO Kafka commitId : 80ff5014b9e74a45 (org.apache.kafka.common.utils.AppInfoParser)
[2017-06-08 14:11:31,335] INFO [Kafka Server 0], started (kafka.server.KafkaServer)
[2017-06-08 14:11:31,350] INFO Waiting 10062 ms for the monitored broker to finish starting up... (io.confluent.support.metrics.MetricsReporter)
[2017-06-08 14:11:41,413] INFO Monitored broker is now ready (io.confluent.support.metrics.MetricsReporter)
[2017-06-08 14:11:41,413] INFO Starting metrics collection from monitored broker... (io.confluent.support.metrics.MetricsReporter)
```

- As with ZooKeeper, leave the Kafka terminal open and start a new session to create a topic.
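Starting each component in its own terminal works interactively; in a script, you would rather wait until ZooKeeper (port 2181) and then the broker (port 9092, the one the console producer connects to in this post) accept connections before moving on. A minimal sketch using Bash's built-in `/dev/tcp` redirection, so neither `nc` nor `telnet` is needed. `wait_for_port` is a hypothetical helper, and an accepted TCP connection only shows that something is listening, not that the service is fully ready.

```shell
#!/usr/bin/env bash
# Sketch: wait until a TCP port accepts connections (wait_for_port is a hypothetical helper).
# Relies on bash's /dev/tcp/<host>/<port> redirection; a connection only proves a listener exists.
set -euo pipefail

# wait_for_port <host> <port> <timeout-seconds>: return 0 once connectable, 1 after the timeout
wait_for_port() {
  local host=$1 port=$2 timeout=$3 waited=0
  # the subshell opens (and on exit closes) a connection; it fails if nothing is listening
  until (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; do
    if (( waited >= timeout )); then
      return 1
    fi
    sleep 1
    waited=$(( waited + 1 ))
  done
  return 0
}
```

For example: `wait_for_port localhost 2181 30 && /usr/bin/kafka-server-start /etc/kafka/server.properties`.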


Topic creation

- Messages in Kafka are organized into topics; a topic is similar to a table in a database or a directory in a file system.

First, let's create a new topic:

```
[root@osboxes ~]# /usr/bin/kafka-topics --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic dbi
Created topic "dbi".
```

- Check that the topic has actually been created:

```
[root@osboxes ~]# /usr/bin/kafka-topics --list --zookeeper localhost:2181
dbi
```
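A setup script that may run more than once should not try to create a topic that already exists. A minimal sketch that checks the `--list` output before calling `--create`; `topic_exists` and `ensure_topic` are hypothetical helpers, and the `KAFKA_TOPICS` and `ZOOKEEPER` variables default to the single-node values used above.

```shell
#!/usr/bin/env bash
# Sketch: create a topic only when it is not listed yet (hypothetical helpers, single-node defaults).
set -euo pipefail

KAFKA_TOPICS="${KAFKA_TOPICS:-/usr/bin/kafka-topics}"
ZOOKEEPER="${ZOOKEEPER:-localhost:2181}"

# topic_exists <name>: exact, fixed-string, whole-line match against the output of --list
topic_exists() {
  local topics
  topics=$("$KAFKA_TOPICS" --list --zookeeper "$ZOOKEEPER")
  grep -qxF -- "$1" <<< "$topics"
}

# ensure_topic <name> <partitions> <replication-factor>
ensure_topic() {
  local name=$1 partitions=$2 replication=$3
  if topic_exists "$name"; then
    echo "topic \"$name\" already exists"
  else
    "$KAFKA_TOPICS" --create --zookeeper "$ZOOKEEPER" \
      --replication-factor "$replication" --partitions "$partitions" --topic "$name"
  fi
}
```

`ensure_topic dbi 1 1` issues the same `--create` command as above the first time and only prints a message on later runs. `grep -F` is used because topic names may contain `.`, which would otherwise match any character.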


Nice, we can now produce some messages to the topic “dbi”:

```
[root@osboxes ~]# kafka-console-producer --broker-list localhost:9092 --topic dbi
be passionate
be successful
be responsible
be sharing
```

- Open a new terminal and act as a consumer with the console consumer:

```
/usr/bin/kafka-console-consumer --zookeeper localhost:2181 --topic dbi --from-beginning
be passionate
be successful
be responsible
be sharing
```

- Et voilà! The messages sent by the producer now appear in the consumer window. If you type a new message in the producer console, it is displayed immediately in the other terminal. To stop the consoles, press Ctrl-C.
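The console producer sends each line it reads on standard input as one message, so it can also be fed from a pipe instead of typing. A minimal sketch with hypothetical `produce_lines` and `consume_n` helpers; the commands and flags are the ones used above, plus the console consumer's `--max-messages` option, which makes it exit after a given number of messages instead of waiting for Ctrl-C. The `PRODUCER`, `CONSUMER`, `BROKERS` and `ZOOKEEPER` variables are assumptions defaulting to this single-node setup.

```shell
#!/usr/bin/env bash
# Sketch: produce and consume without interactive typing (hypothetical helpers, single-node defaults).
set -euo pipefail

PRODUCER="${PRODUCER:-kafka-console-producer}"
CONSUMER="${CONSUMER:-/usr/bin/kafka-console-consumer}"
BROKERS="${BROKERS:-localhost:9092}"
ZOOKEEPER="${ZOOKEEPER:-localhost:2181}"

# produce_lines <topic> <message>...: each argument becomes one line, i.e. one message
produce_lines() {
  local topic=$1
  shift
  printf '%s\n' "$@" | "$PRODUCER" --broker-list "$BROKERS" --topic "$topic"
}

# consume_n <topic> <count>: read from the beginning and exit after <count> messages
consume_n() {
  "$CONSUMER" --zookeeper "$ZOOKEEPER" --topic "$1" --from-beginning --max-messages "$2"
}
```

For example, `produce_lines dbi "be passionate" "be successful"` publishes two messages, and `consume_n dbi 4` prints the first four messages of the topic and then returns.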

The most difficult part is still ahead: configuring Kafka with multiple producers and consumers within a complex broker topology.
