If you want to learn Calico locally on your machine, you can use it with Minikube.

Minikube is a tool that sets up a Kubernetes environment on your local machine. It works on Windows, macOS and Linux and is very easy to install. Have a look at the Minikube documentation for the installation instructions: https://minikube.sigs.k8s.io/docs/start/

Calico is one of the Container Network Interface (CNI) plugins for Kubernetes; put simply, it allows all Pods to communicate with each other. Minikube offers a built-in Calico implementation, and the Calico documentation explains how to get started: https://projectcalico.docs.tigera.io/getting-started/kubernetes/minikube

Communication between 2 Pods

Once you’ve started Minikube with the Calico CNI plugin and the Calico Pods are up and running, you are ready to go. To explore Calico, let’s create a deployment with 2 Pods (one on each of my 2 Minikube nodes):

% kubectl create deployment busybox --image=busybox --replicas=2 -- sleep 8760h

% kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

busybox-f5945bfb5-mw26t 1/1 Running 0 22s 10.244.120.67 minikube <none> <none>

busybox-f5945bfb5-mwgr6 1/1 Running 0 22s 10.244.205.193 minikube-m02 <none> <none>

The first Pod (Pod1), on the node called “minikube”, has the IP address 10.244.120.67, and the second Pod (Pod2), on the node called “minikube-m02”, has the IP address 10.244.205.193.

Let’s have a look at a traceroute from Pod1 to Pod2:

% kubectl exec -it busybox-f5945bfb5-mw26t -- traceroute 10.244.205.193

traceroute to 10.244.205.193 (10.244.205.193), 30 hops max, 46 byte packets

1 10.244.120.64 (10.244.120.64) 0.017 ms 0.007 ms 0.005 ms

2 10.244.205.192 (10.244.205.192) 0.008 ms 0.008 ms 0.004 ms

3 10.244.205.193 (10.244.205.193) 0.008 ms 0.006 ms 0.003 ms

Let’s follow those packets end-to-end between the two Pods to better understand how networking with Calico and Minikube works:

Interfaces and Routing on Pod1

Let’s start by checking Pod1:

% kubectl exec -it busybox-f5945bfb5-mw26t -- ip address

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

3: ip6tnl0@NONE: <NOARP> mtu 1452 qdisc noop qlen 1000

link/tunnel6 00:00:00:00:00:00:00:00:00:00:00:00:00:00:00:00 brd 00:00:00:00:00:00:00:00:00:00:00:00:00:00:00:00

5: eth0@if47: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1480 qdisc noqueue

link/ether 8e:01:bd:36:9e:ed brd ff:ff:ff:ff:ff:ff

inet 10.244.120.67/32 brd 10.244.120.67 scope global eth0

valid_lft forever preferred_lft forever

% kubectl exec -it busybox-f5945bfb5-mw26t -- ip route

default via 169.254.1.1 dev eth0

169.254.1.1 dev eth0 scope link

I won’t overwhelm you with all the details, so I’ll summarize and simplify. Pod1 uses its eth0 interface, which holds the Pod IP address 10.244.120.67. Its only route is a default route via 169.254.1.1, an IPv4 link-local address. No interface actually owns that address: eth0 (eth0@if47) is one end of a veth pair whose other end is interface number 47 on the node, a cali* interface, and the node answers on behalf of 169.254.1.1 there (proxy ARP), so every packet leaving the Pod lands on the node. The tunl0 interface listed inside the Pod (second interface in the output above) is not up and carries no traffic there; the tunl0 that matters is the one on the node, which we will look at next. Its name comes from Calico’s IP-in-IP routing mode, the default in this setup. IP-in-IP is a simple form of encapsulation achieved by putting an IP packet inside another.

If another routing mode were configured, a different interface would appear instead of tunl0 (for example vxlan.calico in VXLAN mode), so the interface name also gives you a clue about the Calico configuration.
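To make the “IP packet inside another” idea more concrete, here is a minimal Python sketch of IP-in-IP encapsulation. It is only an illustration, not what Calico or the kernel actually run: the helper names (`ipv4_header`, `ipip_encapsulate`) are made up for this post, the headers carry no options, and the outer addresses are the node addresses that appear in the node outputs further down. It also shows where the mtu of 1480 comes from: a 1500-byte link minus the 20 bytes of the extra outer header.

```python
import socket
import struct

IPPROTO_IPIP = 4      # IANA protocol number for IPv4 encapsulated in IPv4
IPV4_HEADER_LEN = 20  # bytes, IPv4 header without options


def ipv4_checksum(header: bytes) -> int:
    """Ones' complement checksum over the 16-bit words of an IPv4 header."""
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def ipv4_header(src: str, dst: str, payload_len: int, proto: int) -> bytes:
    """Build a minimal 20-byte IPv4 header (no options, TTL 64)."""
    fields = [0x45, 0, IPV4_HEADER_LEN + payload_len, 0, 0, 64, proto, 0,
              socket.inet_aton(src), socket.inet_aton(dst)]
    header = struct.pack("!BBHHHBBH4s4s", *fields)
    fields[7] = ipv4_checksum(header)  # fill in the checksum field
    return struct.pack("!BBHHHBBH4s4s", *fields)


def ipip_encapsulate(inner_packet: bytes, outer_src: str, outer_dst: str) -> bytes:
    """Wrap an existing IPv4 packet in a new outer IPv4 header."""
    outer_header = ipv4_header(outer_src, outer_dst, len(inner_packet), IPPROTO_IPIP)
    return outer_header + inner_packet


# Inner packet: Pod1 -> Pod2 with 8 dummy payload bytes (protocol 1 = ICMP).
payload = b"\x00" * 8
inner = ipv4_header("10.244.120.67", "10.244.205.193", len(payload), 1) + payload

# Outer packet: node "minikube" -> node "minikube-m02".
outer = ipip_encapsulate(inner, "192.168.49.2", "192.168.49.3")

print(len(inner), len(outer))  # the outer packet is 20 bytes longer
print(1500 - IPV4_HEADER_LEN)  # room left for the inner packet on a 1500-byte link
```

The inner packet is untouched: it still says “from Pod1 to Pod2”, while the outer header only knows about the two nodes.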

Interfaces and Routing between Nodes

When the packet leaves Pod1, its first hop is 10.244.120.64 according to the traceroute output. This is the address of the tunl0 interface on the node “minikube”, which is that node’s end of the IP-in-IP tunnel. Because we are using Minikube, both our nodes are actually Docker containers, so let’s see how we can inspect them:

% docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

72dd21906820 gcr.io/k8s-minikube/kicbase:v0.0.33 "/usr/local/bin/entr…" 15 hours ago Up 15 hours 0.0.0.0:50541->22/tcp, 0.0.0.0:50542->2376/tcp, 0.0.0.0:50544->5000/tcp, 0.0.0.0:50545->8443/tcp, 0.0.0.0:50543->32443/tcp minikube-m02

b3b3002ac94b gcr.io/k8s-minikube/kicbase:v0.0.33 "/usr/local/bin/entr…" 36 hours ago Up 15 hours 0.0.0.0:50425->22/tcp, 0.0.0.0:50426->2376/tcp, 0.0.0.0:50423->5000/tcp, 0.0.0.0:50424->8443/tcp, 0.0.0.0:50427->32443/tcp minikube

Let’s check the interfaces of our Docker container “minikube”:

% docker exec -it b3b3002ac94b ip address

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP,UP,LOWER_UP> mtu 1480 qdisc noqueue state UNKNOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

inet 10.244.120.64/32 scope global tunl0

valid_lft forever preferred_lft forever

3: ip6tnl0@NONE: <NOARP> mtu 1452 qdisc noop state DOWN group default qlen 1000

link/tunnel6 :: brd ::

4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:29:4a:f4:a6 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

5: cni0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000

link/ether fa:42:7a:3c:cd:0c brd ff:ff:ff:ff:ff:ff

inet 10.85.0.1/16 brd 10.85.255.255 scope global cni0

valid_lft forever preferred_lft forever

43: cali7a49ac07849@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 1

44: calic74e40fd394@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 2

47: cali8d02ed71817@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 3

255: eth0@if256: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:c0:a8:31:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.49.2/24 brd 192.168.49.255 scope global eth0

valid_lft forever preferred_lft forever

% docker exec -it b3b3002ac94b ip route

default via 192.168.49.1 dev eth0

10.85.0.0/16 dev cni0 proto kernel scope link src 10.85.0.1 linkdown

blackhole 10.244.120.64/26 proto bird

10.244.120.65 dev cali7a49ac07849 scope link

10.244.120.66 dev calic74e40fd394 scope link

10.244.120.67 dev cali8d02ed71817 scope link

10.244.205.192/26 via 192.168.49.3 dev tunl0 proto bird onlink

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown

192.168.49.0/24 dev eth0 proto kernel scope link src 192.168.49.2

We can see that interface number 2 (tunl0) has the IP address 10.244.120.64 we saw in the output of traceroute.

The ip route output shows that traffic for Pod2, in the subnet 10.244.205.192/26, is sent into tunl0 with 192.168.49.3 as the next hop, which is the eth0 address of the node “minikube-m02”. The encapsulated packet leaves the node “minikube” through its own eth0 interface (IP address 192.168.49.2).
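If you want to convince yourself which route wins for a given destination, the selection can be sketched in a few lines of Python. This is a simplified longest-prefix match over the routes copied from the output above; the `ROUTES` list and the `lookup` helper are my own illustration, and the real kernel lookup considers more than this (metrics, link state, policy routing).

```python
import ipaddress

# Routes copied from the `ip route` output of node "minikube".
# The (prefix, action) layout is an assumption of this sketch.
ROUTES = [
    ("0.0.0.0/0", "via 192.168.49.1 dev eth0"),
    ("10.85.0.0/16", "dev cni0"),
    ("10.244.120.64/26", "blackhole"),
    ("10.244.120.65/32", "dev cali7a49ac07849"),
    ("10.244.120.66/32", "dev calic74e40fd394"),
    ("10.244.120.67/32", "dev cali8d02ed71817"),
    ("10.244.205.192/26", "via 192.168.49.3 dev tunl0"),
    ("172.17.0.0/16", "dev docker0"),
    ("192.168.49.0/24", "dev eth0"),
]


def lookup(destination: str) -> str:
    """Return the action of the most specific route containing destination."""
    addr = ipaddress.ip_address(destination)
    candidates = [
        (ipaddress.ip_network(prefix), action)
        for prefix, action in ROUTES
        if addr in ipaddress.ip_network(prefix)
    ]
    # The default route always matches, so candidates is never empty.
    _, action = max(candidates, key=lambda item: item[0].prefixlen)
    return action


print(lookup("10.244.205.193"))  # Pod2, on the other node
print(lookup("10.244.120.67"))   # Pod1, local to this node
print(lookup("10.244.120.100"))  # unallocated address in the local block
print(lookup("8.8.8.8"))         # anything else
```

Note how the /32 route of a local Pod beats the blackhole route of the /26 block it belongs to: only addresses of the block that have no Pod behind them are dropped.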

So let’s now check our Docker container “minikube-m02”:

% docker exec -it 72dd21906820 ip address

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP,UP,LOWER_UP> mtu 1480 qdisc noqueue state UNKNOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

inet 10.244.205.192/32 scope global tunl0

valid_lft forever preferred_lft forever

3: ip6tnl0@NONE: <NOARP> mtu 1452 qdisc noop state DOWN group default qlen 1000

link/tunnel6 :: brd ::

4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:a3:13:19:ef brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

261: eth0@if262: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:c0:a8:31:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.49.3/24 brd 192.168.49.255 scope global eth0

valid_lft forever preferred_lft forever

7: cali79f33e4263e@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 1

% docker exec -it 72dd21906820 ip route

default via 192.168.49.1 dev eth0

10.244.120.64/26 via 192.168.49.2 dev tunl0 proto bird onlink

blackhole 10.244.205.192/26 proto bird

10.244.205.193 dev cali79f33e4263e scope link

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown

192.168.49.0/24 dev eth0 proto kernel scope link src 192.168.49.3

On this node the encapsulated packet arrives on eth0 and is decapsulated by tunl0. To reach the destination 10.244.205.193, it is then routed to the interface cali79f33e4263e (Calico automatically creates one such interface for each Pod that gets its IP address from Calico).

We can see that tunl0 (interface number 2) has the IP address 10.244.205.192 which we saw in the output of traceroute.
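The addresses we have met so far line up neatly with the /26 blocks seen in the ip route outputs. The small Python sketch below, using only addresses copied from this post (the `block_of` helper is just an illustration), computes the block each address falls into. In this cluster each node’s tunl0 address turns out to be the first address of that node’s block; I would not rely on that being the case in every setup.

```python
import ipaddress

BLOCK_PREFIX_LEN = 26  # prefix length of the per-node routes in the outputs above

# Addresses copied from the outputs in this post.
POD_IPS = {"Pod1": "10.244.120.67", "Pod2": "10.244.205.193"}
TUNL0_IPS = {"minikube": "10.244.120.64", "minikube-m02": "10.244.205.192"}


def block_of(ip: str) -> ipaddress.IPv4Network:
    """Return the /26 block that contains ip."""
    return ipaddress.ip_network(f"{ip}/{BLOCK_PREFIX_LEN}", strict=False)


for name, ip in {**POD_IPS, **TUNL0_IPS}.items():
    block = block_of(ip)
    print(f"{name:13} {ip:15} block {block} ({block.num_addresses} addresses)")
```

Pod1 and the tunl0 of “minikube” share one block, Pod2 and the tunl0 of “minikube-m02” share the other, which is exactly what the per-node routes express.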

Interfaces and Routing on Pod2

Let’s finally check the interfaces and routes in Pod2:

% kubectl exec -it busybox-f5945bfb5-mwgr6 -- ip address

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

3: ip6tnl0@NONE: <NOARP> mtu 1452 qdisc noop qlen 1000

link/tunnel6 00:00:00:00:00:00:00:00:00:00:00:00:00:00:00:00 brd 00:00:00:00:00:00:00:00:00:00:00:00:00:00:00:00

5: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1480 qdisc noqueue

link/ether ea:17:ec:cd:14:b8 brd ff:ff:ff:ff:ff:ff

inet 10.244.205.193/32 brd 10.244.205.193 scope global eth0

valid_lft forever preferred_lft forever

% kubectl exec -it busybox-f5945bfb5-mwgr6 -- ip route

default via 169.254.1.1 dev eth0

169.254.1.1 dev eth0 scope link

This is similar to the Pod1 output: eth0 holds the IP address of the Pod we wanted to reach (10.244.205.193). Our packet reached it through the tunl0 and cali79f33e4263e interfaces of the node “minikube-m02”.
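The routing table of a Pod is tiny, and the following Python sketch shows why that is enough. It models a simplified next-hop decision with the values copied from the Pod2 outputs above (the `next_hop` helper is my own illustration, not how the kernel is implemented): because the Pod address is a /32, its subnet contains nothing but the Pod itself, so every other destination goes to the link-local gateway.

```python
import ipaddress

# Values copied from the `ip address` and `ip route` outputs of Pod2 above.
POD_INTERFACE = ipaddress.ip_interface("10.244.205.193/32")
DEFAULT_GATEWAY = ipaddress.ip_address("169.254.1.1")


def next_hop(destination: str) -> str:
    """Simplified next-hop choice: local, on-link, or via the default gateway."""
    dest = ipaddress.ip_address(destination)
    if dest == POD_INTERFACE.ip:
        return "local"
    if dest in POD_INTERFACE.network:
        return "on-link"  # cannot happen with a /32: the network holds one address
    return f"via {DEFAULT_GATEWAY}"


print(POD_INTERFACE.network.num_addresses)  # size of the Pod's own subnet
print(DEFAULT_GATEWAY.is_link_local)        # 169.254.0.0/16 is the link-local range
print(next_hop("10.244.120.67"))            # Pod1, on the other node
print(next_hop("192.168.49.3"))             # even the Pod's own node
```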

Summary

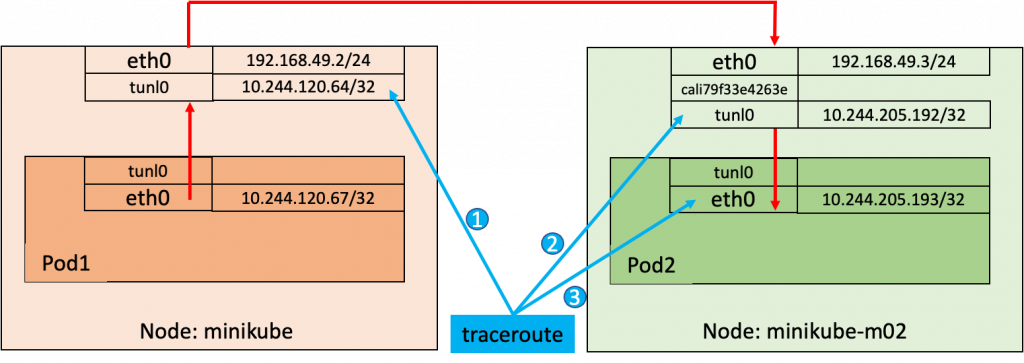

It is always better to look at the whole picture with a diagram. This is the traceroute from Pod1 to Pod2:

The IP Addresses seen in the output of traceroute appear in the order represented by the numbers in this diagram.

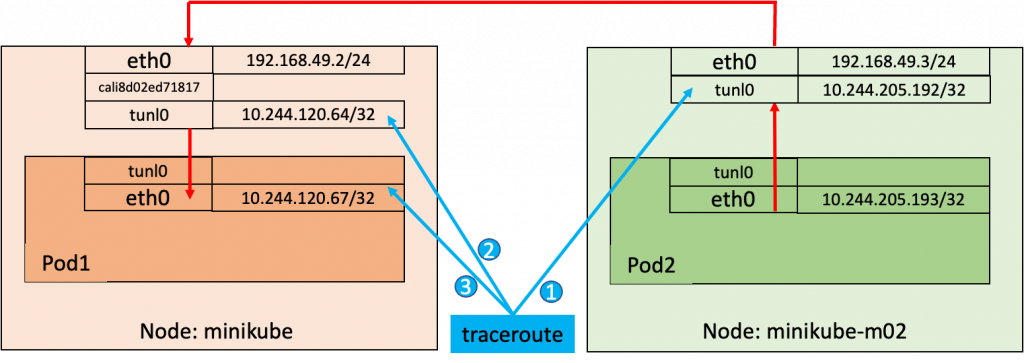

Just for fun, let’s have a look at the traceroute diagram from Pod2 to Pod1:
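The hop order can also be written down as a small Python sketch. It encodes only what we observed above for two Pods on different nodes: first the tunl0 address of the source node, then the tunl0 address of the destination node, then the destination Pod. The `NODES` table and both helpers are made up for illustration. The Pod1 to Pod2 result matches the traceroute output at the beginning of this post; the reverse direction is what I would expect by symmetry, so check it against a real traceroute on your own cluster.

```python
import ipaddress

# Per-node data copied from the outputs in this post.
NODES = {
    "minikube": {"block": "10.244.120.64/26", "tunl0": "10.244.120.64"},
    "minikube-m02": {"block": "10.244.205.192/26", "tunl0": "10.244.205.192"},
}


def node_of(pod_ip: str) -> str:
    """Find the node whose address block contains pod_ip."""
    addr = ipaddress.ip_address(pod_ip)
    for name, data in NODES.items():
        if addr in ipaddress.ip_network(data["block"]):
            return name
    raise ValueError(f"{pod_ip} is not in any known block")


def expected_hops(src_pod: str, dst_pod: str) -> list:
    """Hops a traceroute is expected to show between Pods on different nodes."""
    src_node, dst_node = node_of(src_pod), node_of(dst_pod)
    if src_node == dst_node:
        raise ValueError("this sketch only covers Pods on different nodes")
    return [NODES[src_node]["tunl0"], NODES[dst_node]["tunl0"], dst_pod]


print(expected_hops("10.244.120.67", "10.244.205.193"))  # Pod1 -> Pod2
print(expected_hops("10.244.205.193", "10.244.120.67"))  # Pod2 -> Pod1
```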

Conclusion

We have seen that Calico and Minikube add some complexity to the routing by adding their own network interfaces. I’ve left out the details of how the kernel knows how to route packets from one interface to another on the nodes and Pods, as well as the details of ARP, proxy ARP and iptables. However, you now have the basics to follow an end-to-end communication between 2 IP addresses in a Kubernetes cluster with a similar Calico setup.

We’ve only scratched the surface of Calico: we could also look at the Calico components (BIRD, confd, Felix) in more detail, or dive into the iptables rules that Calico uses by default. Those may be topics for future posts, so stay tuned!
