Dealing with dynamic filters is a common scenario with management software or ERP. Indeed, users should like to have the flexibility to filter and sort their business data as they want in order to be efficient. Target and operate quickly on the right data is in line with the required performance every day. This is at least what I could notice from different customer places.

So, through my mandates at customer places, I could see various T-SQL implementations of dynamic filters, and some of them do not comply with performance best practices. The blog post doesn’t aim to provide one-size-fits-all solutions for such issues but only expose a compilation of different implementations I encountered so far. Please feel free to comment or add your thoughs!

Firstly, the most common implementation I’ve seen so far the use of the well-known “kitchen Sink” anti-pattern. You may see a good example with the following stored procedure (My examples are based on the AdventureWorks database to stay on simple explanations. Of course in customer scenarios, this kind of stored procedure may be a little bit complex)

create procedure PR_get_customer_sales_info ( @SalesOrderID int = null, @LastName nvarchar(50) = null, @StoreName nvarchar(50) = null, @AccountNumber varchar(10) = null ) as select p.FirstName + '.' + p.LastName as customer_name, c.AccountNumber, h.OrderDate, d.SalesOrderID as order_id, d.SalesOrderDetailID as order_detail_id, d.UnitPrice * d.OrderQty as total_sale, s.Name as store_name, s.Demographics from Sales.SalesOrderHeader as h join Sales.SalesOrderDetail as d on h.SalesOrderID = d.SalesOrderID join Sales.Customer as c on c.CustomerID = h.CustomerID join Person.Person as p on p.BusinessEntityID = c.PersonID join Sales.Store as s on s.BusinessEntityID = c.StoreID where 1=1 and (h.SalesOrderID = @SalesOrderID or @SalesOrderID is null) and (p.LastName = @LastName or @LastName is null) and (s.Name = @StoreName or @StoreName is null) and (c.AccountNumber = @AccountNumber or @AccountNumber is null)

The main idea here is to satisfy a variety of search conditions from the application users. If you take a look at the predicate, you will recognize a common pattern used by developers (I used myself the same pattern in the past when I was a T-SQL developer). Writing this kind of predicate is very simple (and maybe intuitive). If the application user doesn’t want to filter data, the clause 1=1 will come into play. Otherwise, if the same user wants to apply more restrictive filters by using either one or a set of parameters, the procedure still remains flexible to meet the user requirement.

But simplicity doesn’t rely always on performance in all cases and unfortunately we are unlucky here. Indeed, we may face performance issues with this kind of T-SQL statement because of the parameter sniffing phenomena. To understand what I mean, let’s say that the first compilation of this stored procedure is based on the predicate filter 1=1 only. The application user doesn’t want to apply filter to his data. So we just have to execute our stored procedure without specifying any parameters as follows:

exec dbo.PR_get_customer_sales_info

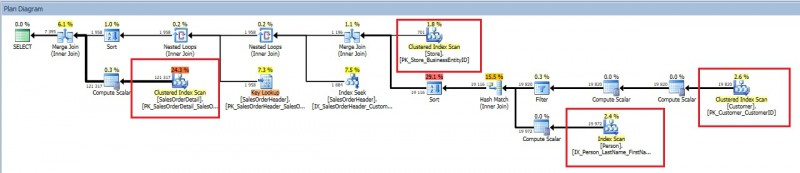

Chances are that SQL Server will choose to use index scan operators and this is what we’ll notice by retrieving the correspond execution plan in the cache.

select t.text, p.query_plan, cp.refcounts, cp.usecounts, cp.cacheobjtype, cp.size_in_bytes from sys.dm_exec_cached_plans as cp cross apply sys.dm_exec_sql_text(cp.plan_handle) as t cross apply sys.dm_exec_query_plan(cp.plan_handle) as p where t.text like '%from Sales.SalesOrderHeader as h%' and t.text not like '%sys.dm_exec_cache_plans%'

![]()

…

The execution plan is now in cache such as the next executions will use it. This time, let’s say another application user wants to use a completely different predicate that is more restrictive compared to the previous query as follow. So the new execution of the stored procedure will be as follows:

exec dbo.PR_get_customer_sales_info @SalesOrderID = 47454

The query returns 37 rows against 60919 from the previous query. At this point, we may guess that this query will potentially use index seek operators rather than index scan operators but as you may expect, the former will not be chosen by SQL Server because the second query execution will be based on the existing plan in cache. So let’s imagine a stored procedure with much more parameters and the number of combinations of search predicates and values we may get. There is a good probability that the execution plan becomes quickly obsolete.

We may avoid this issue by using the recompile option either at the statement or the stored procedure level but at the price of a resource wasting at each execution.

Let’s continue with the second alternative I faced recently and that consists in using dynamic SQL. In addition, in this specific case, the number of parameters may be large and was completely dynamic such as we didn’t know in advance which parameters the application users will use to filter their data. The solution found by my customer consisted in transferring the parameters and their respective values as a string chain to the stored procedure from the URL application as follows (I don’t remember the exact structure of the stored procedure).

create procedure PR_get_customer_sales_info_dynamic ( @parameters varchar(max) = null ) as declare @where varchar(max); set @where = case when @parameters is not null then 'AND ' + REPLACE(@parameters, ';', ' AND ') else '' end declare @sql varchar(max) = N' select p.FirstName + ''.'' + p.LastName as customer_name, c.AccountNumber, h.OrderDate, d.SalesOrderID as order_id, d.SalesOrderDetailID as order_detail_id, d.UnitPrice * d.OrderQty as total_sale, s.Name as store_name, s.Demographics from Sales.SalesOrderHeader as h join Sales.SalesOrderDetail as d on h.SalesOrderID = d.SalesOrderID join Sales.Customer as c on c.CustomerID = h.CustomerID join Person.Person as p on p.BusinessEntityID = c.PersonID join Sales.Store as s on s.BusinessEntityID = c.StoreID where 1=1' + char(13) set @sql = @sql + @where; exec(@sql); go

At the first glance, it could be a better idea than the previous stored procedure even if writing this kind of T-SQL code may be more difficult. Note that this time the query is dynamically constructed and includes only the minimal predicate clause that will probably lead to a better execution plan.

Let’s execute this second stored procedure with two different parameters as the previous test:

declare @parameters varchar(max) = null; exec dbo.PR_get_customer_sales_info_dynamic @parameters = @parameters; go declare @parameters varchar(max) = 'h.SalesOrderID=47454'; exec dbo.PR_get_customer_sales_info_dynamic @parameters = @parameters; go

And let’s have a look at the cached execution plan cache:

Well, we may notice that new stored procedure is reused but let’s focus on the two others lines below: we have two other lines related to each execution of the dynamic T-SQL statement inside the procedure. Take a look at the objtype column value and you will understand why we will get as many lines as the number of stored procedure executions. You may test by yourself by changing only the parameter values … For example in my case, after changing the value of the SalesOrderID parameter I got an additional record in the execution plan cache:

declare @parameters varchar(max) = 'h.SalesOrderID=47453' exec dbo.PR_get_customer_sales_info_dynamic @parameters = @parameters;

So keep in mind that executing dynamic T-SQL code with EXEC command is always considered as adhoc queries by SQL Server. It means that the related execution plan will be generated and only once for a specific set of parameters and values as well. However since the 2005, there are some optimizations on this topic because SQL Server is able to recompile at the statement level instead of the entire procedure level leading to reduce considerably the CPU consumption compared to SQL Server 2000.

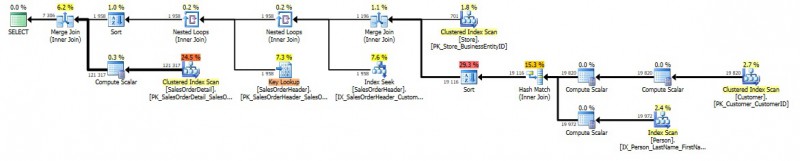

By taking a look at the query plans, we may notice that each is more suitable for each statement execution context.

declare @parameters varchar(max) = null; exec dbo.PR_get_customer_sales_info_dynamic @parameters = @parameters; go

declare @parameters varchar(max) = 'h.SalesOrderID=47454'; exec dbo.PR_get_customer_sales_info_dynamic @parameters = @parameters; go

Using this solution has some advantages but also some important drawbacks:

- The execution plan cache may be bloated by a lot of batch compilations plans. You may mitigate this issue by using the server level option “Optimize for adhoc workload” if your workload is suitable.

- Even if SQL Server is able to compile only the concerned batch, the compilation stuff is there and may be part of general compilation issue regarding the workload. It was the case with one my customers.

Finally, the previous method may be enhanced by changing the way to execute the dynamic SQL statement. We must use system stored procedure sp_executesql instead of the EXEC command. In the context of dynamic parameters, if we use the same way than the previous procedure, that will be more challenging because we will also have to provide the type of each parameter.

If it is technically possible, I personally prefer to use stored procedures parameters instead of passing the parameters inside a string chain from a web URL to avoid exposing applications to malicious attacks. From my point of view, this is not a good idea but I would like to hear you about this point…

Of course, if we face a large amount of parameters, we will probably write a very big stored procedure to finally use maybe 5% of the parameters. Yes, this a trade-off to find here but trust me, in the most cases, it will finally benefit the performance.

Let’s rewrite the previous stored procedure by preparing correctly the dynamic T-SQL.

create procedure PR_get_customer_sales_info_dynamic_enhanced ( @SalesOrderID int = null, @LastName nvarchar(50) = null, @StoreName nvarchar(50) = null, @AccountNumber varchar(10) = null ) as declare @where nvarchar(max) = N''; declare @parameters nvarchar(max) = N''; if @SalesOrderID is not null set @where = N'and h.SalesOrderID = @SalesOrderID' + CHAR(13); if @LastName is not null set @where = @where + N'and c.LastName = N''@LastName''' + CHAR(13); if @StoreName is not null set @where = @where + N'and s.Name = N''@StoreName''' + CHAR(13); if @AccountNumber is not null set @where = @where + N'and c.AccountNumber = N''@AccountNumber''' + CHAR(13); declare @sql nvarchar(max) = N' select p.FirstName + ''.'' + p.LastName as customer_name, c.AccountNumber, h.OrderDate, d.SalesOrderID as order_id, d.SalesOrderDetailID as order_detail_id, d.UnitPrice * d.OrderQty as total_sale, s.Name as store_name, s.Demographics from Sales.SalesOrderHeader as h join Sales.SalesOrderDetail as d on h.SalesOrderID = d.SalesOrderID join Sales.Customer as c on c.CustomerID = h.CustomerID join Person.Person as p on p.BusinessEntityID = c.PersonID join Sales.Store as s on s.BusinessEntityID = c.StoreID where 1=1' + CHAR(13); set @sql = @sql + @where; set @parameters = N'@SalesOrderID INT, @LastName nvarchar(50), @StoreName nvarchar(50), @AccountNumber varchar(10)'; exec sp_executesql @sql, @parameters, @SalesOrderID, @LastName, @StoreName, @AccountNumber; go

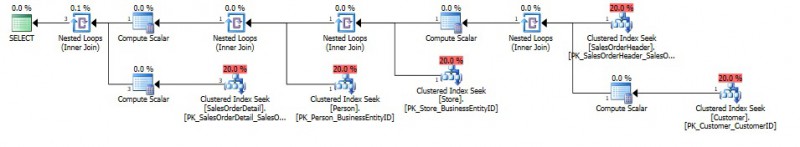

Yes I agree we are far from the first procedure but this last version will probably help you in the most cases in terms of performance. Let’s have a look at the execution plan cache:

This time, each batch is considered as prepared by SQL Server and can be reused as a normal compiled object. So, we will probably have as many rows as we have searched filter variations but that’s all. What about parameters sniffing? It is a legitimate question here because as said above, prepared queries are considered as compiled objects and may suffer from parameters sniffing. Depending on your data distribution, you still have the possibility to add hints like OPTIMIZE FOR.

In this blog post, we’ve seen that dealing with “kitchen sink” procedures is not easy. Unfortunately, we don’t ‘have any perfect solution but what is certain is that some solutions are generally better than others.

Happy development!

By David Barbarin

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/12/microsoft-square.png)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/STH_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/DWI_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2024/03/AHI_web.jpg)