This write-up follows my first article about helm chart with SQL Server. This time, I would like to cover the availability groups topic and how to deploy them with helm charts.

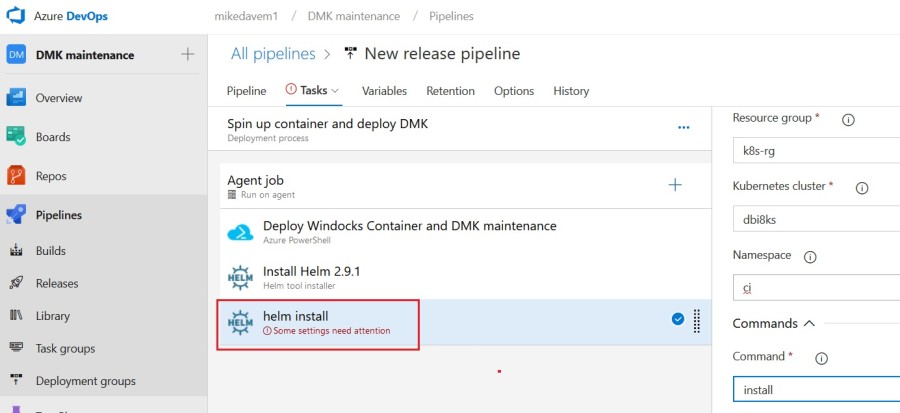

In fact, to go through this feature for AGs was motivated to its usage in our Azure DevOps CI pipeline in order to deploy a configurable one on an AKS cluster with SQL Server 2019.

If you look carefully at the release pipeline, Windocks is also another product we are using for our integration testing with SQL Server containers and I will probably explain more on this topic in a future blog post. But this time I would like to share some experiences with the construction of the AG helm chart.

First of all let’s precise I used the content provided by Microsoft on GitHub to deploy availability groups on K8s. This is a new functionality of SQL Server 2019 and we run actually with CTP 2.1 version. Chances are things will likely change over the time and I may bet Microsoft will release their own helm chart in the future. Anyway, for me it was an interesting opportunity to deep dive in helm charts feature.

First step I ran into was the parametrization (one big interest of Helm) of the existing template with input values including AG’s name, image container repository and tag used for deployment and different service settings like service type, service port and target service port.

Here one of my values.yaml file:

# General parameters agname: ag1 acceptEula: true # Container parameters agentsContainerImage: repository: mcr.microsoft.com/mssql/ha tag: 2019-CTP2.1-ubuntu pullPolicy: IfNotPresent sqlServerContainer: repository: mcr.microsoft.com/mssql/server tag: 2019-CTP2.1-ubuntu pullPolicy: IfNotPresent # Service parameters sqlservice: type: LoadBalancer port: 1433 agservice: type: LoadBalancer port: 1433

As a reminder, services on K8s are a way to expose pods to the outside world. I also introduced some additional labels for the purpose of querying the system. This is basically the same labels used in the stable template on GitHub.

labels:

…

app: {{ template "dbi_mssql_ag.name" . }}

chart: {{ .Chart.Name }}-{{ .Chart.Version | replace "+" "_" }}

release: {{ .Release.Name }}

heritage: {{ .Release.Service }}

Here a sample of my ag-sqlserver-deployment.yaml file with parametrization stuff:

apiVersion: mssql.microsoft.com/v1

kind: SqlServer

metadata:

labels:

name: mssql1

type: sqlservr

app: {{ template "dbi_mssql_ag.name" . }}

chart: {{ .Chart.Name }}-{{ .Chart.Version | replace "+" "_" }}

release: {{ .Release.Name }}

heritage: {{ .Release.Service }}

name: mssql1

namespace: {{ .Release.Namespace }}

spec:

acceptEula: true

agentsContainerImage: {{ .Values.agentsContainerImage.repository }}:{{ .Values.agentsContainerImage.tag }}

availabilityGroups: [{{ .Values.agname }}]

instanceRootVolumeClaimTemplate:

accessModes: [ReadWriteOnce]

resources:

requests: {storage: 5Gi}

storageClass: default

saPassword:

secretKeyRef: {key: sapassword, name: sql-secrets}

masterKeyPassword:

secretKeyRef: {key: masterkeypassword, name: sql-secrets}

sqlServerContainer: {image: '{{ .Values.sqlServerContainer.repository }}:{{ .Values.sqlServerContainer.tag }}'}

In addition, for a sake of clarity, I also took the opportunity to break the YAML files provided by Microsoft into different pieces including AG operator, AG RBAC configuration (security), AG instances and resources and AG services files. But you may wonder (like me) how to control the ordering of object’s creation? Well, it is worth noting that Helm collects all of the resources in a given Chart and it’s dependencies, groups them by resource type, and then installs them in a pre-defined order.

Let’ say I also removed the existing namespace’s creation from the existing YAML file because currently helm charts are not able to create one if it doesn’t exist. From a security perspective my concern was to keep the release deployment under control through helm charts so I preferred to precise the namespace directly in the –namespace parameter as well as creating the sql-secrets object which contains both sa and master key passwords manually according to the Microsoft documentation. In this way, you may segregate the permissions tiller has on a specific namespace and in my case, tiller has access to the ci namespace only.

Here comes likely the most interesting part. During the first deployment attempt, I had to face was the dependency that exists between the AG operator, SQL Server and AG resources as stated here:

The operator implements and registers the custom resource definition for SQL Server and the Availability Group resources.

The custom resource definition (CRD) is one of the first components with the deployment of the AG operator. You may retrieve an API service v1.mssql.microsoft.com as show below:

$ kubectl describe apiservice v1.mssql.microsoft.com

Name: v1.mssql.microsoft.com

Namespace:

Labels: kube-aggregator.kubernetes.io/automanaged=true

Annotations: <none>

API Version: apiregistration.k8s.io/v1

Kind: APIService

Metadata:

Creation Timestamp: 2019-01-17T01:45:19Z

Resource Version: 283588

Self Link: /apis/apiregistration.k8s.io/v1/apiservices/v1.mssql.microsoft.com

UID: 8b90159d-19f9-11e9-96ba-ee2da997daf5

Spec:

Group: mssql.microsoft.com

Group Priority Minimum: 1000

Service: <nil>

Version: v1

Version Priority: 100

Status:

Conditions:

Last Transition Time: 2019-01-17T01:45:19Z

Message: Local APIServices are always available

Reason: Local

Status: True

Type: Available

Events: <none>

Then the API is referenced in the YAML file that contains the definition of SQL Server resource objects through the following elements:

apiVersion: mssql.microsoft.com/v1 kind: SqlServer

As you probably guessed, if this API is missing on your K8s cluster at the moment of installing the AG resources you’ll probably face the following error message:

Error: [unable to recognize “”: no matches for kind “SqlServer” in version “mssql.microsoft.com/v1”, unable to recognize “”: no matches for kind “SqlServer” in version “mssql.microsoft.com/v1”, unable to recognize “”: no matches for kind “SqlServer” in version “mssql.microsoft.com/v1”]

At this stage, referring to the Helm documentation, I decided to split my initial release deployment into 2 separate helm charts. Between the 2 suggested methods in the documentation I much prefer this one because updating / removing releases is little bit easier but at the cost of introducing an additional chart in the game. With the CRD hook method, the CRD is not attached to a specific chart deployment, so if we need to change something in the CRD, it doesn’t get updated in the cluster unless we tear down the chart and install it again. This also means that we can’t add a CRD to a chart that has already been deployed. Finally, I took a look at the charts dependency feature but it doesn’t fix my issue at all because chart validation seems to come before the completion of the custom API. This is at least what I noticed with the current version of my helm version (v2.12.1). Probably one area to investigate for Microsoft …

So let’s continue … Here the structure of my two helm charts (respectively for my AG resources and my AG operator).

$ tree /f

───dbi_mssql_ag

│ │ .helmignore

│ │ Chart.yaml

│ │ values.yaml

│ │

│ ├───charts

│ └───templates

│ │ ag-services.yaml

│ │ ag-sqlserver-deployment.yaml

│ │ NOTES.txt

│ │ _helpers.tpl

│ │

│ └───tests

└───dbi_mssql_operator

│ .helmignore

│ Chart.yaml

│ values.yaml

│

├───charts

└───templates

│ ag-operator-deployment.yaml

│ ag-security.yaml

│ NOTES.txt

│ _helpers.tpl

│

└───tests

The deployment consists in deploying the two charts in the correct order:

$ helm install --name ag-2019-o --namespace ci .\dbi_mssql_operator\ … $ helm install --name ag-2019 --namespace ci .\dbi_mssql_ag\ … $ helm ls NAME REVISION UPDATED STATUS CHART APP VERSION NAMESPACE ag-2019 1 Wed Jan 16 23:48:33 2019 DEPLOYED dbi-mssql-ag-1 2019.0.0 ci ag-2019-o 1 Wed Jan 16 23:28:17 2019 DEPLOYED dbi-mssql-ag-1 2019.0.0 ci … $ kubectl get all -n ci NAME READY STATUS RESTARTS AGE pod/mssql-initialize-mssql1-hb9xs 0/1 Completed 0 2m pod/mssql-initialize-mssql2-47n99 0/1 Completed 0 2m pod/mssql-initialize-mssql3-67lzn 0/1 Completed 0 2m pod/mssql-operator-7bc948fdc-45qw5 1/1 Running 0 22m pod/mssql1-0 2/2 Running 0 2m pod/mssql2-0 2/2 Running 0 2m pod/mssql3-0 2/2 Running 0 2m NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE service/ag1 ClusterIP None <none> 1433/TCP,5022/TCP 2m service/ag1-primary LoadBalancer 10.0.62.75 xx.xx.xxx.xx 1433:32377/TCP 2m service/mssql1 LoadBalancer 10.0.45.155 xx.xx.xxx.xxx 1433:31756/TCP 2m service/mssql2 LoadBalancer 10.0.104.145 xx.xx.xxx.xxx 1433:31285/TCP 2m service/mssql3 LoadBalancer 10.0.51.142 xx.xx.xxx.xxx 1433:31002/TCP 2m NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE deployment.apps/mssql-operator 1 1 1 1 22m NAME DESIRED CURRENT READY AGE replicaset.apps/mssql-operator-7bc948fdc 1 1 1 22m NAME DESIRED CURRENT AGE statefulset.apps/mssql1 1 1 2m statefulset.apps/mssql2 1 1 2m statefulset.apps/mssql3 1 1 2m NAME DESIRED SUCCESSFUL AGE job.batch/mssql-initialize-mssql1 1 1 2m job.batch/mssql-initialize-mssql2 1 1 2m job.batch/mssql-initialize-mssql3 1 1 2m

After that, my AG is deployed and ready!

Let me know if you want to try it and feel free to comment!. Apologize I don’t use a GitHub repository so far but things may be changing this year 🙂

Bear in mind SQL Server 2019 is still in CTP version at the moment of this write-up. Things may change more or less until the first GA …

Happy AG deployment on K8s!

By David Barbarin

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/12/microsoft-square.png)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/10/STS_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/STH_web-min-scaled.jpg)