Deploying a Cloudera distribution of Hadoop automatically saves a lot of time. Infrastructure as Code tools such as Ansible, Puppet, Chef and Terraform now make it possible to provision, manage and deploy the configuration of large clusters.

In this blog post series, we will see how to deploy and install a CDH cluster in the Azure cloud with Terraform and Ansible.

The first part consists of provisioning the environment in Azure with Terraform. Terraform relies on plugins called providers to interact with cloud platforms such as Azure and AWS. For Azure, refer to the Terraform documentation of the AzureRM provider.

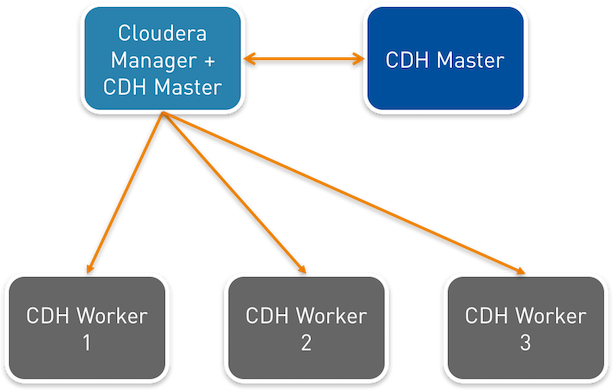

Desired architecture

The diagram above shows the target architecture for our CDH environment: 5 nodes for a test infrastructure, namely a Cloudera Manager node, a second master node for the Hadoop Secondary NameNode, and 3 worker nodes.

Prerequisites

Terraform must be installed on your system (see https://docs.microsoft.com/en-us/azure/virtual-machines/linux/terraform-install-configure#install-terraform).

Terraform authenticates against Azure with a Client ID and a Client Secret, which you can generate with the Azure CLI:

1. Sign in to administer your Azure subscription:

[root@centos Terraform]# az login

2. Get the subscription ID and tenant ID:

[root@centos Terraform]# az account show --query "{subscriptionId:id, tenantId:tenantId}"

3. Create a service principal dedicated to Terraform, with SUBSCRIPTION_ID set to the subscription ID returned in step 2:

[root@centos Terraform]# az ad sp create-for-rbac --role="Contributor" --scopes="/subscriptions/${SUBSCRIPTION_ID}"

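4. Save the following information (in the output of the previous command, appId is the client_id, password is the client_secret and tenant is the tenant_id):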

- subscription_id

- client_id

- client_secret

- tenant_id

We are now ready to use Terraform with the AzureRM provider.
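Instead of writing these four values into a file, you can also let the AzureRM provider read them from the ARM_SUBSCRIPTION_ID, ARM_CLIENT_ID, ARM_CLIENT_SECRET and ARM_TENANT_ID environment variables. A minimal sketch, assuming those variables are exported in the shell that runs Terraform: this provider block would replace the one in variables.tf, and the four credential variables and their entries in var_values.tfvars could then be removed.

```hcl
# Alternative provider configuration: no credentials stored in .tf or .tfvars files.
# Assumes ARM_SUBSCRIPTION_ID, ARM_CLIENT_ID, ARM_CLIENT_SECRET and ARM_TENANT_ID
# are exported in the environment before running terraform plan / apply.
provider "azurerm" {}
```

This keeps the client secret out of files that might end up in version control.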

Build your cluster

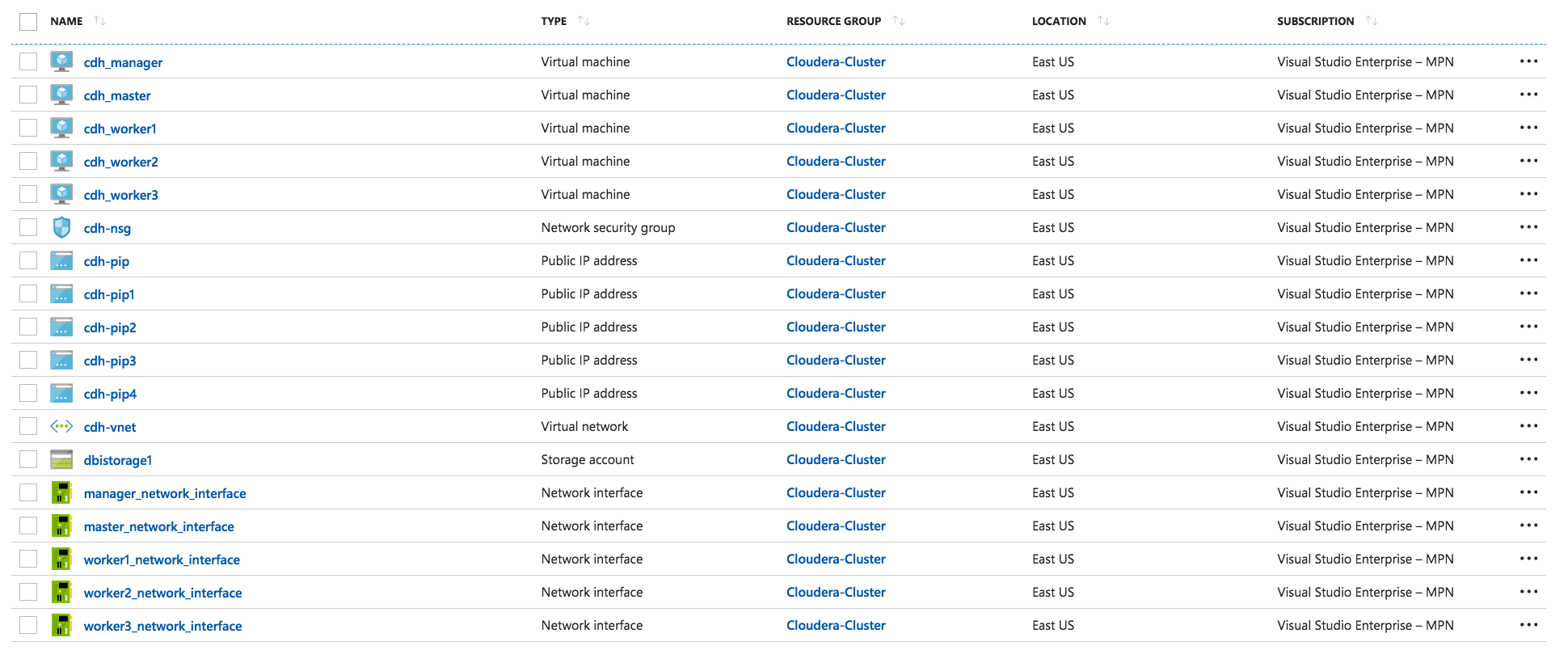

With Terraform, we will provision the following resources in Azure:

- A resource group – “Cloudera-Cluster”

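- 1 virtual network – “cdh-vnet”, with 1 subnet – “Subnet-1”

- 1 network security group – “cdh-nsg”

- 1 storage account – “dbistorage1”, with 1 container – “vhds”

- 5 network interfaces – “<instance>_network_interface” (e.g. “manager_network_interface”)

- 5 static public IPs – “cdh-pip” to “cdh-pip4” (the private IPs are assigned dynamically on the network interfaces)

- 5 virtual machines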

First, we create a variables file, variables.tf, which configures the Azure provider and declares all the variables we need, without their values. The values are set in the var_values.tfvars file.

variables.tf

```hcl
/* Configure the Azure provider and declare all the variables used in the Terraform configuration */

provider "azurerm" {
  subscription_id = "${var.subscription_id}"
  client_id       = "${var.client_id}"
  client_secret   = "${var.client_secret}"
  tenant_id       = "${var.tenant_id}"
}

variable "subscription_id" {
  description = "Enter Subscription ID for provisioning resources in Azure"
}

variable "client_id" {
  description = "Enter Client ID for Application created in Azure AD"
}

variable "client_secret" {
  description = "Enter Client secret for Application in Azure AD"
}

variable "tenant_id" {
  description = "Enter Tenant ID / Directory ID of your Azure AD. Run az account show to find your Tenant ID"
}

variable "location" {
  description = "The default Azure region for the resource provisioning"
}

variable "resource_group_name" {
  description = "Resource group name that will contain various resources"
}

variable "vnet_cidr" {
  description = "CIDR block for Virtual Network"
}

variable "subnet1_cidr" {
  description = "CIDR block for Subnet within a Virtual Network"
}

variable "subnet2_cidr" {
  description = "CIDR block for Subnet within a Virtual Network"
}

variable "vm_username_manager" {
  description = "Enter admin username to SSH into Linux VM"
}

variable "vm_username_master" {
  description = "Enter admin username to SSH into Linux VM"
}

variable "vm_username_worker1" {
  description = "Enter admin username to SSH into Linux VM"
}

variable "vm_username_worker2" {
  description = "Enter admin username to SSH into Linux VM"
}

variable "vm_username_worker3" {
  description = "Enter admin username to SSH into Linux VM"
}

variable "vm_password" {
  description = "Enter admin password to SSH into VM"
}
```

var_values.tfvars

```hcl
subscription_id     = "xxxxxxx"
client_id           = "xxxxxxx"
client_secret       = "xxxxxxx"
tenant_id           = "xxxxxxx"
location            = "YOUR-LOCATION"
resource_group_name = "Cloudera-Cluster"
vnet_cidr           = "192.168.0.0/16"
subnet1_cidr        = "192.168.1.0/24"
subnet2_cidr        = "192.168.2.0/24"
vm_username_manager = "dbi"
vm_username_master  = "dbi"
vm_username_worker1 = "dbi"
vm_username_worker2 = "dbi"
vm_username_worker3 = "dbi"
vm_password         = "YOUR-PASSWORD"
```
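The five username variables all receive the same value. As a design alternative, a single variable with a default value could serve all VMs; a sketch, where the variable name vm_username is hypothetical and not part of the original configuration:

```hcl
# Hypothetical simplification of variables.tf: one admin username shared by all
# five VMs, replacing vm_username_manager, vm_username_master and vm_username_worker1-3.
variable "vm_username" {
  description = "Admin username used to SSH into every Linux VM"
  default     = "dbi" # used when var_values.tfvars does not set vm_username
}

# Each os_profile block in vm.tf would then read:
#   admin_username = "${var.vm_username}"
```

Separate variables remain useful if you really want a different admin account per role.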

Next, we configure the resource group and the virtual network, with a single subnet for all hosts.

network.tf

```hcl
resource "azurerm_resource_group" "terraform_rg" {
  name     = "${var.resource_group_name}"
  location = "${var.location}"
}

resource "azurerm_virtual_network" "vnet" {
  name                = "cdh-vnet"
  address_space       = ["${var.vnet_cidr}"]
  location            = "${var.location}"
  resource_group_name = "${azurerm_resource_group.terraform_rg.name}"

  # "tags = { ... }" is accepted by Terraform 0.11 and 0.12+ ("tags { ... }" only by 0.11)
  tags = {
    group = "Cloudera-Cluster"
  }
}

# Single subnet shared by all hosts
resource "azurerm_subnet" "subnet_1" {
  name                 = "Subnet-1"
  address_prefix       = "${var.subnet1_cidr}"
  virtual_network_name = "${azurerm_virtual_network.vnet.name}"
  resource_group_name  = "${azurerm_resource_group.terraform_rg.name}"
}
```
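The variables file also declares subnet2_cidr, which network.tf does not use. If you prefer to split the hosts across two subnets (for example masters and workers), a second subnet could be declared in the same way. A sketch, where the resource name subnet_2 and the subnet name Subnet-2 are assumptions; a network interface would then reference it with subnet_id = "${azurerm_subnet.subnet_2.id}".

```hcl
# Optional second subnet built from the subnet2_cidr variable (192.168.2.0/24 in var_values.tfvars)
resource "azurerm_subnet" "subnet_2" {
  name                 = "Subnet-2"
  address_prefix       = "${var.subnet2_cidr}"
  virtual_network_name = "${azurerm_virtual_network.vnet.name}"
  resource_group_name  = "${azurerm_resource_group.terraform_rg.name}"
}
```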

Next, we create a storage account in the resource group, with a private container named vhds that will hold the virtual hard disks (VHDs) of the VMs.

storage.tf

```hcl
resource "azurerm_storage_account" "storage" {
  name                     = "dbistorage1"
  resource_group_name      = "${azurerm_resource_group.terraform_rg.name}"
  location                 = "${var.location}"
  account_tier             = "Standard"
  account_replication_type = "LRS"

  tags = {
    group = "Cloudera-Cluster"
  }
}

# Container for the OS and data disk VHDs of the VMs
resource "azurerm_storage_container" "container" {
  name                  = "vhds"
  resource_group_name   = "${azurerm_resource_group.terraform_rg.name}"
  storage_account_name  = "${azurerm_storage_account.storage.name}"
  container_access_type = "private"
}
```
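Storage account names must be globally unique across Azure and consist of 3 to 24 lowercase letters and digits, so dbistorage1 may already be taken when you apply the configuration. A sketch, assuming the HashiCorp random provider (downloaded by terraform init), that appends a random suffix; the resource name storage_suffix is hypothetical.

```hcl
# 4 random bytes rendered as 8 lowercase hex characters,
# so "dbistorage" + suffix stays within the 24-character limit.
resource "random_id" "storage_suffix" {
  byte_length = 4
}

# In storage.tf, the name argument of azurerm_storage_account.storage would become:
#   name = "dbistorage${random_id.storage_suffix.hex}"
```

The suffix is generated once and stored in the Terraform state, so it stays stable across later plan and apply runs.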

The next step is to create a network security group shared by all VMs, with 2 inbound rules that allow SSH (port 22) and HTTP (port 80) connections from anywhere. This is not secure, but keep in mind that this is a short-lived test infrastructure.

security_group.tf

```hcl
resource "azurerm_network_security_group" "nsg_cluster" {
  name                = "cdh-nsg"
  location            = "${var.location}"
  resource_group_name = "${azurerm_resource_group.terraform_rg.name}"

  # SSH (port 22) from any source
  security_rule {
    name                       = "AllowSSH"
    priority                   = 100
    direction                  = "Inbound"
    access                     = "Allow"
    protocol                   = "Tcp"
    source_port_range          = "*"
    destination_port_range     = "22"
    source_address_prefix      = "*"
    destination_address_prefix = "*"
  }

  # HTTP (port 80) from the "Internet" service tag
  security_rule {
    name                       = "AllowHTTP"
    priority                   = 200
    direction                  = "Inbound"
    access                     = "Allow"
    protocol                   = "Tcp"
    source_port_range          = "*"
    destination_port_range     = "80"
    source_address_prefix      = "Internet"
    destination_address_prefix = "*"
  }

  tags = {
    group = "Cloudera-Cluster"
  }
}
```
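Note that the Cloudera Manager Admin Console listens on port 7180 by default, not on port 80. A sketch of an additional rule, assuming that default port and restricting access to your own public IP (YOUR-PUBLIC-IP is a placeholder). It is written as an inline block to add inside the nsg_cluster resource, because the AzureRM provider does not support mixing inline security_rule blocks with standalone azurerm_network_security_rule resources on the same group.

```hcl
  # Hypothetical third rule, to place inside resource "azurerm_network_security_group" "nsg_cluster"
  security_rule {
    name                       = "AllowClouderaManager"
    priority                   = 300
    direction                  = "Inbound"
    access                     = "Allow"
    protocol                   = "Tcp"
    source_port_range          = "*"
    destination_port_range     = "7180" # default Cloudera Manager HTTP port
    source_address_prefix      = "YOUR-PUBLIC-IP/32"
    destination_address_prefix = "*"
  }
```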

Next, we create a static public IP and a network interface for each instance. The private IP of each interface is assigned dynamically from the subnet.

ip.tf

```hcl
# All network interfaces are attached to Subnet-1 (one subnet for all hosts)

# Cloudera Manager
resource "azurerm_public_ip" "manager_pip" {
  name                         = "cdh-pip"
  location                     = "${var.location}"
  resource_group_name          = "${azurerm_resource_group.terraform_rg.name}"
  public_ip_address_allocation = "static"

  tags = {
    group = "Cloudera-Cluster"
  }
}

resource "azurerm_network_interface" "public_nic" {
  name                      = "manager_network_interface"
  location                  = "${var.location}"
  resource_group_name       = "${azurerm_resource_group.terraform_rg.name}"
  network_security_group_id = "${azurerm_network_security_group.nsg_cluster.id}"

  ip_configuration {
    name                          = "manager_ip"
    subnet_id                     = "${azurerm_subnet.subnet_1.id}"
    private_ip_address_allocation = "dynamic"
    public_ip_address_id          = "${azurerm_public_ip.manager_pip.id}"
  }

  tags = {
    group = "Cloudera-Cluster"
  }
}

# Master
resource "azurerm_public_ip" "master_pip" {
  name                         = "cdh-pip1"
  location                     = "${var.location}"
  resource_group_name          = "${azurerm_resource_group.terraform_rg.name}"
  public_ip_address_allocation = "static"

  tags = {
    group = "Cloudera-Cluster"
  }
}

resource "azurerm_network_interface" "public_nic1" {
  name                      = "master_network_interface"
  location                  = "${var.location}"
  resource_group_name       = "${azurerm_resource_group.terraform_rg.name}"
  network_security_group_id = "${azurerm_network_security_group.nsg_cluster.id}"

  ip_configuration {
    name                          = "master_ip"
    subnet_id                     = "${azurerm_subnet.subnet_1.id}"
    private_ip_address_allocation = "dynamic"
    public_ip_address_id          = "${azurerm_public_ip.master_pip.id}"
  }

  tags = {
    group = "Cloudera-Cluster"
  }
}

# Worker 1
resource "azurerm_public_ip" "worker1_pip" {
  name                         = "cdh-pip2"
  location                     = "${var.location}"
  resource_group_name          = "${azurerm_resource_group.terraform_rg.name}"
  public_ip_address_allocation = "static"

  tags = {
    group = "Cloudera-Cluster"
  }
}

resource "azurerm_network_interface" "public_nic2" {
  name                      = "worker1_network_interface"
  location                  = "${var.location}"
  resource_group_name       = "${azurerm_resource_group.terraform_rg.name}"
  network_security_group_id = "${azurerm_network_security_group.nsg_cluster.id}"

  ip_configuration {
    name                          = "worker1_ip"
    subnet_id                     = "${azurerm_subnet.subnet_1.id}"
    private_ip_address_allocation = "dynamic"
    public_ip_address_id          = "${azurerm_public_ip.worker1_pip.id}"
  }

  tags = {
    group = "Cloudera-Cluster"
  }
}

# Worker 2
resource "azurerm_public_ip" "worker2_pip" {
  name                         = "cdh-pip3"
  location                     = "${var.location}"
  resource_group_name          = "${azurerm_resource_group.terraform_rg.name}"
  public_ip_address_allocation = "static"

  tags = {
    group = "Cloudera-Cluster"
  }
}

resource "azurerm_network_interface" "public_nic3" {
  name                      = "worker2_network_interface"
  location                  = "${var.location}"
  resource_group_name       = "${azurerm_resource_group.terraform_rg.name}"
  network_security_group_id = "${azurerm_network_security_group.nsg_cluster.id}"

  ip_configuration {
    name                          = "worker2_ip"
    subnet_id                     = "${azurerm_subnet.subnet_1.id}"
    private_ip_address_allocation = "dynamic"
    public_ip_address_id          = "${azurerm_public_ip.worker2_pip.id}"
  }

  tags = {
    group = "Cloudera-Cluster"
  }
}

# Worker 3
resource "azurerm_public_ip" "worker3_pip" {
  name                         = "cdh-pip4"
  location                     = "${var.location}"
  resource_group_name          = "${azurerm_resource_group.terraform_rg.name}"
  public_ip_address_allocation = "static"

  tags = {
    group = "Cloudera-Cluster"
  }
}

resource "azurerm_network_interface" "public_nic4" {
  name                      = "worker3_network_interface"
  location                  = "${var.location}"
  resource_group_name       = "${azurerm_resource_group.terraform_rg.name}"
  network_security_group_id = "${azurerm_network_security_group.nsg_cluster.id}"

  ip_configuration {
    name                          = "worker3_ip"
    subnet_id                     = "${azurerm_subnet.subnet_1.id}"
    private_ip_address_allocation = "dynamic"
    public_ip_address_id          = "${azurerm_public_ip.worker3_pip.id}"
  }

  tags = {
    group = "Cloudera-Cluster"
  }
}
```
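The five public IP / network interface pairs in ip.tf differ only by their names. A hedged sketch of the same resources generated with Terraform's count meta-argument, assuming a hypothetical hosts list variable; it would replace ip.tf rather than complement it, and the generated public IP names (cdh-pip-manager, cdh-pip-master, ...) differ from the ones above.

```hcl
# Hypothetical list of hosts; one public IP and one NIC are created per entry.
variable "hosts" {
  default = ["manager", "master", "worker1", "worker2", "worker3"]
}

resource "azurerm_public_ip" "pip" {
  count                        = "${length(var.hosts)}"
  name                         = "cdh-pip-${element(var.hosts, count.index)}"
  location                     = "${var.location}"
  resource_group_name          = "${azurerm_resource_group.terraform_rg.name}"
  public_ip_address_allocation = "static"

  tags = {
    group = "Cloudera-Cluster"
  }
}

resource "azurerm_network_interface" "nic" {
  count                     = "${length(var.hosts)}"
  name                      = "${element(var.hosts, count.index)}_network_interface"
  location                  = "${var.location}"
  resource_group_name       = "${azurerm_resource_group.terraform_rg.name}"
  network_security_group_id = "${azurerm_network_security_group.nsg_cluster.id}"

  ip_configuration {
    name                          = "${element(var.hosts, count.index)}_ip"
    subnet_id                     = "${azurerm_subnet.subnet_1.id}"
    private_ip_address_allocation = "dynamic"
    # Pair each NIC with the public IP at the same index
    public_ip_address_id          = "${element(azurerm_public_ip.pip.*.id, count.index)}"
  }

  tags = {
    group = "Cloudera-Cluster"
  }
}
```

Each VM would then reference its interface by index, e.g. network_interface_ids = ["${element(azurerm_network_interface.nic.*.id, 0)}"] for the manager. The trade-off is that a host's position in the list becomes part of its identity: reordering the list makes Terraform recreate resources.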

Once the network, the storage and the security group are configured, we can provision the VM instances with the following configuration:

- 5 instances

- Master and Manager VM size: Standard_DS3_v2

- Worker VM size: Standard_DS2_v2

- CentOS 7.3

- 1 OS disk and 1 data disk of 100 GB per VM

vm.tf

```hcl
# Cloudera Manager
resource "azurerm_virtual_machine" "la_manager" {
  name                  = "cdh_manager"
  location              = "${var.location}"
  resource_group_name   = "${azurerm_resource_group.terraform_rg.name}"
  network_interface_ids = ["${azurerm_network_interface.public_nic.id}"]
  vm_size               = "Standard_DS3_v2"

  # Delete the OS disk automatically when the VM is deleted
  delete_os_disk_on_termination = true

  storage_image_reference {
    publisher = "OpenLogic"
    offer     = "CentOS"
    sku       = "7.3"
    version   = "latest"
  }

  storage_os_disk {
    name          = "osdisk-1"
    vhd_uri       = "${azurerm_storage_account.storage.primary_blob_endpoint}${azurerm_storage_container.container.name}/osdisk-1.vhd"
    caching       = "ReadWrite"
    create_option = "FromImage"
  }

  # Optional data disk
  storage_data_disk {
    name          = "data"
    vhd_uri       = "${azurerm_storage_account.storage.primary_blob_endpoint}${azurerm_storage_container.container.name}/data1.vhd"
    disk_size_gb  = 100
    create_option = "Empty"
    lun           = 0
  }

  os_profile {
    computer_name  = "manager"
    admin_username = "${var.vm_username_manager}"
    admin_password = "${var.vm_password}"
  }

  os_profile_linux_config {
    disable_password_authentication = false
  }

  tags = {
    group = "Cloudera-Cluster"
  }
}

# Master (Secondary NameNode)
resource "azurerm_virtual_machine" "la_master" {
  name                  = "cdh_master"
  location              = "${var.location}"
  resource_group_name   = "${azurerm_resource_group.terraform_rg.name}"
  network_interface_ids = ["${azurerm_network_interface.public_nic1.id}"]
  vm_size               = "Standard_DS3_v2"

  # Delete the OS disk automatically when the VM is deleted
  delete_os_disk_on_termination = true

  storage_image_reference {
    publisher = "OpenLogic"
    offer     = "CentOS"
    sku       = "7.3"
    version   = "latest"
  }

  storage_os_disk {
    name          = "osdisk-2"
    vhd_uri       = "${azurerm_storage_account.storage.primary_blob_endpoint}${azurerm_storage_container.container.name}/osdisk-2.vhd"
    caching       = "ReadWrite"
    create_option = "FromImage"
  }

  # Optional data disk
  storage_data_disk {
    name          = "data"
    vhd_uri       = "${azurerm_storage_account.storage.primary_blob_endpoint}${azurerm_storage_container.container.name}/data2.vhd"
    disk_size_gb  = 100
    create_option = "Empty"
    lun           = 0
  }

  os_profile {
    computer_name  = "master"
    admin_username = "${var.vm_username_master}"
    admin_password = "${var.vm_password}"
  }

  os_profile_linux_config {
    disable_password_authentication = false
  }

  tags = {
    group = "Cloudera-Cluster"
  }
}

# Worker 1
resource "azurerm_virtual_machine" "la_worker1" {
  name                  = "cdh_worker1"
  location              = "${var.location}"
  resource_group_name   = "${azurerm_resource_group.terraform_rg.name}"
  network_interface_ids = ["${azurerm_network_interface.public_nic2.id}"]
  vm_size               = "Standard_DS2_v2"

  # Delete the OS disk automatically when the VM is deleted
  delete_os_disk_on_termination = true

  storage_image_reference {
    publisher = "OpenLogic"
    offer     = "CentOS"
    sku       = "7.3"
    version   = "latest"
  }

  storage_os_disk {
    name          = "osdisk-3"
    vhd_uri       = "${azurerm_storage_account.storage.primary_blob_endpoint}${azurerm_storage_container.container.name}/osdisk-3.vhd"
    caching       = "ReadWrite"
    create_option = "FromImage"
  }

  # Optional data disk
  storage_data_disk {
    name          = "data"
    vhd_uri       = "${azurerm_storage_account.storage.primary_blob_endpoint}${azurerm_storage_container.container.name}/data3.vhd"
    disk_size_gb  = 100
    create_option = "Empty"
    lun           = 0
  }

  os_profile {
    computer_name  = "worker1"
    admin_username = "${var.vm_username_worker1}"
    admin_password = "${var.vm_password}"
  }

  os_profile_linux_config {
    disable_password_authentication = false
  }

  tags = {
    group = "Cloudera-Cluster"
  }
}

# Worker 2
resource "azurerm_virtual_machine" "la_worker2" {
  name                  = "cdh_worker2"
  location              = "${var.location}"
  resource_group_name   = "${azurerm_resource_group.terraform_rg.name}"
  network_interface_ids = ["${azurerm_network_interface.public_nic3.id}"]
  vm_size               = "Standard_DS2_v2"

  # Delete the OS disk automatically when the VM is deleted
  delete_os_disk_on_termination = true

  storage_image_reference {
    publisher = "OpenLogic"
    offer     = "CentOS"
    sku       = "7.3"
    version   = "latest"
  }

  storage_os_disk {
    name          = "osdisk-4"
    vhd_uri       = "${azurerm_storage_account.storage.primary_blob_endpoint}${azurerm_storage_container.container.name}/osdisk-4.vhd"
    caching       = "ReadWrite"
    create_option = "FromImage"
  }

  # Optional data disk
  storage_data_disk {
    name          = "data"
    vhd_uri       = "${azurerm_storage_account.storage.primary_blob_endpoint}${azurerm_storage_container.container.name}/data4.vhd"
    disk_size_gb  = 100
    create_option = "Empty"
    lun           = 0
  }

  os_profile {
    computer_name  = "worker2"
    admin_username = "${var.vm_username_worker2}"
    admin_password = "${var.vm_password}"
  }

  os_profile_linux_config {
    disable_password_authentication = false
  }

  tags = {
    group = "Cloudera-Cluster"
  }
}

# Worker 3
resource "azurerm_virtual_machine" "la_worker3" {
  name                  = "cdh_worker3"
  location              = "${var.location}"
  resource_group_name   = "${azurerm_resource_group.terraform_rg.name}"
  network_interface_ids = ["${azurerm_network_interface.public_nic4.id}"]
  vm_size               = "Standard_DS2_v2"

  # Delete the OS disk automatically when the VM is deleted
  delete_os_disk_on_termination = true

  storage_image_reference {
    publisher = "OpenLogic"
    offer     = "CentOS"
    sku       = "7.3"
    version   = "latest"
  }

  storage_os_disk {
    name          = "osdisk-5"
    vhd_uri       = "${azurerm_storage_account.storage.primary_blob_endpoint}${azurerm_storage_container.container.name}/osdisk-5.vhd"
    caching       = "ReadWrite"
    create_option = "FromImage"
  }

  # Optional data disk
  storage_data_disk {
    name          = "data"
    vhd_uri       = "${azurerm_storage_account.storage.primary_blob_endpoint}${azurerm_storage_container.container.name}/data5.vhd"
    disk_size_gb  = 100
    create_option = "Empty"
    lun           = 0
  }

  os_profile {
    computer_name  = "worker3"
    admin_username = "${var.vm_username_worker3}"
    admin_password = "${var.vm_password}"
  }

  os_profile_linux_config {
    disable_password_authentication = false
  }

  tags = {
    group = "Cloudera-Cluster"
  }
}
```
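The public IPs of the hosts will be needed to reach the machines once they are provisioned. A small sketch of an outputs.tf file (a hypothetical addition, not part of the configuration above) that prints them at the end of terraform apply; they can be displayed again later with terraform output.

```hcl
# Public IP of each host, read from the static public IP resources in ip.tf
output "manager_public_ip" {
  value = "${azurerm_public_ip.manager_pip.ip_address}"
}

output "master_public_ip" {
  value = "${azurerm_public_ip.master_pip.ip_address}"
}

output "worker_public_ips" {
  value = [
    "${azurerm_public_ip.worker1_pip.ip_address}",
    "${azurerm_public_ip.worker2_pip.ip_address}",
    "${azurerm_public_ip.worker3_pip.ip_address}",
  ]
}
```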

Execution

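We can now run the following commands from a shell, in the directory that contains all the .tf files and var_values.tfvars. terraform init downloads the AzureRM provider plugin and must be run once before the first plan; terraform plan then shows the changes, and terraform apply creates the resources:

[root@centos Terraform]# terraform init

[root@centos Terraform]# terraform plan -var-file=var_values.tfvars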

[root@centos Terraform]# terraform apply -var-file=var_values.tfvars

After a few minutes, check your resources in the Azure portal.

To destroy the infrastructure, run the following command:

[root@centos Terraform]# terraform destroy -var-file=var_values.tfvars

Once the infrastructure has been fully provisioned by Terraform, we can start installing the Cloudera distribution of Hadoop with Ansible.
