In mid-February, I had the pleasure of attending the Swiss Cyber Security Days with SUSE. This two-day event took place in Bern, and I gave a 20-minute session on Harvester. Even though I think it was well received, you can’t go through every single feature of a product in 20 minutes, and I had planned it more as a global overview than a deep dive.

This time, I decided to conduct a deeper exploration of Harvester.

HCI, Harvester, what is this all about?

Nowadays, most of our applications run virtualized. From development environments to huge production clusters, virtual machines are everywhere. And to provide better scalability and flexibility, IT infrastructures evolved from traditional legacy datacenters to hyper-converged infrastructures.

So, what is a hyper-converged infrastructure? An HCI is a software-defined, fully integrated system that combines compute, storage, networking, and virtualization resources into a single platform. Think of it as a big box that concentrates everything in one place: you define your workloads, set up your storage, and design your network all by yourself.

Harvester is an open-source HCI solution that uses cloud-native technologies to manage and orchestrate virtual machines, along with storage and networks. And the best is yet to come: under the hood, Harvester is built on well-known cloud-native technologies. We will discover them while playing with the platform.

Nevertheless, let’s not waste time talking about the theory. Let’s get to work!

Installing Harvester

Let’s start with the installation of Harvester. It is, in fact, a pretty straightforward process.

The ISO is available on GitHub, and version 1.3.0 is the latest stable release as of this writing. If you keep an eye on the GitHub releases page, you will regularly see new versions or updates, such as RCs. You can try those, but, well, they are release candidates 😉

SUSE’s documentation page is pretty clear about the hardware requirements.

Remember, we are dealing here with a critical piece of software that is going to handle all of your workloads. It may be obvious, but except maybe for very basic testing, there is no point in running Harvester as a VM on your personal computer. And don’t be shy about the specifications.

In summary:

- CPU: hardware virtualization is required. Most servers have these CPU extensions enabled, but it is worth cross-checking from a Linux shell prompt. In terms of resources, 8 cores is the minimum, and 16 cores are recommended.

- Memory: 32GB is the minimum. But as always, the more, the better.

- Storage: the node requires a minimum of 250GB of local storage, either on a single disk or spread across multiple disks.
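To cross-check the CPU and memory points from a Linux shell, here is a minimal bash sketch. It is not an official Harvester tool and the function names are hypothetical: it looks for the `vmx` (Intel VT-x) or `svm` (AMD-V) CPU flags and compares the CPU count and memory with the thresholds listed above. Keep in mind that `nproc` counts logical CPUs (hyper-threads included), and that the memory check uses decimal gigabytes because `MemTotal` is slightly lower than the installed RAM.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight sketch for the Harvester minimums listed above.
# Thresholds come from the post; the script itself is only an illustration.
set -uo pipefail

MIN_CORES=8           # minimum number of cores
RECOMMENDED_CORES=16  # recommended number of cores
MIN_MEM_GB=32         # minimum memory in GB

# Succeeds if the CPU flags include Intel VT-x (vmx) or AMD-V (svm).
has_hw_virt() {
  grep -Ewq 'vmx|svm' "${1:-/proc/cpuinfo}"
}

# Prints MemTotal (reported in KiB) as whole decimal GB.
# MemTotal is a bit lower than the installed RAM, so decimal GB gives some slack.
mem_total_gb() {
  awk '/^MemTotal:/ { printf "%d\n", $2 * 1024 / 1000000000 }' "${1:-/proc/meminfo}"
}

# Classifies a core count against the minimum and recommended values.
core_rating() {
  local cores="$1"
  if (( cores >= RECOMMENDED_CORES )); then
    echo "recommended"
  elif (( cores >= MIN_CORES )); then
    echo "minimum"
  else
    echo "insufficient"
  fi
}

report() {
  local cores mem
  if has_hw_virt; then
    echo "CPU virtualization extensions: found"
  else
    echo "CPU virtualization extensions: NOT found (check the BIOS/UEFI settings)"
  fi
  cores="$(nproc)"  # logical CPUs, hyper-threads included
  echo "Logical CPUs: ${cores} ($(core_rating "$cores"))"
  mem="$(mem_total_gb)"
  if (( mem >= MIN_MEM_GB )); then
    echo "Memory: ${mem}GB (ok)"
  else
    echo "Memory: ${mem}GB (below ${MIN_MEM_GB}GB)"
  fi
}

# Print the report only when the script is executed, not when it is sourced.
if [[ "${BASH_SOURCE[0]:-$0}" == "$0" ]]; then
  report
fi
```

Local disk size is left out on purpose: how you count it depends on whether you use one disk or several.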

The network stack? Given its architecture, I think it deserves its own blog post, so I will treat it separately. Just remember that the server obviously requires network connectivity, and take note of the network ports you will need to open for incoming traffic:

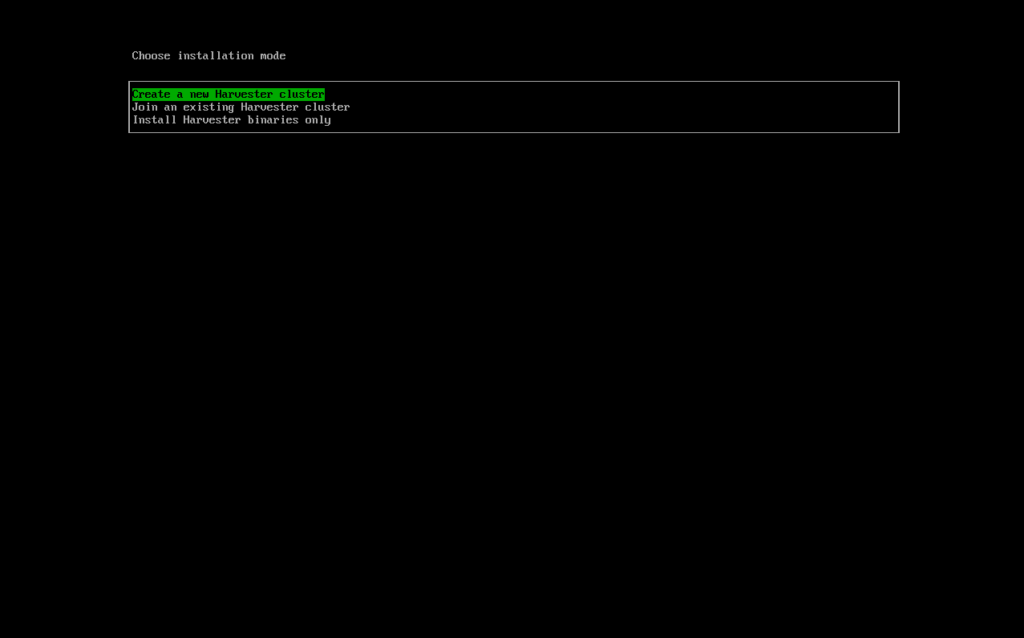

Installation for real

As mentioned previously, the installation media is a 5.79GB ISO file. Once you boot your machine from it, you’ll be welcomed by… a GRUB menu, a quite familiar welcome page for Linux enthusiasts. No mouse is required: there is no graphical interface here (which is nice, the KISS principle).

Once you have validated your choice, follow the installation process. It will ask you for the node hostname, the standard network settings (IP, DNS, proxy, etc.), the VIP, and a manual token (useful when you add additional Harvester nodes).

A word about the VIP: this IP address is different from the one you assigned to the node. The VIP is assigned to the Harvester cluster you are about to create, and it is the address you will target to reach the cluster, for instance to open the Harvester web UI.
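To make the VIP point concrete, here is a small bash sketch that sanity-checks a VIP against the node IP before you type it into the installer. The helper names are hypothetical, and the same-subnet rule is an assumption on my side (the VIP has to be reachable on the node’s local network); the installer may well perform its own checks.

```shell
#!/usr/bin/env bash
# Hypothetical helpers to sanity-check a VIP before entering it in the installer.
# Assumption: the VIP must differ from the node IP and belong to the node's subnet.

# Succeeds if $1 is a dotted-quad IPv4 address with octets in 0-255.
is_ipv4() {
  local re='^([0-9]{1,3}\.){3}[0-9]{1,3}$' octet
  [[ "$1" =~ $re ]] || return 1
  local IFS=.
  for octet in $1; do
    (( 10#$octet <= 255 )) || return 1
  done
}

# Converts a dotted IPv4 address to its 32-bit integer value.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (10#${o[0]} << 24) | (10#${o[1]} << 16) | (10#${o[2]} << 8) | 10#${o[3]} ))
}

# Prints "ok" or the reason the VIP looks wrong; returns 1 on error.
check_vip() {
  local node_ip="$1" vip="$2" prefix="$3" mask
  if ! is_ipv4 "$node_ip" || ! is_ipv4 "$vip"; then
    echo "invalid address"; return 1
  fi
  if [[ "$node_ip" == "$vip" ]]; then
    echo "VIP must differ from the node IP"; return 1
  fi
  # Network mask for the given prefix length, e.g. 24 -> 0xFFFFFF00.
  mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  if (( ($(ip_to_int "$node_ip") & mask) != ($(ip_to_int "$vip") & mask) )); then
    echo "VIP is outside the node subnet (/${prefix})"; return 1
  fi
  echo "ok"
}
```

For example, `check_vip 192.168.20.121 192.168.20.120 24` prints `ok`, while reusing the node IP as the VIP is rejected.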

Speaking of clusters: based on my own testing, Harvester can work in single-node mode, but it is really meant to run as a cluster. The first node you install is always a management node. Once the cluster grows to four nodes or more, the first three nodes are management nodes and the remaining ones are worker nodes.
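The default role assignment described above can be modelled as a tiny bash function. This is a simplified, hypothetical sketch of the observed behaviour: it assumes a second node stays a worker until the cluster reaches three nodes, and it ignores any role you might explicitly pick for a node.

```shell
#!/usr/bin/env bash
# Hypothetical model of the default role assignment:
# node 1 is always a management node, nodes 2 and 3 are management nodes
# once the cluster has at least three nodes, later nodes are workers.

# Usage: node_role <join order, starting at 1> <cluster size>
node_role() {
  local index="$1" size="$2"
  if (( index == 1 )) || (( size >= 3 && index <= 3 )); then
    echo "management"
  else
    echo "worker"
  fi
}

# Example: roles in a five-node cluster.
for i in 1 2 3 4 5; do
  echo "node ${i}: $(node_role "$i" 5)"
done
```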

I haven’t talked about Harvester’s core architecture until now, and that was on purpose: the best way is to discover it now 🙂

Once the installation is complete, you end up on a welcome screen summarizing the management cluster URL and status, as well as the current node name, IP, and status.

Press F12, enter the password you set during the installation process, and you will reach a shell prompt. Warning: unless I missed a setting, this shell prompt uses the QWERTY keyboard layout. A tip found in the FAQ: you can also SSH into the node as the rancher user (password authentication works on a freshly installed node).

```
jpc@dbi-lt-jpc:~> ssh rancher@<node-ip>
(rancher@<node-ip>) Password:
Last login: Thu Apr 18 11:57:11 2024 from 192.168.20.211
rancher@harv-01:~> sudo su -
harv-01:~ #
```

Enter a very common command for any DevOps & Kubernetes addict:

```
harv-01:~ $ kubectl get nodes -o wide
NAME      STATUS   ROLES                       AGE     VERSION           INTERNAL-IP      EXTERNAL-IP   OS-IMAGE           KERNEL-VERSION                  CONTAINER-RUNTIME
harv-01   Ready    control-plane,etcd,master   4h40m   v1.27.10+rke2r1   192.168.20.121   <none>        Harvester v1.3.0   5.14.21-150400.24.108-default   containerd://1.7.11-k3s2
```

If you have already taken an interest in Harvester, you expected that. As said in the introduction, Harvester is built on cloud-native components, and Kubernetes is at its core.
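Where does the ROLES column come from? kubectl derives it from the node labels prefixed with `node-role.kubernetes.io/` (plus the older `kubernetes.io/role` label) and prints the roles sorted and comma-separated. Here is a bash sketch of that logic, applied to a label string such as the last column of `kubectl get nodes --show-labels`; the function name is hypothetical and the code mirrors kubectl’s behaviour as I understand it.

```shell
#!/usr/bin/env bash
# Hypothetical helper deriving node roles from a "key=value,key=value" label string.

# Usage: roles_from_labels "key1=value1,key2=value2,..."
roles_from_labels() {
  local label key
  local -a pairs roles=()
  IFS=',' read -r -a pairs <<< "$1"
  for label in "${pairs[@]}"; do
    key="${label%%=*}"
    case "$key" in
      node-role.kubernetes.io/?*)
        # The role is the part of the key after the prefix.
        roles+=("${key#node-role.kubernetes.io/}") ;;
      kubernetes.io/role)
        # Older convention: the role is the label value.
        [[ -n "${label#*=}" ]] && roles+=("${label#*=}") ;;
    esac
  done
  if (( ${#roles[@]} == 0 )); then
    echo "<none>"
  else
    # Sorted, de-duplicated, comma-separated.
    printf '%s\n' "${roles[@]}" | LC_ALL=C sort -u | paste -sd, -
  fi
}
```

Fed with the labels of harv-01, for instance via `roles_from_labels "$(kubectl get node harv-01 --no-headers --show-labels | awk '{print $NF}')"`, it should print the same `control-plane,etcd,master` as above.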

“To Host and Serve”

But Kubernetes is not the only one. Remember: Harvester is an HCI, and it is intended to run corporate workloads. And in a cloud-native way 🙂

As always, on a Kubernetes cluster, looking at namespaces and pods gives a good overview of what is going on here.

Let’s do this!

```
harv-01:~ $ kubectl get ns
NAME                                     STATUS   AGE
cattle-dashboards                        Active   5h27m
cattle-fleet-clusters-system             Active   5h27m
cattle-fleet-local-system                Active   5h27m
cattle-fleet-system                      Active   5h28m
cattle-logging-system                    Active   5h27m
cattle-monitoring-system                 Active   5h27m
cattle-provisioning-capi-system          Active   5h27m
cattle-system                            Active   5h28m
cluster-fleet-local-local-1a3d67d0a899   Active   5h27m
default                                  Active   5h29m
fleet-local                              Active   5h28m
harvester-public                         Active   5h26m
harvester-system                         Active   5h26m
kube-node-lease                          Active   5h29m
kube-public                              Active   5h29m
kube-system                              Active   5h29m
local                                    Active   5h28m
longhorn-system                          Active   5h26m
```
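The namespace prefixes already hint at which components are installed. Here is a rough bash sketch that maps each namespace to a component by its prefix and counts them. The mapping is my own heuristic, not something Harvester exposes: `cattle-` points to Rancher, `fleet-` to Fleet (Rancher’s GitOps engine), and anything unknown falls into `other`.

```shell
#!/usr/bin/env bash
# Rough, hypothetical heuristic: guess the owning component from the namespace prefix.
component_for_namespace() {
  case "$1" in
    cattle-*)                echo "Rancher" ;;
    fleet-*|cluster-fleet-*) echo "Fleet" ;;
    harvester-*)             echo "Harvester" ;;
    longhorn-*)              echo "Longhorn" ;;
    kube-*|default)          echo "Kubernetes" ;;
    *)                       echo "other" ;;
  esac
}

# Reads `kubectl get ns --no-headers` lines on stdin and counts namespaces per component.
summarize_namespaces() {
  local ns rest
  while read -r ns rest; do
    [[ -n "$ns" ]] && component_for_namespace "$ns"
  done | sort | uniq -c
}
```

On the node, `kubectl get ns --no-headers | summarize_namespaces` gives a quick per-component count.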

Let’s start with the harvester-system namespace.

```
harv-01:~ $ kubectl get pods -n harvester-system
NAME                                                    READY   STATUS    RESTARTS   AGE
harvester-667fb9cbc8-jwmtx                              1/1     Running   0          5h47m
harvester-load-balancer-6755cb4d67-7xjw8                1/1     Running   0          5h47m
harvester-load-balancer-webhook-6b5c6b546-bdf66         1/1     Running   0          5h47m
harvester-network-controller-manager-5ff644ffb6-66s69   1/1     Running   0          5h47m
harvester-network-controller-v9lhf                      1/1     Running   0          5h47m
harvester-network-webhook-5c596bdd6c-grctr              1/1     Running   0          5h47m
harvester-node-disk-manager-gdfzx                       1/1     Running   0          5h47m
harvester-node-manager-mrqbs                            1/1     Running   0          5h47m
harvester-node-manager-webhook-9cfccc84c-hxnbm          1/1     Running   0          5h47m
harvester-webhook-79f5446494-65lvb                      1/1     Running   0          5h47m
kube-vip-zk62c                                          1/1     Running   0          5h47m
virt-api-77cbf85485-spnf8                               1/1     Running   0          5h46m
virt-controller-659ccbfbcd-6xzmb                        1/1     Running   0          5h46m
virt-controller-659ccbfbcd-tkk2b                        1/1     Running   0          5h46m
virt-handler-9bwd5                                      1/1     Running   0          5h46m
virt-operator-6b8b9b7578-66tps                          1/1     Running   0          5h47m
```

Pods named virt-api, virt-controller, virt-handler, and virt-operator: those who know me know my addiction to KubeVirt. This wonderful add-on brings virtual machine management to Kubernetes and relies on KVM (Kernel-based Virtual Machine, released in 2007).

An add-on to Kubernetes also means new objects:

```
harv-01:~ $ kubectl api-resources | grep "kubevirt.io/v1"
virtualmachineclones                 vmclone,vmclones                                           clone.kubevirt.io/v1alpha1         true    VirtualMachineClone
virtualmachineexports                vmexport,vmexports                                         export.kubevirt.io/v1alpha1        true    VirtualMachineExport
virtualmachineclusterinstancetypes   vmclusterinstancetype,vmclusterinstancetypes,vmcf,vmcfs   instancetype.kubevirt.io/v1beta1   false   VirtualMachineClusterInstancetype
virtualmachineclusterpreferences     vmcp,vmcps                                                 instancetype.kubevirt.io/v1beta1   false   VirtualMachineClusterPreference
virtualmachineinstancetypes          vminstancetype,vminstancetypes,vmf,vmfs                    instancetype.kubevirt.io/v1beta1   true    VirtualMachineInstancetype
virtualmachinepreferences            vmpref,vmprefs,vmp,vmps                                    instancetype.kubevirt.io/v1beta1   true    VirtualMachinePreference
kubevirts                            kv,kvs                                                     kubevirt.io/v1                     true    KubeVirt
virtualmachineinstancemigrations     vmim,vmims                                                 kubevirt.io/v1                     true    VirtualMachineInstanceMigration
virtualmachineinstancepresets        vmipreset,vmipresets                                       kubevirt.io/v1                     true    VirtualMachineInstancePreset
virtualmachineinstancereplicasets    vmirs,vmirss                                               kubevirt.io/v1                     true    VirtualMachineInstanceReplicaSet
virtualmachineinstances              vmi,vmis                                                   kubevirt.io/v1                     true    VirtualMachineInstance
virtualmachines                      vm,vms                                                     kubevirt.io/v1                     true    VirtualMachine
migrationpolicies                                                                               migrations.kubevirt.io/v1alpha1    false   MigrationPolicy
virtualmachinepools                  vmpool,vmpools                                             pool.kubevirt.io/v1alpha1          true    VirtualMachinePool
virtualmachinerestores               vmrestore,vmrestores                                       snapshot.kubevirt.io/v1alpha1      true    VirtualMachineRestore
virtualmachinesnapshotcontents       vmsnapshotcontent,vmsnapshotcontents                       snapshot.kubevirt.io/v1alpha1      true    VirtualMachineSnapshotContent
virtualmachinesnapshots              vmsnapshot,vmsnapshots                                     snapshot.kubevirt.io/v1alpha1      true    VirtualMachineSnapshot
```

With KubeVirt, and therefore with Harvester, a virtual machine is defined as a Kubernetes object of kind VirtualMachine, and a VirtualMachineInstance is a running instance of a VirtualMachine. Just imagine: you apply a VirtualMachine definition, and the standard Kubernetes scheduler places your VM somewhere on your Harvester cluster 🥰. The best of both worlds, brought together. And Harvester simplifies all of this, much as Rancher eases the management of standard Kubernetes workloads.
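To illustrate the VirtualMachine object, here is a bash sketch that prints a minimal KubeVirt manifest. It follows the upstream KubeVirt examples rather than what the Harvester UI generates: the function name, the VM name, the memory request, and the `quay.io/kubevirt/cirros-container-disk-demo` container disk are assumptions, and a VM created through Harvester will typically rely on Longhorn-backed volumes instead.

```shell
#!/usr/bin/env bash
# Hypothetical helper printing a minimal KubeVirt VirtualMachine manifest.
# Usage: vm_manifest <name> [memory, default 1Gi]
vm_manifest() {
  local name="$1" memory="${2:-1Gi}"
  cat <<EOF
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: ${name}
spec:
  runStrategy: Always            # keep a VirtualMachineInstance running
  template:
    spec:
      domain:
        devices:
          disks:
            - name: rootdisk
              disk:
                bus: virtio
        resources:
          requests:
            memory: ${memory}
      volumes:
        - name: rootdisk
          containerDisk:         # demo image from the KubeVirt examples
            image: quay.io/kubevirt/cirros-container-disk-demo
EOF
}
```

Piping it to `kubectl apply -f -` should create the VirtualMachine, and `kubectl get vm,vmi` (using the short names listed above) then shows both the definition and, once it starts, its running instance.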

Let’s quickly continue to get a taste of what else is running on Harvester. We saw some cattle- namespaces: Rancher is running here!

```
harv-01:~ $ kubectl get pods -n cattle-system
NAME                                         READY   STATUS    RESTARTS        AGE
harvester-cluster-repo-5c75f7d9fd-88g4x      1/1     Running   0               5h31m
rancher-5dbd4cf7dc-995rr                     1/1     Running   0               5h29m
rancher-webhook-5788f655d8-4p8bt             1/1     Running   0               5h31m
system-upgrade-controller-78cfb99bb7-hdslt   1/1     Running   2 (5h14m ago)   5h31m
```

What else do we have? Longhorn, for the storage.

```
harv-01:~ $ kubectl get pods -n longhorn-system
NAME                                                READY   STATUS    RESTARTS   AGE
csi-attacher-dc76666dd-jhs8l                        1/1     Running   0          5h32m
csi-attacher-dc76666dd-t96tr                        1/1     Running   0          5h32m
csi-attacher-dc76666dd-w9zwf                        1/1     Running   0          5h32m
csi-provisioner-7fc9d85c66-48n7g                    1/1     Running   0          5h32m
csi-provisioner-7fc9d85c66-89h4d                    1/1     Running   0          5h32m
csi-provisioner-7fc9d85c66-c94hb                    1/1     Running   0          5h32m
csi-resizer-67664c5755-jpl6r                        1/1     Running   0          5h32m
csi-resizer-67664c5755-r4llm                        1/1     Running   0          5h32m
csi-resizer-67664c5755-vcn94                        1/1     Running   0          5h32m
csi-snapshotter-6c9d675d9c-flkf6                    1/1     Running   0          5h32m
csi-snapshotter-6c9d675d9c-hw4mj                    1/1     Running   0          5h32m
csi-snapshotter-6c9d675d9c-sppkx                    1/1     Running   0          5h32m
engine-image-ei-acb7590c-cxtvm                      1/1     Running   0          5h32m
instance-manager-3c8c76df5f2bfa171e62a198a9ade00e   1/1     Running   0          5h32m
longhorn-csi-plugin-t7x2h                           3/3     Running   0          5h32m
longhorn-driver-deployer-67fd98774c-xd7x2           1/1     Running   0          5h32m
longhorn-loop-device-cleaner-vq2nr                  1/1     Running   0          5h32m
longhorn-manager-mn75c                              1/1     Running   0          5h32m
longhorn-ui-7f8cdfcc48-gqcs4                        1/1     Running   0          5h32m
longhorn-ui-7f8cdfcc48-v9nhc                        1/1     Running   0          5h32m
```

Remember the cattle-monitoring-system namespace? We also haven’t talked about the base OS, Elemental. We will go deeper into all those topics, as they are pretty dense. But by building its HCI on Kubernetes, SUSE simplifies workload management for companies and reduces the time required to deliver them.

Stay connected for much more!
