Introduction

Have you ever cloned a database using RMAN?

Have you ever been advised not to clone during the day, to avoid degrading performance across the entire storage system or network?

For a proof of concept, we wanted to evaluate a Pure Storage array in terms of feasibility and speed of database cloning by using storage-based snapshot technology.

First, I should mention that Pure Storage builds storage arrays in several sizes, all based on NVMe devices combined with high-bandwidth connectivity and data compression.

All in all, this is “fast as hell” storage, but speed alone is not enough. On top of that comes a snapshot technology that creates a snapshot copy of a volume almost instantly, regardless of its size.

We wanted to see whether it works equally well for cloning Oracle databases.

Starting point

We set up:

• Two virtual machines on two ESX hosts, running RHEL 7.9

• Two sets of disk-groups to store the database files.

• A source database, running on machine 1, Oracle Release 19.10.

• Machine 2, prepared for a target database to be created by cloning

We only had to set up a few extras on the virtual machines:

• Install the RPMs required by Pure Storage

• Configure multipathing

• Update UDEV rules

About the Storage

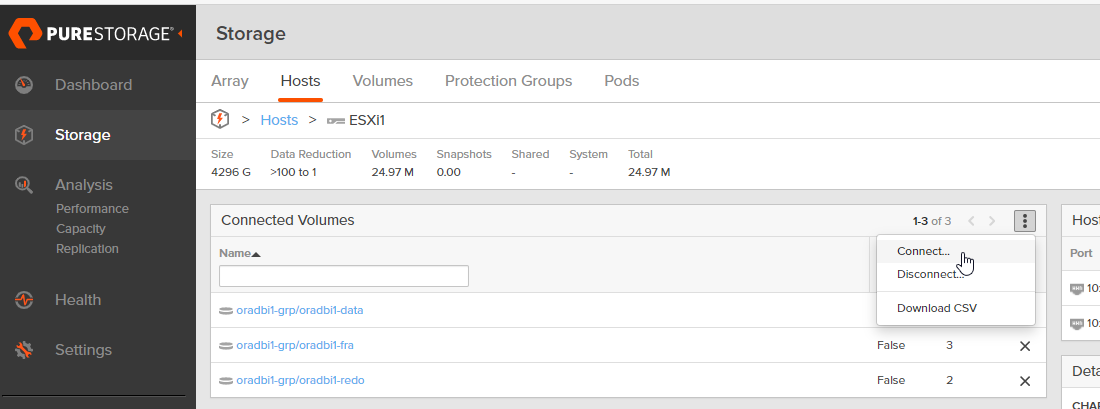

The GUI makes it easy to create volumes and assign them to groups and “Protection Groups”.

Connect the volumes to the hosts.

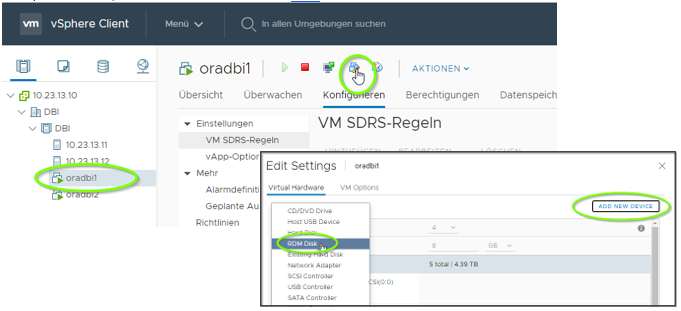

In the vSphere Client, attach the volumes to the VMs.

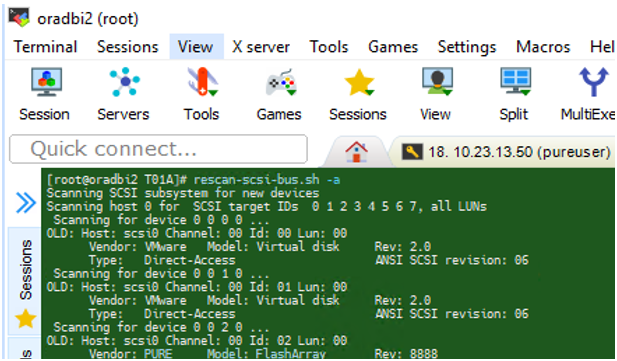

Configure the volumes on Linux OS

Configure multipath:

vi /etc/multipath.conf

multipaths {

multipath { wwid 3624a9370F9C23FA49CFC431C00013CD5 alias oradbi1-data }

multipath { wwid 3624a9370F9C23FA49CFC431C00013CD7 alias oradbi1-fra }

multipath { wwid 3624a9370F9C23FA49CFC431C00013CD6 alias oradbi1-redo }

}
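In `/etc/multipath.conf` itself, each keyword normally sits on its own line. The following is a minimal sketch that prints such a `multipaths` section from WWID/alias pairs. The helper names `multipath_entry` and `multipaths_section` are made up for this illustration, and the WWIDs are lowercased on the assumption that they should match the lowercase device names that appear under `/dev/mapper` later in this post.

```shell
#!/usr/bin/env bash
# Sketch: print a "multipaths" section for /etc/multipath.conf.
# Helper names are hypothetical; WWIDs and aliases are the ones from the post.

multipath_entry() {
    # $1 = WWID, $2 = alias
    local wwid name
    # Assumption: lowercase the WWID so it matches the names under /dev/mapper
    wwid=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
    name=$2
    printf '    multipath {\n        wwid  %s\n        alias %s\n    }\n' "$wwid" "$name"
}

multipaths_section() {
    # Arguments: wwid1 alias1 wwid2 alias2 ...
    echo 'multipaths {'
    while [ "$#" -ge 2 ]; do
        multipath_entry "$1" "$2"
        shift 2
    done
    echo '}'
}

multipaths_section \
    3624a9370F9C23FA49CFC431C00013CD5 oradbi1-data \
    3624a9370F9C23FA49CFC431C00013CD7 oradbi1-fra \
    3624a9370F9C23FA49CFC431C00013CD6 oradbi1-redo
```

After merging the output into the configuration file and reloading the multipathd service, `multipath -ll` should list the devices under their aliases.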

Create filesystems on the volumes:

mkfs.ext4 /dev/mapper/oradbi2-data

mkfs.ext4 /dev/mapper/3624a9370f9c23fa49cfc431c00013cda

mkfs.ext4 /dev/mapper/3624a9370f9c23fa49cfc431c00013cd9

mkfs.ext4 /dev/mapper/3624a9370f9c23fa49cfc431c00013cd8
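Since `mkfs.ext4` destroys whatever is on the device, it can help to wrap the step in a small loop that only prints the commands until you are sure about the device list. This is just a sketch: the `run` and `make_filesystems` helpers and the `DRY_RUN` switch are assumptions of this example, not part of any Pure Storage tooling, and the device paths are the WWID-based ones shown above.

```shell
#!/usr/bin/env bash
# Sketch: create ext4 filesystems on a list of multipath devices.
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 executes them (needs root).
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

make_filesystems() {
    local dev
    for dev in "$@"; do
        # When really executing, refuse anything that is not a block device
        if [ "$DRY_RUN" != "1" ] && [ ! -b "$dev" ]; then
            echo "skipping $dev: not a block device" >&2
            continue
        fi
        run mkfs.ext4 "$dev"
    done
}

make_filesystems \
    /dev/mapper/3624a9370f9c23fa49cfc431c00013cda \
    /dev/mapper/3624a9370f9c23fa49cfc431c00013cd9 \
    /dev/mapper/3624a9370f9c23fa49cfc431c00013cd8
```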

Add the volumes to fstab:

vi /etc/fstab

/dev/mapper/3624a9370f9c23fa49cfc431c00013cd5 /u02/oradata ext4 defaults 0 0

/dev/mapper/3624a9370f9c23fa49cfc431c00013cd6 /u03/oradata ext4 defaults 0 0

/dev/mapper/3624a9370f9c23fa49cfc431c00013cd7 /u90/fast_recovery_area ext4 defaults 0 0
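If you prefer scripting over `vi`, the entries can be appended only when the mount point is not listed yet, so that repeated runs do not create duplicates. A minimal sketch follows; `fstab_line` and `add_fstab_entry` are hypothetical helper names, and the demo writes to a scratch file rather than to `/etc/fstab`.

```shell
#!/usr/bin/env bash
# Sketch: add an ext4 entry to an fstab file only if its mount point is not listed yet.

fstab_line() {
    # $1 = device, $2 = mount point; same options as the entries in the post
    printf '%s %s ext4 defaults 0 0\n' "$1" "$2"
}

add_fstab_entry() {
    # $1 = fstab file, $2 = device, $3 = mount point
    local file=$1 dev=$2 mnt=$3
    touch "$file"
    # Field 2 of a non-comment fstab line is the mount point
    if awk -v m="$mnt" '$1 !~ /^#/ && $2 == m { found = 1 } END { exit !found }' "$file"; then
        echo "already present: $mnt"
    else
        fstab_line "$dev" "$mnt" >> "$file"
        echo "added: $mnt"
    fi
}

# Demo against a scratch file; pass /etc/fstab (as root) once the output looks right.
scratch=$(mktemp)
add_fstab_entry "$scratch" /dev/mapper/3624a9370f9c23fa49cfc431c00013cd5 /u02/oradata
add_fstab_entry "$scratch" /dev/mapper/3624a9370f9c23fa49cfc431c00013cd6 /u03/oradata
add_fstab_entry "$scratch" /dev/mapper/3624a9370f9c23fa49cfc431c00013cd7 /u90/fast_recovery_area
cat "$scratch"
rm -f "$scratch"
```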

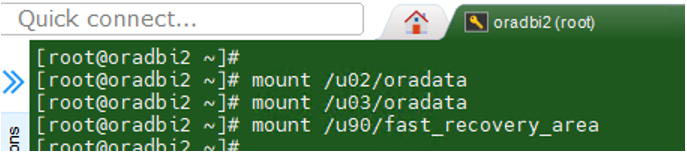

Finally, mount them:

mount -a

Now you can clone the database.

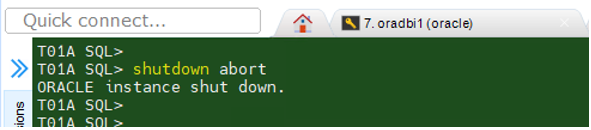

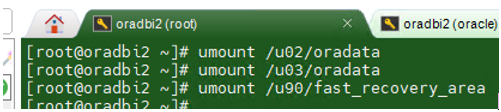

On the target server, shut down the database and unmount the volumes:
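As a rough sketch, this step could look as follows on the target server. It assumes that `ORACLE_HOME` and `ORACLE_SID` point to the target instance, that the database part runs as the Oracle software owner and the unmount as root, and that the mount points are the ones from the fstab entries above. The `DRY_RUN` switch and the function names are inventions of this example.

```shell
#!/usr/bin/env bash
# Sketch: stop the target database and unmount its volumes before they are overwritten.
# DRY_RUN=1 (default) only prints what would be done.
DRY_RUN="${DRY_RUN:-1}"

stop_database() {
    # Assumes ORACLE_HOME/ORACLE_SID are set for the target instance
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ sqlplus -S '/ as sysdba' : shutdown immediate"
    else
        sqlplus -S "/ as sysdba" <<'SQL'
shutdown immediate
exit
SQL
    fi
}

unmount_volumes() {
    local mnt
    for mnt in "$@"; do
        if [ "$DRY_RUN" = "1" ]; then
            echo "+ umount $mnt"
        else
            umount "$mnt"    # needs root
        fi
    done
}

stop_database
unmount_volumes /u02/oradata /u03/oradata /u90/fast_recovery_area
```

Unmounting first matters because the filesystems on the target volumes are about to be replaced underneath the operating system.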

Create a snapshot

You can use either the GUI or the CLI.

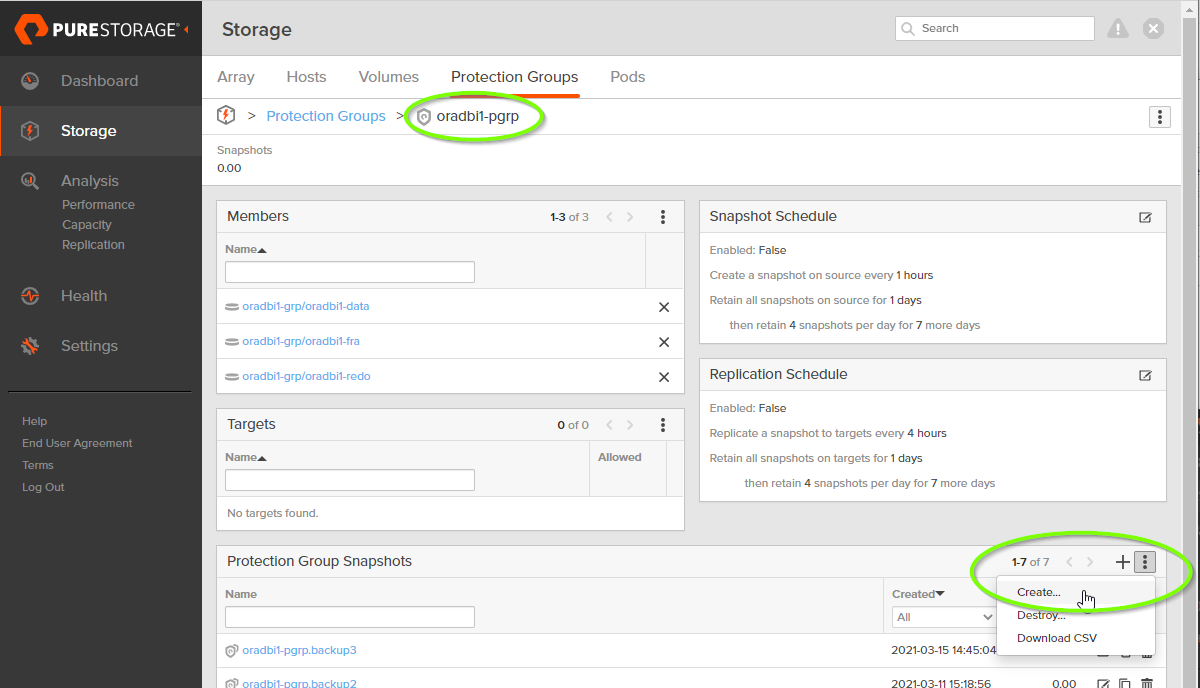

In the web console, navigate to Storage -> Protection Groups.

Select the Protection Group you want to snapshot.

Select “Create” from the overflow menu.

Provide a descriptive name for your first snapshot.

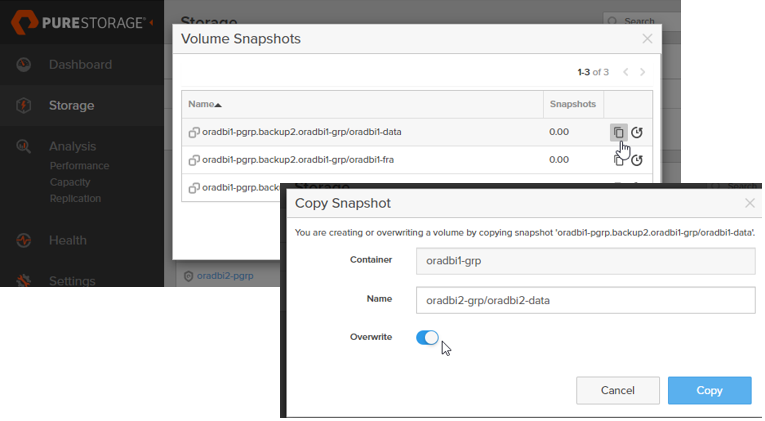

Next, copy the volume snapshots to their target counterparts:

That’s it!

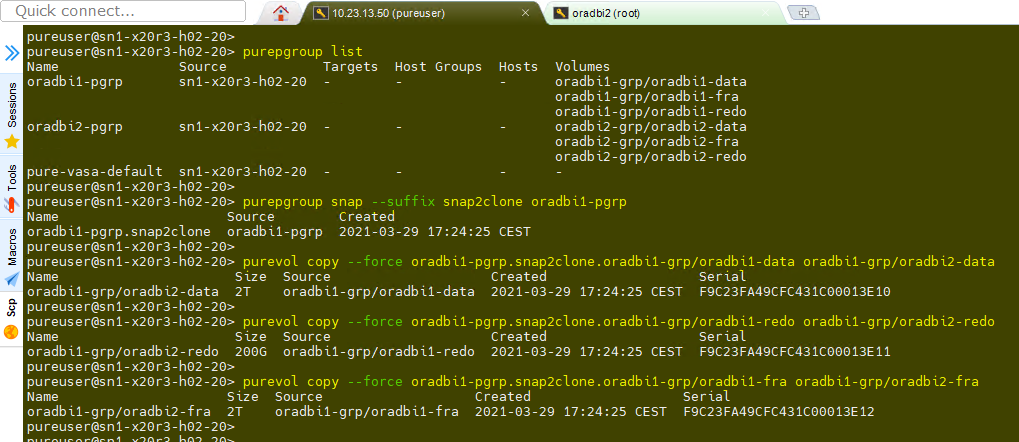

On the CLI it looks even simpler:

Only two commands are required: “purepgroup snap” and “purevol copy”.
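A sketch of how the two commands might be driven over SSH from a Linux host is shown below. The array address, the protection group name `pg-oradbi1`, the snapshot suffix and the `oradbi2-*` target volume names are placeholders invented for this example. The `--suffix` and `--overwrite` options, and the `PGROUP.SUFFIX.VOLUME` naming of the resulting volume snapshots, reflect my understanding of the Purity CLI; please check them against the help output of your Purity version.

```shell
#!/usr/bin/env bash
# Sketch: snapshot a protection group and copy its volume snapshots onto target volumes.
# All names below are hypothetical placeholders. DRY_RUN=1 (default) only prints the commands.
ARRAY="${ARRAY:-pureuser@flasharray.example.com}"
PGROUP="${PGROUP:-pg-oradbi1}"
SUFFIX="${SUFFIX:-clone-$(date +%Y%m%d%H%M)}"
DRY_RUN="${DRY_RUN:-1}"

pure() {
    # Run a Purity CLI command on the array over SSH, or just print it
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ ssh $ARRAY $*"
    else
        ssh "$ARRAY" "$@"
    fi
}

snapshot_pgroup() {
    pure purepgroup snap --suffix "$SUFFIX" "$PGROUP"
}

copy_volume() {
    # $1 = source volume in the protection group, $2 = existing target volume
    pure purevol copy --overwrite "$PGROUP.$SUFFIX.$1" "$2"
}

snapshot_pgroup
copy_volume oradbi1-data oradbi2-data
copy_volume oradbi1-redo oradbi2-redo
copy_volume oradbi1-fra  oradbi2-fra
```

Snapshotting the protection group rather than the individual volumes gives all three volumes the same point in time, which is what the database needs in order to recover consistently on the target.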

That’s all!

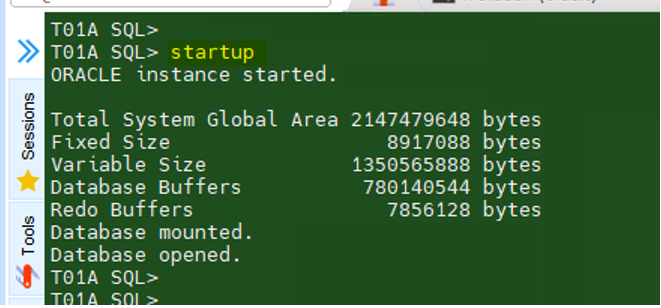

You can now mount the copied volumes and start the database on the target side:
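A minimal sketch of this last step, under the same assumptions as before: the mount points come from the fstab entries, `ORACLE_HOME` and `ORACLE_SID` are set for the target instance, and the function names and the `DRY_RUN` switch exist only in this example.

```shell
#!/usr/bin/env bash
# Sketch: mount the freshly copied volumes and start the cloned database.
# DRY_RUN=1 (default) only prints what would be done.
DRY_RUN="${DRY_RUN:-1}"

mount_volumes() {
    local mnt
    for mnt in "$@"; do
        if [ "$DRY_RUN" = "1" ]; then
            echo "+ mount $mnt"
        else
            # Relies on the /etc/fstab entry for this mount point; needs root
            mount "$mnt" && mountpoint -q "$mnt" || { echo "mount failed: $mnt" >&2; return 1; }
        fi
    done
}

start_database() {
    # Assumes ORACLE_HOME/ORACLE_SID are set for the target instance
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ sqlplus -S '/ as sysdba' : startup"
    else
        sqlplus -S "/ as sysdba" <<'SQL'
startup
exit
SQL
    fi
}

mount_volumes /u02/oradata /u03/oradata /u90/fast_recovery_area && start_database
```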

You have probably noticed that the copy is exactly that: a copy.

This means that your target database still has the same name as the source database.

Don’t worry! Changing the name and the DBID of a database will be covered next week.

See my blog “Rename your DB clone completely”.
