As usual, day 2, with Adam Selipsky’s keynote, is where most of the announcements are made… and there were several of them. You will probably see plenty of articles about these (by the way, I recommend taking a few minutes to read the one by @nicolas-jardot), so on my side I will focus on auditing and compliance by showing how you can leverage the power of Lakes in AWS to improve your governance.

Overloaded with CloudTrail events? Try out CloudTrail Lake

I guess we all agree that auditing what is happening in your cloud tenant and monitoring compliance are no longer optional, whether as a proactive effort to keep everything under control or as a reactive one to find out who screwed up your budget 😉

Activating CloudTrail is therefore a must. Indeed, if you deployed your cloud organization and landscape using AWS Control Tower, CloudTrail has already been activated in the background.

However, that is the easy part. The challenge usually comes when you have to access and analyse the logs stored in S3.

A pretty good solution, which I had the opportunity to test in a 2-hour workshop, is to activate CloudTrail Lake and send these events to an event data store, which lets you aggregate events from multiple regions and accounts.

The principle is to store all or selected events in a central repository and then query this data directly with SQL statements. The query results can be exported to S3 and, for instance, provided to external auditors.
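To give an idea of what querying such a central store looks like, here is a minimal sketch that counts events per account and region. It assumes an event data store collecting events from several accounts and regions; `$EDS_ID` is a placeholder for your event data store ID, and the date is arbitrary.

```sql
-- Count events per account and region since an arbitrary date.
-- $EDS_ID is a placeholder: replace it with your event data store ID.
SELECT recipientAccountId,
       awsRegion,
       COUNT(*) AS event_count
FROM $EDS_ID
WHERE eventTime > '2022-11-01 00:00:00'
GROUP BY recipientAccountId, awsRegion
ORDER BY event_count DESC
```

Bounding `eventTime` is a good habit, since CloudTrail Lake queries are billed on the amount of data scanned.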

Activating CloudTrail Lake

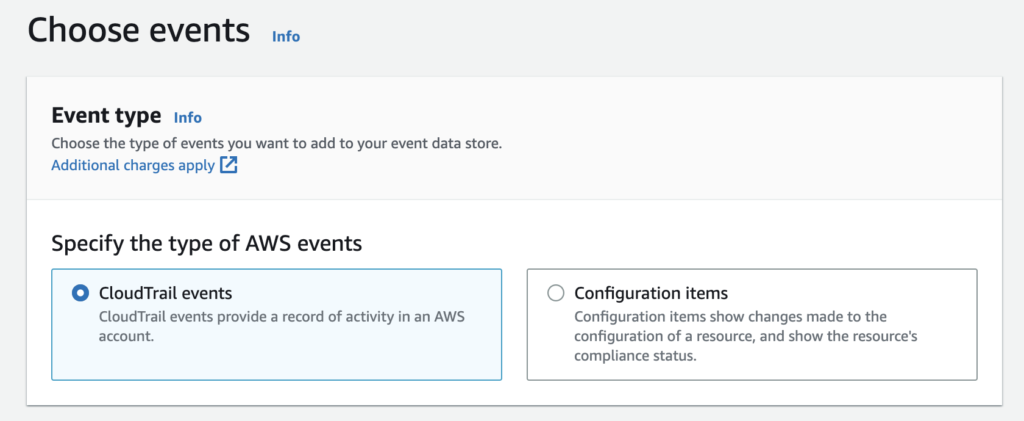

With CloudTrail Lake you start by creating an event data store, which supports 2 types of data: CloudTrail events and configuration items coming from AWS Config.

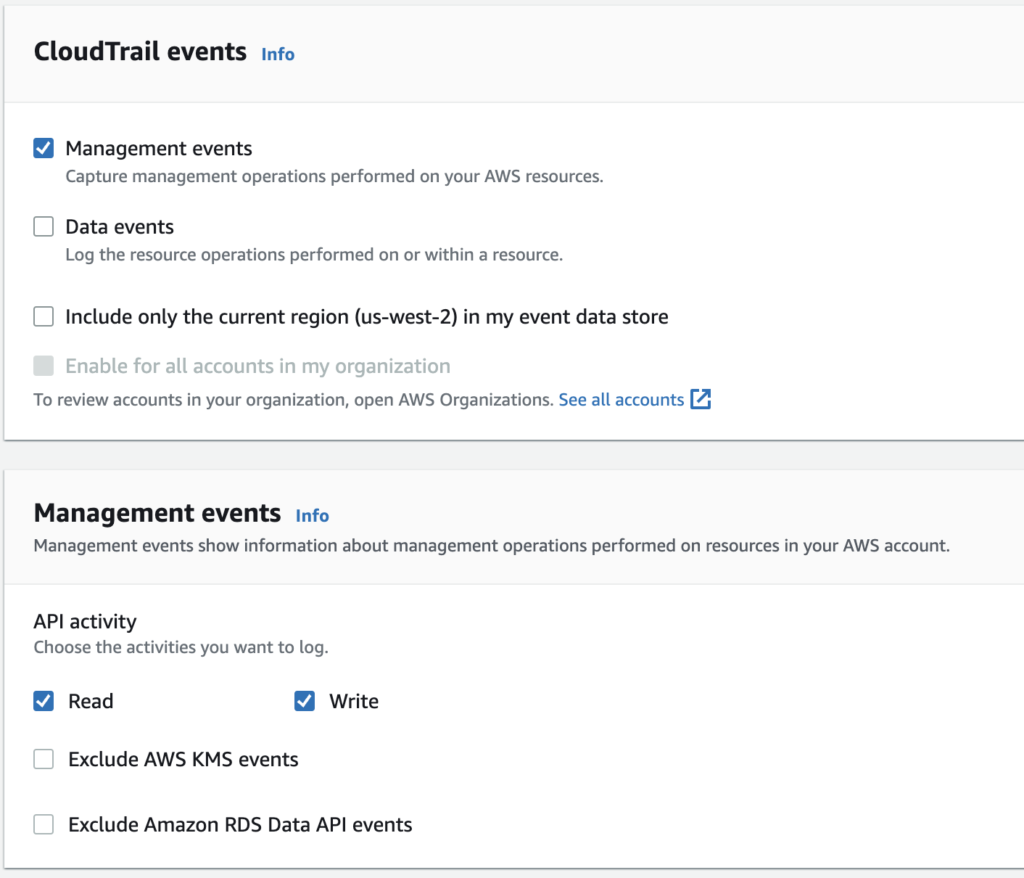

By default, all management events are logged, whether they come from the console or the API, and you can customize this selection.
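Whatever selection you make at the store level, you can still narrow things down at query time. As a sketch, assuming the `eventCategory` and `readOnly` fields of the CloudTrail event schema, this lists the most recent write management events and who performed them (`$EDS_ID` is a placeholder for your event data store ID):

```sql
-- Most recent write (non read-only) management events and their authors.
SELECT eventTime,
       userIdentity.arn AS principal,
       eventSource,
       eventName,
       awsRegion
FROM $EDS_ID
WHERE eventCategory = 'Management'
  AND readOnly = false
  AND eventTime > '2022-11-01 00:00:00'
ORDER BY eventTime DESC
LIMIT 50
```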

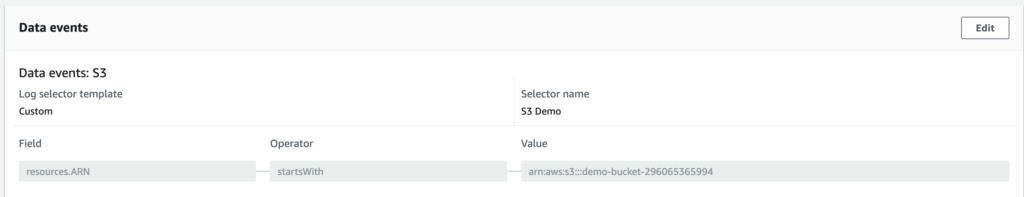

Besides, you can check the Data events option, which logs operations performed on or within resources, for instance activity on the objects of an S3 bucket. It even lets you set filtering parameters to define which resources you trace.
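Once S3 data events are captured, a query along these lines shows who accessed objects in a given bucket. This is a sketch assuming `requestParameters` is exposed as a key/value map (hence `element_at`, which returns NULL when a key is absent); the bucket name `my-audit-bucket` is hypothetical and `$EDS_ID` is a placeholder for your event data store ID.

```sql
-- Object-level activity on one (hypothetical) bucket, from S3 data events.
SELECT eventTime,
       userIdentity.arn AS principal,
       eventName,
       element_at(requestParameters, 'key') AS object_key
FROM $EDS_ID
WHERE eventCategory = 'Data'
  AND eventSource = 's3.amazonaws.com'
  AND element_at(requestParameters, 'bucketName') = 'my-audit-bucket'
  AND eventTime > '2022-11-01 00:00:00'
ORDER BY eventTime DESC
```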

Getting the outputs…

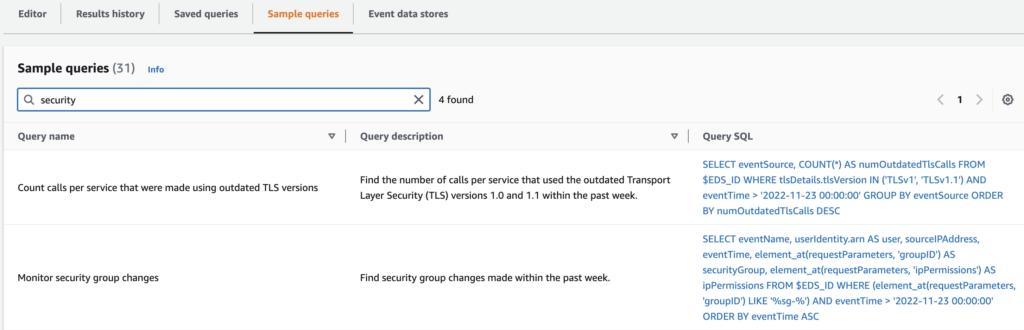

Here comes the interesting part. With CloudTrail Lake you can run queries against the events stored in your lake. You can either use the sample queries provided by AWS…

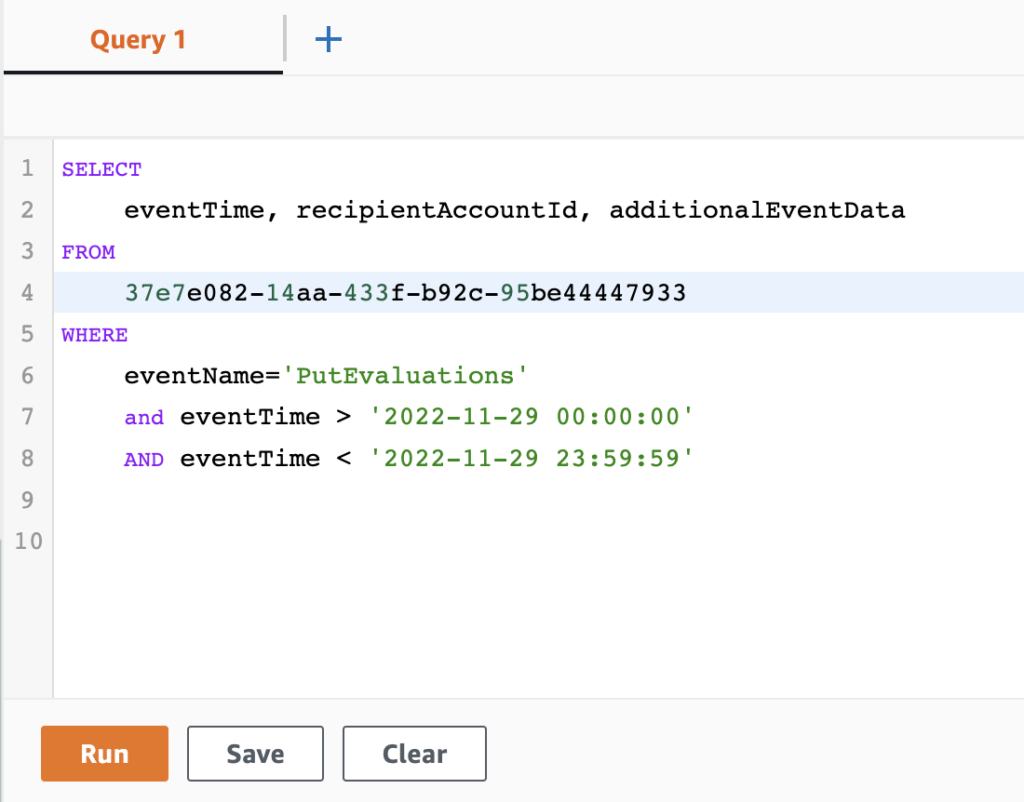

…or write your own queries in the query editor.

It is pretty basic, as it is based on a standard SQL structure:

`SELECT <columns> FROM <table> WHERE <conditions>`, where the table is your event data store ID.
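As an illustration of that structure, here is a sketch that looks for calls denied for lack of permissions, a classic starting point when investigating access issues. The error-code patterns are assumptions meant to cover the usual `AccessDenied`, `AccessDeniedException` and `Client.UnauthorizedOperation` values; `$EDS_ID` is a placeholder for your event data store ID.

```sql
-- API calls that were rejected for lack of permissions.
SELECT eventTime,
       userIdentity.arn AS principal,
       eventSource,
       eventName,
       errorCode
FROM $EDS_ID
WHERE (errorCode LIKE '%AccessDenied%'
       OR errorCode LIKE '%UnauthorizedOperation')
  AND eventTime > '2022-11-01 00:00:00'
ORDER BY eventTime DESC
LIMIT 20
```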

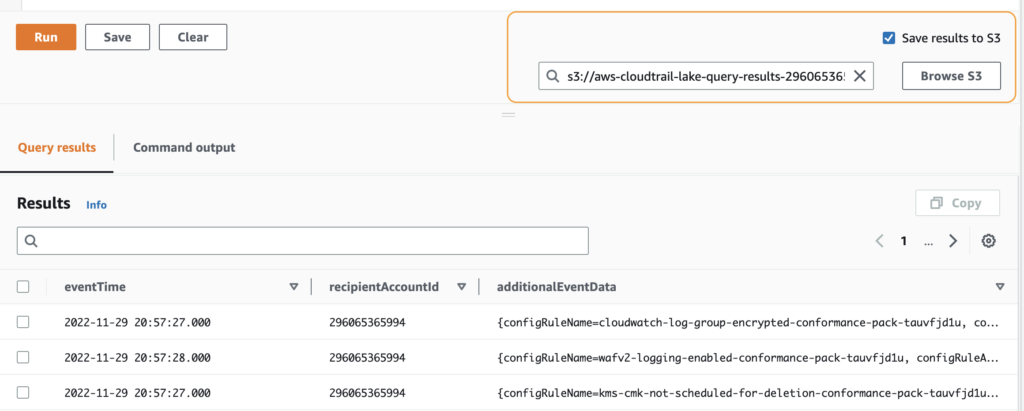

By default, the output is presented in a table directly below your query, but it can also be saved to S3.

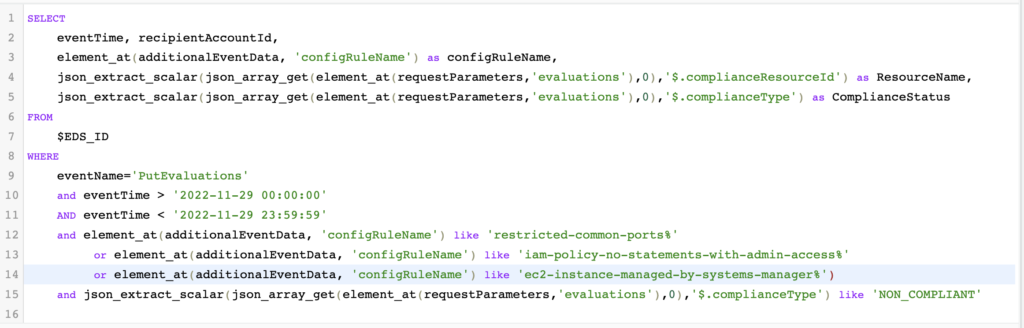

As you can see, some fields, like additionalEventData here, are returned in JSON format. This means we can extend our SQL statement to clean up the output and filter it further.
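For instance, console sign-in events carry details such as `MFAUsed` in `additionalEventData`. Assuming that field is exposed as a key/value map in the event data store, a sketch that splits those keys into their own columns and keeps only sign-ins performed without MFA could look like this (`$EDS_ID` is a placeholder for your event data store ID):

```sql
-- Console sign-ins performed without MFA, with the JSON details split into columns.
SELECT eventTime,
       userIdentity.arn AS principal,
       sourceIPAddress,
       element_at(additionalEventData, 'MFAUsed') AS mfa_used,
       element_at(responseElements, 'ConsoleLogin') AS login_result
FROM $EDS_ID
WHERE eventName = 'ConsoleLogin'
  AND element_at(additionalEventData, 'MFAUsed') = 'No'
  AND eventTime > '2022-11-01 00:00:00'
ORDER BY eventTime DESC
```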

Here is a slightly more fun example, with some JSON data extraction and additional filtering on AWS Config rules.
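A query in that spirit could track changes to AWS Config rules. This sketch assumes that nested request parameters (such as the `configRule` object sent with `PutConfigRule`) are stored as JSON strings, so `json_extract_scalar` can pull the rule name out of them; `DeleteConfigRule` passes the name directly, hence the `COALESCE`. `$EDS_ID` is a placeholder for your event data store ID.

```sql
-- Creations/updates and deletions of AWS Config rules, with the rule name extracted.
SELECT eventTime,
       userIdentity.arn AS principal,
       eventName,
       COALESCE(
         json_extract_scalar(element_at(requestParameters, 'configRule'), '$.configRuleName'),
         element_at(requestParameters, 'configRuleName')
       ) AS config_rule_name
FROM $EDS_ID
WHERE eventSource = 'config.amazonaws.com'
  AND eventName IN ('PutConfigRule', 'DeleteConfigRule')
  AND eventTime > '2022-11-01 00:00:00'
ORDER BY eventTime DESC
```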

Amazon Security Lake

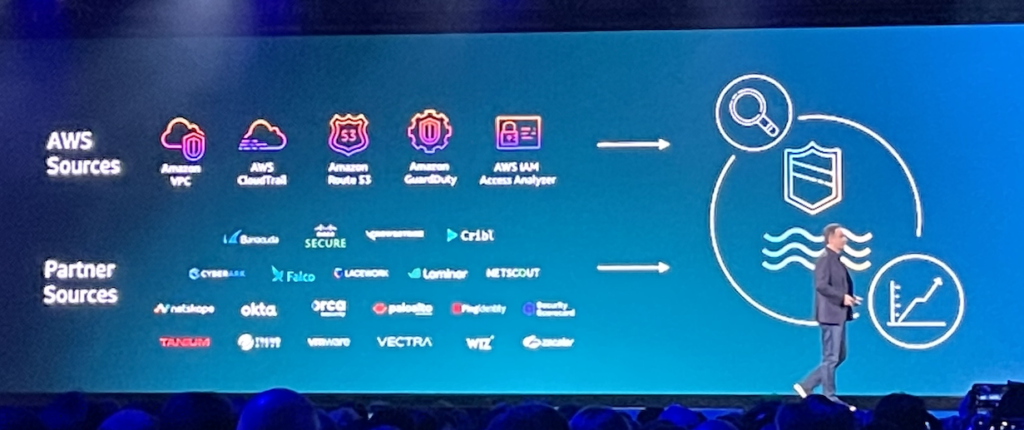

Finally, I would like to conclude this post by coming back to one of the announcements made today: Amazon Security Lake.

Basically, the principle is pretty similar to CloudTrail Lake, in the sense that it offers a central location for your security data. It also works across regions and accounts… but in addition it allows the integration of third-party sources, so you can even aggregate data from on-premises environments, for instance.

This works because Security Lake has adopted the Open Cybersecurity Schema Framework (OCSF), an open standard that normalizes security data from different sources into a common schema.

For the moment, this new feature is in preview and only available in 7 regions… but it is surely something my Security Officer will be looking forward to playing with 😉
