This week I feel lucky to attend the AWS re:Invent conference in Las Vegas. The event is so big that there are many ways to go through it. For the first day, I mixed breakout sessions with a workshop.

Cloud and DevOps

The very first session was about combining Terraform and GitHub Actions to deploy infrastructure in AWS. The presentation started with a discussion of the DevOps methodology and how to apply it to infrastructure. DevOps does not mean everyone should do everything. It is more about putting in place the teams and the organisation that allow better cooperation between operations and developers.

Deployment itself is quite challenging because, for security reasons, you should avoid storing an access key in your pipeline. Instead, the demo showed how to use GitHub as an OpenID Connect provider so that GitHub Actions can assume a role in your AWS account.
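
To make the idea more concrete, here is a minimal sketch of the kind of IAM trust policy such a role needs. It is not the code from the session: the function name, the account ID and the repository name are placeholders I made up, and I assume the GitHub OIDC provider has already been registered in the account.

```python
import json

# Issuer host of the OIDC tokens that GitHub Actions presents to AWS.
GITHUB_OIDC_HOST = "token.actions.githubusercontent.com"


def github_oidc_trust_policy(account_id: str, repo: str, branch: str = "main") -> dict:
    """Build a trust policy that lets one repository branch assume a role.

    Hypothetical helper for illustration; `repo` is "owner/name".
    """
    provider_arn = f"arn:aws:iam::{account_id}:oidc-provider/{GITHUB_OIDC_HOST}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The role trusts the federated OIDC provider, not an IAM user.
                "Principal": {"Federated": provider_arn},
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        # Token must be issued for AWS STS...
                        f"{GITHUB_OIDC_HOST}:aud": "sts.amazonaws.com",
                        # ...and come from this repository and branch only.
                        f"{GITHUB_OIDC_HOST}:sub": f"repo:{repo}:ref:refs/heads/{branch}",
                    }
                },
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder account ID and repository, for illustration only.
    policy = github_oidc_trust_policy("123456789012", "example-org/example-repo")
    print(json.dumps(policy, indent=2))
```

The important part is the condition on the `sub` claim: without it, any repository on GitHub could try to assume the role. No long-lived access key appears anywhere, since the pipeline exchanges a short-lived token for temporary credentials.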

Optimization of apps in the Cloud

My favorite session type is the workshop because you come with your laptop and get some hands-on practice on a dedicated topic. Today’s workshop was about finding performance issues in cloud applications.

They provided each of us with an AWS account with the application already deployed, and our goal was to enable monitoring, detect the issue and resolve it. At the beginning of the workshop, they took as an example an email we could get from a manager or a customer:

Can you please have a look as soon as possible, the application is very “slow” today?

“It’s slow” is probably the most famous complaint we get when working in operations. But it is also not very useful because it does not help to spot where the problem is. When working on performance, observability is key and having a baseline is really important.
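
As a small illustration of why a baseline matters, here is a sketch that turns "slow" into a measurable statement by comparing the 95th percentile latency of today against a baseline. This is my own example, not workshop material: the function names, the 20% tolerance and the sample values are all assumptions.

```python
import statistics


def p95(samples_ms):
    """95th percentile of latency samples, in milliseconds.

    statistics.quantiles with n=20 returns 19 cut points in 5% steps;
    the last one is the 95th percentile.
    """
    return statistics.quantiles(samples_ms, n=20)[-1]


def is_slower_than_baseline(baseline_ms, current_ms, tolerance=1.2):
    """Return True when the current p95 exceeds the baseline p95 by more
    than the tolerance factor (1.2 means 20% slower, an arbitrary choice)."""
    return p95(current_ms) > tolerance * p95(baseline_ms)


if __name__ == "__main__":
    # Made-up latency samples, for illustration only.
    baseline = [95, 100, 102, 98, 110, 105, 99, 101, 97, 103]
    today = [180, 210, 190, 250, 300, 220, 205, 199, 240, 260]
    print("slower than baseline:", is_slower_than_baseline(baseline, today))
```

With a check like this, the complaint becomes a comparison between two sets of measurements, and you know which metric to look at first.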

The workshop was split into 2 application types: a serverless application using Python Lambda functions and a container application. We had to work with different services, like Lambda Insights in CloudWatch and AWS X-Ray, to monitor the application. Then, using DevOps Guru, it is possible to get automated recommendations.

Sustainability in AWS

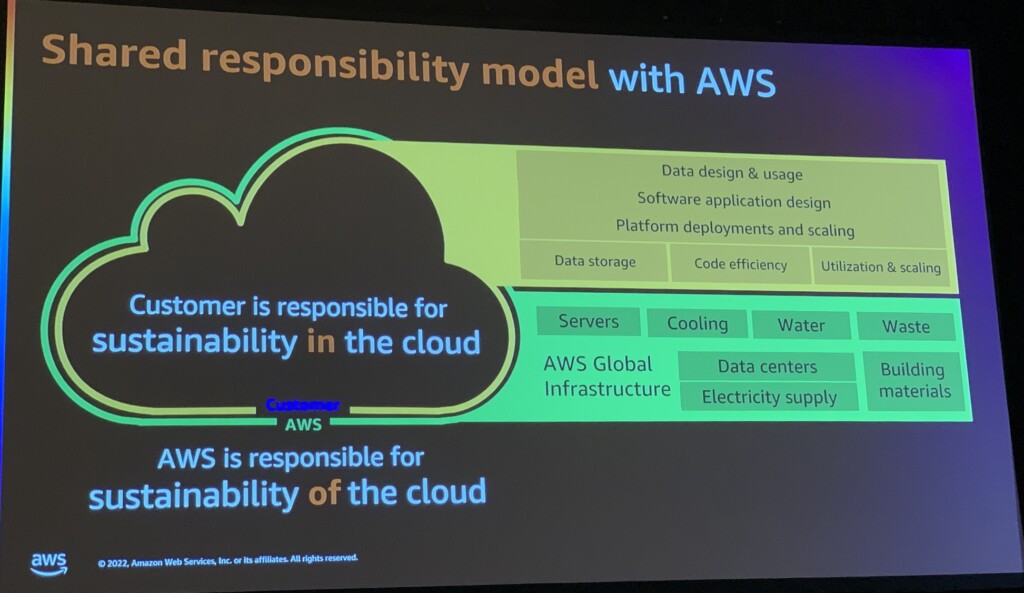

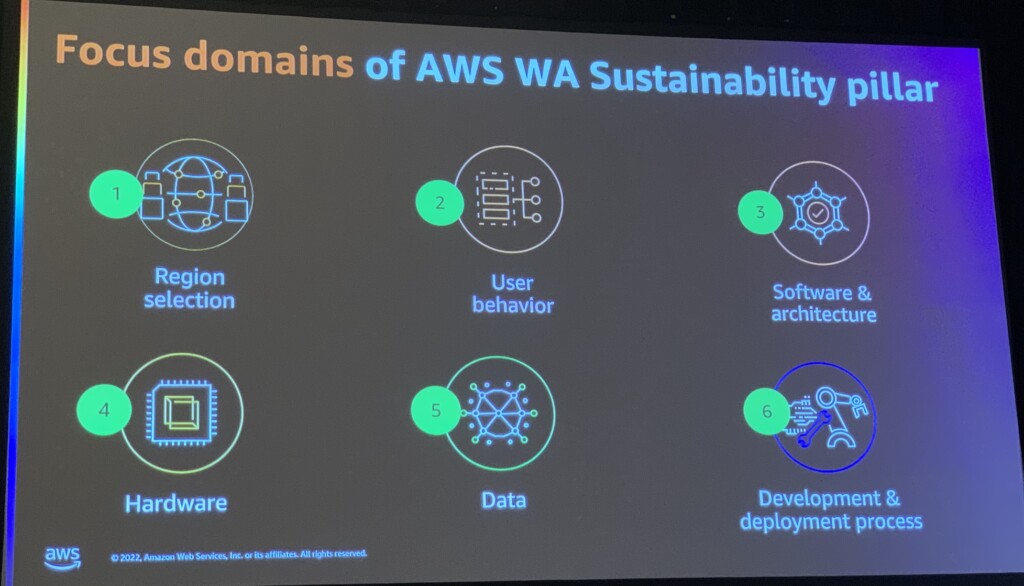

Then I had a few more breakout sessions: one about network architecture for security, which was quite advanced, and one about sustainability in our cloud architectures. AWS is aware of its impact and started to provide reports about sustainability in 2019. Last year they announced a new sustainability pillar in the AWS Well-Architected Framework.

AWS is responsible for the sustainability of the platform itself and, as customers, we are responsible for the architecture we put in place. As with performance, it is important to have good metrics to measure the impact of the applications. One important point to keep in mind is to relate the metric to the business to avoid meaningless numbers.

They took an example about New York taxis and related the AWS Glue consumption to the number of rides. You can then set a goal to improve your sustainability and measure the impact of different scenarios. In the demo, it was possible to reduce the resources used by Glue by changing the file format in the data lake from plain CSV to Apache Parquet. The compression and the ability to filter upfront reduced the amount of data to process.
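
Relating a resource metric to a business metric can be sketched in a few lines. The example below assumes Glue consumption is expressed in DPU-hours and normalizes it per 1,000 rides. The function names and all the figures are invented for illustration and are not the numbers shown in the session.

```python
def dpu_hours_per_1000_rides(dpu_hours: float, rides: int) -> float:
    """Normalize Glue consumption by business volume (hypothetical helper)."""
    if rides <= 0:
        raise ValueError("rides must be a positive number")
    return dpu_hours / rides * 1000


def relative_reduction(before: float, after: float) -> float:
    """Fraction by which the normalized metric dropped between two scenarios."""
    return (before - after) / before


if __name__ == "__main__":
    # Invented figures: same number of rides processed, two file formats.
    rides = 2_000_000
    csv_metric = dpu_hours_per_1000_rides(40.0, rides)
    parquet_metric = dpu_hours_per_1000_rides(10.0, rides)
    print(f"CSV:     {csv_metric:.4f} DPU-hours per 1000 rides")
    print(f"Parquet: {parquet_metric:.4f} DPU-hours per 1000 rides")
    print(f"Reduction: {relative_reduction(csv_metric, parquet_metric):.0%}")
```

Because the metric is divided by the number of rides, it stays comparable when the business grows, which a raw DPU-hours total would not.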
