In many blogs or articles, we can read that it is recommended to recycle the error log to be sure that the size is not too big

In the Best Practice Analyzer (BPA) for SQL Server, this is a rule.

But what is a good size for the error log file?

I have often heard “it depends”, but in my opinion, this is not a good answer

The first step is to find out what is a good size for the error log file.

Step 1: Identify the error log size threshold

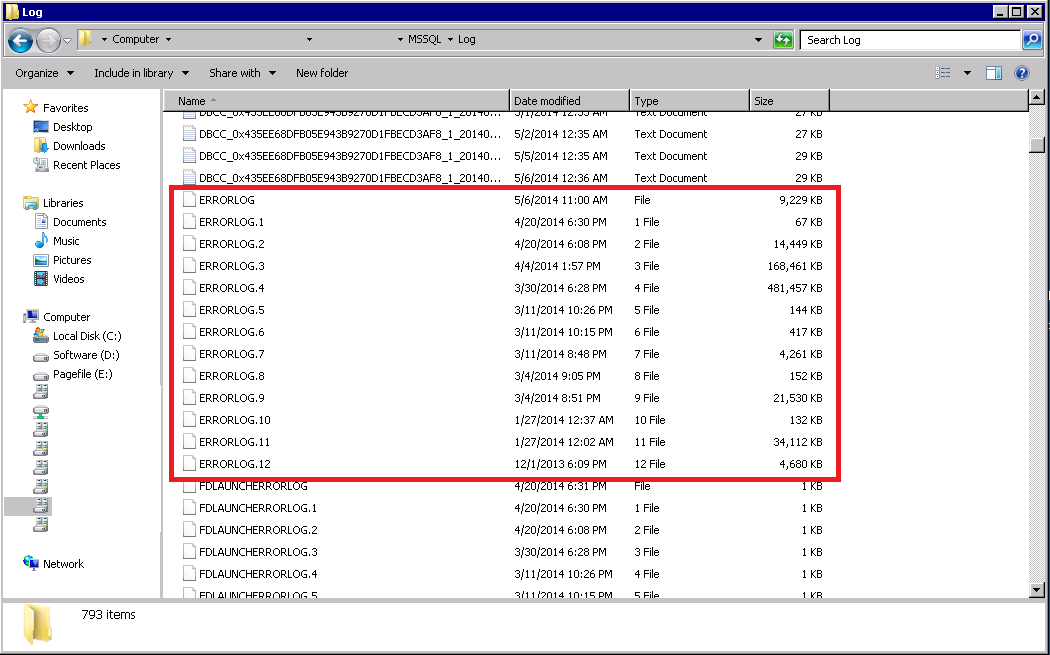

To find an acceptable limit, I first checked the server. The easiest way is to go to the windows explorer on the server, open the error log path and look at the size by file as showed on the following picture:

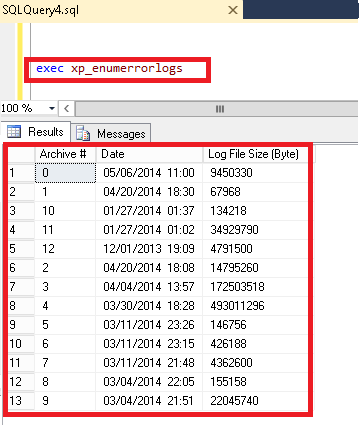

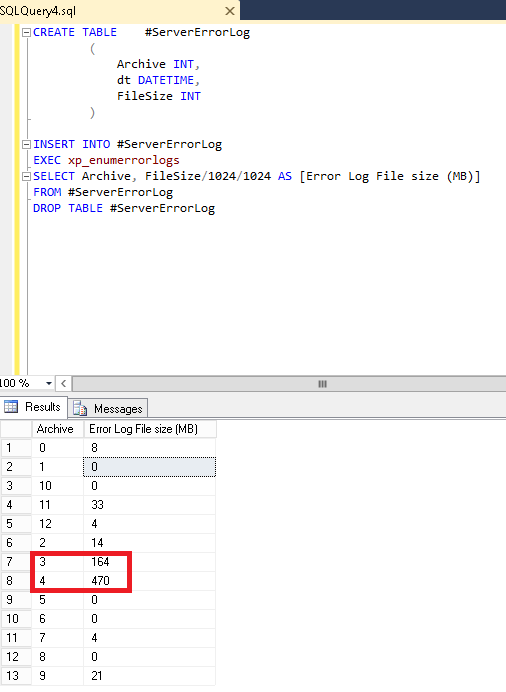

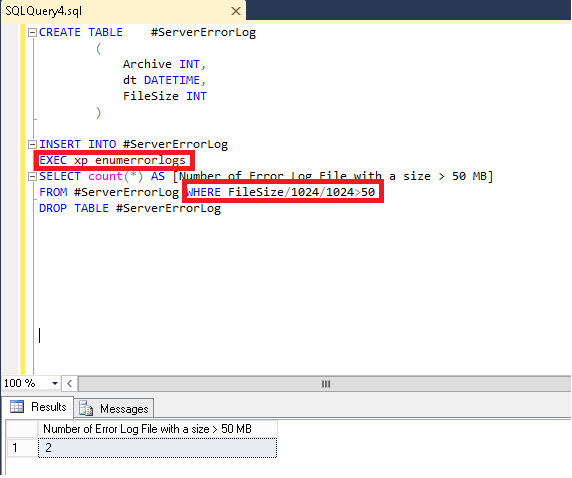

You can also use the undocumented stored procedure xp_enumerrorlogs, which will be useful in my case because I will create a policy that will verify each error log file size against a configured threshold:

xp_enumerrorlogs provides the following information:

- Archive Number

- Creation Date (recycling date)

- Size in byte

On my server, I noticed I have 13 archive files with different sizes, but I have not a strategy to recycle the error log file. Thus, it is recycled during the SQL Server Engine service restart.

For a production server, this can be long to read and display the error log file. Furthermore the error log can become very very big.

At the end of this article, I will give you a dbi best practice for the error log.

In my sample, I will focus with 4 archives: 2 small size archive files with number 2 and 11 and 2 big size archive files with number 3 & 4.

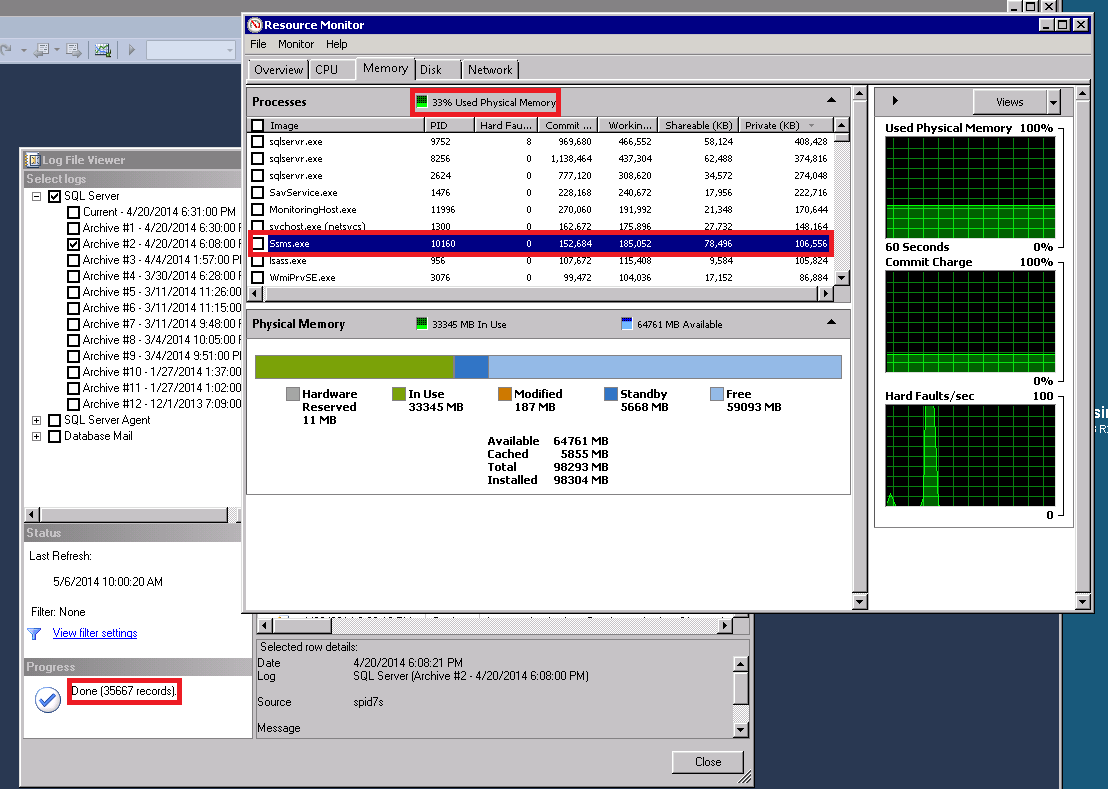

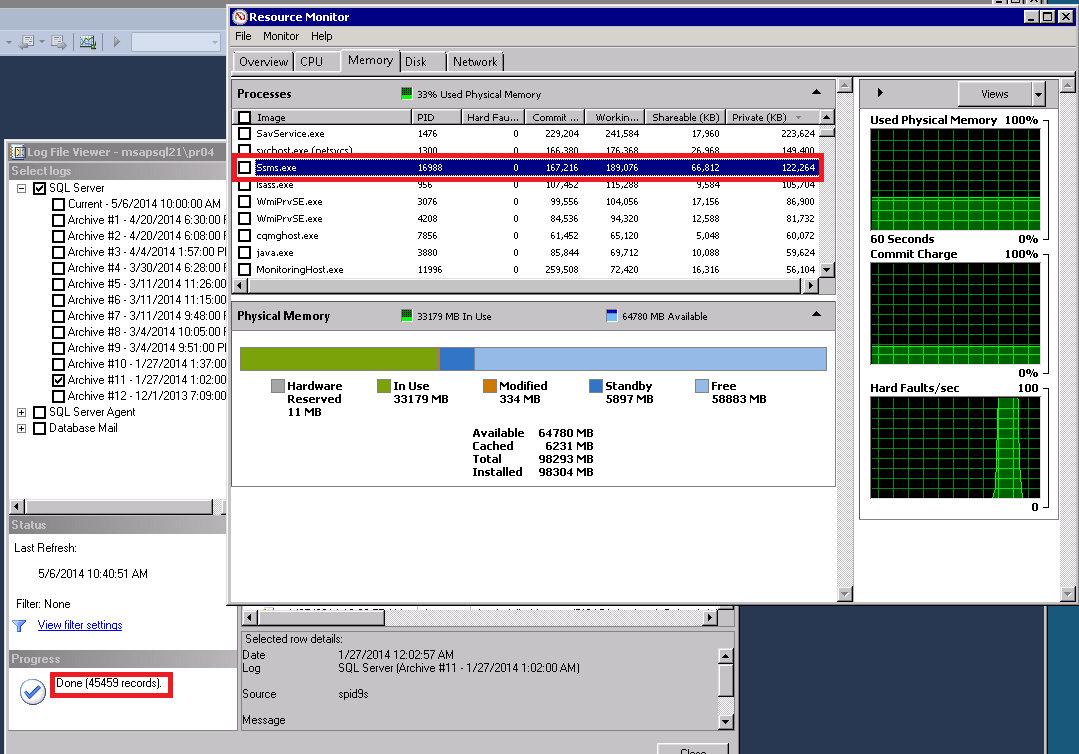

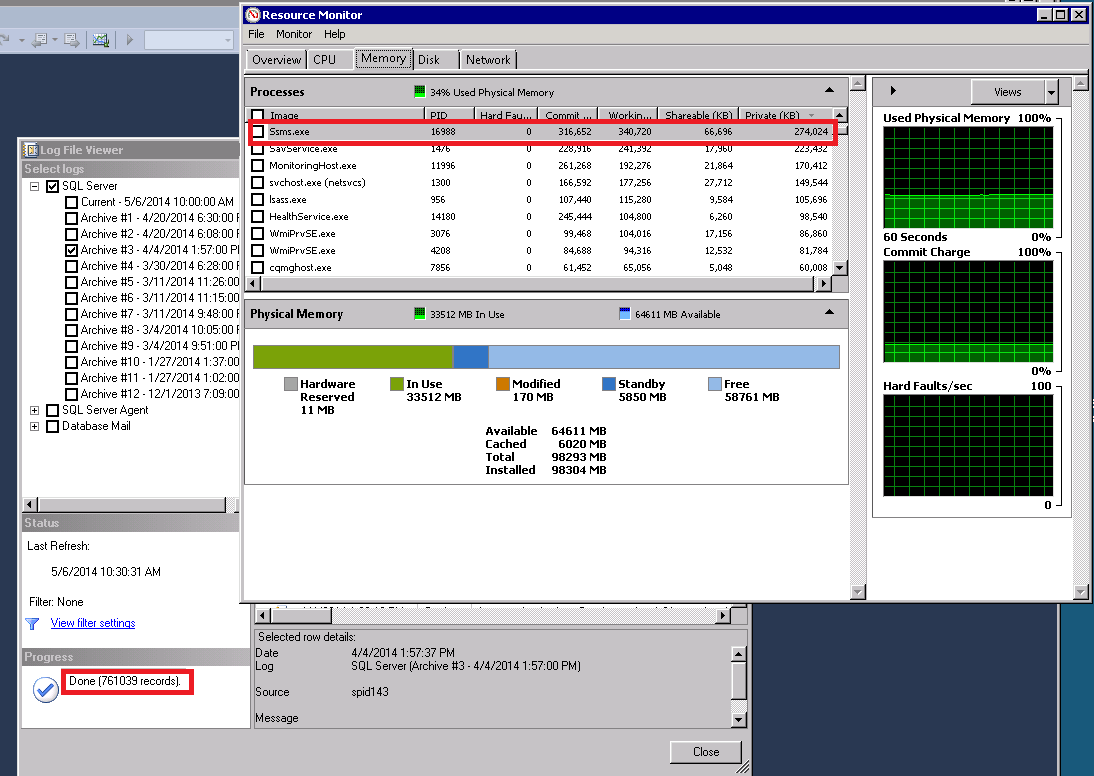

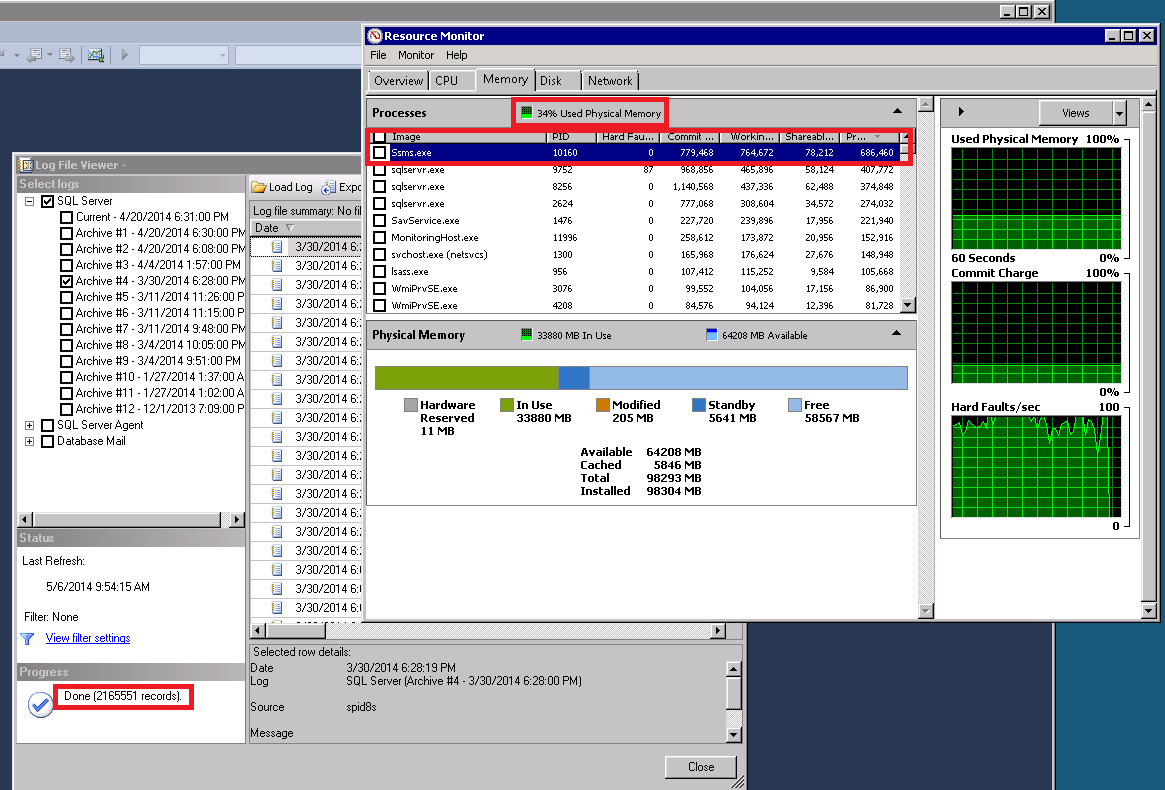

I will run SQL Server Management Studio (SSMS) to display one archive after the other and in parallel, I will run the resource manager to see the memory used by SSMS.

Result for Archive Number 2

Result for Archive Number 11

Result for Archive Number 3

Result for Archive Number 4

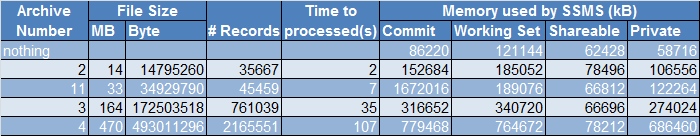

The following table summarizes the results of each error log files reading:

Time result

We can notice that between the archive number 11 with 45459 records and the archive number 3 with 761039 records, the time to read is approximately 30s.

The archive number 4 run more than 1 minutes… I can easily take a coffee!:-o

Memory result

The private memory column gives us an important information here: we can see that for the archive number 11, the memory has doubled in size.

Furthermore, for the Archive number 3, we have 4 times more and for the Archive number 4 we have 11 times more.

As the reminder, this metric is typically used to measure the memory impact of an application.

According to these information, I can deduce that a good error log file size is between 33MB and 164MB.

To be comfortable, I choose a policy threshold value of 50MB.

Step2: Creating the script

I create a table with the information provided from the xp_enumerrorlogs.

I divide the size per 1024 to have kB and again to have MB.

In this picture, you can see that I have 2 archive sizes greater than the 50Mb threshold value.

So, to create my policy condition I have to count the number of files whose size is greater than this threshold value.

And of course in my sample, I get 2. Perfect!:-)

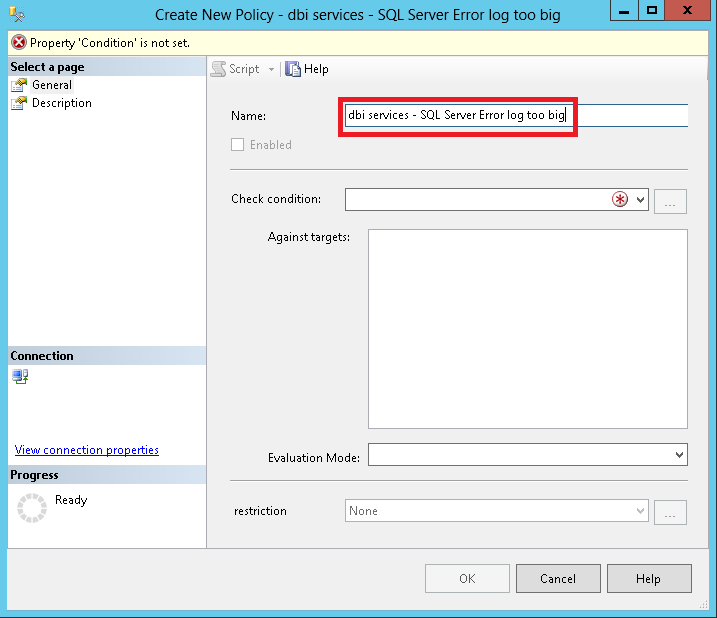

Step3: Create the policy

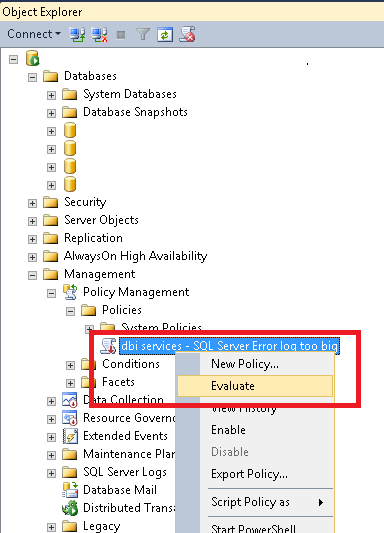

I create the new policy “dbi services – SQL Server Error log too big”

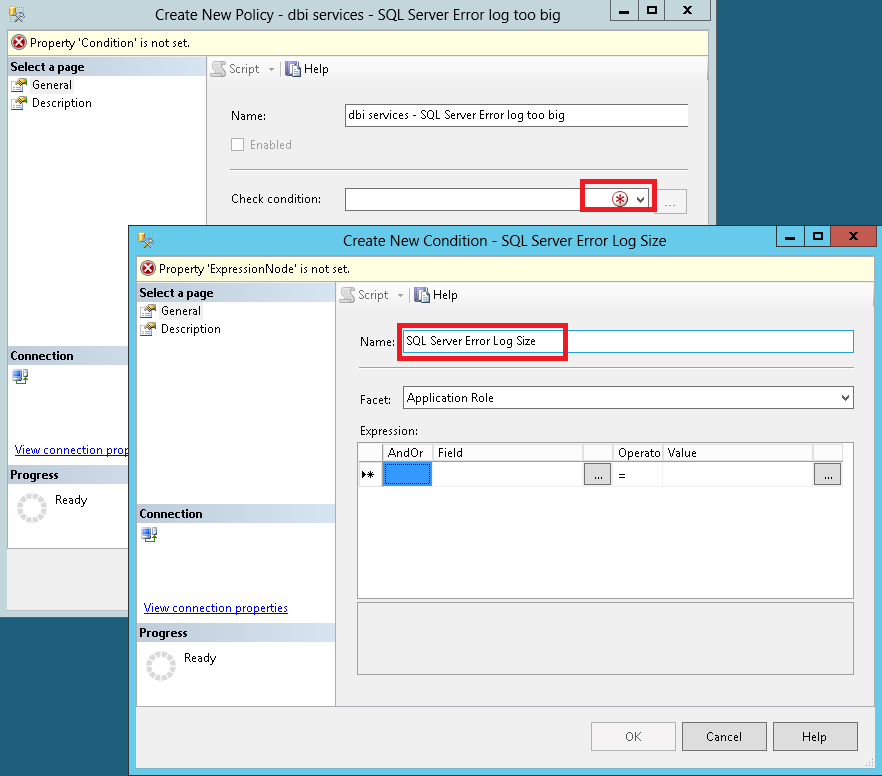

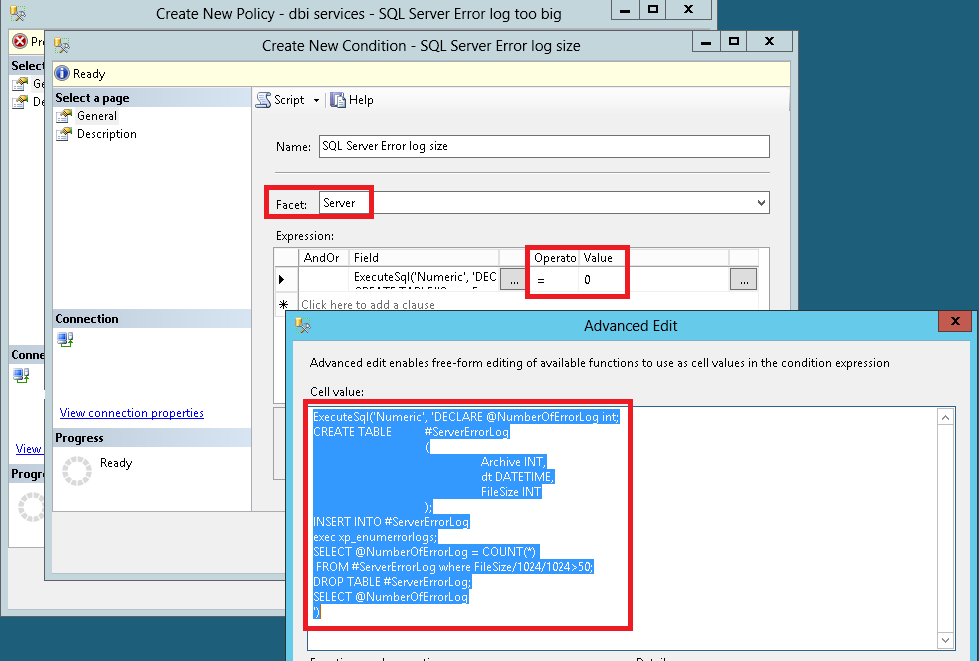

I create a new condition “SQL Server Error log size”

If it is greater than 0, the condition is not meet and it implies that I have an error log file greater than 50Mb.

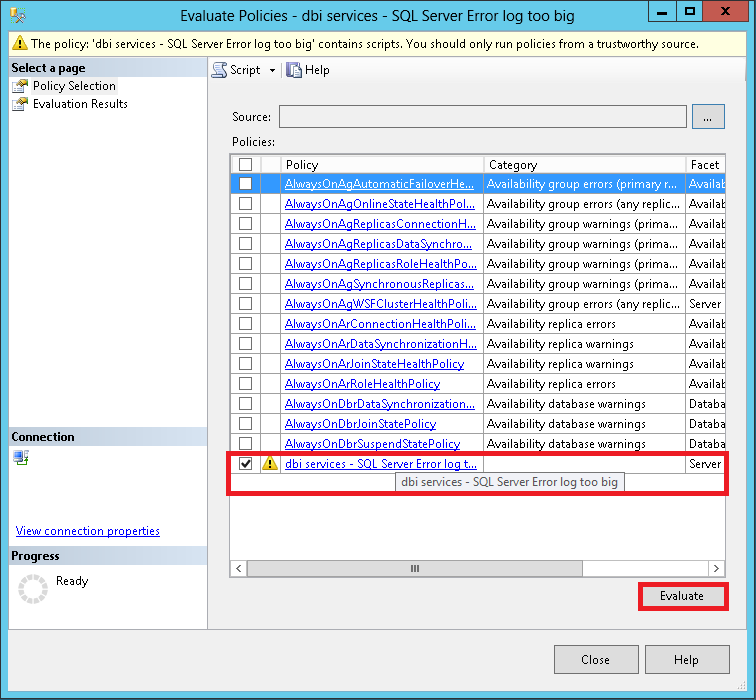

And now I can evaluate the policy against my error log files on my server as following:

Click on evaluate and…

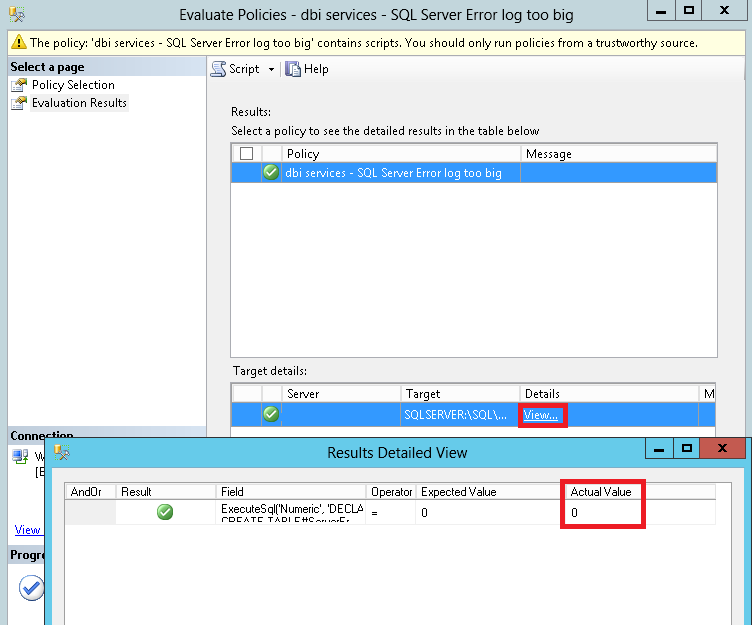

On this test server, the condition is met because I have not a big error log file (> 50MB).

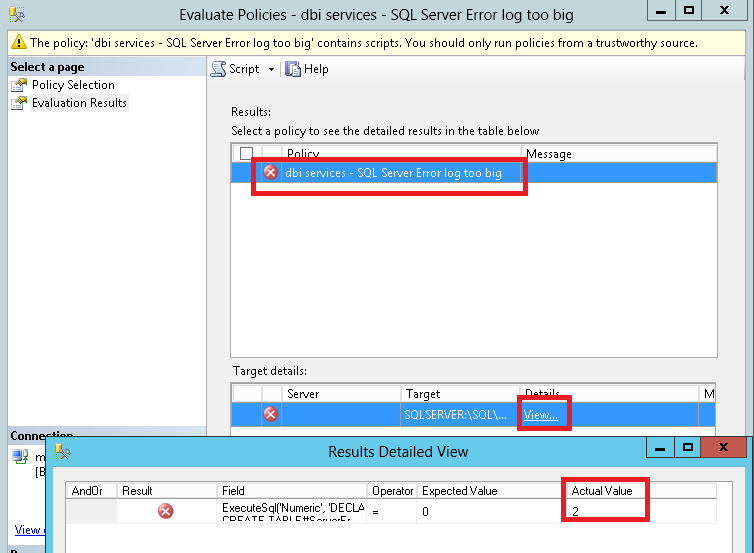

Then, I export the policy on the sample server that has 2 error log files with a size bigger than 50MB.

So, now if I evaluate my policy against this server…

… as expected it fails because we have two error log files that does not meet the condition.

I can easily deploy this policy on all servers or by using Enterprise Policy Management (EPM) on codeplex and the Central Management Server feature (CMS).

Conclusion

I recommend to use this policy to check the error log file size.

My best practice here is to recycle the error log every day with a cycle of 30 files (30 days) per default in a maintenance job.

If this policy failed with this best practice, then you can be sure that you have a lot of errors or too much information (like trace flag activation).

I hope this can help you and click here dbi-services—SQL-Server-Error-log-too-big.zip to download the policy to try it.

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/STH_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2025/11/LTO_WEB.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2025/05/JDE_Web-1-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/DWE_web-min-scaled.jpg)