Automation is key today, nobody wants to do the same tasks over and over and again. Cloud without automation is not even possible. There are several tools around that help with automation and one of the most popular is Ansible. We already have several posts about Ansible on our blog platform but this one will be rather long. Setting up PostgreSQL high available architectures is our daily business and we as well try to automate as much as possible. We do not only automate to save time, even more important we automate to avoid human errors. What we will share with this post is how you could use Ansible to bring up a three node Patroni cluster from scratch.

Disclaimer: Please see what we show here as a kind of template. You might need to adjust several bits to fit into your environment, other bits for sure can be solved more elegant by using advanced features of Ansible. Anyway, using this template you should be able to bring up one PostgreSQL master instance, two replicas, Patroni and HAProxy in minutes on CentOS 7. This should work the same for Red Hat 7 but if you want to do the same on Debian based systems or SUSE you for sure need to adjust some of the Ansible tasks. This post does not explain how Ansible works nor does it explain what Patroni or HAProxy is.

The starting point is a CentOS minimal installation with just the postgres user and group created and sudo permissions for postgres. That’s it:

postgres@patroni1 ~]$ id -a uid=1000(postgres) gid=1000(postgres) groups=1000(postgres) context=unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023 [postgres@patroni1 ~]$ lsb_release -a LSB Version: :core-4.1-amd64:core-4.1-noarch:cxx-4.1-amd64:cxx-4.1-noarch:desktop-4.1-amd64:desktop-4.1-noarch:languages-4.1-amd64:languages-4.1-noarch:printing-4.1-amd64:printing-4.1-noarch Distributor ID: CentOS Description: CentOS Linux release 7.5.1804 (Core) Release: 7.5.1804 Codename: Core [postgres@patroni1 ~]$ sudo cat /etc/sudoers | grep postgres postgres ALL=(ALL) NOPASSWD: ALL [postgres@patroni1 ~]$

This is the Ansible directory structure on my workstation:

dwe@dwe:~/Documents/dbiProjects/dbi_dmk_postgres/dmk/ansible$ tree

.

├── _commands.sh

├── _init_dirs.sh

├── patroni

└── roles

├── common

│ ├── files

│ │ └── PostgreSQL-DMK-17-09.4.zip

│ ├── handlers

│ ├── meta

│ ├── tasks

│ │ └── main.yml

│ ├── templates

│ │ └── compile.sh.j2

│ └── vars

└── patroni

├── files

│ ├── etcd.service

│ └── patroni.service

├── handlers

├── meta

├── site.retry

├── site.yml

├── tasks

│ └── main.yml

├── templates

│ ├── etcd.conf.j2

│ ├── haproxy.cfg.j2

│ ├── hosts.j2

│ ├── keepalived.conf.j2

│ └── patroni.yml.j2

└── vars

You can use the _init_dirs.sh script to create that but it is pretty much the Ansible default anyway:

dwe@dwe:~/Documents/dbiProjects/dbi_dmk_postgres/dmk/ansible$ cat _init_dirs.sh #!/bin/bash touch patroni mkdir roles/ mkdir roles/common mkdir roles/common/tasks mkdir roles/common/handlers mkdir roles/common/templates mkdir roles/common/files mkdir roles/common/vars mkdir roles/common/meta mkdir roles/patroni mkdir roles/patroni/tasks mkdir roles/patroni/handlers mkdir roles/patroni/templates mkdir roles/patroni/files mkdir roles/patroni/vars mkdir roles/patroni/meta

What you always need with Ansible is the inventory and in our case it looks like this:

dwe@dwe:~/Documents/dbiProjects/dbi_dmk_postgres/dmk/ansible$ cat patroni [patroni-servers] 192.168.22.240 keepalived_role=MASTER keepalived_priority=102 ansible_hostname=patroni1 ansible_hostname_fqdn=patroni1.it.dbi-services.com 192.168.22.241 keepalived_role=SLAVE keepalived_priority=101 ansible_hostname=patroni2 ansible_hostname_fqdn=patroni2.it.dbi-services.com 192.168.22.242 keepalived_role=SLAVE keepalived_priority=100 ansible_hostname=patroni3 ansible_hostname_fqdn=patroni3.it.dbi-services.com [patroni-servers:vars] postgresql_version=11.1 postgresql_major_version=11 dmk_postgresql_version=11/db_1 etcd_vserion=3.3.10 postgres_user=postgres postgres_group=postgres dmk_version=17-09.4 cluster_name=PG1 blank=' ' virtual_ip=192.168.22.245

As you can see there are three machines and several variables defined. The *dmk* stuff if for our management kit, just ignore/delete that for your environment.

We have two roles, one common and one for Patroni. The common role is responsible for doing the common stuff and can be used for single instance PostgreSQL deployments as well so lets start with this one:

dwe@dwe:~/Documents/dbiProjects/dbi_dmk_postgres/dmk/ansible$ cat roles/common/tasks/main.yml

- name: Install all dependencies for PostgreSQL

yum: name={{item}} state=present

with_items:

- gcc

- openldap-devel

- python-devel

- readline-devel

- redhat-lsb

- bison

- flex

- perl-ExtUtils-Embed

- zlib-devel

- crypto-utils

- openssl-devel

- pam-devel

- libxml2-devel

- libxslt-devel

- openssh-clients

- bzip2

- net-tools

- wget

- screen

- unzip

- sysstat

- xorg-x11-xauth

- systemd-devel

- bash-completion

- name: Remove iwl packages

yum: name={{item}} state=removed

with_items:

- iwl*

- name: upgrade all packages

yum:

name: '*'

state: latest

- file:

path: /u01/app/{{ postgres_user }}/local

state: directory

mode: 0700

recurse: yes

owner: "{{ postgres_user }}"

group: "{{ postgres_group }}"

- file:

path: /u01

owner: "{{ postgres_user }}"

group: "{{ postgres_user }}"

mode: 0700

- file:

path: /u01/app

owner: "{{ postgres_user }}"

group: "{{ postgres_group }}"

mode: 0700

- file:

path: /u01/app/{{ postgres_user }}

owner: "{{ postgres_user }}"

group: "{{ postgres_group }}"

mode: 0700

- file:

path: /u02/pgdata/

state: directory

mode: 0700

owner: "{{ postgres_user }}"

group: "{{ postgres_group }}"

- file:

path: /u02/pgdata/{{ postgresql_major_version }}

state: directory

mode: 0700

owner: "{{ postgres_user }}"

group: "{{ postgres_group }}"

- file:

path: /u02/pgdata/{{ postgresql_major_version }}/{{ cluster_name }}

state: directory

mode: 0700

owner: "{{ postgres_user }}"

group: "{{ postgres_group }}"

- file:

path: /u99/pgdata/

state: directory

mode: 0700

owner: "{{ postgres_user }}"

group: "{{ postgres_group }}"

- file:

path: /etc/pgtab

state: touch

owner: "{{ postgres_user }}"

group: "{{ postgres_group }}"

mode: 0600

- name: check if PostgreSQL source code exists

stat:

path: /home/{{ postgres_user }}/source.tar.bz2

register: source_available

- name: Download the PostgreSQL source code if it is not already there

get_url:

url: https://ftp.postgresql.org/pub/source/v{{ postgresql_version }}/postgresql-{{ postgresql_version }}.tar.bz2

dest: /home/{{ postgres_user }}/source.tar.bz2

mode: 0775

when: source_available.stat.exists == false

- name: Check if PostgreSQL is already installed

stat:

path: /u01/app/{{ postgres_user }}/product/{{ dmk_postgresql_version }}/bin/postgres

register: postrgresql_is_installed

- name: extract the sources when PostgreSQL is not already installed

shell: cd /home/{{ postgres_user }}; tar -axf source.tar.bz2

become: yes

become_user: "{{ postgres_user }}"

when: postrgresql_is_installed.stat.exists == false

- template:

src: compile.sh.j2

dest: /home/{{ postgres_user }}/postgresql-{{ postgresql_version }}/compile.sh

owner: "{{ postgres_user }}"

group: "{{ postgres_group }}"

mode: 0700

- name: Install PostgreSQL from source code

shell: cd /home/{{ postgres_user }}/postgresql-{{ postgresql_version }}; ./compile.sh

become: yes

become_user: "{{ postgres_user }}"

when: postrgresql_is_installed.stat.exists == false

- name: check if DMK for PostgreSQL source code exists

stat:

path: /u01/app/{{ postgres_user }}/local/PostgreSQL-DMK-{{ dmk_version }}.zip

register: dmk_source_available

- name: check if DMK for PostgreSQL is extracted

stat:

path: /u01/app/{{ postgres_user }}/local/dmk/bin/dmk.bash

register: dmk_extracted

- name: Copy DMK source distribution

copy:

src: PostgreSQL-DMK-{{ dmk_version }}.zip

dest: /u01/app/{{ postgres_user }}/local/PostgreSQL-DMK-{{ dmk_version }}.zip

owner: "{{ postgres_user }}"

group: "{{ postgres_group }}"

mode: 0700

when: dmk_source_available.stat.exists == false

- name: extract DMK

shell: cd /u01/app/{{ postgres_user }}/local; unzip PostgreSQL-DMK-{{ dmk_version }}.zip

become: yes

become_user: "{{ postgres_user }}"

when: dmk_extracted.stat.exists == false

- name: check if DMK is installed

stat:

path: /home/{{ postgres_user }}/.DMK_HOME

register: dmk_installed

- lineinfile:

path: /etc/pgtab

line: 'pg{{ postgresql_version }}:/u01/app/{{ postgres_user }}/product/{{ dmk_postgresql_version }}:dummy:9999:D'

create: no

when: dmk_installed.stat.exists == false

- name: Execute DMK for the first time

shell: /u01/app/{{ postgres_user }}/local/dmk/bin/dmk.bash; cat /u01/app/{{ postgres_user }}/local/dmk/templates/profile/dmk.postgres.profile >> /home/{{ postgres_user }}/.bash_profile

become: yes

become_user: "{{ postgres_user }}"

when: dmk_installed.stat.exists == false

This should be more or less self explaining so we will only summarize what it does:

- Install required packages for compiling PostgreSQL from source

- Remove the iwl* packages

- Update all packages to the latest release

- Create the directory structure

- Download the PostgreSQL source code, compile and install

- Install our DMK

As said, this role can be included in any other PostgreSQL setup as it only does basic stuff. There is one template used here, which is compile.sh.j2:

dwe@dwe:~/Documents/dbiProjects/dbi_dmk_postgres/dmk/ansible$ cat roles/common/templates/compile.sh.j2

PGHOME=/u01/app/postgres/product/{{ dmk_postgresql_version }}

SEGSIZE=2

BLOCKSIZE=8

./configure --prefix=${PGHOME} \

--exec-prefix=${PGHOME} \

--bindir=${PGHOME}/bin \

--libdir=${PGHOME}/lib \

--sysconfdir=${PGHOME}/etc \

--includedir=${PGHOME}/include \

--datarootdir=${PGHOME}/share \

--datadir=${PGHOME}/share \

--with-pgport=5432 \

--with-perl \

--with-python \

--with-openssl \

--with-pam \

--with-ldap \

--with-libxml \

--with-libxslt \

--with-segsize=${SEGSIZE} \

--with-blocksize=${BLOCKSIZE} \

--with-systemd "

make -j 2 all

make install

cd contrib

make -j 2 install

This one is our standard way of bringing PostgreSQL onto the system and the only parameter is the PostgreSQL version we use for the directory name. No magic, simple stuff and that’s it for the common role.

Coming to the Patroni role. Here is it:

dwe@dwe:~/Documents/dbiProjects/dbi_dmk_postgres/dmk/ansible$ cat roles/patroni/tasks/main.yml

---

- name: check if epel rpm already is there

stat:

path: /root/epel-release-latest-7.noarch.rpm

register: epel_rpm_available

- name: Download the EPEL rpm

get_url:

url: http://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

dest: /root/epel-release-latest-7.noarch.rpm

mode: 0440

when: epel_rpm_available.stat.exists == false

- name: check if epel repository is already installed

stat:

path: /etc/yum.repos.d/epel.repo

register: epel_installed

- name: Install the EPEL rpm

shell: yum localinstall -y /root/epel-release-latest-7.noarch.rpm

args:

warn: false

when: epel_installed.stat.exists == false

- name: Install all dependencies for Patroni

yum: name={{item}} state=present

with_items:

- python-pip

- PyYAML

- bind-utils

- keepalived

- haproxy

# create the hosts file

- template:

src: hosts.j2

dest: /etc/hosts

owner: root

group: root

mode: 0644

- name: Create the file to load the watchdog module

file:

path: /etc/modules-load.d/softdog.conf

state: touch

- name: Add the watchdog module

shell: modprobe softdog

- name: Change ownershhip of the watchdog device

shell: chown postgres /dev/watchdog

args:

warn: false

- name: check if etcd sources already exist

stat:

path: /home/{{ postgres_user }}/etcd-v{{ etcd_vserion }}-linux-amd64.tar.gz

register: etcd_source_available

- name: Download etcd

get_url:

url: https://github.com/etcd-io/etcd/releases/download/v{{ etcd_vserion }}/etcd-v{{ etcd_vserion }}-linux-amd64.tar.gz

dest: /home/{{ postgres_user }}/etcd-v{{ etcd_vserion }}-linux-amd64.tar.gz

mode: 0755

when: etcd_source_available.stat.exists == false

- name: check if etcd is available in DMK

stat:

path: /u01/app/{{ postgres_user }}/local/dmk/bin/etcd

register: etcd_copied_to_dmk

- name: extract etcd

shell: cd /home/{{ postgres_user }}/; tar -axf etcd-v{{ etcd_vserion }}-linux-amd64.tar.gz

become: yes

become_user: "{{ postgres_user }}"

when: etcd_copied_to_dmk.stat.exists == false

- name: copy etcd to DMK

shell: cp /home/{{ postgres_user }}/etcd-v{{ etcd_vserion }}-linux-amd64/etcd* /u01/app/{{ postgres_user }}/local/dmk/bin/

become: yes

become_user: "{{ postgres_user }}"

when: etcd_copied_to_dmk.stat.exists == false

- template:

src: etcd.conf.j2

dest: /u01/app/{{ postgres_user }}/local/dmk/etc/etcd.conf

owner: "{{ postgres_user }}"

group: "{{ postgres_group }}"

mode: 0700

- name: Copy the etcd systemd service file

copy:

src: etcd.service

dest: /etc/systemd/system/etcd.service

owner: root

group: root

mode: 0755

- file:

path: /u02/pgdata/etcd

state: directory

mode: 0700

recurse: yes

owner: "{{ postgres_user }}"

group: "{{ postgres_group }}"

- name: force systemd to reread configs

systemd:

daemon_reload: yes

- name: Enable the systemd etcd service

systemd:

name: etcd

enabled: yes

- name: Start the systemd etcd service

shell: systemctl start etcd.service

- name: check if patroni is alraedy installed

stat:

path: /home/{{ postgres_user }}/.local/bin/patroni

register: patroni_is_installed

- name: install and upgrade pip

shell: pip install --upgrade pip

when: patroni_is_installed.stat.exists == false

- name: install and upgrade setuptools

become: yes

become_user: "{{ postgres_user }}"

shell: pip install --upgrade --user setuptools

when: patroni_is_installed.stat.exists == false

- name: install psycopg2-binary

become: yes

become_user: "{{ postgres_user }}"

shell: pip install --user psycopg2-binary

when: patroni_is_installed.stat.exists == false

- name: install patroni

become: yes

become_user: "{{ postgres_user }}"

shell: pip install --user patroni[etcd]

when: patroni_is_installed.stat.exists == false

- file:

src: /home/{{ postgres_user }}/.local/bin/patroni

dest: /u01/app/{{ postgres_user }}/local/dmk/bin/patroni

owner: "{{ postgres_user }}"

group: "{{ postgres_user }}"

state: link

- file:

src: /home/{{ postgres_user }}/.local/bin/patronictl

dest: /u01/app/{{ postgres_user }}/local/dmk/bin/patronictl

owner: "{{ postgres_user }}"

group: "{{ postgres_user }}"

state: link

- template:

src: patroni.yml.j2

dest: /u01/app/{{ postgres_user }}/local/dmk/etc/patroni.yml

owner: "{{ postgres_user }}"

group: "{{ postgres_group }}"

mode: 0600

- name: Copy the patroni systemd service file

copy:

src: patroni.service

dest: /etc/systemd/system/patroni.service

owner: root

group: root

mode: 0755

- name: force systemd to reread configs

systemd:

daemon_reload: yes

- name: Enable the systemd etcd service

systemd:

name: patroni

enabled: yes

# add the instance to /etc/pgtab so DMK is aware of if

- lineinfile:

path: /etc/pgtab

line: '{{ cluster_name }}:/u01/app/{{ postgres_user }}/product/{{ dmk_postgresql_version }}:/u02/pgdata/{{ postgresql_major_version }}/{{ cluster_name }}:5432:N'

- template:

src: haproxy.cfg.j2

dest: /etc/haproxy/haproxy.cfg

owner: "{{ postgres_user }}"

group: "{{ postgres_group }}"

mode: 0600

- name: Enable the systemd haproxy service

systemd:

name: haproxy

enabled: yes

# we need to set this so haproxy can be started

- name: Set selinux context for ha proxy

shell: setsebool -P haproxy_connect_any=1

- template:

src: keepalived.conf.j2

dest: /etc/keepalived/keepalived.conf

owner: "{{ postgres_user }}"

group: "{{ postgres_group }}"

mode: 0600

with_items:

- { role: "{{ hostvars[inventory_hostname].keepalived_role }}" , priority: "{{ hostvars[inventory_hostname].keepalived_priority }}" }

What it does:

- Install the Extra Packages for Enterprise Linux (EPEL)

- Install the dependencies for Patroni, HAProxy

- Create the /etc/hosts file

- Enable the watchdog service

- Download and install etcd

- Integrate etcd into systemd

- Install Patroni, create the configuration files and integrate it into systemd

- Install and configure HAProxy

This role uses several templates. The first one is used to create /etc/hosts:

dwe@dwe:~/Documents/dbiProjects/dbi_dmk_postgres/dmk/ansible$ cat roles/patroni/templates/hosts.j2

#jinja2: trim_blocks:False

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

{%- for h in ansible_play_hosts %}

{{ hostvars[h]['ansible_enp0s8']['ipv4']['address'] }} {{ hostvars[h]['ansible_hostname'] }}

{% endfor %}

The second one is used to create the etcd configuration:

dwe@dwe:~/Documents/dbiProjects/dbi_dmk_postgres/dmk/ansible$ cat roles/patroni/templates/etcd.conf.j2

name: {{ ansible_hostname }}

data-dir: /u02/pgdata/etcd

initial-advertise-peer-urls: http://{{ hostvars[inventory_hostname]['ansible_enp0s8']['ipv4']['address'] }}:2380

listen-peer-urls: http://{{ hostvars[inventory_hostname]['ansible_enp0s8']['ipv4']['address'] }}:2380

listen-client-urls: http://{{ hostvars[inventory_hostname]['ansible_enp0s8']['ipv4']['address'] }}:2379,http://localhost:2379

advertise-client-urls: http://{{ hostvars[inventory_hostname]['ansible_enp0s8']['ipv4']['address'] }}:2379

initial-cluster:{{ blank }} {%- for h in ansible_play_hosts %}

{{ hostvars[h]['ansible_hostname'] }}=http://{{ hostvars[h]['ansible_enp0s8']['ipv4']['address'] }}:2380{% if not loop.last %},{% endif %}

{% endfor %}

The third one creates the Patroni configuration:

dwe@dwe:~/Documents/dbiProjects/dbi_dmk_postgres/dmk/ansible$ cat roles/patroni/templates/patroni.yml.j2

scope: {{ cluster_name }}

#namespace: /service/

name: {{ ansible_hostname }}

restapi:

listen: {{ hostvars[inventory_hostname]['ansible_enp0s8']['ipv4']['address'] }}:8008

connect_address: {{ hostvars[inventory_hostname]['ansible_enp0s8']['ipv4']['address'] }}:8008

# certfile: /etc/ssl/certs/ssl-cert-snakeoil.pem

# keyfile: /etc/ssl/private/ssl-cert-snakeoil.key

# authentication:

# username: username

# password: password

# ctl:

# insecure: false # Allow connections to SSL sites without certs

# certfile: /etc/ssl/certs/ssl-cert-snakeoil.pem

# cacert: /etc/ssl/certs/ssl-cacert-snakeoil.pem

etcd:

host: 127.0.0.1:2379

bootstrap:

# this section will be written into Etcd:///config after initializing new cluster

# and all other cluster members will use it as a `global configuration`

dcs:

ttl: 30

loop_wait: 10

retry_timeout: 10

maximum_lag_on_failover: 1048576

postgresql:

use_pg_rewind: true

use_slots: true

parameters:

wal_level: 'hot_standby'

hot_standby: "on"

wal_keep_segments: 8

max_replication_slots: 10

wal_log_hints: "on"

listen_addresses: '*'

port: 5432

logging_collector: 'on'

log_truncate_on_rotation: 'on'

log_filename: 'postgresql-%a.log'

log_rotation_age: '1440'

log_line_prefix: '%m - %l - %p - %h - %u@%d - %x'

log_directory: 'pg_log'

log_min_messages: 'WARNING'

log_autovacuum_min_duration: '60s'

log_min_error_statement: 'NOTICE'

log_min_duration_statement: '30s'

log_checkpoints: 'on'

log_statement: 'ddl'

log_lock_waits: 'on'

log_temp_files: '0'

log_timezone: 'Europe/Zurich'

log_connections: 'on'

log_disconnections: 'on'

log_duration: 'on'

client_min_messages: 'WARNING'

wal_level: 'replica'

hot_standby_feedback: 'on'

max_wal_senders: '10'

shared_buffers: '128MB'

work_mem: '8MB'

effective_cache_size: '512MB'

maintenance_work_mem: '64MB'

wal_compression: 'off'

max_wal_senders: '20'

shared_preload_libraries: 'pg_stat_statements'

autovacuum_max_workers: '6'

autovacuum_vacuum_scale_factor: '0.1'

autovacuum_vacuum_threshold: '50'

archive_mode: 'on'

archive_command: '/bin/true'

wal_log_hints: 'on'

# recovery_conf:

# restore_command: cp ../wal_archive/%f %p

# some desired options for 'initdb'

initdb: # Note: It needs to be a list (some options need values, others are switches)

- encoding: UTF8

- data-checksums

pg_hba: # Add following lines to pg_hba.conf after running 'initdb'

- host replication replicator 192.168.22.0/24 md5

- host all all 192.168.22.0/24 md5

# - hostssl all all 0.0.0.0/0 md5

# Additional script to be launched after initial cluster creation (will be passed the connection URL as parameter)

# post_init: /usr/local/bin/setup_cluster.sh

# Some additional users users which needs to be created after initializing new cluster

users:

admin:

password: admin

options:

- createrole

- createdb

replicator:

password: postgres

options:

- superuser

postgresql:

listen: {{ hostvars[inventory_hostname]['ansible_enp0s8']['ipv4']['address'] }}:5432

connect_address: {{ hostvars[inventory_hostname]['ansible_enp0s8']['ipv4']['address'] }}:5432

data_dir: /u02/pgdata/{{ postgresql_major_version }}/{{ cluster_name }}/

bin_dir: /u01/app/{{ postgres_user }}/product/{{ dmk_postgresql_version }}/bin

# config_dir:

pgpass: /u01/app/{{ postgres_user }}/local/dmk/etc/pgpass0

authentication:

replication:

username: replicator

password: postgres

superuser:

username: postgres

password: postgres

parameters:

unix_socket_directories: '/tmp'

watchdog:

mode: automatic # Allowed values: off, automatic, required

device: /dev/watchdog

safety_margin: 5

tags:

nofailover: false

noloadbalance: false

clonefrom: false

nosync: false

Then we have the configuration template for HAProxy:

dwe@dwe:~/Documents/dbiProjects/dbi_dmk_postgres/dmk/ansible$ cat roles/patroni/templates/haproxy.cfg.j2

#jinja2: trim_blocks:False

global

maxconn 100

defaults

log global

mode tcp

retries 2

timeout client 30m

timeout connect 4s

timeout server 30m

timeout check 5s

listen stats

mode http

bind *:7000

stats enable

stats uri /

# stats auth haproxy:haproxy

# stats refresh 10s

listen {{ cluster_name }}

bind *:5000

option httpchk

http-check expect status 200

default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions

{%- for h in ansible_play_hosts %}

server postgresql_{{ hostvars[h]['ansible_enp0s8']['ipv4']['address'] }}_5432 {{ hostvars[h]['ansible_enp0s8']['ipv4']['address'] }}:5432 maxconn 100 check port 8008

{% endfor %}

Finally the template for keepalived:

dwe@dwe:~/Documents/dbiProjects/dbi_dmk_postgres/dmk/ansible$ cat roles/patroni/templates/keepalived.conf.j2

vrrp_script chk_haproxy {

script "killall -0 haproxy"

interval 2

weight 2

}

vrrp_instance VI_1 {

interface enp0s8

state {{ item.role }}

virtual_router_id 51

priority {{ item.priority }}

virtual_ipaddress {

{{ virtual_ip }}

}

track_script {

chk_haproxy

}

}

What is left are the systemd service files. The one for etcd:

dwe@dwe:~/Documents/dbiProjects/dbi_dmk_postgres/dmk/ansible$ cat roles/patroni/files/etcd.service # # systemd integration for etcd # Put this file under /etc/systemd/system/etcd.service # then: systemctl daemon-reload # then: systemctl list-unit-files | grep etcd # then: systemctl enable etcd.service # [Unit] Description=dbi services etcd service After=network.target [Service] User=postgres Type=notify ExecStart=/u01/app/postgres/local/dmk/bin/etcd --config-file /u01/app/postgres/local/dmk/etc/etcd.conf Restart=always RestartSec=10s LimitNOFILE=40000 [Install] WantedBy=multi-user.target

For Patroni:

dwe@dwe:~/Documents/dbiProjects/dbi_dmk_postgres/dmk/ansible$ cat roles/patroni/files/patroni.service # # systemd integration for patroni # Put this file under /etc/systemd/system/patroni.service # then: systemctl daemon-reload # then: systemctl list-unit-files | grep patroni # then: systemctl enable patroni.service # [Unit] Description=dbi services patroni service After=etcd.service syslog.target network.target [Service] User=postgres Group=postgres Type=simple ExecStartPre=-/usr/bin/sudo /sbin/modprobe softdog ExecStartPre=-/usr/bin/sudo /bin/chown postgres /dev/watchdog ExecStart=/u01/app/postgres/local/dmk/bin/patroni /u01/app/postgres/local/dmk/etc/patroni.yml ExecReload=/bin/kill -s HUP $MAINPID KillMode=process Restart=no TimeoutSec=30 [Install] WantedBy=multi-user.target

The last bit is the site definition which combines all of the above.

dwe@dwe:~/Documents/dbiProjects/dbi_dmk_postgres/dmk/ansible$ cat roles/patroni/site.yml

---

# This playbook deploys a three node patroni PostgreSQL cluster with HAProxy

- hosts: patroni-servers

become: true

become_user: root

roles:

- common

- patroni

Once all of that is in place the palybook can be executed:

dwe@dwe:~/Documents/dbiProjects/dbi_dmk_postgres/dmk/ansible$ ansible-playbook -i ../patroni patroni/site.yml -u postgres

This runs for a couple of minutes as especially upgrading all the operating system packages and comling PostgreSQL will take some time. Once it completed you only need to reboot the systems and your cluster is ready:

postgres@patroni1:/home/postgres/ [pg11.1] patronictl list +---------+----------+----------------+--------+---------+-----------+ | Cluster | Member | Host | Role | State | Lag in MB | +---------+----------+----------------+--------+---------+-----------+ | PG1 | patroni1 | 192.168.22.240 | Leader | running | 0.0 | | PG1 | patroni2 | 192.168.22.241 | | running | 0.0 | | PG1 | patroni3 | 192.168.22.242 | | running | 0.0 | +---------+----------+----------------+--------+---------+-----------+

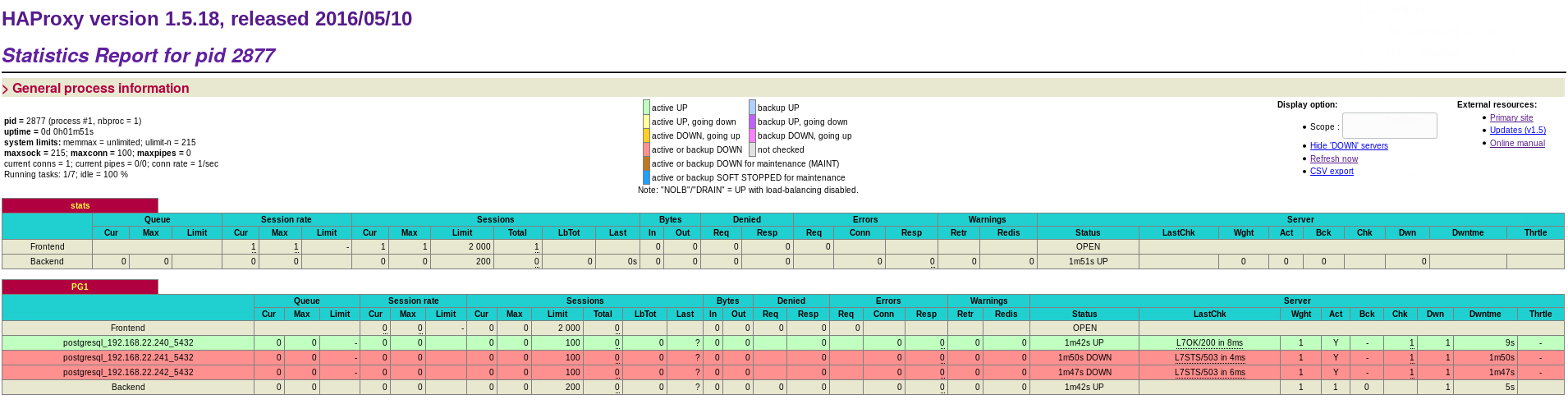

HAProy is running as well on all three nodes and you can check that by pointing your browser to any of the hosts on port 7000:

Hope that helps.

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/DWE_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/FRJ_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2024/04/SIT_web.png)