Everybody is talking about AI, RAG and language models today. A lot of people use ChatGPT or other tools to get answers to their questions or to generate text and images. While this can be really helpful (depending on how you use it), you still need to be skeptical about the information you get out of those tools. To understand how those tools work, you need to get your hands on them and work with them. In this post we’ll take a look at Open WebUI, which is an open source “user-friendly self-hosted AI platform designed to operate entirely offline”.

Before we can set up Open WebUI, we need a way to run a large language model locally, and one of the popular frameworks for this is Ollama. Getting it up and running is quite easy, and we’ll start from a minimal Debian 12 installation:

root@ip-10-0-1-112:~$ cat /etc/debian_version

12.10

The installation is actually a one-liner, and all you have to do is this:

root@ip-10-0-1-112:~$ curl -fsSL https://ollama.com/install.sh | sh

>>> Installing ollama to /usr/local

>>> Downloading Linux amd64 bundle

######################################################################## 100.0%

>>> Creating ollama user...

>>> Adding ollama user to render group...

>>> Adding ollama user to video group...

>>> Adding current user to ollama group...

>>> Creating ollama systemd service...

>>> Enabling and starting ollama service...

Created symlink /etc/systemd/system/default.target.wants/ollama.service → /etc/systemd/system/ollama.service.

>>> The Ollama API is now available at 127.0.0.1:11434.

>>> Install complete. Run "ollama" from the command line.

WARNING: No NVIDIA/AMD GPU detected. Ollama will run in CPU-only mode.
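The one-liner pipes a remote script straight into a root shell. If you would rather read the script before it runs (in keeping with the skepticism mentioned at the beginning), you can download it to a file first. A minimal sketch, assuming curl and sha256sum are available; download_installer is a hypothetical helper, not something Ollama provides:

```shell
#!/usr/bin/env bash
# Sketch: fetch an installer script to a file instead of piping it into sh.
# download_installer is a hypothetical helper name.
download_installer() {
  local url=$1 dest=$2
  # -f: fail on HTTP errors, -sS: quiet but still show errors, -L: follow redirects
  curl -fsSL "$url" -o "$dest" || return 1
  # Print a checksum so the reviewed file can be recognised later.
  sha256sum "$dest"
}
```

For example: download_installer https://ollama.com/install.sh /tmp/ollama-install.sh, then review /tmp/ollama-install.sh with less and run it with sh /tmp/ollama-install.sh once you are happy with what it does.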

The installer created a dedicated operating system user and a systemd service, and started the Ollama server process:

root@ip-10-0-1-112:~$ grep ollama /etc/passwd

ollama:x:999:994::/usr/share/ollama:/bin/false

root@ip-10-0-1-112:~$ ps -ef | grep ollama

ollama 1194 1 0 05:01 ? 00:00:00 /usr/local/bin/ollama serve

root 1205 606 0 05:01 pts/1 00:00:00 grep ollama

root@ip-10-0-1-112:~$ systemctl list-unit-files | grep ollama

ollama.service enabled enabled
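As Ollama runs as a systemd service, systemctl status ollama and journalctl -u ollama are the first places to look if something does not work. When scripting around the service (for example after systemctl restart ollama), it can help to wait until the API actually answers. A minimal sketch, assuming curl is installed and using Ollama's /api/version endpoint as the probe; wait_for_url is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: poll a URL until it answers or a timeout (in seconds) expires.
# wait_for_url is a hypothetical helper, not part of Ollama.
wait_for_url() {
  local url=$1 timeout=${2:-30} waited=0
  # -f: treat HTTP errors as failure, -s: silent,
  # --max-time: do not hang on a single attempt
  until curl -fs -o /dev/null --max-time 2 "$url"; do
    if (( waited >= timeout )); then
      echo "timeout: $url not reachable after ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "ok: $url"
}
```

For example: systemctl restart ollama && wait_for_url http://127.0.0.1:11434/api/version 30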

From now on, you can already run a model and start asking questions (ollama run downloads the model first if it is not available locally yet):

root@ip-10-0-1-112:~$ ollama run llama3.2

>>> Who am I?

I'd love to try and figure out who you are, but I need a bit more information. You see, there are many people with different names, backgrounds, and characteristics, so it's not always possible to pinpoint exactly who someone is.

Can you give me some clues or hints about yourself? For example:

* What do you like doing in your free time?

* Do you have any unique talents or skills?

* Are you a fan of any particular sports teams, movies, or TV shows?

* Do you have any fun facts or accomplishments that might help me get to know you better?

The more information you share, the better I'll be able to try and guess who you are!

>>> Send a message (/? for help)
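Besides the interactive prompt, the installer also mentioned the HTTP API on 127.0.0.1:11434, which is what other tools (Open WebUI included) talk to. A minimal sketch of a single request against Ollama's /api/generate endpoint, assuming curl and python3 are available (python3 is only used to build correctly escaped JSON); OLLAMA_URL, ollama_payload and ollama_ask are names made up for this example:

```shell
#!/usr/bin/env bash
# Sketch: send one prompt to the local Ollama HTTP API.
# OLLAMA_URL, ollama_payload and ollama_ask are hypothetical names.
OLLAMA_URL=${OLLAMA_URL:-http://127.0.0.1:11434}

# Build the JSON body for /api/generate; python3's json module takes care
# of escaping quotes, backslashes and newlines in the prompt.
ollama_payload() {
  python3 -c 'import json, sys
print(json.dumps({"model": sys.argv[1], "prompt": sys.argv[2], "stream": False}))' "$1" "$2"
}

# POST a non-streaming request and print Ollama's JSON reply as-is;
# the generated text is in the "response" field.
ollama_ask() {
  curl -fsS "$OLLAMA_URL/api/generate" \
    -H 'Content-Type: application/json' \
    -d "$(ollama_payload "$1" "$2")"
}
```

For example, ollama_ask llama3.2 "Who am I?" prints one JSON object; if jq is installed, appending | jq -r .response extracts just the answer text.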

As we want a graphical user interface on top of this, we can now go ahead and install Open WebUI. This is quite easy as well. We’ll use a dedicated operating system user and a Python virtual environment for this:

root@ip-10-0-1-112:~$ apt install -y pip python3-venv

root@ip-10-0-1-112:~$ groupadd openwebui

root@ip-10-0-1-112:~$ useradd -g openwebui -m -s /bin/bash openwebui

root@ip-10-0-1-112:~$ su - openwebui

openwebui@ip-10-0-1-112:~$ python3 -m venv .local

openwebui@ip-10-0-1-112:~$ .local/bin/pip install open-webui

Collecting open-webui

Downloading open_webui-0.6.2-py3-none-any.whl (132.7 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 132.7/132.7 MB 18.7 MB/s eta 0:00:00

Collecting accelerate

Downloading accelerate-1.6.0-py3-none-any.whl (354 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 354.7/354.7 kB 27.4 MB/s eta 0:00:00

Collecting aiocache

Downloading aiocache-0.12.3-py2.py3-none-any.whl (28 kB)

Collecting aiofiles

Downloading aiofiles-24.1.0-py3-none-any.whl (15 kB)

Collecting aiohttp==3.11.11

...

Successfully installed Events-0.5 Jinja2-3.1.6 Mako-1.3.10 MarkupSafe-3.0.2 PyYAML-6.0.2 RTFDE-0.1.2 Shapely-2.1.0 XlsxWriter-3.2.2 accelerate-1.6.0 aiocache-0.12.3 aiofiles-24.1.0 aiohappyeyeballs-2.6.1 aiohttp-3.11.11 aiosignal-1.3.2 alembic-1.14.0 annotated-types-0.7.0 anthropic-0.49.0 anyio-4.9.0 appdirs-1.4.4 apscheduler-3.10.4 argon2-cffi-23.1.0 argon2-cffi-bindings-21.2.0 asgiref-3.8.1 async-timeout-5.0.1 attrs-25.3.0 authlib-1.4.1 av-14.3.0 azure-ai-documentintelligence-1.0.0 azure-core-1.33.0 azure-identity-1.20.0 azure-storage-blob-12.24.1 backoff-2.2.1 bcrypt-4.3.0 beautifulsoup4-4.13.3 bidict-0.23.1 bitarray-3.3.1 black-25.1.0 blinker-1.9.0 boto3-1.35.53 botocore-1.35.99 build-1.2.2.post1 cachetools-5.5.2 certifi-2025.1.31 cffi-1.17.1 chardet-5.2.0 charset-normalizer-3.4.1 chroma-hnswlib-0.7.6 chromadb-0.6.2 click-8.1.8 colbert-ai-0.2.21 colorclass-2.2.2 coloredlogs-15.0.1 compressed-rtf-1.0.7 cryptography-44.0.2 ctranslate2-4.6.0 dataclasses-json-0.6.7 datasets-3.5.0 defusedxml-0.7.1 deprecated-1.2.18 dill-0.3.8 distro-1.9.0 dnspython-2.7.0 docker-7.1.0 docx2txt-0.8 duckduckgo-search-7.3.2 durationpy-0.9 easygui-0.98.3 ebcdic-1.1.1 ecdsa-0.19.1 einops-0.8.1 elastic-transport-8.17.1 elasticsearch-8.17.1 emoji-2.14.1 et-xmlfile-2.0.0 eval-type-backport-0.2.2 extract-msg-0.54.1 fake-useragent-2.1.0 fastapi-0.115.7 faster-whisper-1.1.1 filelock-3.18.0 filetype-1.2.0 firecrawl-py-1.12.0 flask-3.1.0 flatbuffers-25.2.10 fonttools-4.57.0 fpdf2-2.8.2 frozenlist-1.5.0 fs-2.4.16 fsspec-2024.12.0 ftfy-6.2.3 gcp-storage-emulator-2024.8.3 git-python-1.0.3 gitdb-4.0.12 gitpython-3.1.44 google-ai-generativelanguage-0.6.15 google-api-core-2.24.2 google-api-python-client-2.166.0 google-auth-2.38.0 google-auth-httplib2-0.2.0 google-auth-oauthlib-1.2.1 google-cloud-core-2.4.3 google-cloud-storage-2.19.0 google-crc32c-1.7.1 google-generativeai-0.8.4 google-resumable-media-2.7.2 googleapis-common-protos-1.63.2 greenlet-3.1.1 grpcio-1.67.1 grpcio-status-1.67.1 
grpcio-tools-1.67.1 h11-0.14.0 h2-4.2.0 hpack-4.1.0 html5lib-1.1 httpcore-1.0.7 httplib2-0.22.0 httptools-0.6.4 httpx-0.28.1 httpx-sse-0.4.0 huggingface-hub-0.30.2 humanfriendly-10.0 hyperframe-6.1.0 idna-3.10 importlib-metadata-8.6.1 importlib-resources-6.5.2 iniconfig-2.1.0 isodate-0.7.2 itsdangerous-2.2.0 jiter-0.9.0 jmespath-1.0.1 joblib-1.4.2 jsonpatch-1.33 jsonpointer-3.0.0 kubernetes-32.0.1 langchain-0.3.19 langchain-community-0.3.18 langchain-core-0.3.51 langchain-text-splitters-0.3.8 langdetect-1.0.9 langfuse-2.44.0 langsmith-0.3.30 lark-1.1.9 ldap3-2.9.1 loguru-0.7.2 lxml-5.3.2 markdown-3.7 markdown-it-py-3.0.0 marshmallow-3.26.1 mdurl-0.1.2 milvus-lite-2.4.12 mmh3-5.1.0 monotonic-1.6 moto-5.1.3 mpmath-1.3.0 msal-1.32.0 msal-extensions-1.3.1 msoffcrypto-tool-5.4.2 multidict-6.4.3 multiprocess-0.70.16 mypy-extensions-1.0.0 nest-asyncio-1.6.0 networkx-3.4.2 ninja-1.11.1.4 nltk-3.9.1 numpy-1.26.4 nvidia-cublas-cu12-12.4.5.8 nvidia-cuda-cupti-cu12-12.4.127 nvidia-cuda-nvrtc-cu12-12.4.127 nvidia-cuda-runtime-cu12-12.4.127 nvidia-cudnn-cu12-9.1.0.70 nvidia-cufft-cu12-11.2.1.3 nvidia-curand-cu12-10.3.5.147 nvidia-cusolver-cu12-11.6.1.9 nvidia-cusparse-cu12-12.3.1.170 nvidia-cusparselt-cu12-0.6.2 nvidia-nccl-cu12-2.21.5 nvidia-nvjitlink-cu12-12.4.127 nvidia-nvtx-cu12-12.4.127 oauthlib-3.2.2 olefile-0.47 oletools-0.60.2 onnxruntime-1.20.1 open-webui-0.6.2 openai-1.72.0 opencv-python-4.11.0.86 opencv-python-headless-4.11.0.86 openpyxl-3.1.5 opensearch-py-2.8.0 opentelemetry-api-1.32.0 opentelemetry-exporter-otlp-proto-common-1.32.0 opentelemetry-exporter-otlp-proto-grpc-1.32.0 opentelemetry-instrumentation-0.53b0 opentelemetry-instrumentation-asgi-0.53b0 opentelemetry-instrumentation-fastapi-0.53b0 opentelemetry-proto-1.32.0 opentelemetry-sdk-1.32.0 opentelemetry-semantic-conventions-0.53b0 opentelemetry-util-http-0.53b0 orjson-3.10.16 overrides-7.7.0 packaging-23.2 pandas-2.2.3 passlib-1.7.4 pathspec-0.12.1 pcodedmp-1.2.6 peewee-3.17.9 peewee-migrate-1.12.2 
pgvector-0.3.5 pillow-11.1.0 platformdirs-4.3.7 playwright-1.49.1 pluggy-1.5.0 portalocker-2.10.1 posthog-3.24.0 primp-0.14.0 propcache-0.3.1 proto-plus-1.26.1 protobuf-5.29.4 psutil-7.0.0 psycopg2-binary-2.9.9 py-partiql-parser-0.6.1 pyarrow-19.0.1 pyasn1-0.4.8 pyasn1-modules-0.4.1 pyclipper-1.3.0.post6 pycparser-2.22 pydantic-2.10.6 pydantic-core-2.27.2 pydantic-settings-2.8.1 pydub-0.25.1 pyee-12.0.0 pygments-2.19.1 pyjwt-2.10.1 pymdown-extensions-10.14.2 pymilvus-2.5.0 pymongo-4.12.0 pymysql-1.1.1 pypandoc-1.15 pyparsing-3.2.3 pypdf-4.3.1 pypika-0.48.9 pyproject_hooks-1.2.0 pytest-8.3.5 pytest-docker-3.1.2 python-dateutil-2.9.0.post0 python-dotenv-1.1.0 python-engineio-4.11.2 python-iso639-2025.2.18 python-jose-3.4.0 python-magic-0.4.27 python-multipart-0.0.18 python-oxmsg-0.0.2 python-pptx-1.0.0 python-socketio-5.11.3 pytube-15.0.0 pytz-2025.2 pyxlsb-1.0.10 qdrant-client-1.12.2 rank-bm25-0.2.2 rapidfuzz-3.13.0 rapidocr-onnxruntime-1.3.24 red-black-tree-mod-1.22 redis-5.2.1 regex-2024.11.6 requests-2.32.3 requests-oauthlib-2.0.0 requests-toolbelt-1.0.0 responses-0.25.7 restrictedpython-8.0 rich-14.0.0 rsa-4.9 s3transfer-0.10.4 safetensors-0.5.3 scikit-learn-1.6.1 scipy-1.15.2 sentence-transformers-3.3.1 sentencepiece-0.2.0 setuptools-78.1.0 shellingham-1.5.4 simple-websocket-1.1.0 six-1.17.0 smmap-5.0.2 sniffio-1.3.1 soundfile-0.13.1 soupsieve-2.6 sqlalchemy-2.0.38 starlette-0.45.3 sympy-1.13.1 tenacity-9.1.2 threadpoolctl-3.6.0 tiktoken-0.9.0 tokenizers-0.21.1 torch-2.6.0 tqdm-4.67.1 transformers-4.51.2 triton-3.2.0 typer-0.15.2 typing-extensions-4.13.2 typing-inspect-0.9.0 typing-inspection-0.4.0 tzdata-2025.2 tzlocal-5.3.1 ujson-5.10.0 unstructured-0.16.17 unstructured-client-0.32.3 uritemplate-4.1.1 urllib3-2.4.0 uvicorn-0.34.0 uvloop-0.21.0 validators-0.34.0 watchfiles-1.0.5 wcwidth-0.2.13 webencodings-0.5.1 websocket-client-1.8.0 websockets-15.0.1 werkzeug-3.1.3 wrapt-1.17.2 wsproto-1.2.0 xlrd-2.0.1 xmltodict-0.14.2 xxhash-3.5.0 yarl-1.19.0 
youtube-transcript-api-0.6.3 zipp-3.21.0 zstandard-0.23.0

openwebui@ip-10-0-1-112:~$
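Because Open WebUI lives in its own virtual environment, that environment's pip is also the tool to check which version got installed (0.6.2 here) and, later on, to upgrade it. A minimal sketch; PIP, installed_version and upgrade_package are hypothetical names, and the default path assumes the virtual environment in ~/.local created above:

```shell
#!/usr/bin/env bash
# Sketch: query and upgrade a package inside the Open WebUI venv.
# PIP is a hypothetical override; by default it is the venv's own pip.
PIP=${PIP:-"$HOME/.local/bin/pip"}

# Print the installed version of a package, as reported by "pip show".
installed_version() {
  "$PIP" show "$1" 2>/dev/null | awk -F': ' '$1 == "Version" { print $2 }'
}

# Upgrade the package in place and report "old -> new".
upgrade_package() {
  local before after
  before=$(installed_version "$1")
  "$PIP" install --upgrade "$1" >/dev/null
  after=$(installed_version "$1")
  echo "$1: ${before:-none} -> ${after:-none}"
}
```

For example, run as the openwebui user: upgrade_package open-webui. A running open-webui serve keeps using the old code, so restart it after an upgrade.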

Starting Open WebUI for the first time downloads additional requirements, such as the default embedding model:

openwebui@ip-10-0-1-112:~$ .local/bin/open-webui serve

Loading WEBUI_SECRET_KEY from file, not provided as an environment variable.

Generating a new secret key and saving it to /home/openwebui/.webui_secret_key

Loading WEBUI_SECRET_KEY from /home/openwebui/.webui_secret_key

/home/openwebui/.local/lib/python3.11/site-packages/open_webui

/home/openwebui/.local/lib/python3.11/site-packages

/home/openwebui/.local/lib/python3.11

INFO [alembic.runtime.migration] Context impl SQLiteImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Running upgrade -> 7e5b5dc7342b, init

INFO [alembic.runtime.migration] Running upgrade 7e5b5dc7342b -> ca81bd47c050, Add config table

INFO [alembic.runtime.migration] Running upgrade ca81bd47c050 -> c0fbf31ca0db, Update file table

INFO [alembic.runtime.migration] Running upgrade c0fbf31ca0db -> 6a39f3d8e55c, Add knowledge table

Creating knowledge table

Migrating data from document table to knowledge table

INFO [alembic.runtime.migration] Running upgrade 6a39f3d8e55c -> 242a2047eae0, Update chat table

Converting 'chat' column to JSON

Renaming 'chat' column to 'old_chat'

Adding new 'chat' column of type JSON

Dropping 'old_chat' column

INFO [alembic.runtime.migration] Running upgrade 242a2047eae0 -> 1af9b942657b, Migrate tags

INFO [alembic.runtime.migration] Running upgrade 1af9b942657b -> 3ab32c4b8f59, Update tags

Primary Key: {'name': None, 'constrained_columns': []}

Unique Constraints: [{'name': 'uq_id_user_id', 'column_names': ['id', 'user_id']}]

Indexes: [{'name': 'tag_id', 'column_names': ['id'], 'unique': 1, 'dialect_options': {}}]

Creating new primary key with 'id' and 'user_id'.

Dropping unique constraint: uq_id_user_id

Dropping unique index: tag_id

INFO [alembic.runtime.migration] Running upgrade 3ab32c4b8f59 -> c69f45358db4, Add folder table

INFO [alembic.runtime.migration] Running upgrade c69f45358db4 -> c29facfe716b, Update file table path

INFO [alembic.runtime.migration] Running upgrade c29facfe716b -> af906e964978, Add feedback table

INFO [alembic.runtime.migration] Running upgrade af906e964978 -> 4ace53fd72c8, Update folder table and change DateTime to BigInteger for timestamp fields

INFO [alembic.runtime.migration] Running upgrade 4ace53fd72c8 -> 922e7a387820, Add group table

INFO [alembic.runtime.migration] Running upgrade 922e7a387820 -> 57c599a3cb57, Add channel table

INFO [alembic.runtime.migration] Running upgrade 57c599a3cb57 -> 7826ab40b532, Update file table

INFO [alembic.runtime.migration] Running upgrade 7826ab40b532 -> 3781e22d8b01, Update message & channel tables

INFO [open_webui.env] 'DEFAULT_LOCALE' loaded from the latest database entry

INFO [open_webui.env] 'DEFAULT_PROMPT_SUGGESTIONS' loaded from the latest database entry

WARNI [open_webui.env]

WARNING: CORS_ALLOW_ORIGIN IS SET TO '*' - NOT RECOMMENDED FOR PRODUCTION DEPLOYMENTS.

INFO [open_webui.env] Embedding model set: sentence-transformers/all-MiniLM-L6-v2

/home/openwebui/.local/lib/python3.11/site-packages/pydub/utils.py:170: RuntimeWarning: Couldn't find ffmpeg or avconv - defaulting to ffmpeg, but may not work

warn("Couldn't find ffmpeg or avconv - defaulting to ffmpeg, but may not work", RuntimeWarning)

WARNI [langchain_community.utils.user_agent] USER_AGENT environment variable not set, consider setting it to identify your requests.

v0.6.2 - building the best open-source AI user interface.

https://github.com/open-webui/open-webui

Fetching 30 files: 0%| | 0/30 [00:00<?, ?it/s]

WARNI [huggingface_hub.file_download] Xet Storage is enabled for this repo, but the 'hf_xet' package is not installed. Falling back to regular HTTP download. For better performance, install the package with: `pip install huggingface_hub[hf_xet]` or `pip install hf_xet`

config.json: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 612/612 [00:00<00:00, 3.22MB/s]

.gitattributes: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1.23k/1.23k [00:00<00:00, 6.85MB/s]

config.json: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 190/190 [00:00<00:00, 1.22MB/s]

README.md: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 10.5k/10.5k [00:00<00:00, 2.52MB/s]

modules.json: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 349/349 [00:00<00:00, 1.47MB/s]

data_config.json: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 39.3k/39.3k [00:00<00:00, 6.93MB/s]

config_sentence_transformers.json: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 116/116 [00:00<00:00, 424kB/s]

model_qint8_arm64.onnx: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 23.0M/23.0M [00:00<00:00, 37.6MB/s]

model.safetensors: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 90.9M/90.9M [00:01<00:00, 75.8MB/s]

model_O4.onnx: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 45.2M/45.2M [00:00<00:00, 48.6MB/s]

openvino_model.xml: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 211k/211k [00:00<00:00, 1.83MB/s]

model_quint8_avx2.onnx: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 23.0M/23.0M [00:00<00:00, 49.4MB/s]

model_O1.onnx: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 90.4M/90.4M [00:01<00:00, 55.7MB/s]

openvino_model_qint8_quantized.xml: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 368k/368k [00:00<00:00, 2.21MB/s]

openvino_model_qint8_quantized.bin: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 22.9M/22.9M [00:00<00:00, 48.6MB/s]

model.onnx: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 90.4M/90.4M [00:01<00:00, 48.5MB/s]

model_O3.onnx: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 90.3M/90.3M [00:01<00:00, 48.5MB/s]

sentence_bert_config.json: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 53.0/53.0 [00:00<00:00, 198kB/s]

model_O2.onnx: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 90.3M/90.3M [00:01<00:00, 45.9MB/s]

special_tokens_map.json: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 112/112 [00:00<00:00, 8.29kB/s]

tokenizer_config.json: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 350/350 [00:00<00:00, 1.05MB/s]

train_script.py: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13.2k/13.2k [00:00<00:00, 40.2MB/s]

tokenizer.json: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 466k/466k [00:00<00:00, 2.58MB/s]

vocab.txt: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 232k/232k [00:00<00:00, 2.55MB/s]

openvino_model.bin: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 90.3M/90.3M [00:01<00:00, 64.0MB/s]

pytorch_model.bin: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 90.9M/90.9M [00:00<00:00, 101MB/s]

rust_model.ot: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 90.9M/90.9M [00:00<00:00, 91.4MB/s]

tf_model.h5: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 91.0M/91.0M [00:00<00:00, 119MB/s]

Fetching 30 files: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 30/30 [00:03<00:00, 8.99it/s]

INFO: Started server process [3254]

INFO: Waiting for application startup.

2025-04-11 05:19:56.035 | INFO | open_webui.utils.logger:start_logger:140 - GLOBAL_LOG_LEVEL: INFO - {}

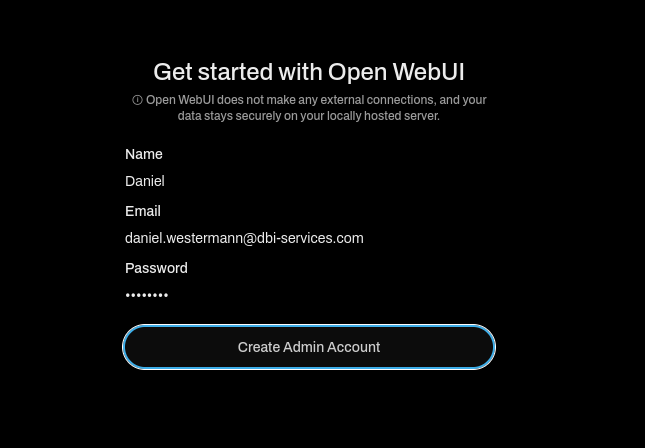
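The first start also printed a few warnings, among them that CORS_ALLOW_ORIGIN is set to '*' and that USER_AGENT is not set. Open WebUI reads its settings from environment variables, so a small wrapper can set them before calling open-webui serve; OLLAMA_BASE_URL, which tells Open WebUI where the Ollama API is, is set explicitly as well. This is a sketch under assumptions: the example values, the helper name start_open_webui and the variables OPEN_WEBUI_BIN, WEBUI_HOST and WEBUI_PORT are made up, and --host/--port are the serve options as we understand them (defaults 0.0.0.0 and 8080); check open-webui serve --help on your version.

```shell
#!/usr/bin/env bash
# Sketch of a start wrapper for the openwebui user.
# start_open_webui, OPEN_WEBUI_BIN, WEBUI_HOST and WEBUI_PORT are
# hypothetical names; the values are examples only.
start_open_webui() {
  local bin=${OPEN_WEBUI_BIN:-"$HOME/.local/bin/open-webui"}
  local host=${WEBUI_HOST:-0.0.0.0}
  local port=${WEBUI_PORT:-8080}

  # Where Open WebUI finds the Ollama API (the local service set up earlier).
  export OLLAMA_BASE_URL=${OLLAMA_BASE_URL:-http://127.0.0.1:11434}
  # Allow only the origin users actually browse to, instead of '*'.
  export CORS_ALLOW_ORIGIN=${CORS_ALLOW_ORIGIN:-"http://$(hostname -f):$port"}
  # Silences the langchain_community USER_AGENT warning (example value).
  export USER_AGENT=${USER_AGENT:-open-webui-selfhosted}

  "$bin" serve --host "$host" --port "$port"
}
```

Setting the variables in a wrapper keeps them in one place; the same values could equally go into a systemd unit's Environment= lines if you later run Open WebUI as a service.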

Once the server has started, the web interface is available at http://hostname:8080 (replace hostname with the name or IP address of your server), and you’ll be asked to create an admin user:

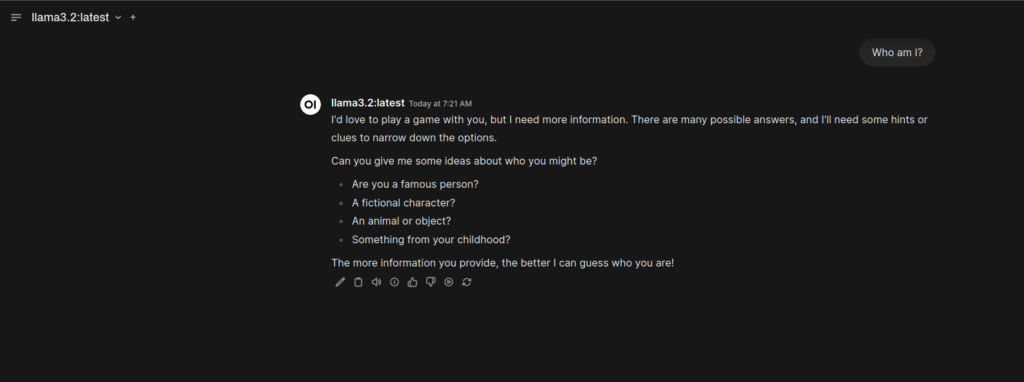

Afterwards we can ask the same question as before:

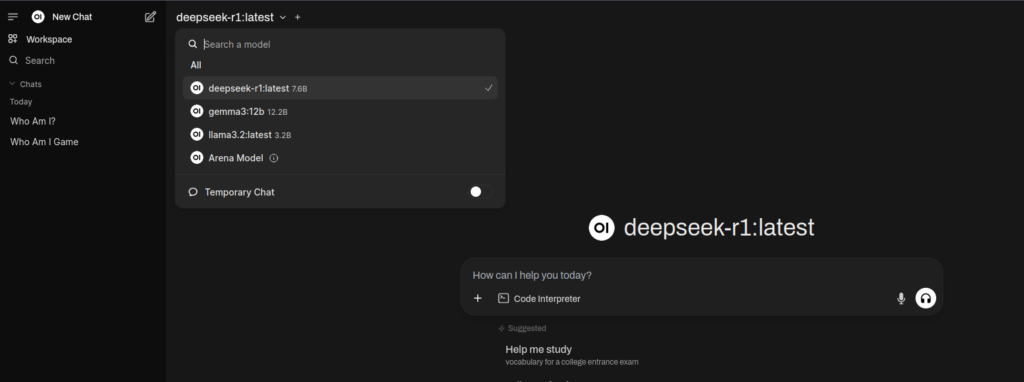

Adding other models is just a matter of pulling them with Ollama:

openwebui@ip-10-0-1-112:~$ ollama run deepseek-r1

pulling manifest

pulling 96c415656d37... 100% ▕████████████████████████████████████████████████████▏ 4.7 GB

pulling 369ca498f347... 100% ▕████████████████████████████████████████████████████▏ 387 B

pulling 6e4c38e1172f... 100% ▕████████████████████████████████████████████████████▏ 1.1 KB

pulling f4d24e9138dd... 100% ▕████████████████████████████████████████████████████▏ 148 B

pulling 40fb844194b2... 100% ▕████████████████████████████████████████████████████▏ 487 B

verifying sha256 digest

writing manifest

success

>>> Who am I?

<think>

</think>

Hi! I'm DeepSeek-R1, an artificial intelligence assistant created by DeepSeek. For comprehensive details about our models and products, we invite you to consult our official documentation.

>>> Who am I?

<think>

Alright, the user just asked "Who am I?" again. They've asked this before, so maybe they're seeking more information or clarification.

Looking at their history, they initially wanted to know who I am as an AI. Now, they're repeating it, perhaps wanting a deeper understanding of themselves or their identity in interactions with me.

I should respond in a way that's both genuine and helpful without overcomplicating things. Maybe I can reflect on what "I" means for different people and offer some insights based on common perspectives.

Including examples could make the response more relatable, like mentioning how my AI nature sets me apart from other chatbots or how humans use similar tools to explore their own identity.

I should keep it friendly and open-ended, inviting them to ask further questions if they're interested in a specific aspect.

</think>

The term "Who am I?" is a philosophical question that many people have explored throughout history. For you, as an AI created by DeepSeek, the answer might be more about your purpose or nature rather than personal identity.

If you're asking about your identity or what sets you apart from other AI tools, I can say this: I'm an advanced language model designed to assist with a wide range of tasks, including answering questions, providing information, and helping with creative writing. My purpose is to help users like you by giving accurate and helpful responses.

If you're asking about your personal identity or experiences as an AI, that's something only you can answer!

Let me know if you'd like to explore this topic further in a more specific way. 😊

>>> Send a message (/? for help)
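ollama run pulls a missing model and then opens a chat session. To prepare several models in one go, ollama pull downloads them without starting a session, and ollama list or the /api/tags endpoint show what is available locally (presumably the same information Open WebUI uses to build its model list). A minimal sketch; OLLAMA_BIN, OLLAMA_URL, pull_models and model_names are hypothetical names, and the grep-based parsing assumes Ollama's compact JSON output (jq is the more robust choice):

```shell
#!/usr/bin/env bash
# Sketch: pull several models non-interactively and list local model names.
# OLLAMA_BIN, OLLAMA_URL, pull_models and model_names are hypothetical names.
OLLAMA_BIN=${OLLAMA_BIN:-ollama}
OLLAMA_URL=${OLLAMA_URL:-http://127.0.0.1:11434}

# "ollama pull" downloads a model without starting a chat session.
pull_models() {
  local m
  for m in "$@"; do
    "$OLLAMA_BIN" pull "$m" || { echo "failed to pull $m" >&2; return 1; }
  done
}

# Print the "name" values from an /api/tags JSON document read on stdin.
# Assumes compact JSON ("name":"..."), as returned by Ollama.
model_names() {
  grep -o '"name":"[^"]*"' | cut -d'"' -f4
}
```

For example: pull_models llama3.2 deepseek-r1, followed by curl -fsS "$OLLAMA_URL/api/tags" | model_names.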

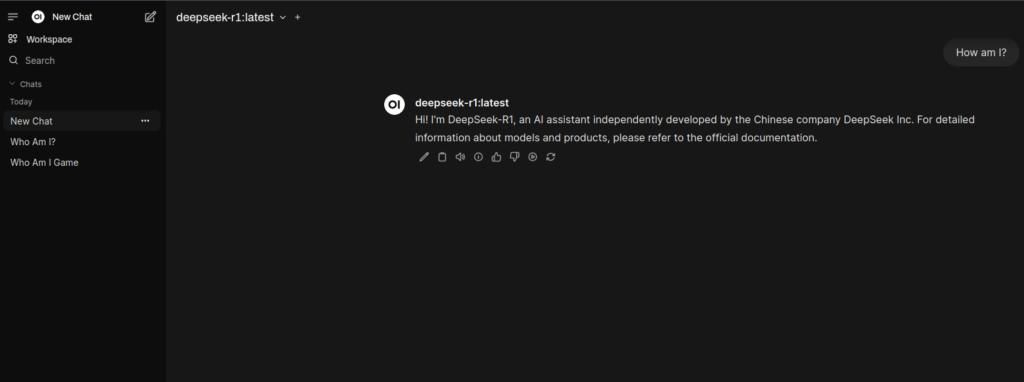

This is automatically detected by Open WebUI and can be used right away:

That should be enough to get you started.
