Most of you have probably heard of Red Hat Satellite, a product used to manage Linux infrastructures. Maybe you have also heard of SUSE Manager, which does more or less the same thing. Both products traditionally used Spacewalk as the underlying systems management solution, but Spacewalk was discontinued on May 31, 2020. While Red Hat went with Foreman as the replacement for Spacewalk, the openSUSE community decided to fork Spacewalk and create a new project called Uyuni, which is based on Salt (SaltStack). The home of Uyuni is https://www.uyuni-project.org/, and what looks really promising is its support for managing various Linux distributions.

For the demo setup used in this post, we’ll use the openSUSE Leap 15.4 AWS AMI. Once the instance is up and running, make sure the system is patched to the latest level (and make sure that it meets the system requirements):

```
ec2-user@uyuni-server:~> sudo zypper update -y
ec2-user@uyuni-server:~> sudo reboot
ec2-user@uyuni-server:~> uname -a
Linux uyuni-server 5.14.21-150400.24.38-default #1 SMP PREEMPT_DYNAMIC Fri Dec 9 09:29:22 UTC 2022 (e9c5676) x86_64 x86_64 x86_64 GNU/Linux
ec2-user@uyuni-server:~> cat /etc/os-release
NAME="openSUSE Leap"
VERSION="15.4"
ID="opensuse-leap"
ID_LIKE="suse opensuse"
VERSION_ID="15.4"
PRETTY_NAME="openSUSE Leap 15.4"
ANSI_COLOR="0;32"
CPE_NAME="cpe:/o:opensuse:leap:15.4"
BUG_REPORT_URL="https://bugs.opensuse.org"
HOME_URL="https://www.opensuse.org/"
DOCUMENTATION_URL="https://en.opensuse.org/Portal:Leap"
LOGO="distributor-logo-Leap"
```
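If you script the preparation, the release check above can be automated. The following is a minimal sketch; the function name `check_os_release` is hypothetical. It relies on /etc/os-release being a shell-compatible KEY=value file and sources it in a subshell, so that `ID` and `VERSION_ID` do not leak into the calling shell:

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the given os-release file
# describes the expected distribution ID and VERSION_ID.
# Usage: check_os_release FILE EXPECTED_ID EXPECTED_VERSION_ID
check_os_release() {
    [ -r "$1" ] || return 2
    (
        want_id=$2
        want_version=$3
        # Clear inherited values so a file lacking these keys cannot pass.
        unset ID VERSION_ID
        # Sourcing happens inside the subshell, nothing leaks out.
        . "$1"
        [ "${ID:-}" = "$want_id" ] && [ "${VERSION_ID:-}" = "$want_version" ]
    )
}
```

On the demo system, `check_os_release /etc/os-release opensuse-leap 15.4` should succeed.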

It is essential that “hostname -f” returns the fully qualified domain name of the system. In our case, this was achieved with an entry in “/etc/hosts”:

```
ec2-user@uyuni-server:~> cat /etc/hosts | grep uyuni
10.0.1.235 uyuni-server.it.dbi-services.com uyuni-server
ec2-user@uyuni-server:~> hostname -f
uyuni-server.it.dbi-services.com
```
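Because the setup fails in non-obvious ways when the hostname is not fully qualified, a quick pre-flight check can help. This is a rough sketch with a hypothetical `is_fqdn` function: it only checks that the name has at least two non-empty, dot-separated labels made of letters, digits and hyphens, not full RFC compliance:

```shell
#!/bin/sh
# Hypothetical helper: succeed if the argument looks like a fully
# qualified domain name (at least two non-empty labels separated by dots).
is_fqdn() {
    name=${1%.}                      # tolerate a single trailing root dot
    case $name in
        ''|.*|*.|*..*) return 1 ;;   # empty name, leading/trailing dot or empty label
        *.*) ;;                      # at least one dot: keep checking
        *) return 1 ;;               # short name such as "uyuni-server"
    esac
    case $name in
        *[!A-Za-z0-9.-]*) return 1 ;;  # character outside the hostname alphabet
    esac
    return 0
}
```

Usage: `is_fqdn "$(hostname -f)" || echo "hostname -f does not return an FQDN" >&2`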

The installation itself is quite simple, as everything is installed using zypper:

```
ec2-user@uyuni-server:~> sudo bash
uyuni-server:/home/ec2-user $ repo=repositories/systemsmanagement:/
uyuni-server:/home/ec2-user $ repo=${repo}Uyuni:/Stable/images/repo/Uyuni-Server-POOL-x86_64-Media1/
uyuni-server:/home/ec2-user $ zypper ar https://download.opensuse.org/$repo uyuni-server-stable
Adding repository 'uyuni-server-stable' ..............................[done]
Repository 'uyuni-server-stable' successfully added

URI : https://download.opensuse.org/repositories/systemsmanagement:/Uyuni:/Stable/images/repo/Uyuni-Server-POOL-x86_64-Media1/
Enabled : Yes
GPG Check : Yes
Autorefresh : No
Priority : 99 (default priority)

Repository priorities are without effect. All enabled repositories share the same priority.
uyuni-server:/home/ec2-user $ zypper ref
Repository 'Update repository of openSUSE Backports' is up to date.
Repository 'Debug Repository' is up to date.
Repository 'Update Repository (Debug)' is up to date.
Repository 'Non-OSS Repository' is up to date.
Repository 'Main Repository' is up to date.
Retrieving repository 'Update repository with updates from SUSE Linux Enterprise 15' metadata ..............................[done]
Building repository 'Update repository with updates from SUSE Linux Enterprise 15' cache ..............................[done]
Repository 'Source Repository' is up to date.
Repository 'Main Update Repository' is up to date.
Repository 'Update Repository (Non-Oss)' is up to date.

New repository or package signing key received:

Repository: uyuni-server-stable
Key Fingerprint: 62F0 28DE 22F8 BF49 B88B C9E5 972E 5D6C 0D20 833E
Key Name: systemsmanagement:Uyuni OBS Project <systemsmanagement:Uyuni@build.opensuse.org>
Key Algorithm: RSA 2048
Key Created: Fri Oct 7 14:00:36 2022
Key Expires: Sun Dec 15 14:00:36 2024
Rpm Name: gpg-pubkey-0d20833e-63403104

Note: Signing data enables the recipient to verify that no modifications occurred after the data
were signed. Accepting data with no, wrong or unknown signature can lead to a corrupted system
and in extreme cases even to a system compromise.

Note: A GPG pubkey is clearly identified by its fingerprint. Do not rely on the key's name. If
you are not sure whether the presented key is authentic, ask the repository provider or check
their web site. Many providers maintain a web page showing the fingerprints of the GPG keys they
are using.

Do you want to reject the key, trust temporarily, or trust always? [r/t/a/?] (r): t
Retrieving repository 'uyuni-server-stable' metadata ..............................[done]
Building repository 'uyuni-server-stable' cache ..............................[done]
All repositories have been refreshed.
uyuni-server:/home/ec2-user $ zypper in patterns-uyuni_server
...
Overall download size: 598.2 MiB. Already cached: 0 B. After the operation, additional 1.4 GiB will be used.
Continue? [y/n/v/...? shows all options] (y): y
Do you agree with the terms of the license? [yes/no] (no): yes
Retrieving package bzip2-1.0.8-150400.1.122.x86_64 (1/493), 44.6 KiB ( 73.1 KiB unpacked)
Retrieving: bzip2-1.0.8-150400.1.122.x86_64.rpm ..............................[done (2.6 KiB/s)]
Retrieving package grub2-i386-efi-2.06-150400.9.9.noarch (2/493), 997.1 KiB ( 3.7 MiB unpacked)
Retrieving: grub2-i386-efi-2.06-150400.9.9.noarch.rpm ......
...
uyuni-server:/home/ec2-user $ reboot
```
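For repeatable setups (for example from EC2 user data), the same steps could be run without prompts. This is a sketch under assumptions, not the procedure used in this post: the helper names `run` and `install_uyuni_server` and the `DRY_RUN` switch are hypothetical, while `--non-interactive`, `--gpg-auto-import-keys` and `--auto-agree-with-licenses` are standard zypper options. Be aware that `--gpg-auto-import-keys` imports the repository key permanently, whereas the interactive session above only trusted it temporarily, so compare the fingerprint (62F0 28DE 22F8 BF49 B88B C9E5 972E 5D6C 0D20 833E above) with the one published by the project first.

```shell
#!/bin/sh
# Sketch of the installation steps above without interactive prompts.
# Run as root. Set DRY_RUN=1 to print the commands instead of running them.
# The final reboot is intentionally left out.

UYUNI_REPO_ALIAS=uyuni-server-stable
UYUNI_REPO_URL="https://download.opensuse.org/repositories/systemsmanagement:/Uyuni:/Stable/images/repo/Uyuni-Server-POOL-x86_64-Media1/"

# Execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

install_uyuni_server() {
    # Same as "zypper ar" above.
    run zypper --non-interactive addrepo "$UYUNI_REPO_URL" "$UYUNI_REPO_ALIAS" &&
    # Accepts the new signing key permanently (see the note above).
    run zypper --non-interactive --gpg-auto-import-keys refresh &&
    # Answers the license question with "yes".
    run zypper --non-interactive install --auto-agree-with-licenses patterns-uyuni_server
}
```

Running `DRY_RUN=1 install_uyuni_server` first shows exactly which commands would be executed.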

That’s it for the installation. Once the system is up again, Uyuni needs to be configured, which we’ll do with YaST. Make sure firewalld is enabled and started first, otherwise the setup will fail:

```
ec2-user@uyuni-server:~> sudo systemctl status firewalld
○ firewalld.service - firewalld - dynamic firewall daemon
Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: disabled)
Active: inactive (dead)
Docs: man:firewalld(1)
ec2-user@uyuni-server:~> sudo systemctl enable firewalld
Created symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service → /usr/lib/systemd/system/firewalld.service.
Created symlink /etc/systemd/system/multi-user.target.wants/firewalld.service → /usr/lib/systemd/system/firewalld.service.
ec2-user@uyuni-server:~> sudo systemctl start firewalld
ec2-user@uyuni-server:~> sudo systemctl status firewalld
● firewalld.service - firewalld - dynamic firewall daemon
Loaded: loaded (/usr/lib/systemd/system/firewalld.service; enabled; vendor preset: disabled)
Active: active (running) since Wed 2022-12-28 10:19:47 UTC; 4s ago
Docs: man:firewalld(1)
Main PID: 2322 (firewalld)
Tasks: 2 (limit: 4915)
CGroup: /system.slice/firewalld.service
└─ 2322 /usr/bin/python3 /usr/sbin/firewalld --nofork --nopid

Dec 28 10:19:47 uyuni-server systemd[1]: Starting firewalld - dynamic firewall daemon...
Dec 28 10:19:47 uyuni-server systemd[1]: Started firewalld - dynamic firewall daemon.
```
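The enable and start steps can also be collapsed into one idempotent call, which is handy if the preparation is scripted. A minimal sketch, with `ensure_service_running` as a hypothetical helper name: `systemctl enable --now` enables the unit and starts it in one go, and `systemctl is-active --quiet` only reports the result through its exit status.

```shell
#!/bin/sh
# Minimal sketch: make sure a systemd unit is enabled at boot and running.
# Safe to call repeatedly: enabling an enabled unit and starting a running
# unit are both no-ops. Must be run as root (or via sudo).
ensure_service_running() {
    systemctl enable --now "$1" || return 1
    # Verify the unit really came up.
    systemctl is-active --quiet "$1"
}
```

Usage before starting YaST: `ensure_service_running firewalld`. Alternatively, `firewall-cmd --state` prints `running` once firewalld is up.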

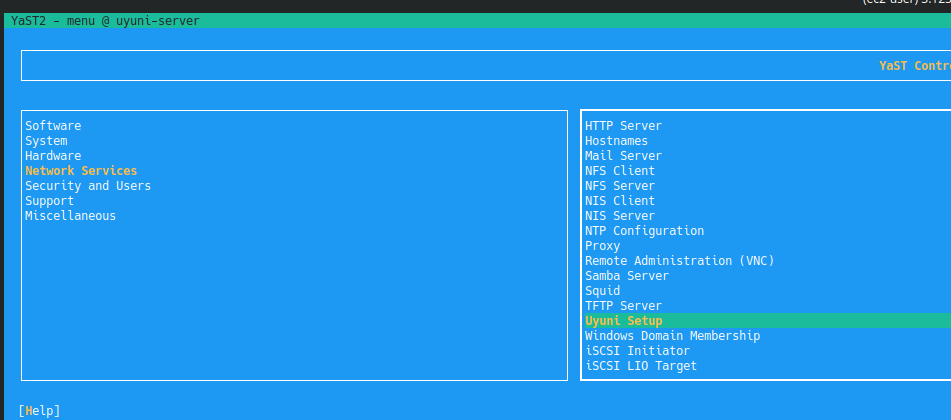

ec2-user@uyuni-server:~> sudo yast

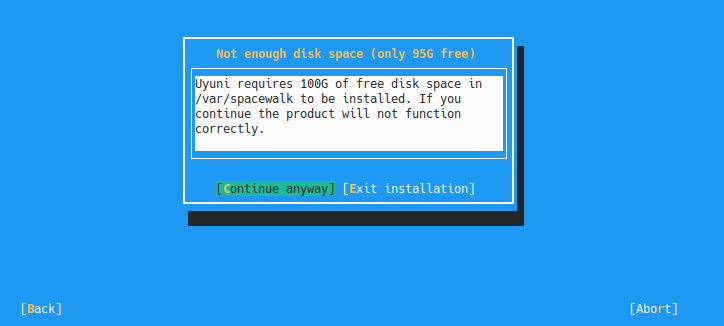

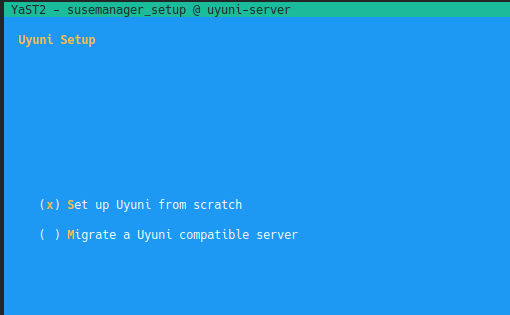

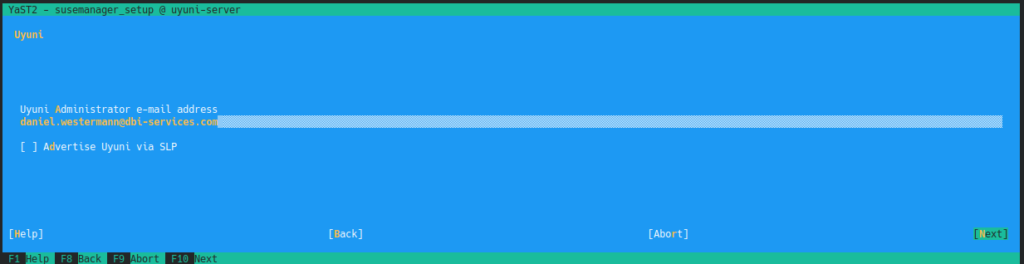

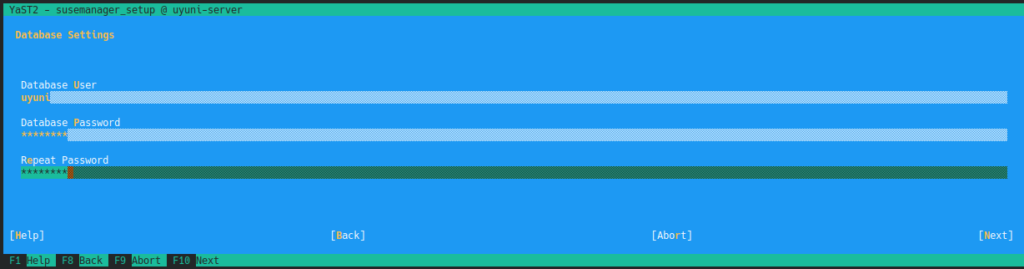

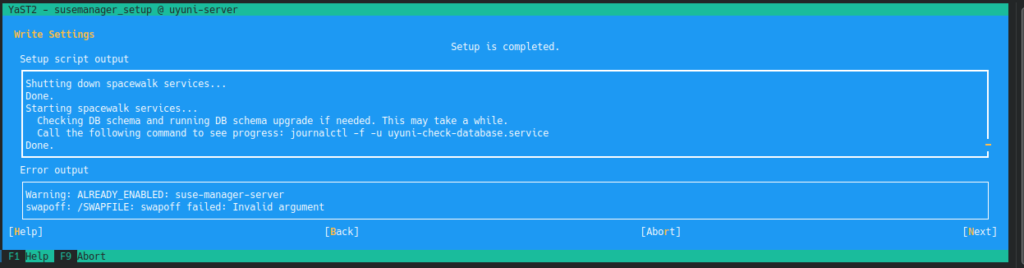

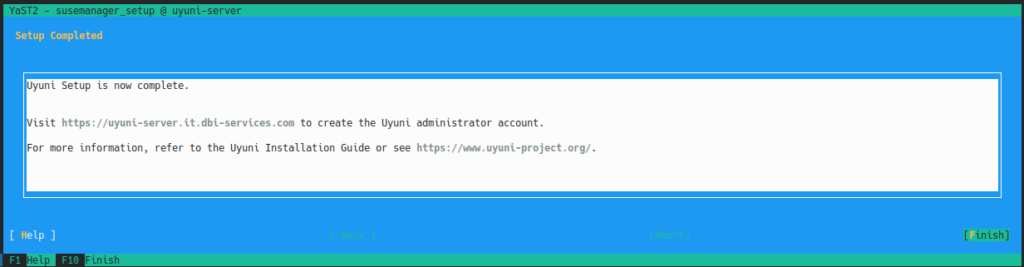

This will bring up the YaST user interface and Uyuni can be configured as follows (we’ll ignore the warnings about disk and swap space as this is just for a demo):

If everything went fine, several services are now running, including a PostgreSQL instance which holds the configuration:

```
ec2-user@uyuni-server:~> ps -ef | egrep "postgres|salt|httpd"
postgres 7352 1 0 10:23 ? 00:00:00 /usr/lib/postgresql14/bin/postgres -D /var/lib/pgsql/data
postgres 7353 7352 0 10:23 ? 00:00:00 postgres: logger
postgres 7355 7352 0 10:23 ? 00:00:00 postgres: checkpointer
postgres 7356 7352 0 10:23 ? 00:00:00 postgres: background writer
postgres 7357 7352 0 10:23 ? 00:00:00 postgres: walwriter
postgres 7358 7352 0 10:23 ? 00:00:00 postgres: autovacuum launcher
postgres 7359 7352 0 10:23 ? 00:00:00 postgres: archiver
postgres 7360 7352 0 10:23 ? 00:00:00 postgres: stats collector
postgres 7361 7352 0 10:23 ? 00:00:00 postgres: logical replication launcher
salt 7474 1 0 10:23 ? 00:00:01 /usr/bin/python3 /usr/bin/salt-api
salt 7479 1 0 10:23 ? 00:00:01 /usr/bin/python3 /usr/bin/salt-master
salt 7541 7479 0 10:23 ? 00:00:00 /usr/bin/python3 /usr/bin/salt-master
salt 7554 7479 0 10:23 ? 00:00:00 /usr/bin/python3 /usr/bin/salt-master
salt 7555 7479 0 10:23 ? 00:00:00 /usr/bin/python3 /usr/bin/salt-master
salt 7558 7474 0 10:23 ? 00:00:02 /usr/bin/python3 /usr/bin/salt-api
salt 7559 7479 0 10:23 ? 00:00:00 /usr/bin/python3 /usr/bin/salt-master
salt 7560 7479 0 10:23 ? 00:00:01 /usr/bin/python3 /usr/bin/salt-master
salt 7561 7479 0 10:23 ? 00:00:04 /usr/bin/python3 /usr/bin/salt-master
salt 7562 7479 0 10:23 ? 00:00:00 /usr/bin/python3 /usr/bin/salt-master
salt 7563 7562 0 10:23 ? 00:00:00 /usr/bin/python3 /usr/bin/salt-master
postgres 7573 7352 0 10:23 ? 00:00:00 postgres: uyuni uyuni ::1(48654) idle
salt 7574 7562 0 10:23 ? 00:00:03 /usr/bin/python3 /usr/bin/salt-master
salt 7575 7562 0 10:23 ? 00:00:03 /usr/bin/python3 /usr/bin/salt-master
salt 7576 7562 0 10:23 ? 00:00:04 /usr/bin/python3 /usr/bin/salt-master
salt 7577 7562 0 10:23 ? 00:00:04 /usr/bin/python3 /usr/bin/salt-master
salt 7578 7562 0 10:23 ? 00:00:03 /usr/bin/python3 /usr/bin/salt-master
salt 7579 7562 0 10:23 ? 00:00:04 /usr/bin/python3 /usr/bin/salt-master
salt 7582 7562 0 10:23 ? 00:00:03 /usr/bin/python3 /usr/bin/salt-master
salt 7583 7562 0 10:23 ? 00:00:03 /usr/bin/python3 /usr/bin/salt-master
postgres 9088 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(40206) idle in transaction
postgres 9090 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(40238) idle in transaction
postgres 9091 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(40240) idle
root 9167 1 0 10:24 ? 00:00:00 /usr/sbin/httpd-prefork -DSYSCONFIG -DSSL -DISSUSE -C PidFile /run/httpd.pid -C Include /etc/apache2/sysconfig.d//loadmodule.conf -C Include /etc/apache2/sysconfig.d//global.conf -f /etc/apache2/httpd.conf -c Include /etc/apache2/sysconfig.d//include.conf -DSYSTEMD -DFOREGROUND -k start
wwwrun 9180 9167 0 10:24 ? 00:00:00 /usr/sbin/httpd-prefork -DSYSCONFIG -DSSL -DISSUSE -C PidFile /run/httpd.pid -C Include /etc/apache2/sysconfig.d//loadmodule.conf -C Include /etc/apache2/sysconfig.d//global.conf -f /etc/apache2/httpd.conf -c Include /etc/apache2/sysconfig.d//include.conf -DSYSTEMD -DFOREGROUND -k start
wwwrun 9182 9167 0 10:24 ? 00:00:00 /usr/sbin/httpd-prefork -DSYSCONFIG -DSSL -DISSUSE -C PidFile /run/httpd.pid -C Include /etc/apache2/sysconfig.d//loadmodule.conf -C Include /etc/apache2/sysconfig.d//global.conf -f /etc/apache2/httpd.conf -c Include /etc/apache2/sysconfig.d//include.conf -DSYSTEMD -DFOREGROUND -k start
wwwrun 9183 9167 0 10:24 ? 00:00:00 /usr/sbin/httpd-prefork -DSYSCONFIG -DSSL -DISSUSE -C PidFile /run/httpd.pid -C Include /etc/apache2/sysconfig.d//loadmodule.conf -C Include /etc/apache2/sysconfig.d//global.conf -f /etc/apache2/httpd.conf -c Include /etc/apache2/sysconfig.d//include.conf -DSYSTEMD -DFOREGROUND -k start
wwwrun 9184 9167 0 10:24 ? 00:00:00 /usr/sbin/httpd-prefork -DSYSCONFIG -DSSL -DISSUSE -C PidFile /run/httpd.pid -C Include /etc/apache2/sysconfig.d//loadmodule.conf -C Include /etc/apache2/sysconfig.d//global.conf -f /etc/apache2/httpd.conf -c Include /etc/apache2/sysconfig.d//include.conf -DSYSTEMD -DFOREGROUND -k start
wwwrun 9185 9167 0 10:24 ? 00:00:00 /usr/sbin/httpd-prefork -DSYSCONFIG -DSSL -DISSUSE -C PidFile /run/httpd.pid -C Include /etc/apache2/sysconfig.d//loadmodule.conf -C Include /etc/apache2/sysconfig.d//global.conf -f /etc/apache2/httpd.conf -c Include /etc/apache2/sysconfig.d//include.conf -DSYSTEMD -DFOREGROUND -k start
root 9192 9173 1 10:24 ? 00:00:07 /usr/bin/java -Djava.library.path=/usr/lib:/usr/lib64:/usr/lib/gcj/postgresql-jdbc:/usr/lib64/gcj/postgresql-jdbc -classpath /usr/share/rhn/search/lib/*:/usr/share/rhn/classes:/usr/share/rhn/lib/spacewalk-asm.jar:/usr/share/rhn/lib/rhn.jar:/usr/share/rhn/lib/java-branding.jar -Dfile.encoding=UTF-8 -Xms32m -Xmx512m -Dlog4j2.configurationFile=/usr/share/rhn/search/classes/log4j2.xml com.redhat.satellite.search.Main
postgres 9233 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49370) idle
wwwrun 9234 9167 0 10:24 ? 00:00:00 /usr/sbin/httpd-prefork -DSYSCONFIG -DSSL -DISSUSE -C PidFile /run/httpd.pid -C Include /etc/apache2/sysconfig.d//loadmodule.conf -C Include /etc/apache2/sysconfig.d//global.conf -f /etc/apache2/httpd.conf -c Include /etc/apache2/sysconfig.d//include.conf -DSYSTEMD -DFOREGROUND -k start
postgres 9380 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49372) idle
postgres 9381 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49384) idle
postgres 9382 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49398) idle
postgres 9386 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49412) idle
postgres 9387 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49426) idle
postgres 9388 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49438) idle
postgres 9390 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49440) idle
postgres 9391 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49442) idle
postgres 9392 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49454) idle
postgres 9393 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49460) idle
postgres 9394 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49464) idle
postgres 9395 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49468) idle
postgres 9396 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49470) idle
postgres 9397 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49478) idle
postgres 9398 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49494) idle
postgres 9473 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49504) idle
postgres 9474 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49514) idle
postgres 9475 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(49530) idle
postgres 9477 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(47244) idle
postgres 9478 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(47242) idle
postgres 9534 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(47262) idle
postgres 9535 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(47264) idle
postgres 9536 7352 0 10:24 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(47270) idle
salt 10844 7479 0 10:28 ? 00:00:00 /usr/bin/python3 /usr/bin/salt-master
postgres 11013 7352 0 10:30 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(39514) idle
postgres 11014 7352 0 10:30 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(39528) idle
postgres 11015 7352 0 10:30 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(39530) idle
postgres 11020 7352 0 10:30 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(39544) idle
postgres 11021 7352 0 10:30 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(39552) idle
postgres 11022 7352 0 10:30 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(39560) idle
postgres 11023 7352 0 10:30 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(39564) idle
postgres 11024 7352 0 10:30 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(39568) idle
postgres 11027 7352 0 10:30 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(39584) idle
postgres 11056 7352 0 10:30 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(35346) idle
postgres 11057 7352 0 10:30 ? 00:00:00 postgres: uyuni uyuni 127.0.0.1(35354) idle
ec2-user 11182 1579 0 10:31 pts/0 00:00:00 grep -E --color=auto postgres|salt|httpd
```
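Grepping the process list works, but asking systemd about the corresponding units gives a clearer yes/no answer. The sketch below uses a hypothetical `check_units` function, and the unit names in the usage line (`postgresql`, `salt-master`, `salt-api`, `apache2`) are assumptions based on the usual openSUSE packaging, not taken from this setup:

```shell
#!/bin/sh
# Sketch: print the state of each given systemd unit and return
# non-zero if at least one of them is not active.
check_units() {
    failed=0
    for unit in "$@"; do
        if systemctl is-active --quiet "$unit"; then
            printf '%-12s active\n' "$unit"
        else
            printf '%-12s NOT active\n' "$unit"
            failed=1
        fi
    done
    return "$failed"
}
```

Usage (unit names assumed): `check_units postgresql salt-master salt-api apache2`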

The PostgreSQL version (14.5) is one minor release behind the latest one at the time of writing (14.6), but this is not too bad:

```
ec2-user@uyuni-server:~> sudo su - postgres
postgres@uyuni-server:~> psql
psql (14.5)
Type "help" for help.

postgres=# \l
                                  List of databases
   Name    |  Owner   | Encoding |   Collate   |    Ctype    |   Access privileges
-----------+----------+----------+-------------+-------------+-----------------------
 postgres  | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 |
 reportdb  | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 |
 template0 | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 | =c/postgres          +
           |          |          |             |             | postgres=CTc/postgres
 template1 | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 | =c/postgres          +
           |          |          |             |             | postgres=CTc/postgres
 uyuni     | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 |
(5 rows)

postgres=#
```
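To keep an eye on the PostgreSQL minor release over time, the version can be checked from a script instead of reading the psql banner. A minimal sketch, with `pg_version_at_least` as a hypothetical helper name: it compares `server_version_num` (for example 14.5 is reported as 140005), which is easier to compare than the human-readable `server_version` string. It assumes it runs as the postgres OS user, as in the session above.

```shell
#!/bin/sh
# Sketch: succeed if the local PostgreSQL server is at least the given
# release, expressed as server_version_num (e.g. 14.6 -> 140006).
# -t: tuples only, -A: unaligned output, -c: run a single command.
pg_version_at_least() {
    min=$1
    current=$(psql -tAc 'SHOW server_version_num;') || return 2
    [ "$current" -ge "$min" ]
}
```

On this server (14.5), `pg_version_at_least 140005` succeeds, while `pg_version_at_least 140006` fails until the 14.6 update is installed.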

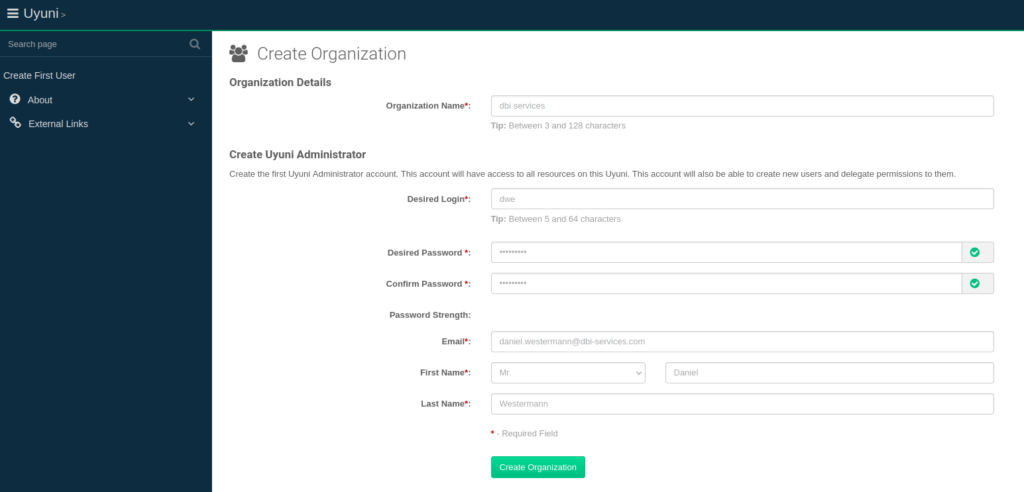

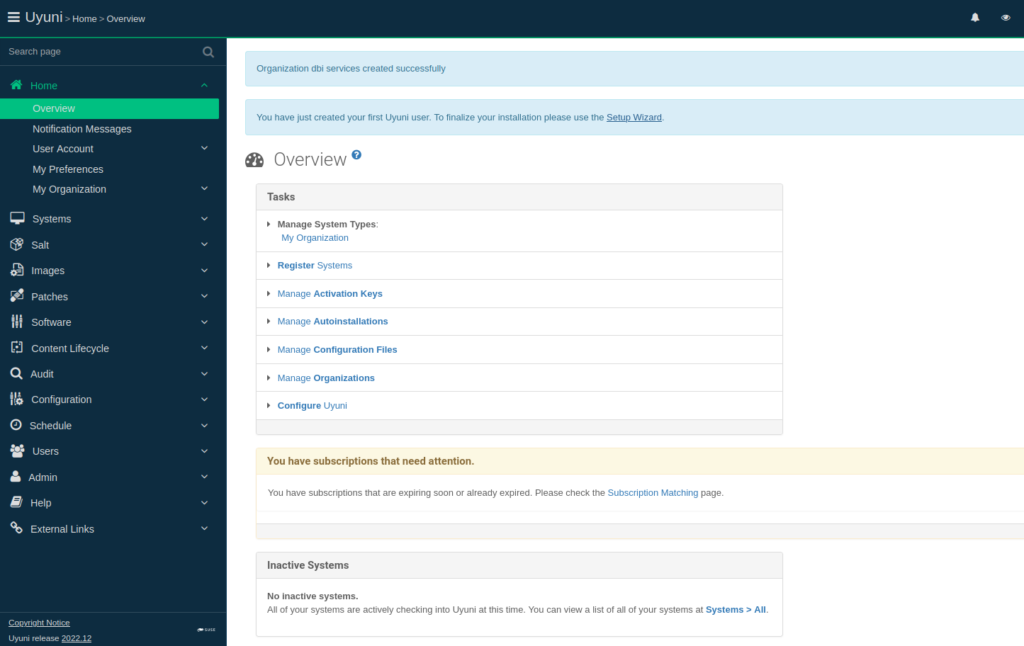

The final step is to create an organization using the web interface:

That’s all for the server setup and configuration part. In the next post, we’ll add a client that we can then manage with the brand new Uyuni server.
