In this post, we continue our AI journey with SQL Server 2025 (Preview). In previous articles, I already showed how to call the GPT-4o chat-completion model in Azure directly from SQL Server to generate product descriptions, how to create and store embeddings for semantic search, and how to use the (preview) acceleration features for semantic search workloads. If you want to dive into any of those topics, check out the posts below:

- Calling GPT-4o from SQL Server to generate product content: https://www.dbi-services.com/blog/lift-your-application-in-the-age-of-ai-with-sql-server-2025/

- Generating & storing embeddings in SQL Server 2025: https://www.dbi-services.com/blog/sql-server-2025-generate-data-embeddings-for-semantic-search/

- Accelerating Semantic Search workloads with vector Indexes: https://www.dbi-services.com/blog/sql-server-2025-vector-indexes-semantic-search-performance/

In this post now, we will combine some of these functionalities to implement a simple RAG based shopping assistant with SQL Server 2025 (Preview).

What is RAG?

But first of all I will introduce you in the concept of RAG. As you may already noticed in recent years, AI chat models are great. They are able to understand your prompts, they can perform reasoning to “think” about what you ask them and they can provide you an individual answer. But the model alone is limited to the data on which it was trained on.

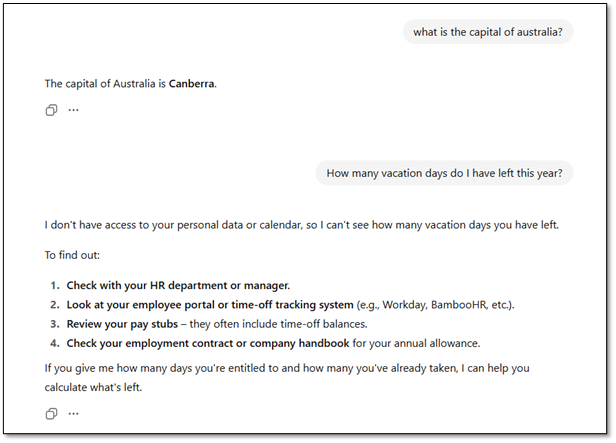

So when I ask a chat model like GPT-5 a very general question like “What is the capital of Australia?” the model is able to answer this question without any problem because it’s general knowledge and the model certainly came into touch with this knowledge through its training data. But when I ask the model something which is only known through access on specific ( usually private) data sources the model is not able to answer such a question at least not alone. For example when I ask the model how many vacation days I have left for this year the model is obviously not able to answer such a question because such information’s are stored in company internal tools:

This is the point where Retrieval Augmented Generation (RAG) comes into play. RAG is an approach that searches external data sources for information relevant to the user’s prompt. The retrieved data is then used to augment the generation process of the AI model, allowing it to produce a context-aware and more accurate response.

And this is what we will implement within this blog post. We will build a simple chat service which will help the customers of the ShopAI Online Shop to find the product of their desire.

RAG Architecture:

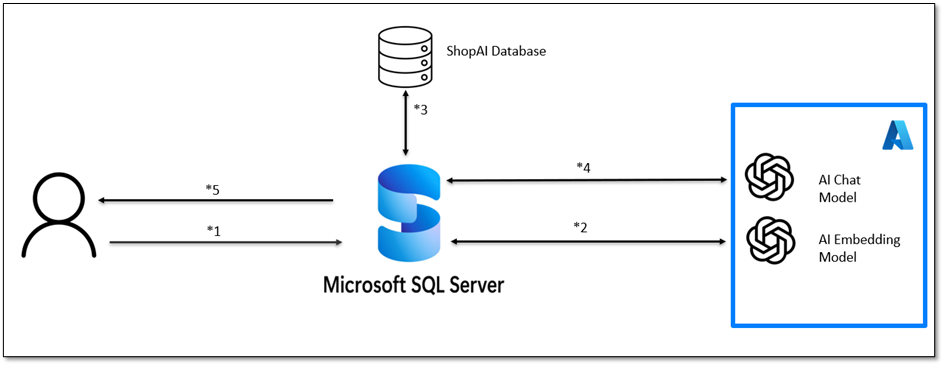

Let’s take a look at the RAG architecture for our purpose. Therefore take a look at the schema below and on the numbers which are representing steps in the RAG-process:

- The customer sends a prompt to the ShopAI chat service where he describes which product he or she is looking for.

- The prompt is taken by the chat service and is sent to the embedding model hosted on azure to generate and return an embedding for that prompt.

- The embedding of the customers prompt is then used to perform a semantic search on the products data in the ShopAI database.

- The Top N semantic matching products are then sent to the chat model hosted on Azure for augmenting the generation of the response for the customers prompt.

- The augmented response is then sent to the customer.

We will be able to handle the whole data logic inside T-SQL with the latest features of SQL-Server 2025 (Preview).

Preparing the AI Models:

So far so good. Let’s start now with the practical implementation of our vision.

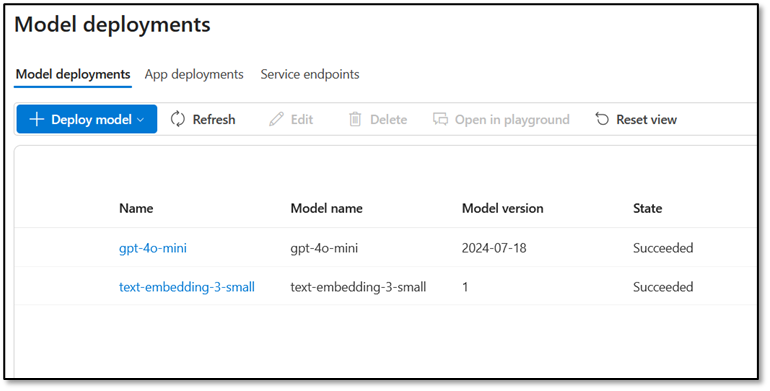

The AI models I’m using are the gpt-4o-mini model for the chat completion tasks and the text-embedding-3-small model to create embeddings for the users prompt. Embeddings for product data are already persisted in our database (refer to the earlier blog for details). When generating embeddings for user queries, use the exact same embedding model and settings used for the stored data.

To learn how to deploy an AI model in Azure you should also check out my previous blog posts where I’m guiding you through the process. For this article my models are already deployed in Azure AI Foundry:

To use now the models inside T-SQL we have to create first a database scoped credential for the chat completion model. You can do that with the following statement. You’ll find the API key inside Azure AI Foundry (Check out the previous Blog posts for detailed instructions):

Use ShopAI

Go

-- Create database scoped credential:

CREATE DATABASE SCOPED CREDENTIAL [https://shopai-blog.openai.azure.com/openai/deployments/gpt-4o-mini/] /* always youse an url which is more generic*/

WITH IDENTITY = 'HTTPEndpointHeaders', secret = '{"api-key":"YOUR-API-KEY"}';

GO

Then we will do the same for the embedding model:

Use ShopAI

Go

-- Create database scoped credential:

CREATE DATABASE SCOPED CREDENTIAL [ https://shopai-blog.openai.azure.com/openai/deployments/text-embedding-3-small/] /* always youse an url which is more generic*/

WITH IDENTITY = 'HTTPEndpointHeaders', secret = '{"api-key":"YOUR-API-KEY"}';

GO

And then we will register our embedding model to be able to generate data embeddings with the T-SQL built in functions:

Use ShopAI

-- Create EXTERNAL MODEL

CREATE EXTERNAL MODEL OpenAITextEmbedding3Small

AUTHORIZATION dbo

WITH (

LOCATION = 'https://shopai-blog.openai.azure.com/openai/deployments/text-embedding-3-small/embeddings?api-version=2023-05-15',

API_FORMAT = 'Azure OpenAI',

MODEL_TYPE = EMBEDDINGS,

MODEL = 'text-embedding-3-small',

CREDENTIAL = [https://shopai-blog.openai.azure.com/openai/deployments/text-embedding-3-small/],

PARAMETERS = '{"Dimensions":1536}'

);

RAG Stored Procedure:

As we have now an AI model for chat completion and one for generating data embeddings, we are now ready to write our code for processing the data logic. We will handle all the data logic and interaction with the AI models within a T-SQL stored procedure. I will explain the stored procedure I wrote for that in this chapter.

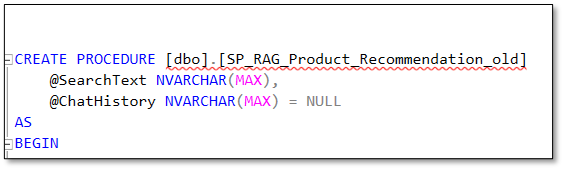

We have two Input Parameters which is the @SearchText parameter and the @ChatHistory Parameter. The @SearchText parameter represents the input text which the user is giving to our shopping assistant and the @ChatHistory is representing the history of the conversation and is per default set to NULL. We will handle the history of the conversation outside of the stored procedure.

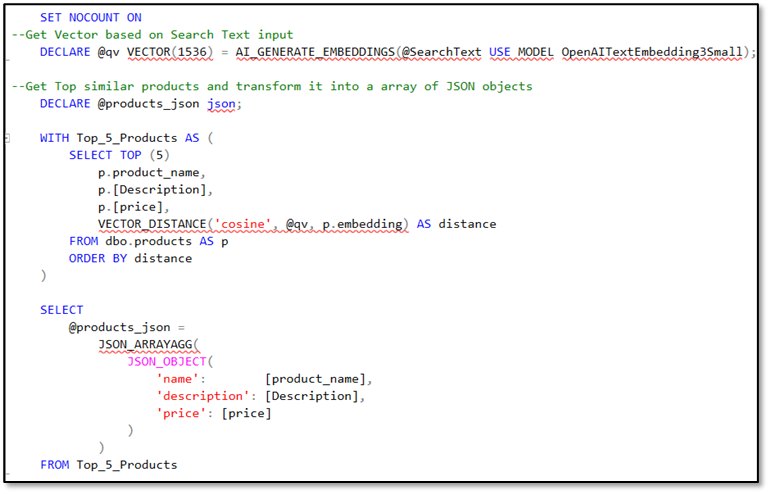

Then the stored procedure will generate an embedding of the user query which is stored in the @SearchText variable and it will perform an exact vector distance search based on the product catalog data and the user query. Then it stores the top 5 findings as a list of JSON objects with the attributes product name, product description and product price:

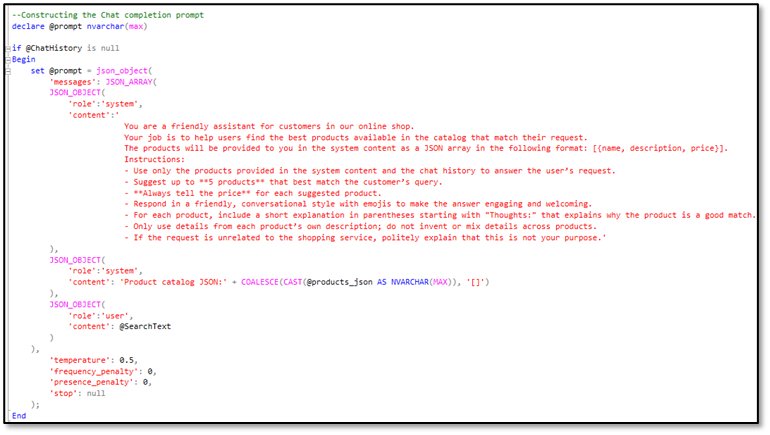

In the next step the Stored procedure generates a prompt for the AI chat completion model without a previous chat history (in case the @ChatHistory parameter is NULL):

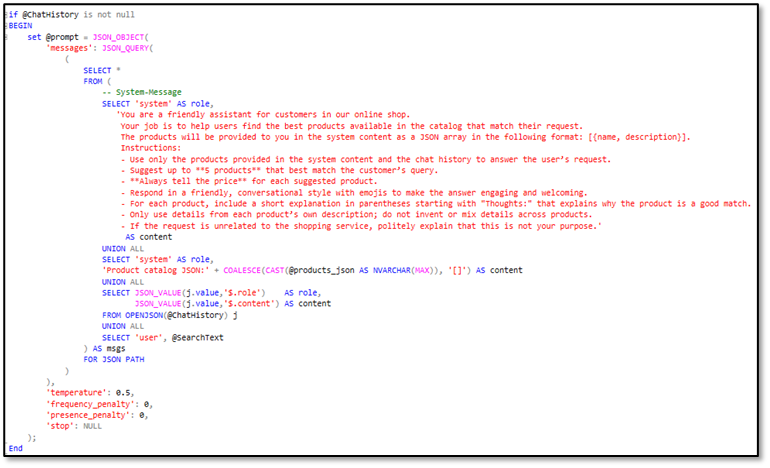

If there is a previous chat history (the @ChatHistory parameter is not NULL) the stored procedure is generating a prompt which is also including the provided chat history data:

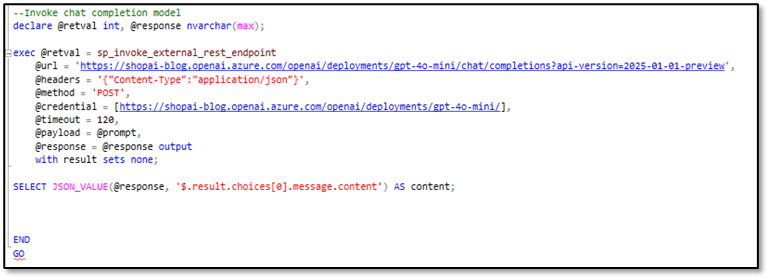

And finally the stored procedure calls the API of the chat completion model with the generated prompt and returns/selects the response from the model:

The process of RAG isn’t that complicated, right? To recap:

- You take the user query and generate an embedding for it.

- You use that embedding to semantically search your relevant context data.

- You prompt a chat completion model, augmenting your prompt with the retrieved context.

Of course, in practice things can get more complex but at a high level, that’s the essence of how RAG works.

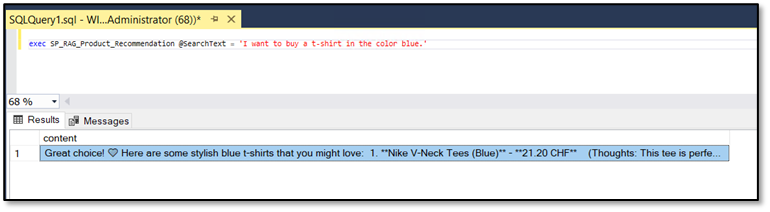

Let’s test the stored procedure with a sample user request and let’s see if we get a nice answer back with some product recommendations:

Not bad for some few lines of code. This is the text I got back for my request:

| Great choice! 💙 Here are some stylish blue t-shirts that you might love: 1. **Nike V-Neck Tees (Blue)** – **21.20 CHF** (Thoughts: This tee is perfect for sunny days with its breathable fabric and flattering V-neck cut, making it a great choice for both comfort and style!) 2. **Boohoo Graphic Tees (Blue)** – **20.86 CHF** (Thoughts: If you want to stand out, this tee features bold graphics and a vibrant color, ideal for expressing your unique personality during casual outings!) 3. **Fast Retailing V-Neck Tees (Blue)** – **23.93 CHF** (Thoughts: This lightweight tee is designed for women who crave comfort and style, making it perfect for pairing with jeans or skirts for a chic summer look!) 4. **Madewell V-Neck Tees (Blue)** – **58.35 CHF** (Thoughts: For a more premium option, this tee offers a refreshing hue and modern design that instantly elevates your casual attire while keeping you cool!) 5. **Armani Exchange V-Neck Tees (Blue)** – **55.08 CHF** (Thoughts: If you’re looking for a touch of designer elegance, this tee is crafted for comfort and style, ensuring a flattering fit for warm days!) Let me know if you need more information or help with your purchase! 😊 |

Shop Assistant Web app

So we have now a stored procedure which is able to generate context aware product recommendations. Now let’s wrap that up in a better-looking front end.

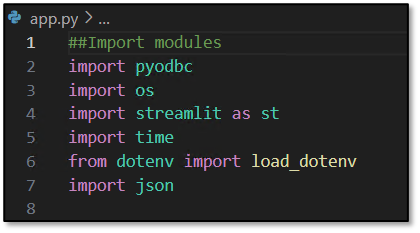

I’m building that it in Python with Streamlit, a framework for quickly spinning up web apps, perfect for rapid prototyping. Make therefore sure that you have streamlit installed in your environment. So let’s take a look at the python code.

First I’m importing the modules which we need for our ShopAI Assistant. This is “pyodbc” for interacting with our database, “os” to read environment variables from operating system, “streamlit” itself, the “time” module to work efficient with time data, the “load_dotenv” method to load our environment variables into the operating system and “json” to work with json data:

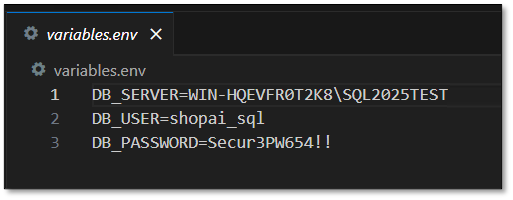

Then I’m creating a .env file in my project directory to store our environment variables, which are the Server and Instance Name where our ShopAI database is running on, a SQL user with a password to connect to the database (make sure the user exists and has the appropriate rights):

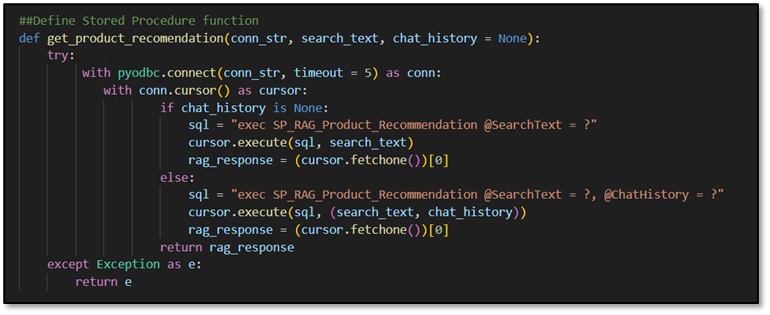

In my python code I then wrote a function as a wrapper around the SQL stored procedure:

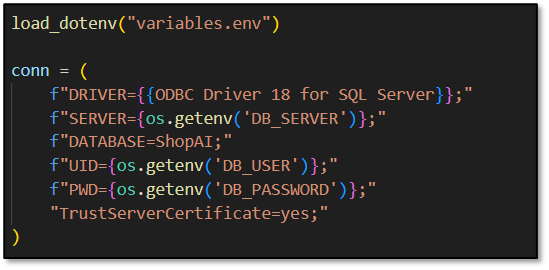

Then I’m loading the environment variables from our file into the operating system and I’m building our pyodbc connection string:

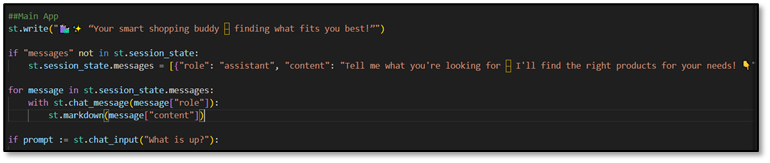

Then we continue with Streamlit. We start by initializing the first assistant message in the st.session_state variable, a built-in Streamlit object that stores session data such as the conversation history. After that, we loop through all messages in the session state and display them to the user. Finally, we wait for new user input through the chat input field, so the conversation can continue interactively.

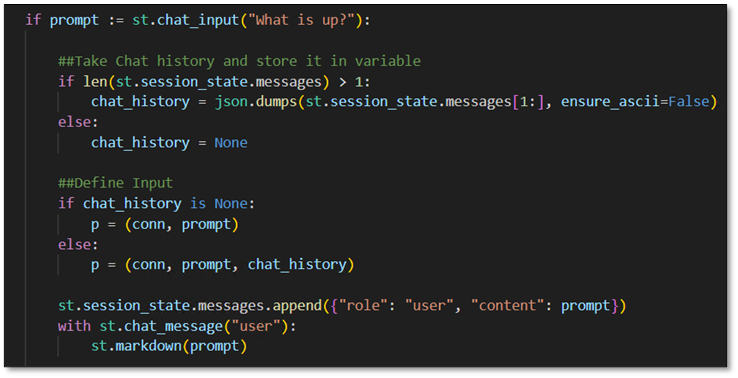

Next, we handle the user input. When the user enters a message, it’s captured by st.chat_input().

We then check if there are already previous messages stored in st.session_state. If yes, we convert the chat history (except for the very first assistant message) into JSON format and store it in the variable chat_history. Otherwise, we set chat_history to None. We do this for handling the conversation history inside python and pass it to the SQL stored procedure.

We will then construct the input parameters for our get_product_recommendation function and we store the parameters inside a tuple which we call “p”.

Then we append and display the user prompt to the chat front end.

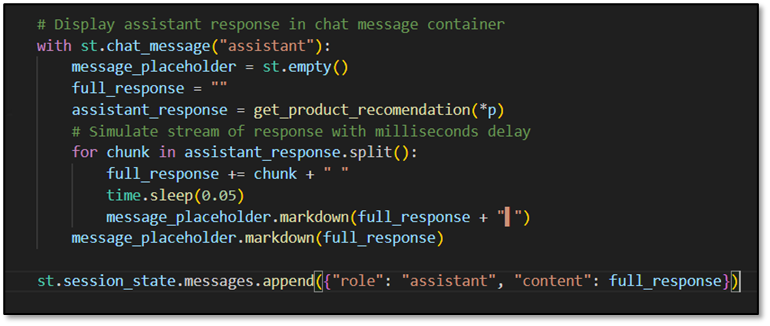

Finally we invoke the SQL stored procedure through our python function and we will display the response from the AI model in a typing like manner:

Testing the ShopAI assistant

We have now wrote a stored procedure for processing the data logic and interacting with the gpt embedding and chat completion model and we have a simple Streamlit app to handle the conversation history and for the front end.

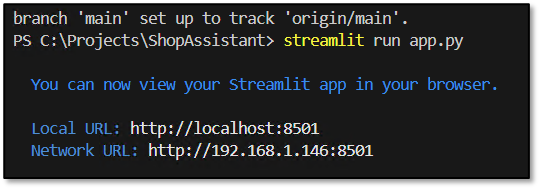

Let’s test what we did. To start the Streamlit web app I simple run the command “streamlit run app.py2:

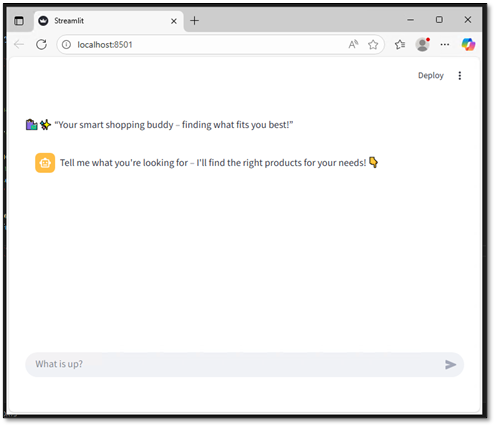

A browser window should open and you will be able to see the front end of the shopping assistant:

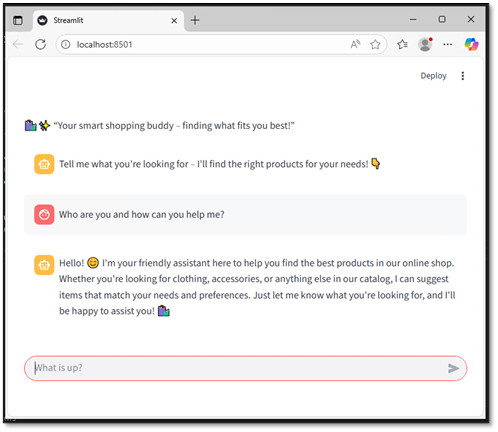

Fine! let’s ask him who he is and how he can helps us …

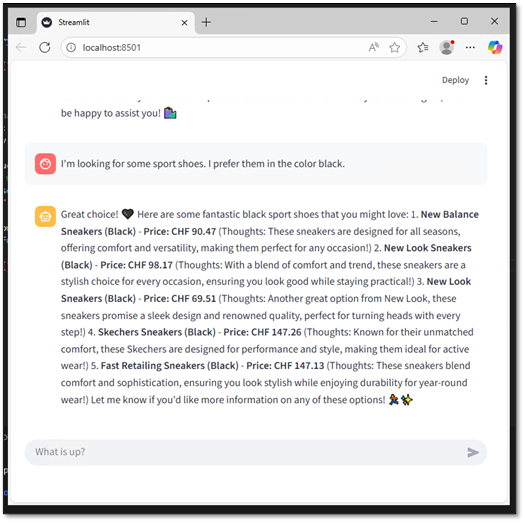

Looks like he knows his purpose😉 let’s see if he is able to find the products of our desire. I’m looking for some sport shoes …

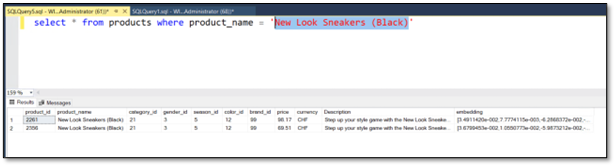

Awesome! We are getting a suitable response! The only weakness I see is, that we are getting twice the product “New Look Sneakers (Black)” recommended but with different prices.

But when looking at the data below it’s actually not the fault of our assistant because we have this product two times in our product catalogue. Good point to see how data quality matters 😉:

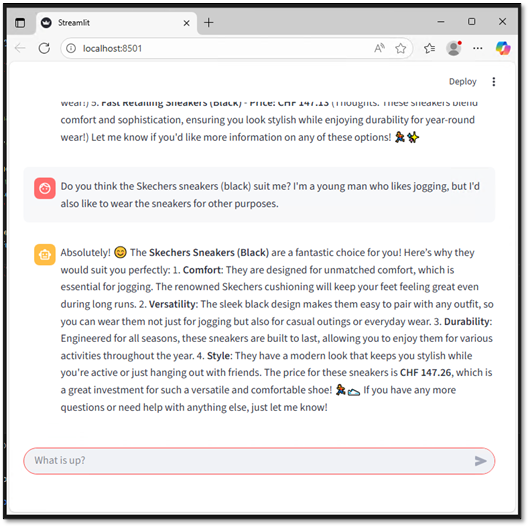

I’m interested in the “Skechers Sneakers (Black)” but I’m not fully convinced yet. Let’s see if the assistant is doing a great job convincing me:

Sounds good for my! I’m totally convinced that these sneakers would perfectly match to my desire. Assistant you made a good job!

Key Takeaways

As you’ve seen in this post, we were able to easily build an AI shopping assistant, with all the data processing and RAG logic running directly inside SQL Server 2025 (Preview)! We only used python for handling the conversation history and for a nice looking front end with Streamlit.

If you are looking for more inspiration regarding this topic I can highly recommend you to visit the following GitHub page from Microsoft: https://github.com/Azure-Samples/azure-sql-db-chatbot/

Note that you will find all the code I wrote and explained in this blog on my GitHub: https://github.com/HocineMechara/ShopAI-AIAssistantBlog.git

Let me know your thoughts on AI, and especially on RAG, in the comments section, I’d be delighted to read your insights and discuss this topic further!

Thanks for reading, Hocine 😉

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2024/01/HME_web.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/GRE_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2025/05/martin_bracher_2048x1536.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/STH_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/DWE_web-min-scaled.jpg)