One issue with traditional database systems like PostgreSQL is, that you cannot easily scale vertically. Of course you could add read replicas and offload read operations, but that either requires changes in the application, or putting something in front that understands the PostgreSQL dialect and automatically routes writes to the primary and spreads reads across one or more replicas (e.g. pgpool-II). But even if you have something in place, you need to deal with replications lags or you need to go for synchronous replication (which comes with it’s own downsides). Another answer to vertically scaling is Citus. By using Citus you can have sharding in PostgreSQL by simple installing an extension.

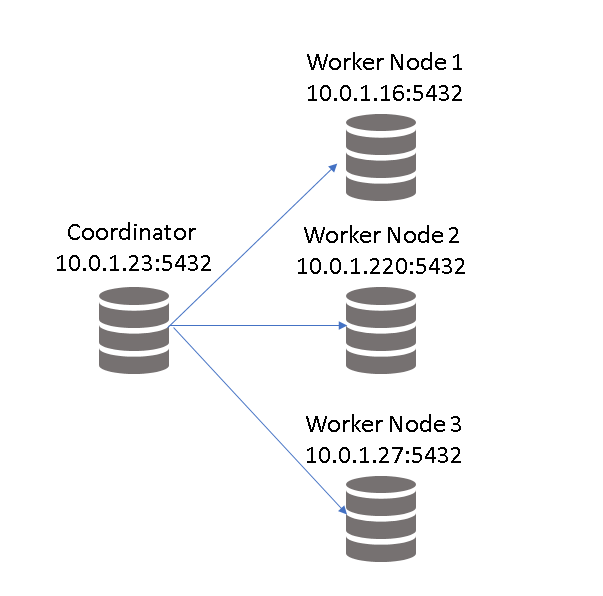

The official Citus documentation is really good, so I will not repeat the concepts here. Basically you need one coordinator: This is the node receiving all the traffic from the application. All other nodes are so called worker nodes which perform the actual work, send the results back to the coordinator which finally accumulates the results.

We’ll start simple with one node, and will end up with one coordinator node and three worker nodes at the end of this post:

Important right from the beginning: Citus is not a fork of PostgreSQL. Citus comes as an extension and you can use it with plain community PostgreSQL. Nothing else is required. Of course you need to install the extension and there are pre-build packages for that, either for flavors of Debian of flavors of Red Hat/Fedora. In this post will walk through installing the Citus extension from source code, as I’ve installed PostgreSQL 13.2 from source code as well (this is already done, search this blog for “install postgres source code”, if you want to know how to do that).

The first thing to do is to check your environment. pg_config needs to be in your $PATH so the Citus extension can find it when it configures/compiles:

postgres@ip-10-0-1-23:/home/postgres/ [pg132] which pg_config /u01/app/postgres/product/13/db_2/bin/pg_config

Once that is ready the procedure for getting the Citus extension installed is quite simple. Either clone the git repository (which will give you the latest development snapshot) or download the latest release. For the scope of this post we’ll clone the repository:

postgres@ip-10-0-1-23:/home/postgres/ [pg132] git clone https://github.com/citusdata/citus.git Cloning into 'citus'... remote: Enumerating objects: 66167, done. remote: Counting objects: 100% (1447/1447), done. remote: Compressing objects: 100% (638/638), done. remote: Total 66167 (delta 942), reused 1166 (delta 804), pack-reused 64720 Receiving objects: 100% (66167/66167), 33.13 MiB | 25.28 MiB/s, done. Resolving deltas: 100% (47834/47834), done.

Having sources in place, configure, compile and install (I am installing the packages for Debian here, you need to adjust this for other Linux distributions):

postgres@ip-10-0-1-23:/home/postgres/ [pg132] cd citus/ postgres@ip-10-0-1-23:/home/postgres/citus/ [pg132] sudo apt install libghc-curl-dev postgres@ip-10-0-1-23:/home/postgres/citus/ [pg132] sudo apt install libzstd-dev postgres@ip-10-0-1-23:/home/postgres/citus/ [pg132] ./configure checking for a sed that does not truncate output... /usr/bin/sed checking for gawk... no checking for mawk... mawk ... config.status: creating src/include/citus_config.h config.status: creating src/include/citus_version.h

Ready to compile …

postgres@ip-10-0-1-23:/home/postgres/citus/ [pg132] make Makefile:51: warning: overriding recipe for target 'check' /u01/app/postgres/product/13/db_2/lib/pgxs/src/makefiles/pgxs.mk:433: warning: ignoring old recipe for target 'check' make -C src/backend/distributed/ all ... -D_GNU_SOURCE -I/usr/include/libxml2 -I/u01/app/postgres/product/13/db_2/include -I/home/postgres/citus/vendor/safestringlib/include -flto=thin -emit-llvm -c -o ../columnar/write_state_management.bc ../columnar/write_state_management.c make[1]: Leaving directory '/home/postgres/citus/src/backend/distributed'

.. and install:

postgres@ip-10-0-1-23:/home/postgres/citus/ [pg132] make install Makefile:51: warning: overriding recipe for target 'check' /u01/app/postgres/product/13/db_2/lib/pgxs/src/makefiles/pgxs.mk:433: warning: ignoring old recipe for target 'check' make -C src/backend/distributed/ all make[1]: Entering directory '/home/postgres/citus/src/backend/distributed' ... /usr/bin/install -c -m 644 ./src/include/citus_version.h '/u01/app/postgres/product/13/db_2/include/server/' /usr/bin/install -c -m 644 /home/postgres/citus/./src/include/distributed/*.h '/u01/app/postgres/product/13/db_2/include/server/distributed/'

From now on the Citus extension will be available for every PostgreSQL cluster which is initialized with these binaries. As usual, we’ll need to initialize a new cluster:

postgres@ip-10-0-1-23:/home/postgres/citus/ [pg132] mkdir -p /u02/pgdata/13/citus

postgres@ip-10-0-1-23:/home/postgres/citus/ [pg132] initdb -D /u02/pgdata/13/citus

The files belonging to this database system will be owned by user "postgres".

This user must also own the server process.

The database cluster will be initialized with locale "C.UTF-8".

The default database encoding has accordingly been set to "UTF8".

The default text search configuration will be set to "english".

Data page checksums are disabled.

fixing permissions on existing directory /u02/pgdata/13/citus ... ok

creating subdirectories ... ok

selecting dynamic shared memory implementation ... posix

selecting default max_connections ... 100

selecting default shared_buffers ... 128MB

selecting default time zone ... Etc/UTC

creating configuration files ... ok

running bootstrap script ... ok

performing post-bootstrap initialization ... ok

syncing data to disk ... ok

initdb: warning: enabling "trust" authentication for local connections

You can change this by editing pg_hba.conf or using the option -A, or

--auth-local and --auth-host, the next time you run initdb.

Success. You can now start the database server using:

pg_ctl -D /u02/pgdata/13/citus -l logfile start

Start the cluster and install the Citus extension:

postgres@ip-10-0-1-23:/home/postgres/citus/ [pg132] echo "shared_preload_libraries = 'citus,pg_stat_statements'" >> /u02/pgdata/13/citus/postgresql.auto.conf postgres@ip-10-0-1-23:/home/postgres/citus/ [pg132] pg_ctl -D /u02/pgdata/13/citus/ start postgres@ip-10-0-1-23:/home/postgres/citus/ [pg132] psql -c "select * from pg_available_extensions where name like '%citus%'" postgres name | default_version | installed_version | comment -------+-----------------+-------------------+---------------------------- citus | 10.1-1 | | Citus distributed database (1 row)

Installing the extension into a database works like any other extension (you’ll notice that the extension creates a self signed certificate by default):

postgres@ip-10-0-1-23:/home/postgres/citus/ [pg132] psql -c "create database citus" postgres

CREATE DATABASE

postgres@ip-10-0-1-23:/home/postgres/citus/ [pg132] psql -c "create extension citus" citus

2021-05-05 17:44:02.467 UTC [7306] LOG: citus extension created on postgres without ssl enabled, turning it on during creation of the extension

2021-05-05 17:44:02.467 UTC [7306] CONTEXT: SQL statement "SELECT citus_setup_ssl()"

PL/pgSQL function inline_code_block line 5 at PERFORM

2021-05-05 17:44:02.467 UTC [7306] STATEMENT: create extension citus

2021-05-05 17:44:02.477 UTC [7306] LOG: no certificate present, generating self signed certificate

2021-05-05 17:44:02.477 UTC [7306] CONTEXT: SQL statement "SELECT citus_setup_ssl()"

PL/pgSQL function inline_code_block line 5 at PERFORM

2021-05-05 17:44:02.477 UTC [7306] STATEMENT: create extension citus

2021-05-05 17:44:02.586 UTC [7289] LOG: received SIGHUP, reloading configuration files

2021-05-05 17:44:02.587 UTC [7289] LOG: parameter "ssl" changed to "on"

2021-05-05 17:44:02.587 UTC [7289] LOG: parameter "ssl_ciphers" changed to "ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES128-SHA256:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES128-SHA256:ECDHE-RSA-AES256-SHA384"

CREATE EXTENSION

postgres@ip-10-0-1-23:/home/postgres/citus/ [pg132] ls -latr /u02/pgdata/13/citus/server*

-rw------- 1 postgres postgres 1708 May 5 17:44 /u02/pgdata/13/citus/server.key

-rw------- 1 postgres postgres 981 May 5 17:44 /u02/pgdata/13/citus/server.crt

The Citus extension is ready:

postgres@ip-10-0-1-23:/home/postgres/citus/ [pg132] psql -c "select citus_version();" citus

2021-05-05 17:51:13.927 UTC [7343] LOG: starting maintenance daemon on database 16384 user 10

2021-05-05 17:51:13.927 UTC [7343] CONTEXT: Citus maintenance daemon for database 16384 user 10

citus_version

-----------------------------------------------------------------------------------------------------------------------

Citus 10.1devel on x86_64-pc-linux-gnu, compiled by gcc (Debian 8.3.0-6) 8.3.0, 64-bit gitref: master(sha: d0ba12206)

(1 row)

Cheking the processes, there is new background worker:

postgres@ip-10-0-1-23:/home/postgres/citus/ [pg132] ps ax | grep Citus 7343 ? Ss 0:00 postgres: Citus Maintenance Daemon: 16384/10 7351 pts/0 S+ 0:00 grep Citus

Time to create some tables and data using pgbench:

postgres@ip-10-0-1-23:/home/postgres/ [citus] pgbench -i -s 10 citus dropping old tables... NOTICE: table "pgbench_accounts" does not exist, skipping NOTICE: table "pgbench_branches" does not exist, skipping NOTICE: table "pgbench_history" does not exist, skipping NOTICE: table "pgbench_tellers" does not exist, skipping creating tables... generating data (client-side)... 1000000 of 1000000 tuples (100%) done (elapsed 4.18 s, remaining 0.00 s) vacuuming... creating primary keys... done in 6.35 s (drop tables 0.00 s, create tables 0.03 s, client-side generate 4.43 s, vacuum 0.32 s, primary keys 1.56 s).

As of now these are standard tables and we can turn them into distributed tables by using the “create_distributed_table” function and provide the column we we want to shard on:

postgres@ip-10-0-1-23:/home/postgres/ [citus] psql -c "SELECT create_distributed_table('pgbench_accounts', 'aid')" citus

NOTICE: Copying data from local table...

NOTICE: copying the data has completed

DETAIL: The local data in the table is no longer visible, but is still on disk.

HINT: To remove the local data, run: SELECT truncate_local_data_after_distributing_table($$public.pgbench_accounts$$)

create_distributed_table

--------------------------

(1 row)

postgres@ip-10-0-1-23:/home/postgres/ [citus] psql -c "SELECT truncate_local_data_after_distributing_table(\$\$public.pgbench_accounts\$\$)" citus

truncate_local_data_after_distributing_table

----------------------------------------------

(1 row)

This created a distributed table and you can query citus_tables to get more information:

postgres@ip-10-0-1-23:/home/postgres/ [citus] psql -c "select * from citus_tables" citus

table_name | citus_table_type | distribution_column | colocation_id | table_size | shard_count | table_owner | access_method

------------------+------------------+---------------------+---------------+------------+-------------+-------------+---------------

pgbench_accounts | distributed | aid | 1 | 151 MB | 32 | postgres | heap

(1 row)

Running a simple query against that table, you’ll notice that the execution plan shows other plan nodes than usually:

citus=# explain (analyze) select * from pgbench_accounts where aid=100;

QUERY PLAN

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Custom Scan (Citus Adaptive) (cost=0.00..0.00 rows=0 width=0) (actual time=12.633..12.635 rows=1 loops=1)

Task Count: 1

Tuple data received from nodes: 89 bytes

Tasks Shown: All

-> Task

Tuple data received from node: 89 bytes

Node: host=localhost port=5432 dbname=citus

-> Index Scan using pgbench_accounts_pkey_102024 on pgbench_accounts_102024 pgbench_accounts (cost=0.29..8.30 rows=1 width=97) (actual time=0.019..0.021 rows=1 loops=1)

Index Cond: (aid = 100)

Planning Time: 0.407 ms

Execution Time: 0.046 ms

Planning Time: 0.481 ms

Execution Time: 12.658 ms

(13 rows)

As we currently only have one node, all data is coming from this node. Time to add more nodes to the cluster. Before doing this, repeat the Citus installation on the remaining nodes, initiliaze a new PostgreSQL cluster in the same way as above, and make sure that authentication is configured so the nodes can talk to each other (pg_hba.conf). Before we can add worker nodes we need to specify which of the nodes is the coordinator node, and we’ll use the current one for this

postgres@ip-10-0-1-23:/home/postgres/ [citus] psql -c "SELECT citus_set_coordinator_host('10.0.1.23') " citus

citus_set_coordinator_host

----------------------------

(1 row)

Now we can add the workers:

postgres@ip-10-0-1-23:/home/postgres/ [citus] psql -c "SELECT * from citus_add_node('10.0.1.16', 5432)" citus

NOTICE: shards are still on the coordinator after adding the new node

HINT: Use SELECT rebalance_table_shards(); to balance shards data between workers and coordinator or SELECT citus_drain_node('10.0.1.23',5432); to permanently move shards away from the coordinator.

citus_add_node

----------------

2

(1 row)

postgres@ip-10-0-1-23:/home/postgres/ [citus] psql -c "SELECT * from citus_add_node('10.0.1.220', 5432)" citus

citus_add_node

----------------

3

(1 row)

postgres@ip-10-0-1-23:/home/postgres/ [citus] psql -c "SELECT * from citus_add_node('10.0.1.27', 5432)" citus

citus_add_node

----------------

4

(1 row)

Distributing the data across the nodes is something you need to kick of manually:

postgres@ip-10-0-1-23:/home/postgres/ [citus] psql -c "SELECT rebalance_table_shards()" citus NOTICE: Moving shard 102008 from 10.0.1.23:5432 to 10.0.1.16:5432 ... NOTICE: Moving shard 102009 from 10.0.1.23:5432 to 10.0.1.220:5432 ... NOTICE: Moving shard 102010 from 10.0.1.23:5432 to 10.0.1.27:5432 ... NOTICE: Moving shard 102011 from 10.0.1.23:5432 to 10.0.1.16:5432 ... NOTICE: Moving shard 102012 from 10.0.1.23:5432 to 10.0.1.220:5432 ... NOTICE: Moving shard 102013 from 10.0.1.23:5432 to 10.0.1.27:5432 ... NOTICE: Moving shard 102014 from 10.0.1.23:5432 to 10.0.1.16:5432 ... NOTICE: Moving shard 102015 from 10.0.1.23:5432 to 10.0.1.220:5432 ... NOTICE: Moving shard 102016 from 10.0.1.23:5432 to 10.0.1.27:5432 ... NOTICE: Moving shard 102017 from 10.0.1.23:5432 to 10.0.1.16:5432 ... NOTICE: Moving shard 102018 from 10.0.1.23:5432 to 10.0.1.220:5432 ... NOTICE: Moving shard 102019 from 10.0.1.23:5432 to 10.0.1.27:5432 ... NOTICE: Moving shard 102020 from 10.0.1.23:5432 to 10.0.1.16:5432 ... NOTICE: Moving shard 102021 from 10.0.1.23:5432 to 10.0.1.220:5432 ... NOTICE: Moving shard 102022 from 10.0.1.23:5432 to 10.0.1.27:5432 ... NOTICE: Moving shard 102023 from 10.0.1.23:5432 to 10.0.1.16:5432 ... NOTICE: Moving shard 102024 from 10.0.1.23:5432 to 10.0.1.220:5432 ... NOTICE: Moving shard 102025 from 10.0.1.23:5432 to 10.0.1.27:5432 ... NOTICE: Moving shard 102026 from 10.0.1.23:5432 to 10.0.1.16:5432 ... NOTICE: Moving shard 102027 from 10.0.1.23:5432 to 10.0.1.220:5432 ... NOTICE: Moving shard 102028 from 10.0.1.23:5432 to 10.0.1.27:5432 ... NOTICE: Moving shard 102029 from 10.0.1.23:5432 to 10.0.1.16:5432 ... NOTICE: Moving shard 102030 from 10.0.1.23:5432 to 10.0.1.220:5432 ... NOTICE: Moving shard 102031 from 10.0.1.23:5432 to 10.0.1.27:5432 ... rebalance_table_shards ------------------------ (1 row)

Finally remove the data from the coordinator node by draining the node:

postgres@ip-10-0-1-23:/home/postgres/ [citus] psql -c "SELECT citus_drain_node('10.0.1.23',5432)" citus

NOTICE: Moving shard 102032 from 10.0.1.23:5432 to 10.0.1.16:5432 ...

NOTICE: Moving shard 102033 from 10.0.1.23:5432 to 10.0.1.220:5432 ...

NOTICE: Moving shard 102034 from 10.0.1.23:5432 to 10.0.1.27:5432 ...

NOTICE: Moving shard 102035 from 10.0.1.23:5432 to 10.0.1.16:5432 ...

NOTICE: Moving shard 102036 from 10.0.1.23:5432 to 10.0.1.220:5432 ...

NOTICE: Moving shard 102037 from 10.0.1.23:5432 to 10.0.1.27:5432 ...

NOTICE: Moving shard 102038 from 10.0.1.23:5432 to 10.0.1.16:5432 ...

NOTICE: Moving shard 102039 from 10.0.1.23:5432 to 10.0.1.220:5432 ...

citus_drain_node

------------------

(1 row)

Doing the same query again, the execution plan shows that the data is not coming anymore from the local node:

postgres@ip-10-0-1-23:/home/postgres/ [citus] psql citus

psql (13.2)

Type "help" for help.

citus=# explain (analyze) select * from pgbench_accounts where aid=100;

QUERY PLAN

------------------------------------------------------------------------------------------------------------------------------------------

Custom Scan (Citus Adaptive) (cost=0.00..0.00 rows=0 width=0) (actual time=20.801..20.803 rows=1 loops=1)

Task Count: 1

Tuple data received from nodes: 89 bytes

Tasks Shown: All

-> Task

Tuple data received from node: 89 bytes

Node: host=10.0.1.220 port=5432 dbname=citus

-> Index Scan using pgbench_accounts_pkey_102024 on pgbench_accounts_102024 pgbench_accounts (cost=0.29..8.30 rows=1 width=97)

Index Cond: (aid = 100)

Planning Time: 1.794 ms

Execution Time: 1.124 ms

Planning Time: 2.193 ms

Execution Time: 20.841 ms

(13 rows)

All the shards are evenly distributed across the nodes:

postgres@ip-10-0-1-23:/home/postgres/ [citus] psql -c "\d+" -h 10.0.1.16 citus

List of relations

Schema | Name | Type | Owner | Persistence | Size | Description

--------+-------------------------+-------+----------+-------------+---------+-------------

public | citus_tables | view | postgres | permanent | 0 bytes |

public | pgbench_accounts_102008 | table | postgres | permanent | 4176 kB |

public | pgbench_accounts_102011 | table | postgres | permanent | 4128 kB |

public | pgbench_accounts_102014 | table | postgres | permanent | 4072 kB |

public | pgbench_accounts_102017 | table | postgres | permanent | 4120 kB |

public | pgbench_accounts_102020 | table | postgres | permanent | 4136 kB |

public | pgbench_accounts_102023 | table | postgres | permanent | 4144 kB |

public | pgbench_accounts_102026 | table | postgres | permanent | 4152 kB |

public | pgbench_accounts_102029 | table | postgres | permanent | 4136 kB |

public | pgbench_accounts_102032 | table | postgres | permanent | 4104 kB |

public | pgbench_accounts_102035 | table | postgres | permanent | 4104 kB |

public | pgbench_accounts_102038 | table | postgres | permanent | 4136 kB |

(12 rows)

postgres@ip-10-0-1-23:/home/postgres/ [citus] psql -c "\d+" -h 10.0.1.220 citus

List of relations

Schema | Name | Type | Owner | Persistence | Size | Description

--------+-------------------------+-------+----------+-------------+---------+-------------

public | citus_tables | view | postgres | permanent | 0 bytes |

public | pgbench_accounts_102009 | table | postgres | permanent | 4152 kB |

public | pgbench_accounts_102012 | table | postgres | permanent | 4144 kB |

public | pgbench_accounts_102015 | table | postgres | permanent | 4136 kB |

public | pgbench_accounts_102018 | table | postgres | permanent | 4128 kB |

public | pgbench_accounts_102021 | table | postgres | permanent | 4128 kB |

public | pgbench_accounts_102024 | table | postgres | permanent | 4136 kB |

public | pgbench_accounts_102027 | table | postgres | permanent | 4128 kB |

public | pgbench_accounts_102030 | table | postgres | permanent | 4144 kB |

public | pgbench_accounts_102033 | table | postgres | permanent | 4160 kB |

public | pgbench_accounts_102036 | table | postgres | permanent | 4136 kB |

public | pgbench_accounts_102039 | table | postgres | permanent | 4152 kB |

(12 rows)

postgres@ip-10-0-1-23:/home/postgres/ [citus] psql -c "\d+" -h 10.0.1.27 citus

List of relations

Schema | Name | Type | Owner | Persistence | Size | Description

--------+-------------------------+-------+----------+-------------+---------+-------------

public | citus_tables | view | postgres | permanent | 0 bytes |

public | pgbench_accounts_102010 | table | postgres | permanent | 4136 kB |

public | pgbench_accounts_102013 | table | postgres | permanent | 4152 kB |

public | pgbench_accounts_102016 | table | postgres | permanent | 4128 kB |

public | pgbench_accounts_102019 | table | postgres | permanent | 4136 kB |

public | pgbench_accounts_102022 | table | postgres | permanent | 4128 kB |

public | pgbench_accounts_102025 | table | postgres | permanent | 4144 kB |

public | pgbench_accounts_102028 | table | postgres | permanent | 4136 kB |

public | pgbench_accounts_102031 | table | postgres | permanent | 4136 kB |

public | pgbench_accounts_102034 | table | postgres | permanent | 4128 kB |

public | pgbench_accounts_102037 | table | postgres | permanent | 4112 kB |

(11 rows)

That’s it for the initial setup. In a future post we’ll dive into the system in more detail.

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/DWE_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2024/04/SIT_web.png)

Nadir Pervez

23.08.2024Hi, I am trying to configure multiple coordinator nodes with citus using patroni. How can i achieve this.