Oracle unveiled Exadata Database Service on Exascale Infrastructure in summer 2024. In this blog post, the first of a series dedicated to Exascale, we will dive into its architecture after a brief presentation of what Exascale is.

Introduction

Exadata on Exascale Infrastructure is a new deployment option for Exadata Database Service. It comes in addition to the well-known Exadata Cloud@Customer and Exadata on Dedicated Infrastructure options already available. It is based on a new storage management technology that decouples database and Grid Infrastructure clusters from the underlying Exadata storage servers by integrating the database kernel directly with the Exascale storage structures.

What is Exascale Infrastructure?

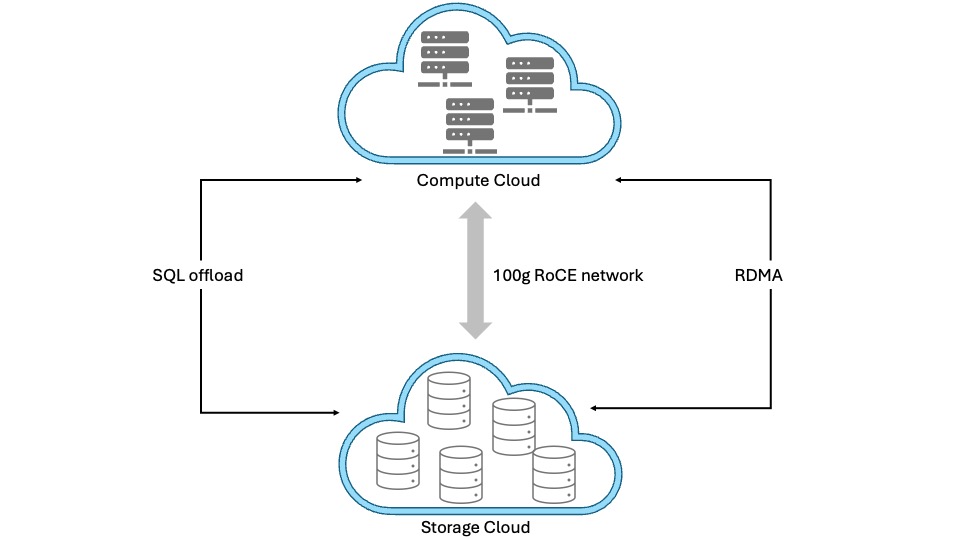

Simply put, Exascale is the next generation of Oracle Exadata Database Services. It combines a cloud storage approach for flexibility and hyper-elasticity with the performance of Exadata Infrastructure. It introduces a loosely coupled, shared and multitenant architecture where Database and Grid Infrastructure clusters are decoupled from the underlying Exadata storage servers, which become a pool of shared storage resources available to multiple Grid Infrastructure clusters and databases.

Strict data isolation provides secure storage sharing while storage pooling enables flexible and dynamic provisioning combined with better storage space and processing utilization.

Advanced snapshot and cloning features, leveraging Redirect-On-Write technology instead of Copy-On-Write, enable space-efficient thin clones from any read/write database or pluggable database. Read-only test masters are therefore a thing of the past. These features alone make Exascale a game-changer for database refreshes, CI/CD workflows and pipelines, and development environment provisioning, all done with single SQL commands and much faster than before.
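To make the Redirect-On-Write idea more concrete, here is a minimal Python sketch of a thin clone. It is a toy model only: the `BlockStore` and `Volume` classes are hypothetical names invented for this illustration and say nothing about how Exascale implements the mechanism internally. The point it shows is that a clone copies pointers rather than data, and that a later write lands in a fresh block without touching the blocks still shared by the source and the clone.

```python
"""Toy model of redirect-on-write cloning (illustrative, not Exascale code)."""


class BlockStore:
    """Append-only pool of physical blocks shared by all volumes."""

    def __init__(self):
        self.blocks = []  # physical block contents

    def allocate(self, data):
        self.blocks.append(data)
        return len(self.blocks) - 1  # id of the new physical block


class Volume:
    """Maps logical block numbers to physical block ids in the shared store."""

    def __init__(self, store, block_map=None):
        self.store = store
        self.block_map = dict(block_map or {})

    def write(self, logical, data):
        # Redirect-on-write: the new data goes to a fresh physical block and
        # only this volume's pointer moves. The old block is never copied.
        self.block_map[logical] = self.store.allocate(data)

    def read(self, logical):
        return self.store.blocks[self.block_map[logical]]

    def clone(self):
        # A thin clone duplicates the pointer map, not the data blocks.
        return Volume(self.store, self.block_map)


store = BlockStore()
source = Volume(store)
for i in range(4):
    source.write(i, f"v1-block{i}")

clone = source.clone()              # no physical block is allocated here
blocks_after_clone = len(store.blocks)

clone.write(2, "clone-change")      # one new block, clone pointer redirected
source.write(0, "source-change")    # the source stays read/write too

print(blocks_after_clone, len(store.blocks))
print(source.read(2), clone.read(2))
print(source.read(0), clone.read(0))
```

In this model the source never has to be frozen as a read-only master: both volumes keep accepting writes, and space is consumed only for the blocks that actually diverge.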

Block storage services allow the creation of arbitrary-sized block volumes for use by numerous applications. Exascale block volumes are also used to store Exadata database server virtual machine images, enabling:

- creation of more virtual machines

- removal of local storage dependency inside the Exadata compute nodes

- seamless migration of virtual machines between different compute nodes

Finally, the following hardware and software considerations complete this brief presentation of Exascale:

- runs on 2-socket Oracle Exadata system hardware with RoCE Network Fabric (X8M-2 or later)

- Database 23ai release 23.5.0 or later is required for full-featured native Database file storage in Exascale

- Exascale block volumes support databases using older Database software releases back to Oracle Database 19c

- Exascale is built into Exadata System Software (since release 24.1)

Architecture

The core idea of the Exascale architecture is cloud-scale, multi-tenant resource pooling, for both storage and compute.

Storage pooling

Exascale is based on pooling storage servers which provide services such as Storage Pools, Vaults and Volume Management. Vaults, which can be considered an equivalent of ASM diskgroups, are directly accessed by the database kernel.

File and extent management is done by Exadata System Software, thus freeing the compute layer from the ASM processes and memory structures used for database file access and extent management (with Database 23ai or 26ai; for Database 19c, ASM is still required). With storage management moving to the storage servers, resource management becomes more flexible and more memory and CPU resources are available on compute nodes to process database tasks.

Exascale also provides redundancy, caching, file metadata management, snapshots and clones as well as security and data integrity features.

Since Exascale is built on top of Exadata, you also benefit from features like RDMA, RoCE, Smart Flash Cache, XRMEM, Smart Scan, Storage Indexes and Columnar Caching.

Compute pooling

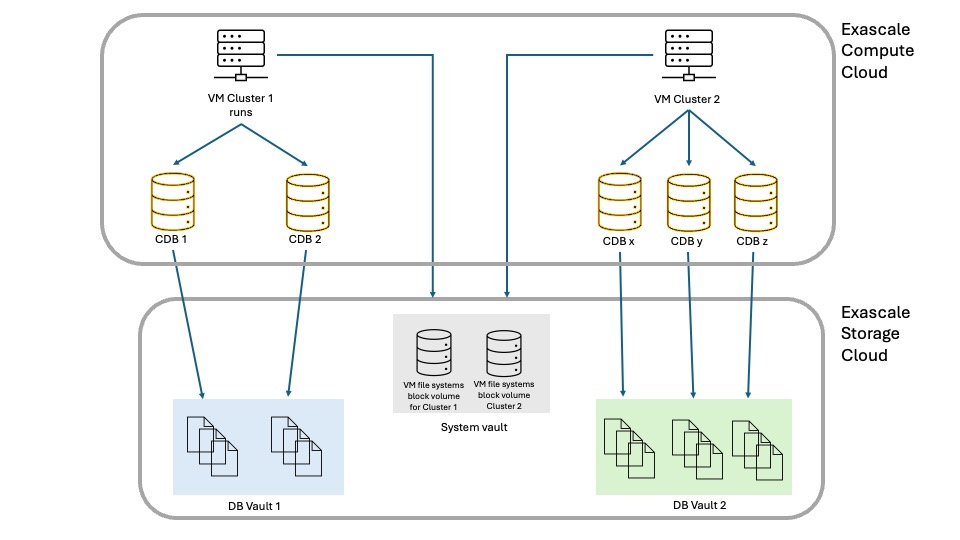

On the compute side, we have database-optimized servers which run Database 23ai or newer and Grid Infrastructure Cluster management software. The physical database servers host the VM Clusters and are managed by Oracle. Unlike Exadata on Dedicated Infrastructure, there is no need to provision an infrastructure before going ahead with VM Cluster creation and configuration: in Exascale, you only deal with VM Clusters.

VM file systems are centrally hosted by Oracle on RDMA-enabled block volumes in a system vault. VM images used by the VM Clusters are no longer hosted on local storage on the database servers. This raises the number of VMs that can run on the database servers from 12 to 50.

Each VM Cluster accesses a Database Vault storing its database files, with strict isolation from other VM Clusters' database files.

Virtual Cloud Network

Client and backup connectivity is provided by Virtual Cloud Network (VCN) services.

This loosely coupled, shared and multitenant architecture enables far greater flexibility than was possible with ASM or even Exadata on Dedicated Infrastructure. Exascale’s hyper-elasticity lets you start with very small VM Clusters and then scale as workloads grow, with Oracle managing the infrastructure automatically. You can start as small as 1 VM per cluster (up to 10), 8 eCPUs per VM (up to 200), 22GB of memory per VM, 220GB of file system storage per VM and 300GB of Vault storage per VM Cluster (up to 100TB). Memory is tightly coupled to the eCPU configuration at 2.75GB per eCPU and therefore does not scale independently of the eCPU count.
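The memory-to-eCPU coupling is simple arithmetic, and a small Python sketch makes it easy to check a planned configuration. The ratio and the limits are the ones quoted above. The function names are illustrative, and the sketch assumes that every VM in a cluster uses the same eCPU setting. Values other than the 22GB minimum are derived from the ratio and are not figures quoted from Oracle documentation.

```python
# Figures from the paragraph above; names are illustrative, not an Oracle API.
GB_PER_ECPU = 2.75
MIN_ECPUS_PER_VM, MAX_ECPUS_PER_VM = 8, 200
MIN_VMS_PER_CLUSTER, MAX_VMS_PER_CLUSTER = 1, 10


def vm_memory_gb(ecpus: int) -> float:
    """Memory a VM gets for a given eCPU count (it cannot be set separately)."""
    if not MIN_ECPUS_PER_VM <= ecpus <= MAX_ECPUS_PER_VM:
        raise ValueError(
            f"eCPUs per VM must be between {MIN_ECPUS_PER_VM} and {MAX_ECPUS_PER_VM}"
        )
    return ecpus * GB_PER_ECPU


def cluster_memory_gb(vms: int, ecpus_per_vm: int) -> float:
    """Total memory of a VM Cluster, assuming identical eCPU settings per VM."""
    if not MIN_VMS_PER_CLUSTER <= vms <= MAX_VMS_PER_CLUSTER:
        raise ValueError(
            f"VMs per cluster must be between {MIN_VMS_PER_CLUSTER} and {MAX_VMS_PER_CLUSTER}"
        )
    return vms * vm_memory_gb(ecpus_per_vm)


smallest_vm = vm_memory_gb(8)                 # 8 x 2.75 = 22.0 GB
largest_vm = vm_memory_gb(200)                # 200 x 2.75 = 550.0 GB
two_vm_cluster = cluster_memory_gb(2, 16)     # 2 x (16 x 2.75) = 88.0 GB
print(smallest_vm, largest_vm, two_vm_cluster)
```

The practical consequence is that a memory-hungry workload has to be sized through its eCPU count: needing more memory means paying for more eCPUs.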

For those new to eCPU, it is a standard billing metric based on the number of cores per hour elastically allocated from a pool of compute and storage servers. eCPUs are not tied to the make, model or clock speed of the underlying processor. By contrast, an OCPU is the equivalent of one physical core with hyper-threading enabled.

To summarize

The key characteristics of Exascale Infrastructure, this new flavor of Exadata Database Service, are:

- loose coupling of compute and storage

- hyper-elasticity

- shared and multi-tenant service model

- ASM-less (for 23ai or newer)

- Exadata performance, reliability, availability and security at any scale

- CI/CD friendly

- pay-per-use model

Stay tuned for more on Exascale …
