Recently I’ve switched my PostgreSQL playground from Linux to FreeBSD. Not because I don’t like Linux anymore, just because I like trying out other operating systems and see what works the same and what is different. When you install FreeBSD and stick to the defaults, you’ll get a ZFS root filesystem and usually I don’t create separate partitions/mounts in my playgrounds for PostgreSQL so it went there as well. While playing a bit I got the impression that the whole system was faster than my previous Linux playground so I though it would be good to do some tests on Linux to compare the performance of ext4 (which is the default on Debian) and ZFS. So here we go.

I’ve used a t3.medium instance and two 20GB gp3 disks for the tests, one of them using an ext4 filesystem and the other one for ZFS. The version of PostgreSQL is 17, the operating system is Debian 12.

As ZFS is not available by default in the Debian repositories (I am not going into the licensing stuff), there are some extra steps to follow which are described in the Debian Wiki. Because ZFS comes as a kernel module, the kernel sources need to be available before it can be installed. For the AWS AMI for Debian this is:

postgres@ip-10-0-1-159:/home/postgres/ [pg17] apt search kernel | grep headers | grep cloud-amd64

WARNING: apt does not have a stable CLI interface. Use with caution in scripts.

linux-headers-6.1.0-21-cloud-amd64/stable-security 6.1.90-1 amd64

linux-headers-6.1.0-22-cloud-amd64/stable 6.1.94-1 amd64

linux-headers-6.1.0-23-cloud-amd64/stable-security 6.1.99-1 amd64

linux-headers-6.1.0-25-cloud-amd64/stable 6.1.106-3 amd64

linux-headers-6.1.0-26-cloud-amd64/stable-security 6.1.112-1 amd64

linux-headers-6.10.11+bpo-cloud-amd64/stable-backports 6.10.11-1~bpo12+1 amd64

linux-headers-6.10.6+bpo-cloud-amd64/stable-backports 6.10.6-1~bpo12+1 amd64

linux-headers-6.9.10+bpo-cloud-amd64/stable-backports 6.9.10-1~bpo12+1 amd64

linux-headers-6.9.7+bpo-cloud-amd64/stable-backports 6.9.7-1~bpo12+1 amd64

linux-headers-cloud-amd64/stable-security 6.1.112-1 amd64

postgres@ip-10-0-1-159:/home/postgres/ [pg17] sudo apt install -y linux-headers-cloud-amd64

Once that is ready, the backports repository needs to be added and then ZFS can be installed:

postgres@ip-10-0-1-159:/home/postgres/ [pg17] cat /etc/apt/sources.list

# See /etc/apt/sources.list.d/debian.sources

deb http://deb.debian.org/debian bookworm-backports main contrib non-free

postgres@ip-10-0-1-159:/home/postgres/ [pg17] sudo apt update

postgres@ip-10-0-1-159:/home/postgres/ [pg17] sudo apt install -t stable-backports zfsutils-linux -y

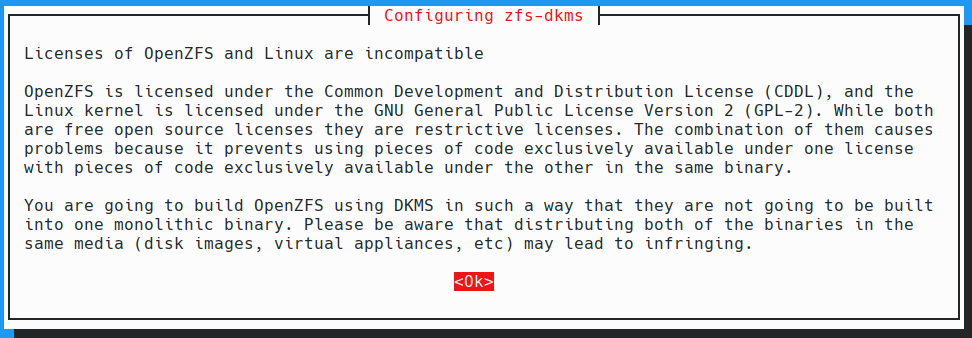

There will be a warning about the license incompatibilities, but as mentioned above I am not going into that topic:

The installation will take some time as the kernel modules will get build. Once it is done, the modules are available and can be loaded (happens automatically after a reboot):

...

Done.

zfs.ko:

Running module version sanity check.

- Original module

- No original module exists within this kernel

- Installation

- Installing to /lib/modules/6.1.0-26-cloud-amd64/updates/dkms/

spl.ko:

Running module version sanity check.

- Original module

- No original module exists within this kernel

- Installation

- Installing to /lib/modules/6.1.0-26-cloud-amd64/updates/dkms/

depmod...

Building initial module for 6.10.11+bpo-cloud-amd64

...

postgres@ip-10-0-1-159:/home/postgres/ [pg17] sudo modprobe zfs

postgres@ip-10-0-1-159:/home/postgres/ [pg17] sudo lsmod | grep zfs

zfs 5771264 0

spl 135168 1 zfs

As I’ve mentioned earlier, there are two disks I am going to use here:

postgres@ip-10-0-1-159:/home/postgres/ [pg17] lsblk | grep 20

nvme1n1 259:0 0 20G 0 disk

nvme2n1 259:1 0 20G 0 disk

The first one will be used for the ext4 file system:

postgres@ip-10-0-1-159:/home/postgres/ [pg17] sudo mkfs.ext4 /dev/nvme1n1

mke2fs 1.47.0 (5-Feb-2023)

Creating filesystem with 5242880 4k blocks and 1310720 inodes

Filesystem UUID: 8fb9e2b2-9b47-4963-8a2b-a6728a9bb16a

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000

Allocating group tables: done

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

postgres@ip-10-0-1-159:/home/postgres/ [pg17] mkdir -p /u02/pgdata/ext4

postgres@ip-10-0-1-159:/home/postgres/ [pg17] cat /etc/fstab | grep pgdata

/dev/nvme1n1 /u02/pgdata/ext4 ext4 noatime 0 0

postgres@ip-10-0-1-159:/home/postgres/ [pg17] sudo mount -a

postgres@ip-10-0-1-159:/home/postgres/ [pg17] sudo chown postgres:postgres /u02/pgdata/ext4

postgres@ip-10-0-1-159:/home/postgres/ [pg17] df -h | grep pgdata

/dev/nvme1n1 20G 24K 19G 1% /u02/pgdata/ext4

The second disk is for the ZFS file system:

postgres@ip-10-0-1-159:/home/postgres/ [pg17] sudo zpool create pgpool /dev/nvme2n1

postgres@ip-10-0-1-159:/home/postgres/ [pg17] sudo zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

pgpool 19.5G 130K 19.5G - - 0% 0% 1.00x ONLINE -

postgres@ip-10-0-1-159:/home/postgres/ [pg17] sudo zfs create pgpool/pgzfs

postgres@ip-10-0-1-159:/home/postgres/ [pg17] sudo zfs set mountpoint=/u02/pgdata/zfs pgpool/pgzfs

postgres@ip-10-0-1-159:/home/postgres/ [pg17] sudo zfs set compression=zstd pgpool/pgzfs

postgres@ip-10-0-1-159:/home/postgres/ [pg17] df -h | grep zfs

pgpool/pgzfs 19G 128K 19G 1% /u02/pgdata/zfs

postgres@ip-10-0-1-159:/home/postgres/ [pg17] sudo chown postgres:postgres /u02/pgdata/zfs

As you can see above I’ve enabled compression using zstd (lz4 is another option, as well as others).

For the ext4 filesystem I’ll initialize PostgreSQL as we do it usually, which means enabling data checksums (this will anyway be the default starting with PostgreSQL 18):

ostgres@ip-10-0-1-159:/home/postgres/ [pg17] initdb --pgdata=/u02/pgdata/ext4/pg --data-checksums

The files belonging to this database system will be owned by user "postgres".

This user must also own the server process.

The database cluster will be initialized with locale "C.UTF-8".

The default database encoding has accordingly been set to "UTF8".

The default text search configuration will be set to "english".

Data page checksums are enabled.

creating directory /u02/pgdata/ext4/pg ... ok

creating subdirectories ... ok

selecting dynamic shared memory implementation ... posix

selecting default "max_connections" ... 100

selecting default "shared_buffers" ... 128MB

selecting default time zone ... Etc/UTC

creating configuration files ... ok

running bootstrap script ... ok

performing post-bootstrap initialization ... ok

syncing data to disk ... ok

initdb: warning: enabling "trust" authentication for local connections

initdb: hint: You can change this by editing pg_hba.conf or using the option -A, or --auth-local and --auth-host, the next time you run initdb.

Success. You can now start the database server using:

pg_ctl -D /u02/pgdata/ext4/pg -l logfile start

For the ZFS filestem we don’t need this, as ZFS is doing this anyway:

postgres@ip-10-0-1-159:/home/postgres/ [pg17] initdb --pgdata=/u02/pgdata/zfs/pg

The files belonging to this database system will be owned by user "postgres".

This user must also own the server process.

The database cluster will be initialized with locale "C.UTF-8".

The default database encoding has accordingly been set to "UTF8".

The default text search configuration will be set to "english".

Data page checksums are disabled.

creating directory /u02/pgdata/zfs/pg … ok

creating subdirectories … ok

selecting dynamic shared memory implementation … posix

selecting default "max_connections" … 100

selecting default "shared_buffers" … 128MB

selecting default time zone … Etc/UTC

creating configuration files … ok

running bootstrap script … ok

performing post-bootstrap initialization … ok

syncing data to disk … ok

initdb: warning: enabling "trust" authentication for local connections

initdb: hint: You can change this by editing pg_hba.conf or using the option -A, or --auth-local and --auth-host, the next time you run initdb.

Success. You can now start the database server using:

pg_ctl -D /u02/pgdata/zfs/pg -l logfile start

The only other two bits I’ll change for the ZFS instance, is to turn off full_page_writes as torn writes do not happen on ZFS and to turn off wal_init_zero which is also not required on ZFS (and of course another port):

postgres@ip-10-0-1-159:/home/postgres/ [pg17] echo "wal_init_zero='off'" >> /u02/pgdata/zfs/pg/postgresql.auto.conf

postgres@ip-10-0-1-159:/home/postgres/ [pg17] echo "full_page_writes='off'" >> /u02/pgdata/zfs/pg/postgresql.auto.conf

postgres@ip-10-0-1-159:/home/postgres/ [pg17] echo "port=5433'" >> /u02/pgdata/zfs/pg/postgresql.auto.conf

Ready to start both clusters:

postgres@ip-10-0-1-159:/home/postgres/ [pg17] pg_ctl --pgdata=/u02/pgdata/ext4/pg/ --log=/u02/pgdata/ext4/pg/log.log start

waiting for server to start.... done

server started

postgres@ip-10-0-1-159:/home/postgres/ [pg17] pg_ctl --pgdata=/u02/pgdata/zfs/pg/ --log=/u02/pgdata/zfs/pg/log.log start

waiting for server to start.... done

server started

No we’re ready to start with the tests, and this is the topic for the next post.

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/DWE_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2025/11/LTO_WEB.jpg)