By Franck Pachot

.

The Oracle 12c Adaptive Plan feature was already presented by Nicolas Jardot in OOW 2013: Solving customer issues with the 12c Optimizer.

I recently had to answer several questions about its behavior at execution time. Maybe the term ‘adaptive’ is misleading. It’s not that a join will stop and restart to another join method. Even with adaptive plan there will only be one join method to be applied. The feature only defers a decision that was made at parse time in previous versions and that will now be made at execution time – after reading a few rows.

In order to show what happens exactly at execution time, I will reproduce the kind of exercise that we do in our training session Oracle 12c New Features workshop in this posting.

First I set the current schema to “HR” – the one that is delivered as a demo with Oracle.

SQL> alter session set current_schema=HR;

Session altered.Then I get the execution plan for a join between DEPARTMENTS and EMPLOYEES where SALARY>20000 and departement like ‘%ing’;

SQL> explain plan for

2 select distinct DEPARTMENT_NAME from DEPARTMENTS

3 join EMPLOYEES using(DEPARTMENT_ID)

4 where DEPARTMENT_NAME like '%ing' and SALARY>20000;

Explained.

I’ve chosen such a predicate because I know that a lot of department names are ending with ‘ing’ (Marketing, Purchasing, Shipping, Accounting, etc). But I know also that the optimizer cannot guess that and will underestimate then number of departements.

SQL> set linesize 150 pagesize 1000

SQL> select * from table( dbms_xplan.display );

PLAN_TABLE_OUTPUT

----------------------------------------------------------------------------------------------

Plan hash value: 3041748347

----------------------------------------------------------------------------------------------

| Id | Operation | Name |Rows | Bytes | Cost (%CPU)|

----------------------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 1 | 23 | 3 (0)|

| 1 | HASH UNIQUE | | 1 | 23 | 3 (0)|

| 2 | NESTED LOOPS SEMI | | 1 | 23 | 3 (0)|

|* 3 | TABLE ACCESS FULL | DEPARTMENTS | 1 | 16 | 2 (0)|

|* 4 | TABLE ACCESS BY INDEX ROWID BATCHED| EMPLOYEES | 1 | 7 | 1 (0)|

|* 5 | INDEX RANGE SCAN | EMP_DEP_IX | 10 | | 0 (0)|

----------------------------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

3 - filter("DEPARTMENTS"."DEPARTMENT_NAME" LIKE '%ing')

4 - filter("EMPLOYEES"."SALARY">20000)

5 - access("DEPARTMENTS"."DEPARTMENT_ID"="EMPLOYEES"."DEPARTMENT_ID")

Note

-----

- this is an adaptive plan NESTED LOOP was picked because of the low cardinality that is estimated (estimation is only one department with a specific suffix), but the plan is adaptive.

DBMS_XPLAN in 12c has a new ‘adaptive’ format that show the inactive operations:

SQL> select * from table( dbms_xplan.display(format=>'adaptive') );

PLAN_TABLE_OUTPUT

-------------------------------------------------------------------------------------------

Plan hash value: 3041748347

-------------------------------------------------------------------------------------------

| Id | Operation | Name | Rows| Bytes | Cost |

-------------------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 1 | 23 | 3|

| 1 | HASH UNIQUE | | 1 | 23 | 3|

|- * 2 | HASH JOIN SEMI | | 1 | 23 | 3|

| 3 | NESTED LOOPS SEMI | | 1 | 23 | 3|

|- 4 | STATISTICS COLLECTOR | | | | |

| * 5 | TABLE ACCESS FULL | DEPARTMENTS | 1 | 16 | 2|

| * 6 | TABLE ACCESS BY INDEX ROWID BATCHED| EMPLOYEES | 1 | 7 | 1|

| * 7 | INDEX RANGE SCAN | EMP_DEP_IX | 10 | | 0|

|- * 8 | TABLE ACCESS FULL | EMPLOYEES | 1 | 7 | 1|

-------------------------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

2 - access("DEPARTMENTS"."DEPARTMENT_ID"="EMPLOYEES"."DEPARTMENT_ID")

5 - filter("DEPARTMENTS"."DEPARTMENT_NAME" LIKE '%ing')

6 - filter("EMPLOYEES"."SALARY">20000)

7 - access("DEPARTMENTS"."DEPARTMENT_ID"="EMPLOYEES"."DEPARTMENT_ID")

8 - filter("EMPLOYEES"."SALARY">20000)

Note

-----

- this is an adaptive plan (rows marked '-' are inactive)Look at the STATISTICS COLLECTOR. It buffers the rows and it is able to switch to HASH JOIN when cardinality becomes higher than what was estimated.

That was only explain plan. Now I run it and gather execution statistics:

SQL> select /*+ gather_plan_statistics */ distinct DEPARTMENT_NAME

2 from DEPARTMENTS join EMPLOYEES using(DEPARTMENT_ID)

3 where DEPARTMENT_NAME like '%ing' and SALARY>20000;

no rows selected

SQL> select * from table( dbms_xplan.display_cursor(format=>'rowstats last adaptive') );

PLAN_TABLE_OUTPUT

---------------------------------------------------------------------------------------

SQL_ID gys8mb9n367gq, child number 0

-------------------------------------

select /*+ gather_plan_statistics */ distinct DEPARTMENT_NAME from

DEPARTMENTS join EMPLOYEES using(DEPARTMENT_ID) where DEPARTMENT_NAME

like '%ing' and SALARY>20000

Plan hash value: 1983137394

---------------------------------------------------------------------------------------

| Id | Operation | Name |Starts|E-Rows| A-Rows|

---------------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 1 | | 0 |

| 1 | HASH UNIQUE | | 1 | 1 | 0 |

| * 2 | HASH JOIN SEMI | | 1 | 1 | 0 |

|- 3 | NESTED LOOPS SEMI | | 1 | 1 | 7 |

|- 4 | STATISTICS COLLECTOR | | 1 | | 7 |

| * 5 | TABLE ACCESS FULL | DEPARTMENTS | 1 | 1 | 7 |

|- * 6 | TABLE ACCESS BY INDEX ROWID BATCHED| EMPLOYEES | 0 | 1 | 0 |

|- * 7 | INDEX RANGE SCAN | EMP_DEP_IX | 0 | 10 | 0 |

| * 8 | TABLE ACCESS FULL | EMPLOYEES | 1 | 1 | 1 |

---------------------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

2 - access("DEPARTMENTS"."DEPARTMENT_ID"="EMPLOYEES"."DEPARTMENT_ID")

5 - filter("DEPARTMENTS"."DEPARTMENT_NAME" LIKE '%ing')

6 - filter("EMPLOYEES"."SALARY">20000)

7 - access("DEPARTMENTS"."DEPARTMENT_ID"="EMPLOYEES"."DEPARTMENT_ID")

8 - filter("EMPLOYEES"."SALARY">20000)

Note

-----

- this is an adaptive plan (rows marked '-' are inactive)Here I can see that the hash join is now activated instead of the nested loop. The actual number of rows (A-Rows) is higher than the estimated (E-Rows). The nested loop operations have never occured (Starts=0).

The STATISTICS COLLECTOR has buffered the rows and decided to switch to hash join when it reached the inflection point that has been calculated at optimization time. Then the join is done with the buffered rows and with the remaing rows that will be read.

Let’s see how that inflection point was calculated.

I’ll dump CBO trace (also known as 10053 event):

SQL> alter session set tracefile_identifier='cbo_trace';

Session altered.

SQL> exec dbms_sqldiag.dump_trace(p_sql_id=>'gys8mb9n367gq',p_child_number=>0,p_component=>'Compiler',p_file_id=>'');

PL/SQL procedure successfully completed.

Now I am looking for dynamic plan lines which starts by DP:

SQL> host grep -hE "^DP|^AP" ../trace/DB1_ora_*cbo_trace.tr? | tail

AP: Searching for inflection point at value 33.35

DP: Costing Nested Loops Join for inflection point at card 17.35

DP: Costing Hash Join for inflection point at card 17.35

AP: lcost=8.93, rcost=4.02

AP: Searching for inflection point at value 17.35

DP: Costing Nested Loops Join for inflection point at card 9.35

DP: Costing Hash Join for inflection point at card 9.35

AP: lcost=5.67, rcost=4.02

AP: Searching for inflection point at value 9.35

DP: Costing Nested Loops Join for inflection point at card 5.35

DP: Costing Hash Join for inflection point at card 5.35

AP: lcost=4.04, rcost=4.02

AP: Searching for inflection point at value 5.35

DP: Costing Nested Loops Join for inflection point at card 3.35

DP: Costing Hash Join for inflection point at card 3.35

AP: lcost=3.22, rcost=4.02

AP: Searching for inflection point at value 3.35

DP: Costing Nested Loops Join for inflection point at card 4.35

DP: Costing Hash Join for inflection point at card 4.35

AP: lcost=3.63, rcost=4.02

DP: Costing Hash Join for inflection point at card 4.35

DP: Found point of inflection for NLJ vs. HJ: card = 4.35

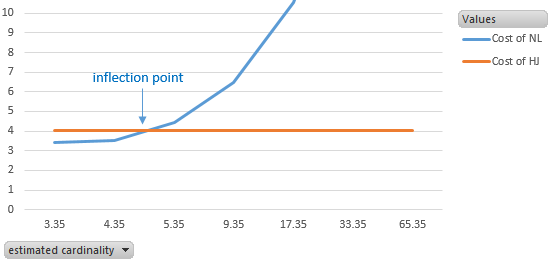

You can see how it was calculated: the CBO sets a cardinality and calculates the cost of a Nested Loop join and Hash join for this cardinality. I reproduced only the last lines here but in my example the costing has started at card 262144.00 which is a large power of two.

Then the CBO divides the cardinality by 2 and calculates the costs again. The point where the cost of one join is lower than the other one will be the inflection point. Here the inflection point is 4.35.

So, when rows coming from DEPARTMENTS are less than 4, a nested loop will be chosen (as in the first explain plan which estimates card=1) and if the actual number of rows is higher then a hash join will be used.

I’ve made a graph about all the costs calculated for each cardinality from the whole dump file. I’m showing only the lower part because the nested loop cost is quickly exponential:

The aim of adaptive plan is to avoid the zone where Nested Loop occur with high number of rows because of an underestimated cardinality.

Here, I have only small tables. If the table is bigger, then the horizontal line for Hash Join will be higher. If the clustering factor of the index is better, then the slope for Nested Loop line wil be lower. All that will determine how far the inflection point goes.

Note that when the actual number of rows is in the same ballpark as the inflection point, CBO has to make a choice anyway, but I consider in that case that none of the plans are optimal, both being probably too expensive. And that’s also bad for plan stability because a few additional rows will change the plan on a new parse (see Jonathan Lewis’ philosophy). When this is the case, I advise to check the index efficiency (selectivity and clustering factor) or see how to lower to full scan (partition, smart scan,…).

This adaptive plan behaviour only occurs at the first execution. The goal is not to be adaptive over time, but just to defer the optimization decision from parse time to execution time. It’s even better than dynamic sampling: the actual number of rows is used to make the decision.

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/12/oracle-square.png)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2025/05/JDE_Web-1-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2025/11/LTO_WEB.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2025/07/ALK_MIN.jpeg)